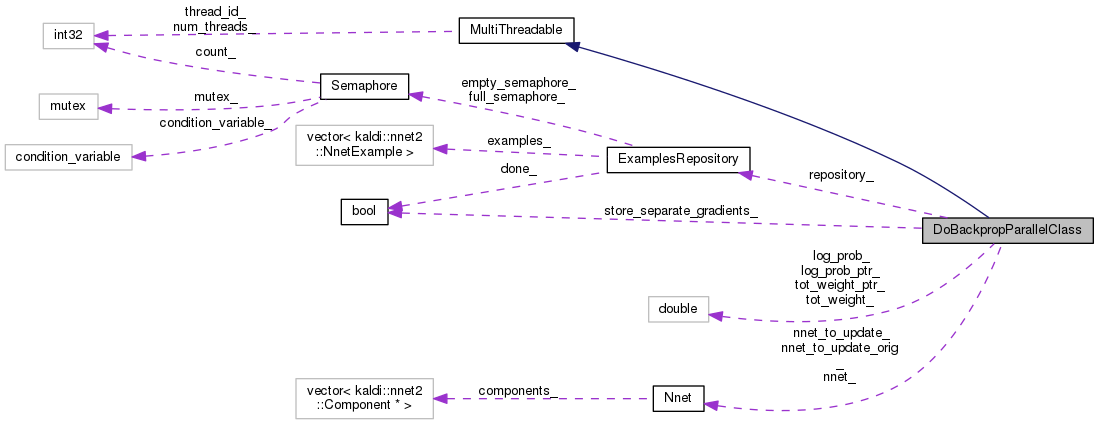

Public Member Functions | |

| DoBackpropParallelClass (const Nnet &nnet, ExamplesRepository *repository, double *tot_weight_ptr, double *log_prob_ptr, Nnet *nnet_to_update, bool store_separate_gradients) | |

| DoBackpropParallelClass (const DoBackpropParallelClass &other) | |

| void | operator() () |

| ~DoBackpropParallelClass () | |

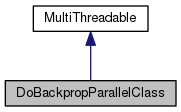

Public Member Functions inherited from MultiThreadable Public Member Functions inherited from MultiThreadable | |

| virtual | ~MultiThreadable () |

Private Attributes | |

| const Nnet & | nnet_ |

| ExamplesRepository * | repository_ |

| Nnet * | nnet_to_update_ |

| Nnet * | nnet_to_update_orig_ |

| bool | store_separate_gradients_ |

| double * | tot_weight_ptr_ |

| double * | log_prob_ptr_ |

| double | tot_weight_ |

| double | log_prob_ |

Additional Inherited Members | |

Public Attributes inherited from MultiThreadable Public Attributes inherited from MultiThreadable | |

| int32 | thread_id_ |

| int32 | num_threads_ |

Definition at line 29 of file nnet-update-parallel.cc.

|

inline |

Definition at line 33 of file nnet-update-parallel.cc.

|

inline |

Definition at line 50 of file nnet-update-parallel.cc.

References DoBackpropParallelClass::nnet_to_update_, Nnet::SetZero(), and DoBackpropParallelClass::store_separate_gradients_.

|

inline |

Definition at line 98 of file nnet-update-parallel.cc.

References Nnet::AddNnet(), DoBackpropParallelClass::log_prob_, DoBackpropParallelClass::log_prob_ptr_, DoBackpropParallelClass::nnet_to_update_, DoBackpropParallelClass::nnet_to_update_orig_, DoBackpropParallelClass::tot_weight_, and DoBackpropParallelClass::tot_weight_ptr_.

|

inlinevirtual |

Implements MultiThreadable.

Definition at line 79 of file nnet-update-parallel.cc.

References kaldi::nnet2::ComputeNnetObjf(), kaldi::nnet2::DoBackprop(), KALDI_VLOG, DoBackpropParallelClass::log_prob_, DoBackpropParallelClass::nnet_, DoBackpropParallelClass::nnet_to_update_, ExamplesRepository::ProvideExamples(), DoBackpropParallelClass::repository_, MultiThreadable::thread_id_, DoBackpropParallelClass::tot_weight_, and kaldi::nnet2::TotalNnetTrainingWeight().

|

private |

Definition at line 119 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::operator()(), and DoBackpropParallelClass::~DoBackpropParallelClass().

|

private |

Definition at line 117 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::~DoBackpropParallelClass().

|

private |

Definition at line 111 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::operator()().

|

private |

Definition at line 113 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::DoBackpropParallelClass(), DoBackpropParallelClass::operator()(), and DoBackpropParallelClass::~DoBackpropParallelClass().

|

private |

Definition at line 114 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::~DoBackpropParallelClass().

|

private |

Definition at line 112 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::operator()().

|

private |

Definition at line 115 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::DoBackpropParallelClass().

|

private |

Definition at line 118 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::operator()(), and DoBackpropParallelClass::~DoBackpropParallelClass().

|

private |

Definition at line 116 of file nnet-update-parallel.cc.

Referenced by DoBackpropParallelClass::~DoBackpropParallelClass().