#include <nnet-training.h>

Public Member Functions | |

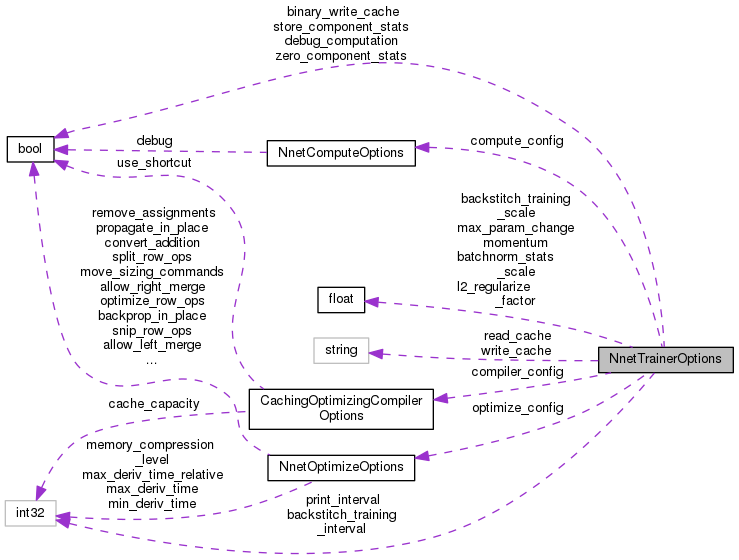

| NnetTrainerOptions () | |

| void | Register (OptionsItf *opts) |

Definition at line 34 of file nnet-training.h.

| NnetTrainerOptions () | inline |

Definition at line 51 of file nnet-training.h.

| void Register (OptionsItf *opts) | inline |

Definition at line 63 of file nnet-training.h.

References OptionsItf::Register(), NnetComputeOptions::Register(), NnetOptimizeOptions::Register(), and CachingOptimizingCompilerOptions::Register().

Referenced by main(), NnetChainTrainingOptions::Register(), and NnetDiscriminativeOptions::Register().
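The reference list for Register() suggests a delegation pattern: the struct registers its own fields with the options object and then forwards the same object to each nested config. The following is a minimal sketch of that pattern only. `OptionRegistrySketch`, `NestedConfigSketch`, and `TrainerOptionsSketch` are hypothetical stand-ins, not Kaldi types, and the option names and default values are illustrative placeholders rather than the ones in nnet-training.h.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for an options registry; NOT Kaldi's OptionsItf.
class OptionRegistrySketch {
 public:
  // Records a pointer to a float option under a command-line style name.
  void Register(const std::string &name, float *ptr, const std::string &doc) {
    assert(float_opts_.count(name) == 0 && "option registered twice");
    float_opts_[name] = ptr;
    docs_[name] = doc;
  }

  // Sets a previously registered option; returns false if the name is unknown.
  bool Set(const std::string &name, float value) {
    auto it = float_opts_.find(name);
    if (it == float_opts_.end()) return false;
    *(it->second) = value;
    return true;
  }

 private:
  std::map<std::string, float *> float_opts_;
  std::map<std::string, std::string> docs_;
};

// Hypothetical nested config, playing the role of a member such as
// compute_config. The field and its default are illustrative.
struct NestedConfigSketch {
  float some_threshold = 1.0f;

  void Register(OptionRegistrySketch *opts) {
    opts->Register("some-threshold", &some_threshold,
                   "Illustrative nested option");
  }
};

// Hypothetical top-level options struct. Defaults are placeholders.
struct TrainerOptionsSketch {
  float momentum = 0.0f;
  float max_param_change = 1.0f;
  NestedConfigSketch nested;

  void Register(OptionRegistrySketch *opts) {
    // Register this struct's own fields first ...
    opts->Register("momentum", &momentum, "Illustrative momentum option");
    opts->Register("max-param-change", &max_param_change,
                   "Illustrative parameter-change limit");
    // ... then delegate to the nested config with the same registry.
    nested.Register(opts);
  }
};
```

Because the registry stores pointers into the options struct, a value set through the registry is visible on the struct afterwards, which is why the options object must outlive argument parsing.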

| int32 backstitch_training_interval |

Definition at line 42 of file nnet-training.h.

Referenced by NnetChainTrainer::NnetChainTrainer(), NnetTrainer::NnetTrainer(), NnetChainTrainer::Train(), and NnetTrainer::Train().

| BaseFloat backstitch_training_scale |

Definition at line 41 of file nnet-training.h.

Referenced by NnetChainTrainer::Train(), NnetTrainer::Train(), NnetChainTrainer::TrainInternalBackstitch(), and NnetTrainer::TrainInternalBackstitch().

| BaseFloat batchnorm_stats_scale |

Definition at line 43 of file nnet-training.h.

Referenced by NnetChainTrainer::TrainInternal(), NnetTrainer::TrainInternal(), NnetChainTrainer::TrainInternalBackstitch(), and NnetTrainer::TrainInternalBackstitch().

| bool binary_write_cache |

Definition at line 46 of file nnet-training.h.

Referenced by NnetChainTrainer::~NnetChainTrainer(), NnetDiscriminativeTrainer::~NnetDiscriminativeTrainer(), and NnetTrainer::~NnetTrainer().

| CachingOptimizingCompilerOptions compiler_config |

Definition at line 50 of file nnet-training.h.

| NnetComputeOptions compute_config |

Definition at line 49 of file nnet-training.h.

Referenced by NnetDiscriminativeTrainer::Train(), NnetChainTrainer::TrainInternal(), NnetTrainer::TrainInternal(), NnetChainTrainer::TrainInternalBackstitch(), and NnetTrainer::TrainInternalBackstitch().

| bool debug_computation |

Definition at line 38 of file nnet-training.h.

| BaseFloat l2_regularize_factor |

Definition at line 40 of file nnet-training.h.

Referenced by NnetChainTrainer::TrainInternal(), NnetTrainer::TrainInternal(), NnetChainTrainer::TrainInternalBackstitch(), and NnetTrainer::TrainInternalBackstitch().

| BaseFloat max_param_change |

Definition at line 47 of file nnet-training.h.

Referenced by NnetChainTrainer::NnetChainTrainer(), NnetDiscriminativeTrainer::NnetDiscriminativeTrainer(), NnetTrainer::NnetTrainer(), NnetDiscriminativeTrainer::Train(), NnetChainTrainer::TrainInternal(), NnetTrainer::TrainInternal(), NnetChainTrainer::TrainInternalBackstitch(), and NnetTrainer::TrainInternalBackstitch().

| BaseFloat momentum |

Definition at line 39 of file nnet-training.h.

Referenced by NnetChainTrainer::NnetChainTrainer(), NnetDiscriminativeTrainer::NnetDiscriminativeTrainer(), NnetTrainer::NnetTrainer(), NnetChainTrainer::Train(), NnetDiscriminativeTrainer::Train(), NnetTrainer::Train(), NnetChainTrainer::TrainInternal(), and NnetTrainer::TrainInternal().

| NnetOptimizeOptions optimize_config |

Definition at line 48 of file nnet-training.h.

| int32 print_interval |

Definition at line 37 of file nnet-training.h.

Referenced by NnetChainTrainer::ProcessOutputs(), NnetDiscriminativeTrainer::ProcessOutputs(), and NnetTrainer::ProcessOutputs().

| std::string read_cache |

Definition at line 44 of file nnet-training.h.

Referenced by NnetChainTrainer::NnetChainTrainer(), NnetDiscriminativeTrainer::NnetDiscriminativeTrainer(), and NnetTrainer::NnetTrainer().

| bool store_component_stats |

Definition at line 36 of file nnet-training.h.

Referenced by NnetChainTrainer::Train(), NnetDiscriminativeTrainer::Train(), and NnetTrainer::Train().

| std::string write_cache |

Definition at line 45 of file nnet-training.h.

Referenced by NnetChainTrainer::~NnetChainTrainer(), NnetDiscriminativeTrainer::~NnetDiscriminativeTrainer(), and NnetTrainer::~NnetTrainer().

| bool zero_component_stats |

Definition at line 35 of file nnet-training.h.

Referenced by NnetChainTrainer::NnetChainTrainer(), NnetDiscriminativeTrainer::NnetDiscriminativeTrainer(), and NnetTrainer::NnetTrainer().
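The cross-references above show read_cache being used only in trainer constructors, and write_cache and binary_write_cache only in trainer destructors. That points to a load-on-construct, save-on-destroy lifecycle for the cache. The sketch below illustrates that lifecycle under stated assumptions: `CacheOptionsSketch` and `TrainerSketch` are hypothetical, the cache is modeled as a plain string, and the file handling is ordinary standard-library I/O rather than Kaldi's own I/O routines.

```cpp
#include <fstream>
#include <iterator>
#include <string>

// Hypothetical subset of the options, mirroring the three cache fields.
struct CacheOptionsSketch {
  std::string read_cache;          // empty means "do not read a cache"
  std::string write_cache;         // empty means "do not write a cache"
  bool binary_write_cache = true;  // placeholder default for this sketch
};

// Hypothetical trainer that owns a cache for its whole lifetime.
class TrainerSketch {
 public:
  // Loads the cache, if a path was given and the file can be opened.
  explicit TrainerSketch(const CacheOptionsSketch &opts) : opts_(opts) {
    if (opts_.read_cache.empty()) return;
    std::ifstream in(opts_.read_cache, std::ios::binary);
    if (in) {
      cache_.assign(std::istreambuf_iterator<char>(in),
                    std::istreambuf_iterator<char>());
    }
  }

  // Saves the cache on destruction, if a path was given.
  ~TrainerSketch() {
    if (opts_.write_cache.empty()) return;
    std::ios::openmode mode = std::ios::out;
    if (opts_.binary_write_cache) mode |= std::ios::binary;
    std::ofstream out(opts_.write_cache, mode);
    out << cache_;
  }

  void set_cache(const std::string &payload) { cache_ = payload; }
  const std::string &cache() const { return cache_; }

 private:
  CacheOptionsSketch opts_;
  std::string cache_;
};
```

With this shape, one run can point write_cache at a file and a later run can point read_cache at the same file to start from the saved state.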