    using namespace kaldi;

    typedef kaldi::int64 int64;

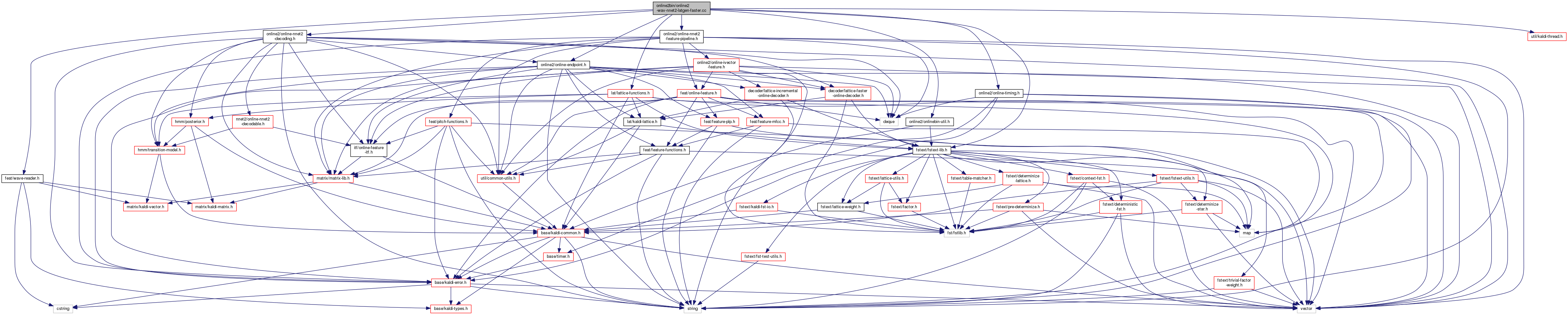

        "Reads in wav file(s) and simulates online decoding with neural nets\n"
        "(nnet2 setup), with optional iVector-based speaker adaptation and\n"
        "optional endpointing. Note: some configuration values and inputs are\n"
        "set via config files whose filenames are passed as options\n"

        "Usage: online2-wav-nnet2-latgen-faster [options] <nnet2-in> <fst-in> "
        "<spk2utt-rspecifier> <wav-rspecifier> <lattice-wspecifier>\n"
        "The spk2utt-rspecifier can just be <utterance-id> <utterance-id> if\n"
        "you want to decode utterance by utterance.\n"
        "See egs/rm/s5/local/run_online_decoding_nnet2.sh for example\n"
        "See also online2-wav-nnet2-latgen-threaded\n";
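The usage text says the spk2utt-rspecifier can map each utterance to itself for per-utterance decoding. In Kaldi's text form, each spk2utt line is a speaker id followed by that speaker's utterance ids. The sketch below parses one such line in plain C++; `ParseSpk2uttLine` is a hypothetical helper for illustration, not a Kaldi function (the tool itself reads this table through Kaldi's table readers).

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper (not a Kaldi API): splits one spk2utt text line of the
// form "<speaker-id> <utt-id-1> <utt-id-2> ..." into the speaker id and the
// list of utterance ids. Returns false if the line lists no utterances.
bool ParseSpk2uttLine(const std::string &line, std::string *spk,
                      std::vector<std::string> *utts) {
  std::istringstream is(line);
  utts->clear();
  if (!(is >> *spk)) return false;  // empty line: no speaker id
  std::string utt;
  while (is >> utt) utts->push_back(utt);
  return !utts->empty();
}
```

For utterance-by-utterance decoding, a line such as `utt7 utt7` makes each utterance its own "speaker", so no adaptation state is shared between utterances.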

    std::string word_syms_rxfilename;

    bool do_endpointing = false;

    po.Register("chunk-length", &chunk_length_secs,
                "Length of chunk size in seconds, that we process. Set to <= 0 "
                "to use all input in one chunk.");
    po.Register("word-symbol-table", &word_syms_rxfilename,
                "Symbol table for words [for debug output]");
    po.Register("do-endpointing", &do_endpointing,
                "If true, apply endpoint detection");
    po.Register("online", &online,
                "You can set this to false to disable online iVector estimation "
                "and have all the data for each utterance used, even at "
                "utterance start. This is useful where you just want the best "
                "results and don't care about online operation. Setting this to "
                "false has the same effect as setting "
                "--use-most-recent-ivector=true and --greedy-ivector-extractor=true "
                "in the file given to --ivector-extraction-config, and "
                "--chunk-length=-1.");

                "Number of threads used when initializing iVector extractor.");

    nnet2_decoding_config.Register(&po);

    if (po.NumArgs() != 5) {

    std::string nnet2_rxfilename = po.GetArg(1),
        fst_rxfilename = po.GetArg(2),
        spk2utt_rspecifier = po.GetArg(3),
        wav_rspecifier = po.GetArg(4),
        clat_wspecifier = po.GetArg(5);
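The last three positional arguments are Kaldi table specifiers such as `scp:wav.scp` or `ark,s,cs:-`: comma-separated type and option tokens, a colon, then a filename (`-` for standard input or output). The following simplified sketch splits a specifier into those parts; `TableSpec` and `SplitTableSpec` are hypothetical names, and Kaldi's real parser accepts more forms and validates the options.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical, simplified view of a Kaldi-style table specifier.
struct TableSpec {
  std::vector<std::string> tokens;  // e.g. {"ark", "s", "cs"}
  std::string filename;             // e.g. "feats.ark", or "-" for stdin/stdout
};

// Splits "<tok>,<tok>,...:<filename>" at the first ':'. Returns false if there
// is no type prefix or no filename. Option validation is deliberately omitted.
bool SplitTableSpec(const std::string &spec, TableSpec *out) {
  std::string::size_type colon = spec.find(':');
  if (colon == std::string::npos || colon == 0) return false;
  out->tokens.clear();
  std::istringstream prefix(spec.substr(0, colon));
  std::string tok;
  while (std::getline(prefix, tok, ','))  // comma-separated type and options
    out->tokens.push_back(tok);
  out->filename = spec.substr(colon + 1);
  return !out->filename.empty();
}
```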

      feature_info.ivector_extractor_info.use_most_recent_ivector = true;
      feature_info.ivector_extractor_info.greedy_ivector_extractor = true;
      chunk_length_secs = -1.0;
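When `--online=false`, the three assignments above make iVector estimation use each utterance's full data and disable chunking. A minimal sketch of that override on a hypothetical struct follows; the field names mirror the listing, but the struct and its default values are placeholders, not Kaldi types.

```cpp
#include <cassert>

// Hypothetical mirror of the three settings that --online=false overrides.
// Default values are placeholders for illustration only.
struct OfflineOverridable {
  bool use_most_recent_ivector = false;
  bool greedy_ivector_extractor = false;
  float chunk_length_secs = 0.05f;
};

// With online == false, the iVector may use all of the utterance's data and the
// whole utterance is processed as a single chunk.
void ApplyOnlineFlag(bool online, OfflineOverridable *cfg) {
  if (online) return;  // keep whatever the config files and flags set
  cfg->use_most_recent_ivector = true;
  cfg->greedy_ivector_extractor = true;
  cfg->chunk_length_secs = -1.0f;  // <= 0 means "all input in one chunk"
}
```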

    if (feature_info.global_cmvn_stats_rxfilename != "")

      Input ki(nnet2_rxfilename, &binary);
      trans_model.Read(ki.Stream(), binary);
      nnet.Read(ki.Stream(), binary);

    fst::SymbolTable *word_syms = NULL;
    if (word_syms_rxfilename != "")
      if (!(word_syms = fst::SymbolTable::ReadText(word_syms_rxfilename)))
        KALDI_ERR << "Could not read symbol table from file "
                  << word_syms_rxfilename;

    int32 num_done = 0, num_err = 0;
    double tot_like = 0.0;
    int64 num_frames = 0;
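The optional `--word-symbol-table` is a text file such as `words.txt` with one `<symbol> <integer-id>` pair per line, read above with `fst::SymbolTable::ReadText` and used only for debug output. As a rough illustration of that format, this hypothetical reader builds an id-to-word map from a stream; it is not how OpenFst implements `ReadText`.

```cpp
#include <cassert>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Hypothetical reader for a text symbol table: one "<symbol> <integer-id>"
// pair per line. Builds an id -> word map for printing decoded word sequences.
// Returns false on a line that has a symbol but no integer id.
bool ReadWordSymbols(std::istream &is, std::map<int, std::string> *id2word) {
  std::string line;
  while (std::getline(is, line)) {
    std::istringstream fields(line);
    std::string word;
    int id;
    if (!(fields >> word)) continue;    // skip blank lines
    if (!(fields >> id)) return false;  // malformed line
    (*id2word)[id] = word;
  }
  return true;
}
```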

    for (; !spk2utt_reader.Done(); spk2utt_reader.Next()) {
      std::string spk = spk2utt_reader.Key();
      const std::vector<std::string> &uttlist = spk2utt_reader.Value();

          feature_info.ivector_extractor_info);

      for (size_t i = 0; i < uttlist.size(); i++) {
        std::string utt = uttlist[i];
        if (!wav_reader.HasKey(utt)) {
          KALDI_WARN << "Did not find audio for utterance " << utt;

        const WaveData &wave_data = wav_reader.Value(utt);

        feature_pipeline.SetAdaptationState(adaptation_state);
        feature_pipeline.SetCmvnState(cmvn_state);

            feature_info.silence_weighting_config);

        if (chunk_length_secs > 0) {
          chunk_length = int32(samp_freq * chunk_length_secs);
          if (chunk_length == 0) chunk_length = 1;

          chunk_length = std::numeric_limits<int32>::max();
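The chunk length in samples is `int32(samp_freq * chunk_length_secs)`, clamped to at least one sample, and a non-positive `--chunk-length` means the whole utterance is one chunk. Here is the same computation as a standalone function, with `int32_t` standing in for Kaldi's `int32` (`ChunkLengthInSamples` is a hypothetical name):

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Converts a chunk length in seconds to samples. Never returns 0; a
// non-positive length selects "process the whole utterance in one chunk".
int32_t ChunkLengthInSamples(float samp_freq, float chunk_length_secs) {
  if (chunk_length_secs > 0) {
    int32_t chunk_length = static_cast<int32_t>(samp_freq * chunk_length_secs);
    return chunk_length == 0 ? 1 : chunk_length;  // very short chunks round up to 1
  }
  return std::numeric_limits<int32_t>::max();
}
```

For example, 16 kHz audio with 0.05 s chunks gives 800-sample chunks.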

        int32 samp_offset = 0;
        std::vector<std::pair<int32, BaseFloat> > delta_weights;

        while (samp_offset < data.Dim()) {
          int32 samp_remaining = data.Dim() - samp_offset;
          int32 num_samp = chunk_length < samp_remaining ? chunk_length

          feature_pipeline.AcceptWaveform(samp_freq, wave_part);

          samp_offset += num_samp;
          decoding_timer.WaitUntil(samp_offset / samp_freq);
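The loop above hands the waveform to the feature pipeline in pieces of at most `chunk_length` samples and calls `decoding_timer.WaitUntil(samp_offset / samp_freq)` so that decoding does not run ahead of simulated real time. This sketch reproduces only that bookkeeping, with feature extraction and decoding omitted; `ChunkPlan` and `PlanChunks` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical record of how an utterance would be fed to the pipeline.
struct ChunkPlan {
  std::vector<int32_t> sizes;      // samples passed on each AcceptWaveform-like call
  std::vector<double> wait_until;  // seconds of audio consumed after each chunk
};

// Walks through num_samples samples in pieces of at most chunk_length.
ChunkPlan PlanChunks(int32_t num_samples, int32_t chunk_length, double samp_freq) {
  ChunkPlan plan;
  int32_t samp_offset = 0;
  while (samp_offset < num_samples) {
    int32_t samp_remaining = num_samples - samp_offset;
    int32_t num_samp = std::min(chunk_length, samp_remaining);  // last chunk may be short
    plan.sizes.push_back(num_samp);
    samp_offset += num_samp;
    plan.wait_until.push_back(samp_offset / samp_freq);  // simulated audio time
  }
  return plan;
}
```

With 2000 samples at 16 kHz and 800-sample chunks, the pieces are 800, 800 and 400 samples.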

          if (samp_offset == data.Dim()) {

            feature_pipeline.InputFinished();

          if (silence_weighting.Active() &&
              feature_pipeline.IvectorFeature() != NULL) {
            silence_weighting.ComputeCurrentTraceback(decoder.Decoder());
            silence_weighting.GetDeltaWeights(
                feature_pipeline.IvectorFeature()->NumFramesReady(),

            feature_pipeline.IvectorFeature()->UpdateFrameWeights(

          decoder.AdvanceDecoding();
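When silence weighting is active, the decoder's current traceback is used to compute `delta_weights`, pairs of (frame index, change in weight), which are passed to the iVector extractor through `UpdateFrameWeights`. Sending changes rather than absolute weights lets earlier frames be revised as the traceback changes. The sketch below only illustrates applying such deltas to a weight vector; `ApplyDeltaWeights` is hypothetical, and starting unseen frames at weight 0 is an assumption of this sketch, not a statement about Kaldi's internals.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Illustrative only: applies incremental (frame index, weight change) pairs.
// Frames not seen before are assumed (by this sketch) to start at weight 0.
void ApplyDeltaWeights(const std::vector<std::pair<int, float> > &delta_weights,
                       std::vector<float> *frame_weights) {
  for (const auto &dw : delta_weights) {
    if (dw.first < 0) continue;  // ignore invalid frame indices
    if (dw.first >= static_cast<int>(frame_weights->size()))
      frame_weights->resize(dw.first + 1, 0.0f);
    (*frame_weights)[dw.first] += dw.second;
  }
}
```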

          if (do_endpointing && decoder.EndpointDetected(endpoint_config))

        decoder.FinalizeDecoding();
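With `--do-endpointing=true`, `decoder.EndpointDetected(endpoint_config)` decides whether to stop consuming audio early. Kaldi's endpoint configuration is a set of rules, each combining a minimum trailing-silence length, a bound on the relative cost of reaching a final state, a minimum utterance length, and whether the utterance must contain non-silence; an endpoint is declared if any rule fires. This sketch of a single rule check is modeled on that structure; `EndpointRule` and `RuleActivated` here are illustrative, and the thresholds in the test are not Kaldi's defaults.

```cpp
#include <cassert>

// One endpointing rule, modeled on the structure described above.
struct EndpointRule {
  bool must_contain_nonsilence;
  float min_trailing_silence;  // seconds of trailing silence required
  float max_relative_cost;     // upper bound on the relative cost of a final state
  float min_utterance_length;  // seconds decoded so far
};

// A rule fires only when all of its conditions hold.
bool RuleActivated(const EndpointRule &rule, bool contains_nonsilence,
                   float trailing_silence, float relative_cost,
                   float utterance_length) {
  return (contains_nonsilence || !rule.must_contain_nonsilence) &&
         trailing_silence >= rule.min_trailing_silence &&
         relative_cost <= rule.max_relative_cost &&
         utterance_length >= rule.min_utterance_length;
}
```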

        bool end_of_utterance = true;
        decoder.GetLattice(end_of_utterance, &clat);

                                     &num_frames, &tot_like);

        decoding_timer.OutputStats(&timing_stats);

        feature_pipeline.GetAdaptationState(&adaptation_state);
        feature_pipeline.GetCmvnState(&cmvn_state);
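Adaptation state is carried across the utterances of one speaker: the listing constructs the adaptation state inside the per-speaker loop, sets it on each utterance's feature pipeline with `SetAdaptationState` and `SetCmvnState`, and reads it back with `GetAdaptationState` and `GetCmvnState` after decoding. A toy version of that pattern follows, where the hypothetical `SpeakerState` is just a running frame count standing in for the iVector and CMVN state.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for per-speaker adaptation state.
struct SpeakerState {
  int frames_seen = 0;
};

// For one speaker's utterances (given as frame counts), returns how many frames
// of that speaker had already been seen when each utterance started.
std::vector<int> FramesSeenAtStart(const std::vector<int> &utt_frames) {
  SpeakerState state;  // fresh state once per speaker
  std::vector<int> at_start;
  for (int n : utt_frames) {
    SpeakerState pipeline_state = state;  // like SetAdaptationState(state)
    at_start.push_back(pipeline_state.frames_seen);
    pipeline_state.frames_seen += n;      // decoding updates the pipeline's copy
    state = pipeline_state;               // like GetAdaptationState(&state)
  }
  return at_start;
}
```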

        clat_writer.Write(utt, clat);
        KALDI_LOG << "Decoded utterance " << utt;

    timing_stats.Print(online);
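`timing_stats.Print(online)` summarizes how fast decoding ran relative to the audio. A common summary is the real-time factor, processing time divided by audio duration, where values below 1 mean the decoder kept up with the input. This sketch uses that generic definition; `RealTimeFactor` is hypothetical and is not necessarily the exact statistic `OnlineTimingStats::Print` reports.

```cpp
#include <cassert>

// Generic real-time factor: wall-clock processing time / audio duration.
// Returns 0 for empty audio to avoid dividing by zero.
double RealTimeFactor(double processing_secs, double num_samples, double samp_freq) {
  double audio_secs = num_samples / samp_freq;
  return audio_secs > 0 ? processing_secs / audio_secs : 0.0;
}
```

For example, 2 seconds of processing for 4 seconds of 16 kHz audio gives a real-time factor of 0.5.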

    KALDI_LOG << "Decoded " << num_done << " utterances, "
              << num_err << " with errors.";
    KALDI_LOG << "Overall likelihood per frame was " << (tot_like / num_frames)
              << " per frame over " << num_frames << " frames.";

    return (num_done != 0 ? 0 : 1);
  } catch(const std::exception& e) {
    std::cerr << e.what();
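The final log line divides `tot_like` by `num_frames`, both accumulated per utterance through the `&num_frames, &tot_like` arguments above. If no frames were decoded, that expression is 0.0 / 0 and yields NaN under IEEE floating point. A sketch of the same statistic with a guard; `UttStats` and `LikelihoodPerFrame` are hypothetical:

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical per-utterance totals: log-likelihood and frame count.
struct UttStats {
  double like;
  int64_t frames;
};

// Total log-likelihood over all utterances divided by the total frame count,
// returning 0 instead of NaN when nothing was decoded.
double LikelihoodPerFrame(const std::vector<UttStats> &utts) {
  double tot_like = 0.0;
  int64_t num_frames = 0;
  for (const UttStats &u : utts) {
    tot_like += u.like;
    num_frames += u.frames;
  }
  return num_frames > 0 ? tot_like / num_frames : 0.0;
}
```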
