This header contains a simple facility for endpointing that should be used in conjunction with the "online2" online decoding code; see ../online2bin/online2-wav-gmm-latgen-faster-endpoint.cc.

#include <online-endpoint.h>

| Public Member Functions | |
| --- | --- |
| | OnlineEndpointRule (bool must_contain_nonsilence=true, BaseFloat min_trailing_silence=1.0, BaseFloat max_relative_cost=std::numeric_limits< BaseFloat >::infinity(), BaseFloat min_utterance_length=0.0) |
| void | Register (OptionsItf *opts) |
| void | RegisterWithPrefix (const std::string &prefix, OptionsItf *opts) |

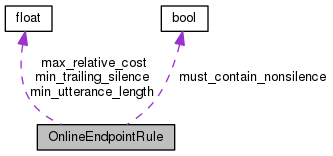

| Public Attributes | |
| --- | --- |
| bool | must_contain_nonsilence |
| BaseFloat | min_trailing_silence |
| BaseFloat | max_relative_cost |
| BaseFloat | min_utterance_length |

This header contains a simple facility for endpointing that should be used in conjunction with the "online2" online decoding code; see ../online2bin/online2-wav-gmm-latgen-faster-endpoint.cc.

By endpointing in this context we mean "deciding when to stop decoding", and not generic speech/silence segmentation. The use-case that we have in mind is some kind of dialog system where, as more speech data comes in, we decode more and more, and we have to decide when to stop decoding.

The endpointing rule is a disjunction of conjunctions. The way we have it configured, it's an OR of five rules, and each rule has the following form:

(<contains-nonsilence> || !rule.must_contain_nonsilence) && <length-of-trailing-silence> >= rule.min_trailing_silence && <relative-cost> <= rule.max_relative_cost && <utterance-length> >= rule.min_utterance_length

where: <contains-nonsilence> is true if the best traceback contains any nonsilence phone; <length-of-trailing-silence> is the length in seconds of the run of silence phones at the end of the best traceback (we stop counting when we hit non-silence); <relative-cost> is a value >= 0 extracted from the decoder that is zero if a final-state of the grammar FST had the best cost at the final frame, infinity if no final-state was active, and > 0 for in-between cases; and <utterance-length> is the number of seconds of the utterance that we have decoded so far.
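The rule form above can be sketched in a few lines. This is a minimal illustration, not the library's implementation: `RuleSketch`, `DecoderStats`, `RuleFires` and `EndpointDetected` are hypothetical names, `float` stands in for BaseFloat, and the four statistics are assumed to have been computed already. Only the field names and default values are taken from the constructor signature documented on this page.

```cpp
#include <cassert>
#include <limits>
#include <vector>

// Hypothetical stand-in for OnlineEndpointRule. Field names and defaults
// mirror the documented constructor; float is assumed for BaseFloat.
struct RuleSketch {
  bool must_contain_nonsilence = true;
  float min_trailing_silence = 1.0f;  // seconds
  float max_relative_cost = std::numeric_limits<float>::infinity();
  float min_utterance_length = 0.0f;  // seconds
};

// Hypothetical bundle of the four quantities named in the rule form.
struct DecoderStats {
  bool contains_nonsilence;  // best traceback has a nonsilence phone
  float trailing_silence;    // seconds of silence at the end
  float relative_cost;       // >= 0, may be infinity
  float utterance_length;    // seconds decoded so far
};

// One rule is a conjunction: every condition must hold for it to fire.
inline bool RuleFires(const RuleSketch &rule, const DecoderStats &s) {
  assert(s.trailing_silence >= 0.0f && s.relative_cost >= 0.0f);
  return (s.contains_nonsilence || !rule.must_contain_nonsilence) &&
         s.trailing_silence >= rule.min_trailing_silence &&
         s.relative_cost <= rule.max_relative_cost &&
         s.utterance_length >= rule.min_utterance_length;
}

// The endpointing decision is a disjunction: stop if any rule fires.
inline bool EndpointDetected(const std::vector<RuleSketch> &rules,
                             const DecoderStats &s) {
  for (const RuleSketch &rule : rules) {
    if (RuleFires(rule, s)) return true;
  }
  return false;
}
```

With the default values, a rule fires once some nonsilence has been decoded and at least one second of trailing silence has accumulated, regardless of relative cost or utterance length. An empty rule list never signals an endpoint.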

All of these pieces of information are obtained from the best-path traceback from the decoder, which is output by the function GetBestPath(). We do this every time we're finished processing a chunk of data. [ Note: we're changing the decoders so that GetBestPath() is efficient and does not require operations on the entire lattice. ]
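As an illustration of how the trailing-silence and utterance-length values could be derived from a best path, here is a minimal sketch. It assumes the traceback has already been converted to one phone id per frame and that the set of silence phone ids and the frame shift are known. `TrailingSilenceSeconds` and `UtteranceSeconds` are hypothetical helpers, not functions from the library, and the real code may work on a different representation of the best path.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

// Counts the run of silence frames at the end of a per-frame phone
// sequence and converts it to seconds. Counting stops at the first
// nonsilence phone encountered when walking backwards.
inline float TrailingSilenceSeconds(const std::vector<int> &phones_per_frame,
                                    const std::set<int> &silence_phones,
                                    float frame_shift_seconds) {
  assert(frame_shift_seconds > 0.0f);
  std::size_t num_silence_frames = 0;
  for (auto it = phones_per_frame.rbegin(); it != phones_per_frame.rend();
       ++it) {
    if (silence_phones.count(*it) == 0) break;  // hit nonsilence: stop
    ++num_silence_frames;
  }
  return static_cast<float>(num_silence_frames) * frame_shift_seconds;
}

// Length in seconds of everything decoded so far.
inline float UtteranceSeconds(const std::vector<int> &phones_per_frame,
                              float frame_shift_seconds) {
  assert(frame_shift_seconds > 0.0f);
  return static_cast<float>(phones_per_frame.size()) * frame_shift_seconds;
}
```

Under these assumptions, the best path contains nonsilence exactly when the trailing silence is shorter than the utterance, so a separate scan for nonsilence phones is not needed.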

For details of the default rules, see struct OnlineEndpointConfig.

It's up to the caller whether to use final-probs or not when generating the best-path, i.e. whether to call decoder.GetBestPath(&lat, (true or false)), but my recommendation is not to use them. If you do use them, then depending on the grammar, you may force the best-path to decode non-silence even though that was not what it really preferred to decode.

Definition at line 88 of file online-endpoint.h.

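| OnlineEndpointRule (bool must_contain_nonsilence=true, BaseFloat min_trailing_silence=1.0, BaseFloat max_relative_cost=std::numeric_limits< BaseFloat >::infinity(), BaseFloat min_utterance_length=0.0) | inline |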

Definition at line 94 of file online-endpoint.h.

| void Register (OptionsItf *opts) | inline |

Definition at line 103 of file online-endpoint.h.

References OptionsItf::Register().

Referenced by OnlineEndpointRule::RegisterWithPrefix().

| void RegisterWithPrefix (const std::string &prefix, OptionsItf *opts) | inline |

Definition at line 121 of file online-endpoint.h.

References OnlineEndpointRule::Register().

Referenced by OnlineEndpointConfig::Register().
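The call relationship listed above (RegisterWithPrefix() delegating to Register(), so that several rules can share one options object under different names) can be sketched as follows. Everything here is hypothetical: `FloatOptionRegistry`, `MapRegistry` and `PrefixedRegistry` are stand-ins rather than the real OptionsItf, the option names are illustrative, and joining prefix and name with "." is an assumption.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical, float-only stand-in for an options interface.
class FloatOptionRegistry {
 public:
  virtual ~FloatOptionRegistry() {}
  virtual void Register(const std::string &name, float *value) = 0;
};

// Stores registered options by name so a caller can set them later.
class MapRegistry : public FloatOptionRegistry {
 public:
  void Register(const std::string &name, float *value) override {
    options[name] = value;
  }
  std::map<std::string, float *> options;
};

// Forwards every registration to another registry with "<prefix>." added.
class PrefixedRegistry : public FloatOptionRegistry {
 public:
  PrefixedRegistry(const std::string &prefix, FloatOptionRegistry *inner)
      : prefix_(prefix), inner_(inner) {}
  void Register(const std::string &name, float *value) override {
    inner_->Register(prefix_ + "." + name, value);
  }

 private:
  std::string prefix_;
  FloatOptionRegistry *inner_;
};

// Hypothetical rule with two of the documented fields.
struct RuleOptionsSketch {
  float min_trailing_silence = 1.0f;
  float min_utterance_length = 0.0f;

  void Register(FloatOptionRegistry *opts) {
    opts->Register("min-trailing-silence", &min_trailing_silence);
    opts->Register("min-utterance-length", &min_utterance_length);
  }

  // Reuses Register() through a wrapper that renames each option.
  void RegisterWithPrefix(const std::string &prefix,
                          FloatOptionRegistry *opts) {
    PrefixedRegistry prefixed(prefix, opts);
    Register(&prefixed);
  }
};
```

In this sketch, registering two rule objects with prefixes such as "rule1" and "rule2" yields distinct option names for each, while the list of options is written only once in Register().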

| BaseFloat max_relative_cost |

Definition at line 91 of file online-endpoint.h.

Referenced by kaldi::RuleActivated().

| BaseFloat min_trailing_silence |

Definition at line 90 of file online-endpoint.h.

Referenced by kaldi::RuleActivated().

| BaseFloat min_utterance_length |

Definition at line 92 of file online-endpoint.h.

Referenced by kaldi::RuleActivated().

| bool must_contain_nonsilence |

Definition at line 89 of file online-endpoint.h.

Referenced by kaldi::RuleActivated().