This class takes unlabeled iVectors from the domain of interest and uses their mean and variance to adapt your PLDA matrices to a new domain. More...

#include <plda.h>

Public Member Functions | |

| PldaUnsupervisedAdaptor () | |

| void | AddStats (double weight, const Vector< double > &ivector) |

| void | AddStats (double weight, const Vector< float > &ivector) |

| void | UpdatePlda (const PldaUnsupervisedAdaptorConfig &config, Plda *plda) const |

Private Attributes | |

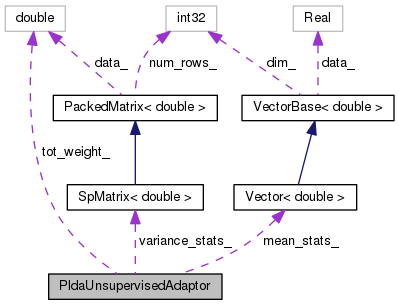

| double | tot_weight_ |

| Vector< double > | mean_stats_ |

| SpMatrix< double > | variance_stats_ |

This class takes unlabeled iVectors from the domain of interest and uses their mean and variance to adapt your PLDA matrices to a new domain.

This class also stores stats for this form of adaptation.

|

inline |

| void AddStats | ( | double | weight, |

| const Vector< double > & | ivector | ||

| ) |

Definition at line 595 of file plda.cc.

References VectorBase< Real >::Dim(), and KALDI_ASSERT.

Referenced by main().

Definition at line 607 of file plda.cc.

| void UpdatePlda | ( | const PldaUnsupervisedAdaptorConfig & | config, |

| Plda * | plda | ||

| ) | const |

Definition at line 613 of file plda.cc.

References SpMatrix< Real >::AddMat2Sp(), MatrixBase< Real >::AddMatMat(), MatrixBase< Real >::AddMatTp(), SpMatrix< Real >::AddTp2Sp(), VectorBase< Real >::AddVec(), SpMatrix< Real >::AddVec2(), PldaUnsupervisedAdaptorConfig::between_covar_scale, TpMatrix< Real >::Cholesky(), MatrixBase< Real >::CopyFromMat(), VectorBase< Real >::CopyFromVec(), Plda::Dim(), SpMatrix< Real >::Eig(), rnnlm::i, TpMatrix< Real >::Invert(), MatrixBase< Real >::Invert(), KALDI_ASSERT, KALDI_LOG, kaldi::kNoTrans, kaldi::kTrans, Plda::mean_, PldaUnsupervisedAdaptorConfig::mean_diff_scale, Plda::psi_, MatrixBase< Real >::Row(), PackedMatrix< Real >::Scale(), kaldi::SortSvd(), Plda::transform_, and PldaUnsupervisedAdaptorConfig::within_covar_scale.

Referenced by main().