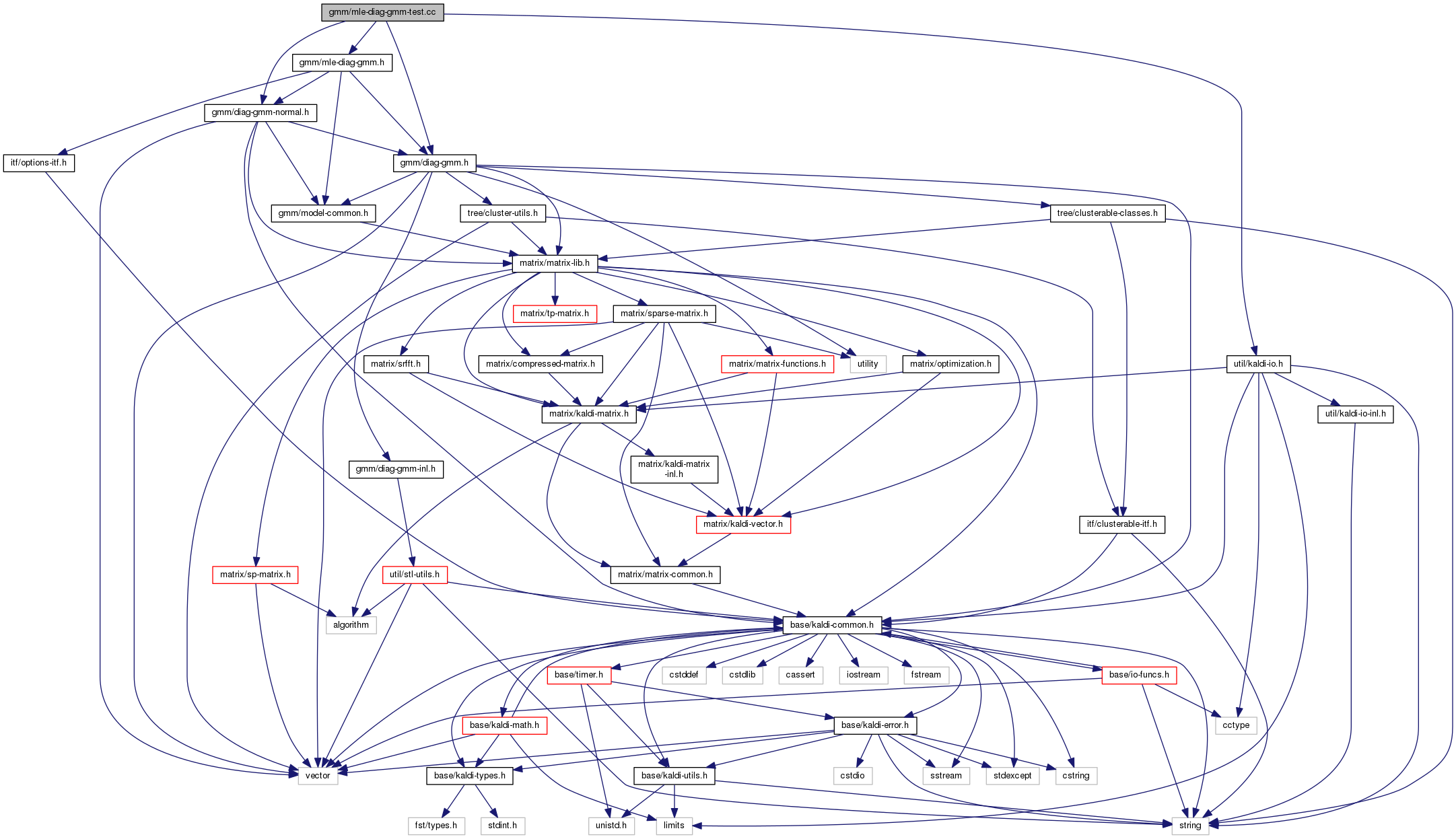

#include "gmm/diag-gmm.h"
#include "gmm/diag-gmm-normal.h"
#include "gmm/mle-diag-gmm.h"
#include "util/kaldi-io.h"


Functions

| Return type | Function |
|---|---|
| void | TestComponentAcc (const DiagGmm &gmm, const Matrix< BaseFloat > &feats) |
| void | test_flags_driven_update (const DiagGmm &gmm, const Matrix< BaseFloat > &feats, GmmFlagsType flags) |
| void | test_io (const DiagGmm &gmm, const AccumDiagGmm &est_gmm, bool binary, const Matrix< BaseFloat > &feats) |
| void | UnitTestEstimateDiagGmm () |
| int | main () |

int main ()

Definition at line 407 of file mle-diag-gmm-test.cc.

References rnnlm::i, and UnitTestEstimateDiagGmm().

void test_flags_driven_update (const DiagGmm &gmm, const Matrix< BaseFloat > &feats, GmmFlagsType flags)

Definition at line 87 of file mle-diag-gmm-test.cc.

References AccumDiagGmm::AccumulateFromDiag(), kaldi::AssertEqual(), DiagGmm::ComputeGconsts(), DiagGmm::CopyFromDiagGmm(), DiagGmm::Dim(), DiagGmm::GetMeans(), DiagGmm::GetVars(), rnnlm::i, KALDI_WARN, kaldi::kGmmAll, kaldi::kGmmMeans, kaldi::kGmmVariances, kaldi::kGmmWeights, DiagGmm::LogLikelihood(), kaldi::MleDiagGmmUpdate(), DiagGmm::NumGauss(), AccumDiagGmm::NumGauss(), MatrixBase< Real >::NumRows(), AccumDiagGmm::Resize(), MatrixBase< Real >::Row(), DiagGmm::SetInvVars(), DiagGmm::SetMeans(), DiagGmm::SetWeights(), AccumDiagGmm::SetZero(), and DiagGmm::weights().

Referenced by UnitTestEstimateDiagGmm().
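The references to kaldi::kGmmMeans, kaldi::kGmmVariances and kaldi::kGmmWeights suggest that test_flags_driven_update exercises re-estimating only the parameters selected by a GmmFlagsType bitmask. A minimal standalone sketch of that idea follows. It is not Kaldi's MleDiagGmmUpdate: the types SimpleDiagGmm and SimpleAccum, the flag names kUpdMeans, kUpdVariances and kUpdWeights, and the function MleUpdate are hypothetical. The sketch also assumes that the variance is computed around whichever mean the model holds after the mean step.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical bit flags selecting which parameters to re-estimate.
// They only mirror the idea of GmmFlagsType; names and values are illustrative.
enum UpdateFlags : unsigned {
  kUpdMeans = 0x1,
  kUpdVariances = 0x2,
  kUpdWeights = 0x4,
  kUpdAll = 0x7
};

// Hypothetical plain-vector diagonal GMM (not Kaldi's DiagGmm).
struct SimpleDiagGmm {
  std::vector<double> weights;             // [num_gauss]
  std::vector<std::vector<double>> means;  // [num_gauss][dim]
  std::vector<std::vector<double>> vars;   // [num_gauss][dim]
};

// Hypothetical sufficient statistics: occupancy, sum of x, sum of x^2.
struct SimpleAccum {
  std::vector<double> occupancy;            // [num_gauss]
  std::vector<std::vector<double>> x_sum;   // [num_gauss][dim]
  std::vector<std::vector<double>> x2_sum;  // [num_gauss][dim]
};

// Maximum-likelihood update that only overwrites the parameters selected by
// `flags`; everything else keeps its previous value.
void MleUpdate(const SimpleAccum& acc, unsigned flags, double min_variance,
               SimpleDiagGmm* gmm) {
  double total_occ = 0.0;
  for (double occ : acc.occupancy) total_occ += occ;

  for (std::size_t g = 0; g < acc.occupancy.size(); ++g) {
    const double occ = acc.occupancy[g];
    if ((flags & kUpdWeights) && total_occ > 0.0)
      gmm->weights[g] = occ / total_occ;
    if (occ <= 0.0) continue;  // no data: leave mean and variance unchanged
    for (std::size_t d = 0; d < acc.x_sum[g].size(); ++d) {
      const double ex = acc.x_sum[g][d] / occ;    // E[x]
      const double ex2 = acc.x2_sum[g][d] / occ;  // E[x^2]
      if (flags & kUpdMeans) gmm->means[g][d] = ex;
      if (flags & kUpdVariances) {
        // E[(x - mu)^2] around the current mean (new if just updated, else old).
        const double mu = gmm->means[g][d];
        const double var = ex2 - 2.0 * mu * ex + mu * mu;
        gmm->vars[g][d] = var < min_variance ? min_variance : var;  // floor
      }
    }
  }
}
```

When the means are updated in the same call, the variance expression reduces to the familiar E[x^2] - mu^2. When they are not, it stays the correct maximum-likelihood variance for the fixed, old mean.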

void test_io (const DiagGmm &gmm, const AccumDiagGmm &est_gmm, bool binary, const Matrix< BaseFloat > &feats)

Definition at line 161 of file mle-diag-gmm-test.cc.

References kaldi::AssertEqual(), DiagGmm::CopyFromDiagGmm(), AccumDiagGmm::Dim(), AccumDiagGmm::Flags(), rnnlm::i, kaldi::kGmmAll, DiagGmm::LogLikelihood(), kaldi::MleDiagGmmUpdate(), AccumDiagGmm::NumGauss(), MatrixBase< Real >::NumRows(), AccumDiagGmm::Read(), AccumDiagGmm::Resize(), MatrixBase< Real >::Row(), AccumDiagGmm::Scale(), Input::Stream(), and AccumDiagGmm::Write().

Referenced by UnitTestEstimateDiagGmm().
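test_io takes a `binary` flag and references AccumDiagGmm::Write() and AccumDiagGmm::Read(). That combination points to a serialization round-trip check in both binary and text mode. A minimal standalone sketch of such a round trip is shown below. WriteStats, ReadStats and RoundTrips are hypothetical helpers with their own ad hoc format, not Kaldi's I/O routines or on-disk format.

```cpp
#include <cassert>
#include <cstdint>
#include <iomanip>
#include <ios>
#include <limits>
#include <sstream>
#include <vector>

// Hypothetical format: element count followed by the values, either as raw
// bytes (binary == true) or as whitespace-separated decimal text.
void WriteStats(std::ostream& os, const std::vector<double>& v, bool binary) {
  const std::uint64_t n = v.size();
  if (binary) {
    os.write(reinterpret_cast<const char*>(&n), sizeof(n));
    if (n > 0)
      os.write(reinterpret_cast<const char*>(v.data()),
               static_cast<std::streamsize>(n * sizeof(double)));
  } else {
    // max_digits10 significant digits are enough for an exact text round trip.
    os << n << ' '
       << std::setprecision(std::numeric_limits<double>::max_digits10);
    for (double x : v) os << x << ' ';
  }
}

// Reads back what WriteStats produced, in the same mode.
std::vector<double> ReadStats(std::istream& is, bool binary) {
  std::uint64_t n = 0;
  if (binary)
    is.read(reinterpret_cast<char*>(&n), sizeof(n));
  else
    is >> n;
  std::vector<double> v(n);
  if (binary) {
    if (n > 0)
      is.read(reinterpret_cast<char*>(v.data()),
              static_cast<std::streamsize>(n * sizeof(double)));
  } else {
    for (double& x : v) is >> x;
  }
  return v;
}

// Writes stats to an in-memory stream, reads them back in the same mode and
// reports whether the values survived unchanged.
bool RoundTrips(const std::vector<double>& stats, bool binary) {
  std::ios_base::openmode mode = std::ios_base::in | std::ios_base::out;
  if (binary) mode |= std::ios_base::binary;
  std::stringstream ss(mode);
  WriteStats(ss, stats, binary);
  const std::vector<double> back = ReadStats(ss, binary);
  return !ss.fail() && back == stats;
}
```

Testing both modes matters because they fail differently. Binary mode copies bytes exactly, while text mode is only lossless if enough digits are printed.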

void TestComponentAcc (const DiagGmm &gmm, const Matrix< BaseFloat > &feats)

Definition at line 28 of file mle-diag-gmm-test.cc.

References AccumDiagGmm::AccumulateForComponent(), AccumDiagGmm::AccumulateFromDiag(), kaldi::AssertEqual(), DiagGmm::ComponentPosteriors(), DiagGmm::Dim(), rnnlm::i, KALDI_ASSERT, KALDI_WARN, kaldi::kGmmAll, DiagGmm::LogLikelihood(), kaldi::MleDiagGmmUpdate(), DiagGmm::NumGauss(), AccumDiagGmm::NumGauss(), MatrixBase< Real >::NumRows(), DiagGmm::Resize(), AccumDiagGmm::Resize(), MatrixBase< Real >::Row(), and AccumDiagGmm::SetZero().

Referenced by UnitTestEstimateDiagGmm().
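TestComponentAcc references DiagGmm::ComponentPosteriors() and AccumDiagGmm::AccumulateForComponent(). Together they accumulate each frame into every Gaussian, weighted by that Gaussian's posterior. The sketch below shows this computation in plain C++, normalizing posteriors in the log domain with log-sum-exp. Gmm, Accum, ComponentPosteriors and AccumulateFrames are hypothetical stand-ins, not Kaldi's classes or signatures.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical plain-vector diagonal GMM (not Kaldi's DiagGmm).
struct Gmm {
  std::vector<double> weights;
  std::vector<std::vector<double>> means, vars;
};

// Posterior p(g | x) for every component. Scores are kept in the log domain and
// normalized with log-sum-exp so very negative log-likelihoods do not underflow.
std::vector<double> ComponentPosteriors(const Gmm& gmm,
                                        const std::vector<double>& x) {
  const double log_2pi = std::log(2.0 * std::acos(-1.0));
  std::vector<double> log_like(gmm.weights.size());
  for (std::size_t g = 0; g < log_like.size(); ++g) {
    double ll = std::log(gmm.weights[g]);
    for (std::size_t d = 0; d < x.size(); ++d) {
      const double diff = x[d] - gmm.means[g][d];
      ll -= 0.5 * (log_2pi + std::log(gmm.vars[g][d]) +
                   diff * diff / gmm.vars[g][d]);
    }
    log_like[g] = ll;
  }
  const double max_ll = *std::max_element(log_like.begin(), log_like.end());
  double sum = 0.0;
  for (double l : log_like) sum += std::exp(l - max_ll);
  std::vector<double> post(log_like.size());
  for (std::size_t g = 0; g < post.size(); ++g)
    post[g] = std::exp(log_like[g] - max_ll) / sum;
  return post;
}

// Hypothetical per-component statistics: occupancy, sum of x, sum of x^2.
struct Accum {
  std::vector<double> occ;
  std::vector<std::vector<double>> x_sum, x2_sum;
  Accum(std::size_t num_gauss, std::size_t dim)
      : occ(num_gauss, 0.0),
        x_sum(num_gauss, std::vector<double>(dim, 0.0)),
        x2_sum(num_gauss, std::vector<double>(dim, 0.0)) {}
  // Adds frame x to component g with weight w (its posterior).
  void AccumulateForComponent(const std::vector<double>& x, std::size_t g,
                              double w) {
    occ[g] += w;
    for (std::size_t d = 0; d < x.size(); ++d) {
      x_sum[g][d] += w * x[d];
      x2_sum[g][d] += w * x[d] * x[d];
    }
  }
};

// Splits each frame across components according to its posteriors.
Accum AccumulateFrames(const Gmm& gmm,
                       const std::vector<std::vector<double>>& frames) {
  Accum acc(gmm.weights.size(), gmm.means[0].size());
  for (const auto& x : frames) {
    const std::vector<double> post = ComponentPosteriors(gmm, x);
    for (std::size_t g = 0; g < post.size(); ++g)
      acc.AccumulateForComponent(x, g, post[g]);
  }
  return acc;
}
```

Each frame's posteriors sum to one, so the accumulated occupancies across all components sum to the number of frames. That makes a convenient sanity check for this kind of accumulator.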

void UnitTestEstimateDiagGmm ()

Definition at line 202 of file mle-diag-gmm-test.cc.

References AccumDiagGmm::AccumulateFromDiag(), AccumDiagGmm::AccumulateFromDiagMultiThreaded(), VectorBase< Real >::AddVec(), VectorBase< Real >::AddVec2(), VectorBase< Real >::ApplyPow(), kaldi::ApproxEqual(), AccumDiagGmm::AssertEqual(), kaldi::AssertEqual(), DiagGmm::ComputeGconsts(), MatrixBase< Real >::CopyFromMat(), VectorBase< Real >::CopyRowFromMat(), DiagGmmNormal::CopyToDiagGmm(), count, rnnlm::d, DiagGmm::Dim(), kaldi::Exp(), rnnlm::i, DiagGmm::inv_vars(), MatrixBase< Real >::InvertElements(), KALDI_ASSERT, KALDI_LOG, kaldi::kGmmAll, kaldi::kGmmMeans, kaldi::kGmmVariances, kaldi::kGmmWeights, DiagGmmNormal::means_, DiagGmm::means_invvars(), MleDiagGmmOptions::min_variance, kaldi::MleDiagGmmUpdate(), DiagGmm::NumGauss(), kaldi::Rand(), kaldi::RandGauss(), AccumDiagGmm::Read(), DiagGmm::Resize(), AccumDiagGmm::Resize(), MatrixBase< Real >::Row(), VectorBase< Real >::Scale(), DiagGmm::SetInvVarsAndMeans(), DiagGmm::SetWeights(), AccumDiagGmm::SetZero(), DiagGmm::Split(), Input::Stream(), test_flags_driven_update(), test_io(), TestComponentAcc(), DiagGmmNormal::vars_, DiagGmm::weights(), and AccumDiagGmm::Write().

Referenced by main().
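UnitTestEstimateDiagGmm references kaldi::RandGauss(), MleDiagGmmOptions::min_variance and kaldi::MleDiagGmmUpdate(). This suggests it draws random data and re-estimates a model from it. The basic idea can be sketched with the standard `<random>` header: sample from a known diagonal Gaussian, then check that the maximum-likelihood estimates land close to the true parameters. SampleAndEstimate and DiagGaussEstimate are hypothetical. The single-component setup is a simplification of a full GMM, and the tolerances a caller would pick are a free choice, not values from the Kaldi test.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical result type: estimated per-dimension mean and variance.
struct DiagGaussEstimate {
  std::vector<double> mean, var;
};

// Draws num_frames samples from N(true_mean, diag(true_var)) and re-estimates
// mean and variance by maximum likelihood. Every frame belongs to the single
// component, so the occupancy is simply num_frames.
DiagGaussEstimate SampleAndEstimate(const std::vector<double>& true_mean,
                                    const std::vector<double>& true_var,
                                    std::size_t num_frames, unsigned seed) {
  std::mt19937 rng(seed);  // fixed seed keeps the check deterministic
  std::normal_distribution<double> gauss(0.0, 1.0);
  const std::size_t dim = true_mean.size();
  std::vector<double> x_sum(dim, 0.0), x2_sum(dim, 0.0);
  for (std::size_t t = 0; t < num_frames; ++t) {
    for (std::size_t d = 0; d < dim; ++d) {
      const double x = true_mean[d] + std::sqrt(true_var[d]) * gauss(rng);
      x_sum[d] += x;
      x2_sum[d] += x * x;
    }
  }
  DiagGaussEstimate est{std::vector<double>(dim), std::vector<double>(dim)};
  for (std::size_t d = 0; d < dim; ++d) {
    est.mean[d] = x_sum[d] / num_frames;
    est.var[d] = x2_sum[d] / num_frames - est.mean[d] * est.mean[d];
  }
  return est;
}
```

With N frames, the standard error of each mean estimate is about sqrt(var / N), so sensible tolerances shrink roughly as 1/sqrt(N).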