Estimation functions for basis fMLLR.

#include <basis-fmllr-diag-gmm.h>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
|  | BasisFmllrEstimate () |  |
|  | BasisFmllrEstimate (int32 dim) |  |
| void | Write (std::ostream &out_stream, bool binary) const | Routines for reading and writing fMLLR basis matrices. |
| void | Read (std::istream &in_stream, bool binary) |  |
| void | EstimateFmllrBasis (const AmDiagGmm &am_gmm, const BasisFmllrAccus &basis_accus) | Estimate the base matrices efficiently in a Maximum Likelihood manner. |
| void | ComputeAmDiagPrecond (const AmDiagGmm &am_gmm, SpMatrix< double > *pre_cond) | This function computes the preconditioner matrix, prior to base matrices estimation. |
| int32 | Dim () const |  |
| int32 | BasisSize () const |  |
| double | ComputeTransform (const AffineXformStats &spk_stats, Matrix< BaseFloat > *out_xform, Vector< BaseFloat > *coefficients, BasisFmllrOptions options) const | This function performs speaker adaptation, computing the fMLLR matrix based on speaker statistics. |

Private Attributes

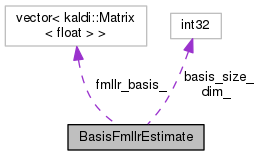

| Type | Member | Description |
|---|---|---|
| std::vector< Matrix< BaseFloat > > | fmllr_basis_ | Basis matrices. |
| int32 | dim_ | Feature dimension. |
| int32 | basis_size_ | Number of bases, D*(D+1). |

Detailed Description

Estimation functions for basis fMLLR.

Definition at line 107 of file basis-fmllr-diag-gmm.h.

Constructor & Destructor Documentation

BasisFmllrEstimate ( ) [inline]

Definition at line 110 of file basis-fmllr-diag-gmm.h.

BasisFmllrEstimate ( int32 dim ) [inline, explicit]

Definition at line 111 of file basis-fmllr-diag-gmm.h.

Member Function Documentation

int32 BasisSize ( ) const [inline]

Definition at line 139 of file basis-fmllr-diag-gmm.h.

void ComputeAmDiagPrecond ( const AmDiagGmm & am_gmm, SpMatrix< double > * pre_cond )

This function computes the preconditioner matrix prior to base matrix estimation.

Because it uses the expected values of the G statistics, it takes the acoustic model as its argument rather than the actual accumulated AffineXformStats. See section 5.1 of the paper.

Definition at line 156 of file basis-fmllr-diag-gmm.cc.

References VectorBase< Real >::AddVec2(), SpMatrix< Real >::CopyFromMat(), AmDiagGmm::Dim(), BasisFmllrAccus::dim_, DiagGmm::GetMeans(), AmDiagGmm::GetPdf(), DiagGmm::GetVars(), MatrixBase< Real >::IsSymmetric(), KALDI_ASSERT, KALDI_ERR, kaldi::kSetZero, kaldi::kTakeLower, DiagGmm::NumGauss(), AmDiagGmm::NumPdfs(), PackedMatrix< Real >::NumRows(), VectorBase< Real >::Range(), MatrixBase< Real >::Range(), SpMatrix< Real >::Resize(), MatrixBase< Real >::Row(), and DiagGmm::weights().
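The preconditioner is built from expected rather than accumulated statistics. As a minimal sketch of what "expected G statistics" can mean, the hypothetical helper ExpectedG below evaluates the standard fMLLR statistic G_i = sum_m gamma_m / sigma^2_{m,i} * x+ x+^T in expectation under a single diagonal GMM, with x+ = [x; 1] and the mixture weights standing in for the occupancies gamma_m. This is an illustration under those assumptions, not the section 5.1 formula: Kaldi's version loops over every pdf of the AmDiagGmm (its References list includes AmDiagGmm::NumPdfs() and AmDiagGmm::GetPdf()) and produces the SpMatrix< double > preconditioner itself.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One diagonal-covariance Gaussian (hypothetical stand-in for a DiagGmm
// component): mixture weight, mean, and per-dimension variances.
struct DiagGaussian {
  double weight;
  std::vector<double> mean;  // length D
  std::vector<double> var;   // length D, all entries > 0
};

using Mat = std::vector<std::vector<double>>;  // row-major (D+1) x (D+1)

// Expected fMLLR G statistic for feature dimension i, assuming the data are
// generated by the GMM itself and mixture weights replace occupancies:
//   G_i = sum_m w_m / var_m[i] * E[x+ x+^T | m],  x+ = [x; 1],
//   E[x+ x+^T | m] = [[diag(var_m) + mu_m mu_m^T, mu_m], [mu_m^T, 1]].
Mat ExpectedG(const std::vector<DiagGaussian> &gmm, std::size_t i) {
  assert(!gmm.empty());
  const std::size_t D = gmm[0].mean.size();
  assert(i < D);
  Mat G(D + 1, std::vector<double>(D + 1, 0.0));
  for (const DiagGaussian &g : gmm) {
    const double scale = g.weight / g.var[i];  // inverse-variance weighting
    for (std::size_t r = 0; r <= D; ++r) {
      const double xr = (r < D) ? g.mean[r] : 1.0;
      for (std::size_t c = 0; c <= D; ++c) {
        const double xc = (c < D) ? g.mean[c] : 1.0;
        double second_moment = xr * xc;                   // mu mu^T, mu, or 1
        if (r == c && r < D) second_moment += g.var[r];   // + diag(var)
        G[r][c] += scale * second_moment;
      }
    }
  }
  return G;
}
```

For a one-dimensional Gaussian with mean 2 and variance 4, the expected G_0 is [[2, 0.5], [0.5, 0.25]]: the second moment 4 + 2^2 = 8, the mean 2, and the constant 1, each scaled by 1/4.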

double ComputeTransform ( const AffineXformStats & spk_stats, Matrix< BaseFloat > * out_xform, Vector< BaseFloat > * coefficients, BasisFmllrOptions options ) const

This function performs speaker adaptation, computing the fMLLR matrix from speaker statistics.

It takes fMLLR statistics as its argument and also explicitly optimizes the basis weights (d_{1}, d_{2}, ..., d_{N}). It returns the total objective-function improvement over all iterations, relative to the objective at the initial value of "out_xform" (or at the unit transform if no initial transform is provided, i.e. if "out_xform" is all zeros). The coefficients are written to "coefficients" only if a non-NULL vector is passed. See section 5.3 of the paper for more details.

Definition at line 270 of file basis-fmllr-diag-gmm.cc.

References MatrixBase< Real >::AddMat(), VectorBase< Real >::AddVec(), AffineXformStats::beta_, kaldi::CalBasisFmllrStepSize(), MatrixBase< Real >::CopyFromMat(), AffineXformStats::dim_, BasisFmllrAccus::dim_, kaldi::FmllrAuxFuncDiagGmm(), AffineXformStats::G_, MatrixBase< Real >::InvertDouble(), MatrixBase< Real >::IsZero(), AffineXformStats::K_, KALDI_ASSERT, KALDI_VLOG, KALDI_WARN, kaldi::kNoTrans, kaldi::kSetZero, kaldi::kTrans, BasisFmllrOptions::min_count, BasisFmllrOptions::num_iters, MatrixBase< Real >::NumCols(), MatrixBase< Real >::NumRows(), MatrixBase< Real >::Range(), Vector< Real >::Resize(), Matrix< Real >::Resize(), MatrixBase< Real >::Row(), MatrixBase< Real >::Scale(), MatrixBase< Real >::SetUnit(), MatrixBase< Real >::SetZero(), BasisFmllrOptions::size_scale, BasisFmllrOptions::step_size_iters, kaldi::TraceMatMat(), and Matrix< Real >::Transpose().

Referenced by SingleUtteranceGmmDecoder::EstimateFmllr(), and main().
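Assuming the usual basis fMLLR model, in which a speaker's transform is the unit transform plus a weighted sum of basis matrices (W = W_0 + d_1 W_1 + ... + d_N W_N, with W_0 = [I | 0]), the sketch below shows how a set of coefficients becomes a D x (D+1) fMLLR matrix and how that matrix is applied to a feature vector as y = A x + b. The helpers ComposeTransform and ApplyTransform are hypothetical and use plain std::vector in place of Kaldi's Matrix and Vector; the iterative optimization of the coefficients that ComputeTransform performs is not shown.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Mat = std::vector<std::vector<double>>;  // row-major, D x (D+1)

// W = W0 + sum_n d_n * W_n, where W0 = [I | 0] is the unit transform.
// Bases beyond coeffs.size() are simply not used (weight zero).
Mat ComposeTransform(const std::vector<Mat> &basis,
                     const std::vector<double> &coeffs, std::size_t D) {
  assert(coeffs.size() <= basis.size());
  Mat W(D, std::vector<double>(D + 1, 0.0));
  for (std::size_t r = 0; r < D; ++r) W[r][r] = 1.0;  // W0 = [I | 0]
  for (std::size_t n = 0; n < coeffs.size(); ++n)
    for (std::size_t r = 0; r < D; ++r)
      for (std::size_t c = 0; c <= D; ++c)
        W[r][c] += coeffs[n] * basis[n][r][c];
  return W;
}

// y = W [x; 1] = A x + b, with A = first D columns of W and b = last column.
std::vector<double> ApplyTransform(const Mat &W, const std::vector<double> &x) {
  const std::size_t D = x.size();
  assert(W.size() == D);
  std::vector<double> y(D, 0.0);
  for (std::size_t r = 0; r < D; ++r) {
    y[r] = W[r][D];  // offset b
    for (std::size_t c = 0; c < D; ++c) y[r] += W[r][c] * x[c];
  }
  return y;
}
```

With no coefficients the result is the unit transform, so features pass through unchanged; this matches the unit-transform starting point described above.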

int32 Dim ( ) const [inline]

Definition at line 137 of file basis-fmllr-diag-gmm.h.

Referenced by SingleUtteranceGmmDecoder::EstimateFmllr().

void EstimateFmllrBasis ( const AmDiagGmm & am_gmm, const BasisFmllrAccus & basis_accus )

Estimate the base matrices efficiently in a maximum-likelihood manner.

It takes the diagonal-covariance GMM acoustic model as an argument, which is used to compute the preconditioner. The total number of bases is fixed at N = (dim + 1) * dim. Note that the SVD is performed in the normalized space; the base matrices are then converted back to the unnormalized space.

The sum of the [per-frame] eigenvalues is roughly equal to the improvement in log-likelihood of the training data.

Definition at line 219 of file basis-fmllr-diag-gmm.cc.

References SpMatrix< Real >::AddMat2Sp(), VectorBase< Real >::AddMatVec(), BasisFmllrAccus::beta_, TpMatrix< Real >::Cholesky(), MatrixBase< Real >::CopyFromTp(), BasisFmllrAccus::dim_, BasisFmllrAccus::grad_scatter_, TpMatrix< Real >::InvertDouble(), KALDI_LOG, kaldi::kNoTrans, kaldi::kSetZero, kaldi::kTrans, MatrixBase< Real >::Row(), VectorBase< Real >::Scale(), kaldi::SortSvd(), SpMatrix< Real >::SymPosSemiDefEig(), and Matrix< Real >::Transpose().

Referenced by main().
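The References list for EstimateFmllrBasis includes SpMatrix::SymPosSemiDefEig() and kaldi::SortSvd(), which suggests the eigenvectors are ranked by eigenvalue. Since the eigenvalue sum roughly tracks the per-frame log-likelihood improvement, a running sum over the sorted eigenvalues gives a rough estimate of how much each additional basis contributes. The hypothetical helpers below sketch that ranking with plain std::vector inputs; the index permutation they return is what one would apply to the matching eigenvectors.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Indices of the eigenvalues in decreasing order, so the matching
// eigenvectors (candidate basis directions) can be permuted the same way.
std::vector<std::size_t> DecreasingOrder(const std::vector<double> &eig) {
  std::vector<std::size_t> order(eig.size());
  std::iota(order.begin(), order.end(), 0);  // 0, 1, ..., n-1
  std::stable_sort(order.begin(), order.end(),
                   [&eig](std::size_t a, std::size_t b) { return eig[a] > eig[b]; });
  return order;
}

// Running sums of the sorted eigenvalues: entry k-1 is a rough estimate of
// the per-frame log-likelihood gain from keeping only the top k bases.
std::vector<double> CumulativeGain(const std::vector<double> &eig,
                                   const std::vector<std::size_t> &order) {
  std::vector<double> gains;
  gains.reserve(order.size());
  double running = 0.0;
  for (std::size_t idx : order) {
    running += eig[idx];
    gains.push_back(running);
  }
  return gains;
}
```

For eigenvalues {0.5, 3.0, 1.5} the order is {1, 2, 0} and the running gains are {3.0, 4.5, 5.0}, so most of the estimated improvement comes from the first basis.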

void Read ( std::istream & in_stream, bool binary )

Definition at line 133 of file basis-fmllr-diag-gmm.cc.

References BasisFmllrAccus::dim_, kaldi::ExpectToken(), KALDI_ASSERT, and kaldi::ReadBasicType().

void Write ( std::ostream & out_stream, bool binary ) const

Routines for reading and writing fMLLR basis matrices.

Definition at line 116 of file basis-fmllr-diag-gmm.cc.

References kaldi::WriteBasicType() and kaldi::WriteToken().
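Read and Write serialize the basis matrices; their References lists (WriteToken, WriteBasicType, ExpectToken, ReadBasicType) suggest a token, a count, and then the matrices. The text-mode sketch below mirrors that shape for a round trip through a std::stringstream. The token name, the "rows cols values" layout, and the helper names are all hypothetical; Kaldi's real on-disk format, including its binary mode, is not reproduced here.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <limits>
#include <ostream>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

using Mat = std::vector<std::vector<double>>;

// Hypothetical text format: "<HypotheticalBasisToken> n", then one line per
// matrix holding "rows cols v00 v01 ...".
void WriteBasis(std::ostream &os, const std::vector<Mat> &basis) {
  os.precision(std::numeric_limits<double>::max_digits10);  // exact round trip
  os << "<HypotheticalBasisToken> " << basis.size() << '\n';
  for (const Mat &m : basis) {
    const std::size_t rows = m.size(), cols = rows ? m[0].size() : 0;
    os << rows << ' ' << cols;
    for (const auto &row : m)
      for (double v : row) os << ' ' << v;
    os << '\n';
  }
}

std::vector<Mat> ReadBasis(std::istream &is) {
  std::string token;
  is >> token;
  if (token != "<HypotheticalBasisToken>")  // plays the role of ExpectToken()
    throw std::runtime_error("unexpected token: " + token);
  std::size_t n = 0;
  is >> n;
  std::vector<Mat> basis(n);
  for (Mat &m : basis) {
    std::size_t rows = 0, cols = 0;
    is >> rows >> cols;
    m.assign(rows, std::vector<double>(cols));
    for (auto &row : m)
      for (double &v : row) is >> v;
  }
  if (!is) throw std::runtime_error("truncated basis stream");
  return basis;
}
```

Writing a short header before the payload lets the reader fail fast on a mismatched file, which is the same check ExpectToken() performs in Kaldi's Read.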

Member Data Documentation

int32 basis_size_ [private]

Number of bases, D*(D+1).

Definition at line 163 of file basis-fmllr-diag-gmm.h.
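The basis size follows directly from the shape of an affine fMLLR transform: a D x (D+1) matrix has D(D+1) free parameters, so a complete basis has that many matrices. The one-line helper below (hypothetical, not a member of the class) makes the arithmetic concrete.

```cpp
#include <cassert>
#include <cstdint>

// Number of fMLLR bases for feature dimension D: one per free parameter of
// a D x (D+1) affine transform, i.e. D * (D + 1).
std::int32_t BasisSizeFor(std::int32_t dim) {
  assert(dim > 0);
  return dim * (dim + 1);
}
```

For example, 13-dimensional features give 13 * 14 = 182 bases, and 40-dimensional features give 40 * 41 = 1640.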

int32 dim_ [private]

Feature dimension.

Definition at line 161 of file basis-fmllr-diag-gmm.h.

std::vector< Matrix< BaseFloat > > fmllr_basis_ [private]

Basis matrices.

The dimensions are [T][D][D+1], where T is the number of bases.

Definition at line 159 of file basis-fmllr-diag-gmm.h.
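Because each of the T basis matrices is D x (D+1), a full basis (T = D(D+1)) stores (D(D+1))^2 values. The sketch below computes that size; for D = 40 it is 1640^2 = 2,689,600 values, or about 10.8 MB assuming a 4-byte single-precision BaseFloat (an assumption about the build configuration). The helper names are hypothetical; within the class, element (r, c) of basis t would be reached as fmllr_basis_[t](r, c) on the std::vector of Matrix objects.

```cpp
#include <cassert>
#include <cstddef>

// Number of values stored by a basis with layout [T][D][D+1]:
// T matrices, each with D rows and D+1 columns.
std::size_t BasisNumElements(std::size_t T, std::size_t D) {
  return T * D * (D + 1);
}

// Memory for those values at a given element size in bytes
// (4 for single-precision float, 8 for double).
std::size_t BasisBytes(std::size_t T, std::size_t D, std::size_t bytes_per_value) {
  return BasisNumElements(T, D) * bytes_per_value;
}
```

Since storage grows as D^4 for a full basis, the feature dimension dominates the size of the stored basis file.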