#include <optimization.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | OptimizeLbfgs (const VectorBase< Real > &x, const LbfgsOptions &opts) | Initializer takes the starting value of x. |
| const VectorBase< Real > & | GetValue (Real *objf_value=NULL) const | This returns the value of the variable x that has the best objective function so far, and the corresponding objective function value if requested. |
| const VectorBase< Real > & | GetProposedValue () const | This returns the value at which the function wants us to compute the objective function and gradient. |
| Real | RecentStepLength () const | Returns the average magnitude of the last n steps (but not more than the number we have stored). |
| void | DoStep (Real function_value, const VectorBase< Real > &gradient) | The user calls this function to provide the class with the function and gradient info at the point GetProposedValue(). |
| void | DoStep (Real function_value, const VectorBase< Real > &gradient, const VectorBase< Real > &diag_approx_2nd_deriv) | The user can call this version of DoStep() if it is desired to set some kind of approximate Hessian on this iteration. |

Private Types

| Type | Member | Description |
|---|---|---|
| enum | ComputationState { kBeforeStep, kWithinStep } | "compute $p_k \leftarrow -H_k \nabla f_k$" (i.e. Algorithm 7.4). |
| enum | { kWolfeI, kWolfeII, kNone } | |

Private Member Functions

| Type | Member |
|---|---|
| | KALDI_DISALLOW_COPY_AND_ASSIGN (OptimizeLbfgs) |
| MatrixIndexT | Dim () |
| MatrixIndexT | M () |
| SubVector< Real > | Y (MatrixIndexT i) |
| SubVector< Real > | S (MatrixIndexT i) |
| bool | AcceptStep (Real function_value, const VectorBase< Real > &gradient) |
| void | Restart (const VectorBase< Real > &x, Real function_value, const VectorBase< Real > &gradient) |
| void | ComputeNewDirection (Real function_value, const VectorBase< Real > &gradient) |
| void | ComputeHifNeeded (const VectorBase< Real > &gradient) |
| void | StepSizeIteration (Real function_value, const VectorBase< Real > &gradient) |
| void | RecordStepLength (Real s) |

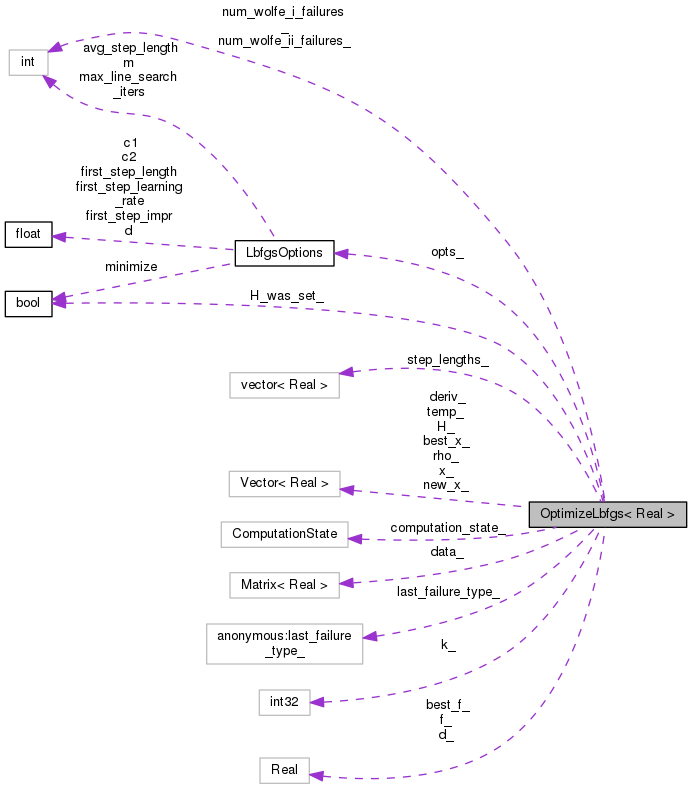

Private Attributes

| Type | Member |
|---|---|
| LbfgsOptions | opts_ |
| SignedMatrixIndexT | k_ |
| ComputationState | computation_state_ |
| bool | H_was_set_ |
| Vector< Real > | x_ |
| Vector< Real > | new_x_ |
| Vector< Real > | best_x_ |
| Vector< Real > | deriv_ |
| Vector< Real > | temp_ |
| Real | f_ |
| Real | best_f_ |
| Real | d_ |
| int | num_wolfe_i_failures_ |
| int | num_wolfe_ii_failures_ |
| enum kaldi::OptimizeLbfgs:: { ... } | last_failure_type_ |
| Vector< Real > | H_ |
| Matrix< Real > | data_ |
| Vector< Real > | rho_ |
| std::vector< Real > | step_lengths_ |

Definition at line 121 of file optimization.h.

| anonymous enum | private |

| Enumerator | |
|---|---|
| kWolfeI | |
| kWolfeII | |
| kNone | |

Definition at line 223 of file optimization.h.
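The enumerators kWolfeI and kWolfeII appear to name the two Wolfe conditions a line-search step can fail, and StepSizeIteration() references LbfgsOptions::c1 and LbfgsOptions::c2. As a hedged illustration, the sketch below checks the textbook (weak) Wolfe conditions for a minimization problem along a direction p. The enum only mirrors the enumerator names; `Dot` and `CheckWolfe` are hypothetical helpers, not Kaldi's line-search code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Mirrors the enumerator names: which Wolfe condition failed, if any.
enum WolfeFailure { kWolfeI, kWolfeII, kNone };

double Dot(const std::vector<double> &a, const std::vector<double> &b) {
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); i++) sum += a[i] * b[i];
  return sum;
}

// Weak Wolfe conditions for minimization, for a trial step alpha along p.
// f0, g0: objective and gradient at the start point x.
// f1, g1: objective and gradient at the trial point x + alpha * p.
WolfeFailure CheckWolfe(double f0, const std::vector<double> &g0,
                        double f1, const std::vector<double> &g1,
                        const std::vector<double> &p, double alpha,
                        double c1, double c2) {
  const double d0 = Dot(g0, p);  // directional derivative; < 0 for descent
  if (f1 > f0 + c1 * alpha * d0) return kWolfeI;  // not enough decrease
  if (Dot(g1, p) < c2 * d0) return kWolfeII;      // slope still too steep
  return kNone;
}
```

For f(x) = x^2 at x = 1 with p = -2, a step of 0.25 satisfies both conditions, a step of 1 fails the first, and a tiny step of 0.01 fails the second.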

| enum ComputationState | private |

"compute $p_k \leftarrow -H_k \nabla f_k$" (i.e. Algorithm 7.4).

| Enumerator | |
|---|---|
| kBeforeStep | |
| kWithinStep | |

Definition at line 173 of file optimization.h.
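The comment above refers to Algorithm 7.4, which presumably is the L-BFGS two-loop recursion from Nocedal and Wright's *Numerical Optimization*. A minimal sketch of that recursion for minimization, using std::vector instead of Kaldi's vector types: `s` and `y` hold the stored step and gradient-difference pairs (oldest first), and `h0` is a diagonal initial inverse-Hessian approximation, which is an assumption about how H_ is used. `Vec`, `Dot`, and `TwoLoopDirection` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

double Dot(const Vec &a, const Vec &b) {
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); i++) sum += a[i] * b[i];
  return sum;
}

// Textbook L-BFGS two-loop recursion for minimization.
// g:    gradient at the current point.
// s, y: stored pairs s_i = x_{i+1} - x_i, y_i = g_{i+1} - g_i, oldest first.
// h0:   diagonal of the initial inverse-Hessian approximation.
// Returns the search direction p = -H g.
Vec TwoLoopDirection(const Vec &g, const std::vector<Vec> &s,
                     const std::vector<Vec> &y, const Vec &h0) {
  assert(s.size() == y.size() && h0.size() == g.size());
  const std::size_t m = s.size(), n = g.size();
  Vec q = g, alpha(m), rho(m);
  for (std::size_t k = m; k-- > 0;) {  // newest pair to oldest
    rho[k] = 1.0 / Dot(y[k], s[k]);
    alpha[k] = rho[k] * Dot(s[k], q);
    for (std::size_t j = 0; j < n; j++) q[j] -= alpha[k] * y[k][j];
  }
  Vec r(n);
  for (std::size_t j = 0; j < n; j++) r[j] = h0[j] * q[j];  // r = H0 q
  for (std::size_t k = 0; k < m; k++) {  // oldest pair to newest
    const double beta = rho[k] * Dot(y[k], r);
    for (std::size_t j = 0; j < n; j++) r[j] += s[k][j] * (alpha[k] - beta);
  }
  for (double &v : r) v = -v;  // r held H g; negate for the descent direction
  return r;
}
```

With no stored pairs the result reduces to the diagonally scaled gradient step p_j = -h0_j g_j; each stored pair then corrects that scaling along the directions explored so far.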

| OptimizeLbfgs | ( | const VectorBase< Real > & x, const LbfgsOptions & opts | ) |

The initializer takes the starting value of x and the options opts.

Definition at line 35 of file optimization.cc.

References OptimizeLbfgs< Real >::best_f_, OptimizeLbfgs< Real >::best_x_, OptimizeLbfgs< Real >::data_, OptimizeLbfgs< Real >::deriv_, VectorBase< Real >::Dim(), OptimizeLbfgs< Real >::f_, KALDI_ASSERT, LbfgsOptions::m, LbfgsOptions::minimize, OptimizeLbfgs< Real >::new_x_, OptimizeLbfgs< Real >::rho_, OptimizeLbfgs< Real >::temp_, and OptimizeLbfgs< Real >::x_.

| bool AcceptStep | ( | Real function_value, const VectorBase< Real > & gradient | ) | private |

Definition at line 173 of file optimization.cc.

References VectorBase< Real >::AddVec(), VectorBase< Real >::CopyFromVec(), OptimizeLbfgs< Real >::deriv_, OptimizeLbfgs< Real >::f_, OptimizeLbfgs< Real >::k_, KALDI_VLOG, LbfgsOptions::m, LbfgsOptions::minimize, OptimizeLbfgs< Real >::new_x_, VectorBase< Real >::Norm(), OptimizeLbfgs< Real >::opts_, OptimizeLbfgs< Real >::RecordStepLength(), OptimizeLbfgs< Real >::rho_, OptimizeLbfgs< Real >::S(), kaldi::VecVec(), OptimizeLbfgs< Real >::x_, and OptimizeLbfgs< Real >::Y().

Referenced by OptimizeLbfgs< Real >::StepSizeIteration().

| void ComputeHifNeeded | ( | const VectorBase< Real > & gradient | ) | private |

Definition at line 70 of file optimization.cc.

References LbfgsOptions::first_step_impr, LbfgsOptions::first_step_learning_rate, LbfgsOptions::first_step_length, OptimizeLbfgs< Real >::H_, OptimizeLbfgs< Real >::H_was_set_, OptimizeLbfgs< Real >::k_, KALDI_ASSERT, KALDI_ISINF, KALDI_ISNAN, KALDI_WARN, LbfgsOptions::minimize, VectorBase< Real >::Norm(), OptimizeLbfgs< Real >::opts_, OptimizeLbfgs< Real >::S(), kaldi::VecVec(), OptimizeLbfgs< Real >::x_, and OptimizeLbfgs< Real >::Y().

Referenced by OptimizeLbfgs< Real >::ComputeNewDirection().

| void ComputeNewDirection | ( | Real function_value, const VectorBase< Real > & gradient | ) | private |

Definition at line 114 of file optimization.cc.

References VectorBase< Real >::AddVec(), VectorBase< Real >::AddVecVec(), OptimizeLbfgs< Real >::computation_state_, OptimizeLbfgs< Real >::ComputeHifNeeded(), LbfgsOptions::d, OptimizeLbfgs< Real >::d_, OptimizeLbfgs< Real >::deriv_, OptimizeLbfgs< Real >::f_, OptimizeLbfgs< Real >::H_, rnnlm::i, OptimizeLbfgs< Real >::k_, KALDI_ASSERT, KALDI_WARN, OptimizeLbfgs< Real >::kBeforeStep, OptimizeLbfgs< Real >::kNone, OptimizeLbfgs< Real >::kWithinStep, OptimizeLbfgs< Real >::last_failure_type_, OptimizeLbfgs< Real >::M(), LbfgsOptions::minimize, OptimizeLbfgs< Real >::new_x_, OptimizeLbfgs< Real >::num_wolfe_i_failures_, OptimizeLbfgs< Real >::num_wolfe_ii_failures_, OptimizeLbfgs< Real >::opts_, OptimizeLbfgs< Real >::rho_, OptimizeLbfgs< Real >::S(), VectorBase< Real >::SetZero(), kaldi::VecVec(), OptimizeLbfgs< Real >::x_, and OptimizeLbfgs< Real >::Y().

Referenced by OptimizeLbfgs< Real >::DoStep(), OptimizeLbfgs< Real >::Restart(), and OptimizeLbfgs< Real >::StepSizeIteration().

| MatrixIndexT Dim | ( | ) | inline private |

Definition at line 179 of file optimization.h.

| void DoStep | ( | Real function_value, const VectorBase< Real > & gradient | ) |

The user calls this function to provide the class with the function and gradient info at the point GetProposedValue().

If this point violates the constraints, you can set function_value to +infinity for a minimization problem or -infinity for a maximization problem. In that case the gradient, and also the second-derivative argument (if you call the overloaded version of this function), will be ignored.
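The infinity convention can be wrapped in a small helper. In this sketch `InfeasibleObjf` and the demo objective `NegEntropyObjf` (the sum of x_i log x_i, defined only for x_i > 0) are hypothetical; the point is that an infeasible point reports an infinite value and leaves the gradient unset, since DoStep() ignores it in that case.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Objective value to report for a point that violates the constraints:
// +infinity when minimizing, -infinity when maximizing.
double InfeasibleObjf(bool minimize) {
  const double inf = std::numeric_limits<double>::infinity();
  return minimize ? inf : -inf;
}

// Demo objective to minimize: sum_i x_i * log(x_i), defined only for x_i > 0.
// grad must already have the same size as x.
double NegEntropyObjf(const std::vector<double> &x, std::vector<double> *grad) {
  assert(grad->size() == x.size());
  for (double xi : x)
    if (xi <= 0.0) return InfeasibleObjf(true);  // gradient left unset
  double f = 0.0;
  for (std::size_t i = 0; i < x.size(); i++) {
    f += x[i] * std::log(x[i]);
    (*grad)[i] = std::log(x[i]) + 1.0;
  }
  return f;
}
```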

Definition at line 383 of file optimization.cc.

References OptimizeLbfgs< Real >::best_f_, OptimizeLbfgs< Real >::best_x_, OptimizeLbfgs< Real >::computation_state_, OptimizeLbfgs< Real >::ComputeNewDirection(), OptimizeLbfgs< Real >::kBeforeStep, LbfgsOptions::minimize, OptimizeLbfgs< Real >::new_x_, OptimizeLbfgs< Real >::opts_, and OptimizeLbfgs< Real >::StepSizeIteration().

Referenced by kaldi::nnet2::CombineNnets(), kaldi::nnet2::CombineNnetsA(), LogisticRegression::DoStep(), OptimizeLbfgs< Real >::DoStep(), FastNnetCombiner::FastNnetCombiner(), kaldi::nnet2::ShrinkNnet(), and kaldi::UnitTestLbfgs().
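The calling protocol is a reverse-communication loop: ask for GetProposedValue(), evaluate the objective there, report back with DoStep(), and read the result with GetValue() at the end. The sketch below writes that loop as a template over any type with those methods. So that it runs without Kaldi, it is exercised with `ToyGradientDescent`, a hypothetical stand-in that performs plain fixed-step gradient descent rather than L-BFGS; `RunOptimizer` and `ShiftedQuadratic` are likewise illustrative names. Passing an OptimizeLbfgs<Real> with a Vector<Real> gradient buffer instead is an assumption about typical usage, not code taken from the callers listed above.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Runs num_iters rounds of the protocol on any optimizer exposing
// GetProposedValue() and DoStep(); objf(x, gradient) returns f(x) and
// writes the gradient at x into *gradient.
template <class Optimizer, class Vector, class Objective>
void RunOptimizer(Optimizer &opt, Vector *gradient, Objective objf,
                  int num_iters) {
  for (int iter = 0; iter < num_iters; iter++) {
    const auto &x = opt.GetProposedValue();  // point to evaluate
    const auto f = objf(x, gradient);
    opt.DoStep(f, *gradient);  // report value and gradient at x
  }
}

// Hypothetical stand-in: plain fixed-step gradient descent, NOT L-BFGS,
// exposing the same three public methods so the driver runs without Kaldi.
class ToyGradientDescent {
 public:
  ToyGradientDescent(const std::vector<double> &x0, double learning_rate)
      : x_(x0), best_x_(x0), learning_rate_(learning_rate) {}

  const std::vector<double> &GetProposedValue() const { return x_; }

  void DoStep(double function_value, const std::vector<double> &gradient) {
    assert(gradient.size() == x_.size());
    if (function_value < best_f_) {  // remember the best point seen so far
      best_f_ = function_value;
      best_x_ = x_;
    }
    for (std::size_t i = 0; i < x_.size(); i++)
      x_[i] -= learning_rate_ * gradient[i];
  }

  const std::vector<double> &GetValue(double *objf_value = nullptr) const {
    if (objf_value != nullptr) *objf_value = best_f_;
    return best_x_;
  }

 private:
  std::vector<double> x_, best_x_;
  double learning_rate_;
  double best_f_ = std::numeric_limits<double>::infinity();
};

// Demo objective: sum_i (x_i - 3)^2, minimized at x_i = 3.
double ShiftedQuadratic(const std::vector<double> &x,
                        std::vector<double> *grad) {
  double f = 0.0;
  for (std::size_t i = 0; i < x.size(); i++) {
    const double d = x[i] - 3.0;
    f += d * d;
    (*grad)[i] = 2.0 * d;
  }
  return f;
}
```

Because the optimizer never calls the objective itself, the caller keeps full control over how each evaluation is done (for example, distributing it across machines) between GetProposedValue() and DoStep().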

| void DoStep | ( | Real function_value, const VectorBase< Real > & gradient, const VectorBase< Real > & diag_approx_2nd_deriv | ) |

Call this version of DoStep() if you want to supply a diagonal approximation to the Hessian (the second derivative of the objective) on this iteration.

Note: every element of diag_approx_2nd_deriv must be strictly positive when minimizing, or strictly negative when maximizing.

Definition at line 396 of file optimization.cc.

References OptimizeLbfgs< Real >::best_f_, OptimizeLbfgs< Real >::best_x_, OptimizeLbfgs< Real >::DoStep(), OptimizeLbfgs< Real >::H_, OptimizeLbfgs< Real >::H_was_set_, KALDI_ASSERT, VectorBase< Real >::Max(), VectorBase< Real >::Min(), LbfgsOptions::minimize, OptimizeLbfgs< Real >::new_x_, and OptimizeLbfgs< Real >::opts_.
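A hedged sketch of the sign precondition: the hypothetical helper `DiagApproxIsValid` checks every element through the smallest or largest one, much as the references to Min(), Max() and KALDI_ASSERT suggest DoStep() itself does. Checking before the call lets the caller repair or drop a bad approximation instead of hitting an assertion.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// True if every element is strictly positive (minimize == true) or strictly
// negative (minimize == false); an empty vector is accepted vacuously.
bool DiagApproxIsValid(const std::vector<double> &diag, bool minimize) {
  if (diag.empty()) return true;
  if (minimize)
    return *std::min_element(diag.begin(), diag.end()) > 0.0;
  return *std::max_element(diag.begin(), diag.end()) < 0.0;
}

// Hard-failing variant, comparable in spirit to an assertion inside DoStep().
void CheckDiagApprox(const std::vector<double> &diag, bool minimize) {
  assert(DiagApproxIsValid(diag, minimize) &&
         "diagonal approximation has the wrong sign");
}
```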

| const VectorBase< Real > & GetProposedValue | ( | ) | const | inline |

Returns the point at which the optimizer wants the caller to compute the objective function and gradient.

Definition at line 134 of file optimization.h.

References KALDI_DISALLOW_COPY_AND_ASSIGN.

Referenced by kaldi::nnet2::CombineNnets(), kaldi::nnet2::CombineNnetsA(), LogisticRegression::DoStep(), FastNnetCombiner::FastNnetCombiner(), kaldi::nnet2::ShrinkNnet(), and kaldi::UnitTestLbfgs().

| const VectorBase< Real > & GetValue | ( | Real * | objf_value = NULL | ) | const |

Returns the value of x that has the best objective function value so far and, if objf_value is not NULL, writes that objective function value to *objf_value.

This would typically be called only at the end.

Definition at line 416 of file optimization.cc.

References OptimizeLbfgs< Real >::best_f_, and OptimizeLbfgs< Real >::best_x_.

Referenced by kaldi::nnet2::CombineNnets(), kaldi::nnet2::CombineNnetsA(), FastNnetCombiner::FastNnetCombiner(), kaldi::nnet2::ShrinkNnet(), LogisticRegression::TrainParameters(), and kaldi::UnitTestLbfgs().

| KALDI_DISALLOW_COPY_AND_ASSIGN | ( | OptimizeLbfgs | ) | private |

| MatrixIndexT M | ( | ) | inline private |

Definition at line 180 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::ComputeNewDirection(), and kaldi::LinearCgd().

| Real RecentStepLength | ( | ) | const |

Returns the average magnitude of the last n steps (but not more than the number we have stored).

Before any steps have been taken, it returns +infinity. Note: if the most recent step length was 0, it returns 0 regardless of the other step lengths. This makes it suitable as a convergence test (otherwise we would generate NaNs).

Definition at line 55 of file optimization.cc.

References rnnlm::i, rnnlm::n, and OptimizeLbfgs< Real >::step_lengths_.

Referenced by kaldi::UnitTestLbfgs().
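The documented conventions of RecentStepLength() can be sketched with a fixed-size window of recent step lengths. `StepLengthWindow` is hypothetical; its window size plays the role that LbfgsOptions::avg_step_length appears to play in RecordStepLength(), and it uses a plain arithmetic mean, which is an assumption about what "average" means here.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <limits>

class StepLengthWindow {
 public:
  explicit StepLengthWindow(std::size_t window) : window_(window) {
    assert(window > 0);
  }

  // Record the length of the step just taken, keeping at most window_ values.
  void Record(double s) {
    lengths_.push_back(s);
    if (lengths_.size() > window_) lengths_.pop_front();
  }

  // Mean of the stored step lengths, following the documented conventions.
  double Recent() const {
    if (lengths_.empty()) return std::numeric_limits<double>::infinity();
    if (lengths_.back() == 0.0) return 0.0;  // most recent step was zero
    double sum = 0.0;
    for (double s : lengths_) sum += s;
    return sum / static_cast<double>(lengths_.size());
  }

 private:
  std::size_t window_;
  std::deque<double> lengths_;
};
```

Used as a convergence test, a caller might stop once Recent() drops below a tolerance; because an empty window reports +infinity, such a test cannot fire before the first step.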

| void RecordStepLength | ( | Real s | ) | private |

Definition at line 207 of file optimization.cc.

References LbfgsOptions::avg_step_length, OptimizeLbfgs< Real >::opts_, and OptimizeLbfgs< Real >::step_lengths_.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), and OptimizeLbfgs< Real >::Restart().

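| void Restart | ( | const VectorBase< Real > & x, Real function_value, const VectorBase< Real > & gradient | ) | private |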

Definition at line 215 of file optimization.cc.

References VectorBase< Real >::AddVec(), OptimizeLbfgs< Real >::computation_state_, OptimizeLbfgs< Real >::ComputeNewDirection(), VectorBase< Real >::CopyFromVec(), OptimizeLbfgs< Real >::f_, OptimizeLbfgs< Real >::k_, OptimizeLbfgs< Real >::kBeforeStep, OptimizeLbfgs< Real >::new_x_, VectorBase< Real >::Norm(), OptimizeLbfgs< Real >::RecordStepLength(), OptimizeLbfgs< Real >::temp_, and OptimizeLbfgs< Real >::x_.

Referenced by OptimizeLbfgs< Real >::StepSizeIteration().

| SubVector< Real > S | ( | MatrixIndexT i | ) | inline private |

Definition at line 184 of file optimization.h.

References data_.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), OptimizeLbfgs< Real >::ComputeHifNeeded(), and OptimizeLbfgs< Real >::ComputeNewDirection().

| void StepSizeIteration | ( | Real function_value, const VectorBase< Real > & gradient | ) | private |

Definition at line 238 of file optimization.cc.

References OptimizeLbfgs< Real >::AcceptStep(), LbfgsOptions::c1, LbfgsOptions::c2, OptimizeLbfgs< Real >::computation_state_, OptimizeLbfgs< Real >::ComputeNewDirection(), OptimizeLbfgs< Real >::d_, OptimizeLbfgs< Real >::deriv_, OptimizeLbfgs< Real >::f_, OptimizeLbfgs< Real >::k_, KALDI_VLOG, OptimizeLbfgs< Real >::kBeforeStep, OptimizeLbfgs< Real >::kWolfeI, OptimizeLbfgs< Real >::kWolfeII, OptimizeLbfgs< Real >::last_failure_type_, LbfgsOptions::max_line_search_iters, LbfgsOptions::minimize, OptimizeLbfgs< Real >::new_x_, OptimizeLbfgs< Real >::num_wolfe_i_failures_, OptimizeLbfgs< Real >::num_wolfe_ii_failures_, OptimizeLbfgs< Real >::opts_, OptimizeLbfgs< Real >::Restart(), OptimizeLbfgs< Real >::temp_, kaldi::VecVec(), and OptimizeLbfgs< Real >::x_.

Referenced by OptimizeLbfgs< Real >::DoStep().

| SubVector< Real > Y | ( | MatrixIndexT i | ) | inline private |

Definition at line 181 of file optimization.h.

References data_.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), OptimizeLbfgs< Real >::ComputeHifNeeded(), and OptimizeLbfgs< Real >::ComputeNewDirection().
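S() and Y() are inline accessors into data_, and the number of stored pairs is presumably bounded by LbfgsOptions::m. One plausible layout, assumed here rather than taken from the source, is a 2m x dim buffer with the y vectors in the first m rows and the s vectors in the next m, indexed modulo m so the newest pair overwrites the oldest. `LbfgsHistory` is a hypothetical illustration of that ring-buffer indexing.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed layout: a (2*m) x dim row-major buffer; rows [0, m) hold y vectors,
// rows [m, 2m) hold s vectors. Pair i lives in slot i % m, so once more than
// m pairs have been stored, the newest pair overwrites the oldest.
class LbfgsHistory {
 public:
  LbfgsHistory(std::size_t m, std::size_t dim)
      : m_(m), dim_(dim), data_(2 * m * dim, 0.0) {
    assert(m > 0 && dim > 0);
  }
  std::size_t M() const { return m_; }
  std::size_t Dim() const { return dim_; }
  double *Y(std::size_t i) { return &data_[(i % m_) * dim_]; }
  double *S(std::size_t i) { return &data_[(m_ + i % m_) * dim_]; }

 private:
  std::size_t m_, dim_;
  std::vector<double> data_;
};
```

Storing the pairs as rows of one matrix keeps memory at a fixed 2m x dim regardless of how many iterations run, which is the point of the "limited-memory" variant.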

| Real best_f_ | private |

Definition at line 217 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::DoStep(), OptimizeLbfgs< Real >::GetValue(), and OptimizeLbfgs< Real >::OptimizeLbfgs().

| Vector< Real > best_x_ | private |

Definition at line 212 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::DoStep(), OptimizeLbfgs< Real >::GetValue(), and OptimizeLbfgs< Real >::OptimizeLbfgs().

| ComputationState computation_state_ | private |

Definition at line 205 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::ComputeNewDirection(), OptimizeLbfgs< Real >::DoStep(), OptimizeLbfgs< Real >::Restart(), and OptimizeLbfgs< Real >::StepSizeIteration().

| Real d_ | private |

Definition at line 218 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::ComputeNewDirection(), and OptimizeLbfgs< Real >::StepSizeIteration().

| Matrix< Real > data_ | private |

Definition at line 228 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::OptimizeLbfgs().

| Vector< Real > deriv_ | private |

Definition at line 214 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), OptimizeLbfgs< Real >::ComputeNewDirection(), OptimizeLbfgs< Real >::OptimizeLbfgs(), and OptimizeLbfgs< Real >::StepSizeIteration().

| Real f_ | private |

Definition at line 216 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), OptimizeLbfgs< Real >::ComputeNewDirection(), OptimizeLbfgs< Real >::OptimizeLbfgs(), OptimizeLbfgs< Real >::Restart(), and OptimizeLbfgs< Real >::StepSizeIteration().

| Vector< Real > H_ | private |

Definition at line 226 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::ComputeHifNeeded(), OptimizeLbfgs< Real >::ComputeNewDirection(), and OptimizeLbfgs< Real >::DoStep().

| bool H_was_set_ | private |

Definition at line 206 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::ComputeHifNeeded(), and OptimizeLbfgs< Real >::DoStep().

| SignedMatrixIndexT k_ | private |

Definition at line 202 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), OptimizeLbfgs< Real >::ComputeHifNeeded(), OptimizeLbfgs< Real >::ComputeNewDirection(), OptimizeLbfgs< Real >::Restart(), and OptimizeLbfgs< Real >::StepSizeIteration().

| enum { ... } last_failure_type_ | private |

| int num_wolfe_i_failures_ | private |

Definition at line 221 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::ComputeNewDirection(), and OptimizeLbfgs< Real >::StepSizeIteration().

| int num_wolfe_ii_failures_ | private |

Definition at line 222 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::ComputeNewDirection(), and OptimizeLbfgs< Real >::StepSizeIteration().

| LbfgsOptions opts_ | private |

Definition at line 201 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), OptimizeLbfgs< Real >::ComputeHifNeeded(), OptimizeLbfgs< Real >::ComputeNewDirection(), OptimizeLbfgs< Real >::DoStep(), OptimizeLbfgs< Real >::RecordStepLength(), and OptimizeLbfgs< Real >::StepSizeIteration().

| Vector< Real > rho_ | private |

Definition at line 230 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), OptimizeLbfgs< Real >::ComputeNewDirection(), and OptimizeLbfgs< Real >::OptimizeLbfgs().

| std::vector< Real > step_lengths_ | private |

Definition at line 232 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::RecentStepLength(), and OptimizeLbfgs< Real >::RecordStepLength().

| Vector< Real > temp_ | private |

Definition at line 215 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::OptimizeLbfgs(), OptimizeLbfgs< Real >::Restart(), and OptimizeLbfgs< Real >::StepSizeIteration().

| Vector< Real > x_ | private |

Definition at line 210 of file optimization.h.

Referenced by OptimizeLbfgs< Real >::AcceptStep(), OptimizeLbfgs< Real >::ComputeHifNeeded(), OptimizeLbfgs< Real >::ComputeNewDirection(), OptimizeLbfgs< Real >::OptimizeLbfgs(), OptimizeLbfgs< Real >::Restart(), and OptimizeLbfgs< Real >::StepSizeIteration().