Public Member Functions | |

| FastNnetCombiner (const NnetCombineFastConfig &combine_config, const std::vector< NnetExample > &validation_set, const std::vector< Nnet > &nnets_in, Nnet *nnet_out) | |

Private Member Functions | |

| int32 | GetInitialModel (const std::vector< NnetExample > &validation_set, const std::vector< Nnet > &nnets) const |

| Returns an integer saying which model to use: either 0 ... num-models - 1 for the best individual model, or (#models) for the average of all of them. | |

| void | GetInitialParams () |

| void | ComputePreconditioner () |

| double | ComputeObjfAndGradient (Vector< double > *gradient, double *regularizer_objf) |

| Computes objf at point "params_". | |

| void | ComputeCurrentNnet (Nnet *dest, bool debug=false) |

Static Private Member Functions | |

| static void | CombineNnets (const Vector< double > &scale_params, const std::vector< Nnet > &nnets, Nnet *dest) |

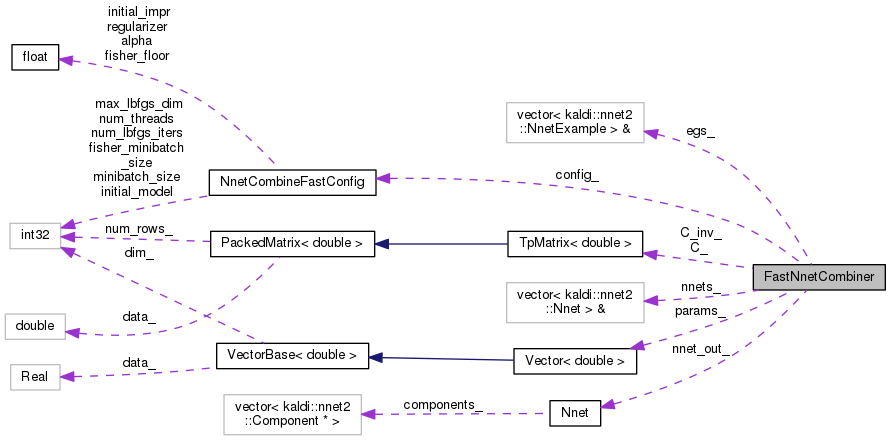

Private Attributes | |

| TpMatrix< double > | C_ |

| TpMatrix< double > | C_inv_ |

| Vector< double > | params_ |

| const NnetCombineFastConfig & | config_ |

| const std::vector< NnetExample > & | egs_ |

| const std::vector< Nnet > & | nnets_ |

| Nnet * | nnet_out_ |

Definition at line 102 of file combine-nnet-fast.cc.

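| FastNnetCombiner (const NnetCombineFastConfig &combine_config, const std::vector< NnetExample > &validation_set, const std::vector< Nnet > &nnets_in, Nnet *nnet_out) | inline |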

Definition at line 104 of file combine-nnet-fast.cc.

References kaldi::nnet2::CombineNnets(), kaldi::nnet2::ComputeObjfAndGradient(), OptimizeLbfgs< Real >::DoStep(), LbfgsOptions::first_step_impr, kaldi::nnet2::GetInitialModel(), OptimizeLbfgs< Real >::GetProposedValue(), OptimizeLbfgs< Real >::GetValue(), rnnlm::i, KALDI_ASSERT, KALDI_LOG, LbfgsOptions::m, and LbfgsOptions::minimize.

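| static void CombineNnets (const Vector< double > &scale_params, const std::vector< Nnet > &nnets, Nnet *dest) | static, private |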

Definition at line 201 of file combine-nnet-fast.cc.

References Nnet::AddNnet(), KALDI_ASSERT, rnnlm::n, kaldi::nnet3::NumUpdatableComponents(), and Nnet::ScaleComponents().
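The references to `Nnet::ScaleComponents()` and `Nnet::AddNnet()` suggest that the output network is a weighted sum of the input networks, with one scale per network and updatable component. The following is a minimal, self-contained sketch of that idea, not the Kaldi code: `ToyNnet` and `CombineToyNnets` are hypothetical names, and the model-major layout of `scale_params` is an assumption made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a network: one flat parameter vector per
// updatable component. This is not Kaldi's Nnet class.
using ToyNnet = std::vector<std::vector<double>>;

// Computes dest[c] = sum over n of scale(n, c) * nnets[n][c].
// scale_params is assumed to be laid out model-major, i.e. the scale for
// model n and component c sits at index n * num_components + c.
ToyNnet CombineToyNnets(const std::vector<double> &scale_params,
                        const std::vector<ToyNnet> &nnets) {
  assert(!nnets.empty());
  const std::size_t num_components = nnets[0].size();
  assert(scale_params.size() == nnets.size() * num_components);

  // Start from zeros with the same shape as the first network.
  ToyNnet dest(num_components);
  for (std::size_t c = 0; c < num_components; ++c)
    dest[c].assign(nnets[0][c].size(), 0.0);

  for (std::size_t n = 0; n < nnets.size(); ++n) {
    assert(nnets[n].size() == num_components);
    for (std::size_t c = 0; c < num_components; ++c) {
      assert(nnets[n][c].size() == dest[c].size());
      const double scale = scale_params[n * num_components + c];
      for (std::size_t k = 0; k < dest[c].size(); ++k)
        dest[c][k] += scale * nnets[n][c][k];
    }
  }
  return dest;
}
```

With two toy networks and scales `{0.5, 1.0, 0.5, 0.0}`, the first component of the result is the plain average of the two inputs and the second component is copied from the first network only.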

| void ComputeCurrentNnet (Nnet *dest, bool debug=false) | private |

Definition at line 356 of file combine-nnet-fast.cc.

References VectorBase< Real >::AddTpVec(), kaldi::nnet2::CombineNnets(), MatrixBase< Real >::CopyRowsFromVec(), KALDI_ASSERT, KALDI_LOG, kaldi::kTrans, FisherComputationClass::nnets_, and kaldi::nnet3::NumUpdatableComponents().

| double ComputeObjfAndGradient (Vector< double > *gradient, double *regularizer_objf) | private |

Computes objf at point "params_".

Definition at line 299 of file combine-nnet-fast.cc.

References VectorBase< Real >::AddTpVec(), kaldi::nnet2::DoBackpropParallel(), UpdatableComponent::DotProduct(), FisherComputationClass::egs_, Nnet::GetComponent(), rnnlm::i, rnnlm::j, KALDI_ASSERT, KALDI_VLOG, kaldi::kNoTrans, rnnlm::n, FisherComputationClass::nnets_, Nnet::NumComponents(), and Nnet::SetZero().
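Both this function and ComputeCurrentNnet() reference `VectorBase< Real >::AddTpVec()`, one with `kNoTrans` and the other with `kTrans`, which is the usual pattern for a preconditioned change of variables: a transposed triangular product maps the optimizer's parameters to raw scales, and the non-transposed product maps the raw gradient back. The sketch below illustrates only that chain rule under stated assumptions. `Matrix`, `TriangularMultiply`, `ToRawParams` and `ToPreconditionedGradient` are hypothetical names, and which of `C_` or `C_inv_` plays the role of `L` is not stated on this page.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Dense row-major storage of a lower-triangular matrix; entries above the
// diagonal are ignored. Hypothetical stand-in for a packed triangular matrix.
using Matrix = std::vector<std::vector<double>>;

// Returns L * v (transpose == false) or L^T * v (transpose == true) for a
// lower-triangular L.
std::vector<double> TriangularMultiply(const Matrix &L,
                                       const std::vector<double> &v,
                                       bool transpose) {
  const std::size_t dim = L.size();
  assert(v.size() == dim);
  std::vector<double> out(dim, 0.0);
  for (std::size_t i = 0; i < dim; ++i) {
    assert(L[i].size() == dim);
    for (std::size_t j = 0; j <= i; ++j) {
      if (transpose)
        out[j] += L[i][j] * v[i];  // (L^T v)_j = sum_i L_ij v_i
      else
        out[i] += L[i][j] * v[j];  // (L v)_i = sum_j L_ij v_j
    }
  }
  return out;
}

// Maps preconditioned parameters to raw scales: raw = L^T * params.
std::vector<double> ToRawParams(const Matrix &L,
                                const std::vector<double> &params) {
  return TriangularMultiply(L, params, true);
}

// Chain rule for raw = L^T * params:
// d(objf)/d(params) = L * d(objf)/d(raw).
std::vector<double> ToPreconditionedGradient(
    const Matrix &L, const std::vector<double> &raw_gradient) {
  return TriangularMultiply(L, raw_gradient, false);
}
```

A quick consistency check for this pairing is that the inner product of gradient and parameters is the same in both coordinate systems, since g · (L^T p) = (L g) · p.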

| void ComputePreconditioner () | private |

Definition at line 220 of file combine-nnet-fast.cc.

References FisherComputationClass::egs_, rnnlm::i, KALDI_ASSERT, kaldi::kTrans, FisherComputationClass::nnets_, PackedMatrix< Real >::NumRows(), SpMatrix< Real >::Resize(), PackedMatrix< Real >::Scale(), and SpMatrix< Real >::Trace().

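| int32 GetInitialModel (const std::vector< NnetExample > &validation_set, const std::vector< Nnet > &nnets) const | private |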

Returns an integer saying which model to use: either 0 ... num-models - 1 for the best individual model, or (#models) for the average of all of them.

Definition at line 381 of file combine-nnet-fast.cc.

References kaldi::nnet2::CombineNnets(), kaldi::nnet2::ComputeNnetObjfParallel(), KALDI_ASSERT, KALDI_LOG, rnnlm::n, and VectorBase< Real >::Set().
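The return convention described above can be sketched as follows. This is an illustrative, self-contained example rather than the Kaldi implementation: `ChooseInitialModel` is a hypothetical name, the per-model objective values are taken as already computed, a larger objective is assumed to be better, and the tie-breaking rule in favor of the average is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the index of the best individual model (0 ... num_models - 1), or
// num_models if the averaged model scores at least as well as every
// individual model. Assumes a larger objective value is better.
int ChooseInitialModel(const std::vector<double> &model_objfs,
                       double average_objf) {
  assert(!model_objfs.empty());
  std::size_t best = 0;
  for (std::size_t n = 1; n < model_objfs.size(); ++n) {
    if (model_objfs[n] > model_objfs[best]) best = n;
  }
  if (average_objf >= model_objfs[best])
    return static_cast<int>(model_objfs.size());  // use the average
  return static_cast<int>(best);                  // use one input model
}
```

Returning `num_models` as a sentinel keeps the result a single integer, so the caller can distinguish "use model n" from "use the average" without a second output argument.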

| void GetInitialParams () | private |

Definition at line 268 of file combine-nnet-fast.cc.

References FisherComputationClass::egs_, kaldi::nnet2::GetInitialModel(), KALDI_ASSERT, KALDI_LOG, FisherComputationClass::nnets_, and VectorBase< Real >::Set().

| TpMatrix< double > C_ | private |

Definition at line 186 of file combine-nnet-fast.cc.

| TpMatrix< double > C_inv_ | private |

Definition at line 187 of file combine-nnet-fast.cc.

| const NnetCombineFastConfig & config_ | private |

Definition at line 193 of file combine-nnet-fast.cc.

| const std::vector< NnetExample > & egs_ | private |

Definition at line 194 of file combine-nnet-fast.cc.

| Nnet * nnet_out_ | private |

Definition at line 196 of file combine-nnet-fast.cc.

| const std::vector< Nnet > & nnets_ | private |

Definition at line 195 of file combine-nnet-fast.cc.

| Vector< double > params_ | private |

Definition at line 188 of file combine-nnet-fast.cc.