Public Member Functions

| Return type | Member |
| --- | --- |
| | NnetComputer (const Nnet &nnet, const CuMatrixBase< BaseFloat > &input_feats, bool pad, Nnet *nnet_to_update=NULL) |
| void | Propagate () |
| | The forward-through-the-layers part of the computation. |
| void | Backprop (CuMatrix< BaseFloat > *tmp_deriv) |
| BaseFloat | ComputeLastLayerDeriv (const Posterior &pdf_post, CuMatrix< BaseFloat > *deriv) const |
| | Computes objf derivative at last layer, and returns objective function summed over labels and multiplied by utterance_weight. |
| CuMatrixBase< BaseFloat > & | GetOutput () |

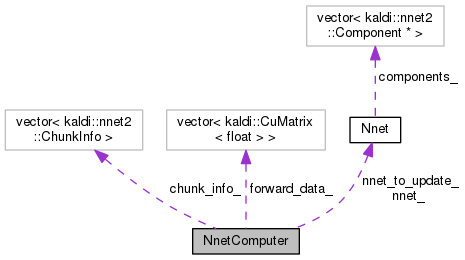

Private Attributes

| Type | Name |
| --- | --- |
| const Nnet & | nnet_ |
| std::vector< CuMatrix< BaseFloat > > | forward_data_ |
| Nnet * | nnet_to_update_ |
| std::vector< ChunkInfo > | chunk_info_ |

Definition at line 32 of file nnet-compute.cc.

| NnetComputer | ( | const Nnet &nnet, const CuMatrixBase< BaseFloat > &input_feats, bool pad, Nnet *nnet_to_update = NULL | ) |

Definition at line 64 of file nnet-compute.cc.

References NnetComputer::chunk_info_, Nnet::ComputeChunkInfo(), NnetComputer::forward_data_, rnnlm::i, Nnet::InputDim(), KALDI_ERR, Nnet::LeftContext(), NnetComputer::nnet_, CuMatrixBase< Real >::NumCols(), Nnet::NumComponents(), CuMatrixBase< Real >::NumRows(), CuMatrixBase< Real >::Range(), CuMatrix< Real >::Resize(), Nnet::RightContext(), and CuMatrixBase< Real >::Row().
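The constructor takes a `pad` flag and references `Nnet::LeftContext()`, `Nnet::RightContext()`, `CuMatrixBase::Range()` and `CuMatrixBase::Row()`. One plausible reading, assumed here and not confirmed by this page, is that padding extends the input with copies of the first and last frames to cover the network's temporal context. The sketch below illustrates that idea with plain `std::vector` types; `PadWithEdgeFrames`, `Frame` and `Matrix` are hypothetical names, not part of Kaldi.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Frame = std::vector<float>;
using Matrix = std::vector<Frame>;  // one row per frame

// Hypothetical helper (not a Kaldi function): returns a copy of `feats`
// with `left_context` copies of the first frame prepended and
// `right_context` copies of the last frame appended.
Matrix PadWithEdgeFrames(const Matrix &feats,
                         std::size_t left_context,
                         std::size_t right_context) {
  assert(!feats.empty());  // edge frames must exist to be repeated
  Matrix padded;
  padded.reserve(left_context + feats.size() + right_context);
  padded.insert(padded.end(), left_context, feats.front());
  padded.insert(padded.end(), feats.begin(), feats.end());
  padded.insert(padded.end(), right_context, feats.back());
  return padded;
}
```

With `pad` set to false, the equivalent call would use zero context on both sides and return the input unchanged.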

| void Backprop | ( | CuMatrix< BaseFloat > *tmp_deriv | ) |

Definition at line 141 of file nnet-compute.cc.

References Component::Backprop(), NnetComputer::chunk_info_, NnetComputer::forward_data_, Nnet::GetComponent(), KALDI_ASSERT, NnetComputer::nnet_, NnetComputer::nnet_to_update_, and Nnet::NumComponents().

Referenced by kaldi::nnet2::NnetGradientComputation().

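| BaseFloat ComputeLastLayerDeriv | ( | const Posterior &pdf_post, CuMatrix< BaseFloat > *deriv | ) | const |

Computes objf derivative at last layer, and returns objective function summed over labels and multiplied by utterance_weight.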

[Note: utterance_weight will normally be 1.0].

Definition at line 111 of file nnet-compute.cc.

References NnetComputer::forward_data_, rnnlm::i, rnnlm::j, KALDI_ASSERT, KALDI_VLOG, kaldi::Log(), NnetComputer::nnet_, CuMatrixBase< Real >::NumCols(), Nnet::NumComponents(), CuMatrixBase< Real >::NumRows(), and CuMatrix< Real >::Resize().

Referenced by kaldi::nnet2::NnetGradientComputation().
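To make the description of `ComputeLastLayerDeriv()` concrete, here is a minimal sketch of a weighted log-probability objective and its derivative with respect to the last-layer output. It assumes the objective is the sum of `weight * log(prob)` over the labels in the posterior, which is consistent with the reference to `kaldi::Log()` but is not spelled out on this page. `DenseMatrix`, `ToyPosterior` and `LastLayerObjfAndDeriv` are hypothetical stand-ins for the Kaldi types and method.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using DenseMatrix = std::vector<std::vector<double>>;
// Stand-in for Posterior: per frame, a list of (pdf index, weight) pairs.
using ToyPosterior = std::vector<std::vector<std::pair<int, double>>>;

// Hypothetical sketch: returns sum over frames and labels of
// weight * log(output[frame][label]) and writes d(objf)/d(output)
// into *deriv, which is resized and zeroed first.
double LastLayerObjfAndDeriv(const DenseMatrix &output,
                             const ToyPosterior &post,
                             DenseMatrix *deriv) {
  assert(deriv != nullptr);
  assert(post.size() == output.size());  // one posterior entry per frame
  deriv->assign(output.size(), std::vector<double>());
  double tot_objf = 0.0;
  for (std::size_t i = 0; i < output.size(); ++i) {
    (*deriv)[i].assign(output[i].size(), 0.0);
    for (const auto &entry : post[i]) {
      const int label = entry.first;
      const double weight = entry.second;
      assert(label >= 0 &&
             static_cast<std::size_t>(label) < output[i].size());
      const double prob = output[i][label];
      assert(prob > 0.0);  // log and division need a positive probability
      tot_objf += weight * std::log(prob);
      (*deriv)[i][label] += weight / prob;  // d/dp of weight * log(p)
    }
  }
  return tot_objf;
}
```

The derivative is nonzero only at the labelled entries of each frame, so the resulting matrix is sparse in practice even though it is stored densely here.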

| CuMatrixBase< BaseFloat > & GetOutput | ( | ) | inline |

Definition at line 54 of file nnet-compute.cc.

References NnetComputer::forward_data_.

Referenced by kaldi::nnet2::NnetComputation(), and kaldi::nnet2::NnetComputationChunked().

| void Propagate | ( | ) |

The forward-through-the-layers part of the computation.

Definition at line 95 of file nnet-compute.cc.

References Component::BackpropNeedsInput(), Component::BackpropNeedsOutput(), NnetComputer::chunk_info_, NnetComputer::forward_data_, Nnet::GetComponent(), NnetComputer::nnet_, NnetComputer::nnet_to_update_, Nnet::NumComponents(), and Component::Propagate().

Referenced by kaldi::nnet2::NnetComputation(), kaldi::nnet2::NnetComputationChunked(), and kaldi::nnet2::NnetGradientComputation().
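As an illustration of a forward pass over a stack of components, the sketch below keeps one activation buffer per layer boundary, in the spirit of `forward_data_`. It assumes that intermediate buffers may be dropped when no backprop will follow, which the references to `Component::BackpropNeedsInput()`, `Component::BackpropNeedsOutput()` and `nnet_to_update_` suggest but this page does not state. `ToyLayer`, `Activations` and `ForwardThroughLayers` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Activations = std::vector<double>;
// Hypothetical stand-in for a component's forward function.
using ToyLayer = std::function<Activations(const Activations &)>;

// Hypothetical sketch: slot 0 holds the input and slot c + 1 holds the
// output of layer c. If keep_intermediate is false, each buffer is cleared
// once the next layer has consumed it, so only the final output remains.
std::vector<Activations> ForwardThroughLayers(
    const std::vector<ToyLayer> &layers,
    const Activations &input,
    bool keep_intermediate) {
  std::vector<Activations> forward_data(layers.size() + 1);
  forward_data[0] = input;
  for (std::size_t c = 0; c < layers.size(); ++c) {
    assert(layers[c] != nullptr);  // every layer must be callable
    forward_data[c + 1] = layers[c](forward_data[c]);
    if (!keep_intermediate)
      forward_data[c].clear();  // no backprop planned, so free the buffer
  }
  return forward_data;
}
```

In this sketch the last element of the returned vector plays the role of what `GetOutput()` exposes after `Propagate()` has run.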

| std::vector< ChunkInfo > chunk_info_ | private |

Definition at line 61 of file nnet-compute.cc.

Referenced by NnetComputer::Backprop(), NnetComputer::NnetComputer(), and NnetComputer::Propagate().

| std::vector< CuMatrix< BaseFloat > > forward_data_ | private |

Definition at line 58 of file nnet-compute.cc.

Referenced by NnetComputer::Backprop(), NnetComputer::ComputeLastLayerDeriv(), NnetComputer::GetOutput(), NnetComputer::NnetComputer(), and NnetComputer::Propagate().

| const Nnet & nnet_ | private |

Definition at line 57 of file nnet-compute.cc.

Referenced by NnetComputer::Backprop(), NnetComputer::ComputeLastLayerDeriv(), NnetComputer::NnetComputer(), and NnetComputer::Propagate().

| Nnet * nnet_to_update_ | private |

Definition at line 59 of file nnet-compute.cc.

Referenced by NnetComputer::Backprop(), and NnetComputer::Propagate().