#include <nnet-component.h>

Public Member Functions | |

| SoftmaxComponent (int32 dim) | |

| SoftmaxComponent (const SoftmaxComponent &other) | |

| SoftmaxComponent () | |

| virtual std::string | Type () const |

| virtual bool | BackpropNeedsInput () const |

| virtual bool | BackpropNeedsOutput () const |

| virtual void | Propagate (const ChunkInfo &in_info, const ChunkInfo &out_info, const CuMatrixBase< BaseFloat > &in, CuMatrixBase< BaseFloat > *out) const |

| Perform forward pass propagation Input->Output. | |

| virtual void | Backprop (const ChunkInfo &in_info, const ChunkInfo &out_info, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_value, const CuMatrixBase< BaseFloat > &out_deriv, Component *to_update, CuMatrix< BaseFloat > *in_deriv) const |

| Perform backward pass propagation of the derivative, and also either update the model (if to_update == this) or update another model or compute the model derivative (otherwise). | |

| void | MixUp (int32 num_mixtures, BaseFloat power, BaseFloat min_count, BaseFloat perturb_stddev, AffineComponent *ac, SumGroupComponent *sc) |

| Allocate mixtures to states via a power rule, and add any new mixtures. | |

| virtual Component * | Copy () const |

| Copy component (deep copy). | |

Public Member Functions inherited from NonlinearComponent | |

| void | Init (int32 dim) |

| NonlinearComponent (int32 dim) | |

| NonlinearComponent () | |

| NonlinearComponent (const NonlinearComponent &other) | |

| virtual int32 | InputDim () const |

| Get size of input vectors. | |

| virtual int32 | OutputDim () const |

| Get size of output vectors. | |

| virtual void | InitFromString (std::string args) |

| We implement InitFromString at this level. | |

| virtual void | Read (std::istream &is, bool binary) |

| We implement Read at this level as it just needs the Type(). | |

| virtual void | Write (std::ostream &os, bool binary) const |

| Write component to stream. | |

| void | Scale (BaseFloat scale) |

| void | Add (BaseFloat alpha, const NonlinearComponent &other) |

| const CuVector< double > & | ValueSum () const |

| const CuVector< double > & | DerivSum () const |

| double | Count () const |

| void | SetDim (int32 dim) |

Public Member Functions inherited from Component | |

| Component () | |

| virtual int32 | Index () const |

| Returns the index in the sequence of layers in the neural net; intended only to be used in debugging information. | |

| virtual void | SetIndex (int32 index) |

| virtual std::vector< int32 > | Context () const |

| Return a vector describing the temporal context this component requires for each frame of output, as a sorted list. | |

| void | Propagate (const ChunkInfo &in_info, const ChunkInfo &out_info, const CuMatrixBase< BaseFloat > &in, CuMatrix< BaseFloat > *out) const |

| A non-virtual propagate function that first resizes output if necessary. | |

| virtual std::string | Info () const |

| virtual | ~Component () |

Private Member Functions | |

| SoftmaxComponent & | operator= (const SoftmaxComponent &other) |

Additional Inherited Members | |

Static Public Member Functions inherited from Component | |

| static Component * | ReadNew (std::istream &is, bool binary) |

| Read component from stream. | |

| static Component * | NewFromString (const std::string &initializer_line) |

| Initialize the Component from one line that will contain first the type, e.g. More... | |

| static Component * | NewComponentOfType (const std::string &type) |

| Return a new Component of the given type e.g. More... | |

Protected Member Functions inherited from NonlinearComponent | |

| void | UpdateStats (const CuMatrixBase< BaseFloat > &out_value, const CuMatrixBase< BaseFloat > *deriv=NULL) |

| const NonlinearComponent & | operator= (const NonlinearComponent &other) |

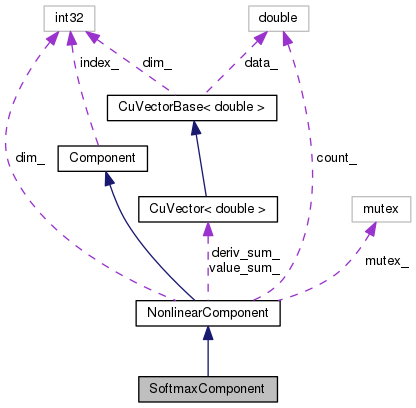

Protected Attributes inherited from NonlinearComponent | |

| int32 | dim_ |

| CuVector< double > | value_sum_ |

| CuVector< double > | deriv_sum_ |

| double | count_ |

| std::mutex | mutex_ |

Definition at line 777 of file nnet-component.h.

| SoftmaxComponent (int32 dim) | inline explicit |

Definition at line 779 of file nnet-component.h.

| SoftmaxComponent (const SoftmaxComponent &other) | inline explicit |

Definition at line 780 of file nnet-component.h.

| SoftmaxComponent () | inline |

Definition at line 781 of file nnet-component.h.

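| void Backprop (const ChunkInfo &in_info, const ChunkInfo &out_info, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_value, const CuMatrixBase< BaseFloat > &out_deriv, Component *to_update, CuMatrix< BaseFloat > *in_deriv) const | virtual |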

Perform backward pass propagation of the derivative, and also either update the model (if to_update == this) or update another model or compute the model derivative (otherwise).

Note: in_value and out_value are the values of the input and output of the component, and these may be dummy variables if respectively BackpropNeedsInput() or BackpropNeedsOutput() return false for that component (not all components need these).

num_chunks lets us treat the input matrix as contiguous-in-time chunks of equal size; it only matters if splicing is involved.

Implements Component.

Definition at line 919 of file nnet-component.cc.

References CuMatrixBase< Real >::DiffSoftmaxPerRow(), CuMatrixBase< Real >::NumCols(), CuMatrixBase< Real >::NumRows(), CuMatrix< Real >::Resize(), and NonlinearComponent::UpdateStats().
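The reference to `DiffSoftmaxPerRow()` suggests that the input derivative is computed row by row from the softmax output and the output derivative alone. As a minimal sketch of that standard softmax backward step (not the library's implementation), each row satisfies `in_deriv = out_value * (out_deriv - dot(out_value, out_deriv))`. The `Matrix` alias and the free function below are hypothetical stand-ins for `CuMatrixBase< BaseFloat >` and its member function, used only to keep the example self-contained.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical row-major stand-in for CuMatrixBase<BaseFloat>.
using Matrix = std::vector<std::vector<float>>;

// Illustrative per-row softmax derivative (an assumption about what
// DiffSoftmaxPerRow computes, based on the standard softmax Jacobian):
//   in_deriv[r][c] = y[c] * (e[c] - sum_j y[j] * e[j])
// where y is a row of out_value and e is the matching row of out_deriv.
Matrix DiffSoftmaxPerRowSketch(const Matrix &out_value,
                               const Matrix &out_deriv) {
  assert(out_value.size() == out_deriv.size());
  Matrix in_deriv(out_value.size());
  for (std::size_t r = 0; r < out_value.size(); ++r) {
    const std::vector<float> &y = out_value[r];
    const std::vector<float> &e = out_deriv[r];
    assert(y.size() == e.size());
    // Dot product of the softmax output with the incoming derivative.
    float dot = 0.0f;
    for (std::size_t c = 0; c < y.size(); ++c) dot += y[c] * e[c];
    in_deriv[r].resize(y.size());
    for (std::size_t c = 0; c < y.size(); ++c) {
      in_deriv[r][c] = y[c] * (e[c] - dot);
    }
  }
  return in_deriv;
}
```

Because the formula uses only `out_value` and `out_deriv`, a sketch like this never reads `in_value`, which is consistent with the note above that the input may be a dummy variable for some components.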

| bool BackpropNeedsInput () const | inline virtual |

Reimplemented from Component.

Definition at line 783 of file nnet-component.h.

| bool BackpropNeedsOutput () const | inline virtual |

Reimplemented from Component.

Definition at line 784 of file nnet-component.h.

References Component::Propagate().

| Component * Copy () const | inline virtual |

| void MixUp (int32 num_mixtures, BaseFloat power, BaseFloat min_count, BaseFloat perturb_stddev, AffineComponent *ac, SumGroupComponent *sc) |

Allocate mixtures to states via a power rule, and add any new mixtures.

Totals the count over all the output dims of the softmax layer that correspond to a given mixture. This total is used to allocate new quasi-Gaussians.

Definition at line 107 of file mixup-nnet.cc.

References VectorBase< Real >::AddVec(), AffineComponent::bias_params_, CuVectorBase< Real >::CopyFromVec(), VectorBase< Real >::CopyFromVec(), NonlinearComponent::count_, rnnlm::d, VectorBase< Real >::Data(), VectorBase< Real >::Dim(), CuVectorBase< Real >::Dim(), NonlinearComponent::dim_, SumGroupComponent::GetSizes(), kaldi::GetSplitTargets(), rnnlm::i, SumGroupComponent::Init(), AffineComponent::InputDim(), KALDI_ASSERT, KALDI_LOG, AffineComponent::linear_params_, kaldi::Log(), VectorBase< Real >::Range(), MatrixBase< Real >::Range(), CuVector< Real >::Resize(), AffineComponent::SetParams(), VectorBase< Real >::SetRandn(), CuVectorBase< Real >::Sum(), and NonlinearComponent::value_sum_.

Referenced by kaldi::nnet2::MixupNnet().
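The description only says that mixtures are allocated "via a power rule" and references `kaldi::GetSplitTargets()`, so the following is one plausible reading rather than the library's code. In this sketch every state starts with one mixture, and each additional mixture goes to the state with the largest `count^power / current_mixtures`, unless splitting would leave fewer than `min_count` observations per mixture. The function name `AllocateMixturesByPowerRule` and its exact stopping rule are assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical helper: distributes up to `num_mixtures` mixtures over states
// whose occupation counts are given in `counts`. Not Kaldi's implementation.
std::vector<int> AllocateMixturesByPowerRule(const std::vector<double> &counts,
                                             int num_mixtures, double power,
                                             double min_count) {
  assert(power >= 0.0);
  std::vector<int> alloc(counts.size(), 1);  // one mixture per state to start
  // Max-heap of (priority, state index); priority = count^power / mixtures.
  std::priority_queue<std::pair<double, std::size_t>> queue;
  for (std::size_t s = 0; s < counts.size(); ++s) {
    queue.push({std::pow(counts[s], power), s});
  }
  int total = static_cast<int>(counts.size());
  while (total < num_mixtures && !queue.empty()) {
    const std::size_t s = queue.top().second;
    queue.pop();
    // Assumed min-count rule: a state that cannot afford another mixture is
    // dropped from the queue and keeps its current allocation.
    if ((alloc[s] + 1) * min_count >= counts[s]) continue;
    ++alloc[s];
    ++total;
    queue.push({std::pow(counts[s], power) / alloc[s], s});
  }
  return alloc;
}
```

With `power` equal to 1 the allocation follows the raw counts, while `power` equal to 0 ignores the counts and spreads mixtures evenly, so intermediate values trade off between the two.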

| SoftmaxComponent & operator= (const SoftmaxComponent &other) | private |

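| void Propagate (const ChunkInfo &in_info, const ChunkInfo &out_info, const CuMatrixBase< BaseFloat > &in, CuMatrixBase< BaseFloat > *out) const | virtual |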

Perform forward pass propagation Input->Output.

Each row of the input is one frame or training example. The input is interpreted as "num_chunks" equally sized chunks of frames; this only matters for layers that do things like context splicing. Typically num_chunks is either 1 (when processing a single contiguous chunk of data) or the same as in.NumFrames(), but other values are possible if some layers do splicing.

Implements Component.

Definition at line 900 of file nnet-component.cc.

References CuMatrixBase< Real >::ApplyFloor(), ChunkInfo::CheckSize(), KALDI_ASSERT, ChunkInfo::NumChunks(), and CuMatrixBase< Real >::SoftMaxPerRow().
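The references to `SoftMaxPerRow()` and `ApplyFloor()` suggest a forward pass that normalizes each row with a softmax and then floors the result so that no output is exactly zero. A minimal sketch of that idea follows; the `Matrix` alias, the function name, and the default floor value are assumptions for illustration and are not taken from the library.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical row-major stand-in for CuMatrixBase<BaseFloat>.
using Matrix = std::vector<std::vector<float>>;

// Applies a softmax to each row of `in`, then floors every output value.
// `floor_value` is an assumed parameter; the real floor is not stated here.
Matrix SoftmaxPerRowWithFloor(const Matrix &in, float floor_value = 1.0e-20f) {
  Matrix out(in.size());
  for (std::size_t r = 0; r < in.size(); ++r) {
    const std::vector<float> &row = in[r];
    assert(!row.empty());
    // Subtract the row maximum before exponentiating for numerical stability.
    const float max_val = *std::max_element(row.begin(), row.end());
    std::vector<float> &out_row = out[r];
    out_row.resize(row.size());
    float sum = 0.0f;
    for (std::size_t c = 0; c < row.size(); ++c) {
      out_row[c] = std::exp(row[c] - max_val);
      sum += out_row[c];
    }
    // Normalize, then floor so that later log operations stay finite.
    for (std::size_t c = 0; c < row.size(); ++c) {
      out_row[c] = std::max(out_row[c] / sum, floor_value);
    }
  }
  return out;
}
```

Each row is processed independently, which matches the statement above that each row is one frame or training example.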

| std::string Type () const | inline virtual |

Implements Component.

Definition at line 782 of file nnet-component.h.