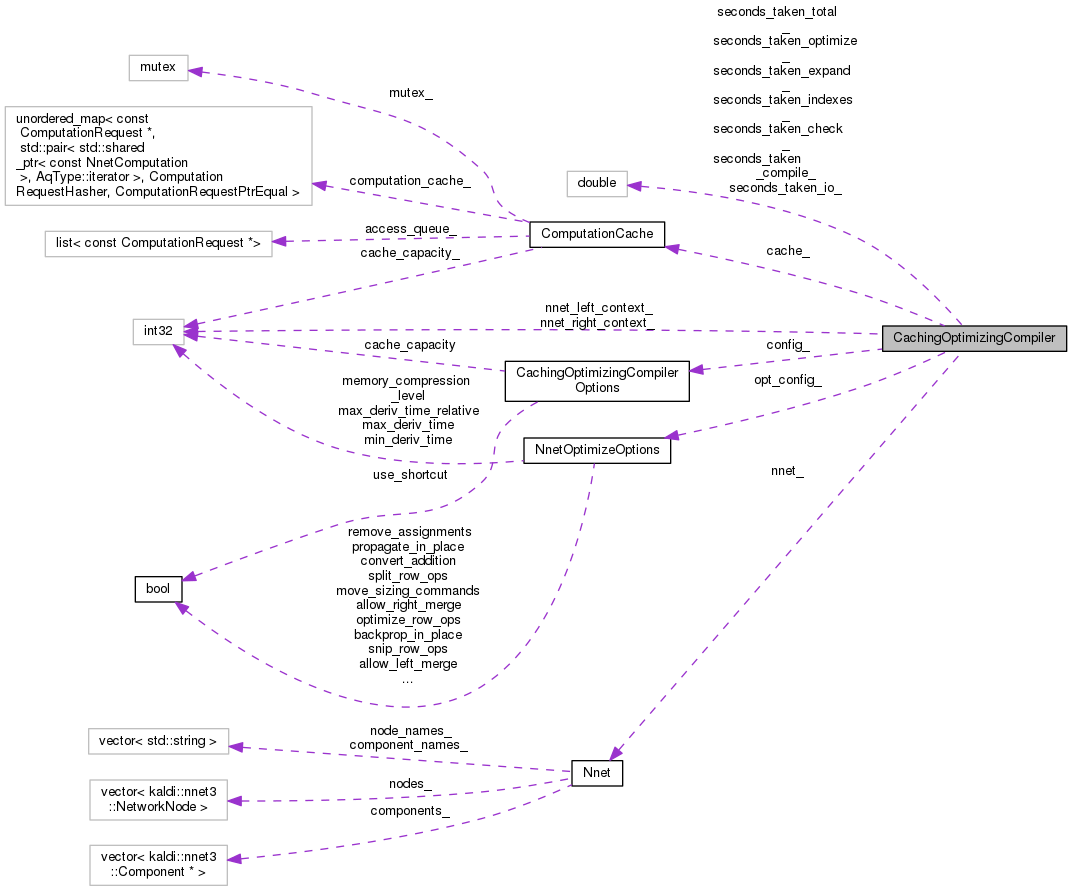

This class enables you to do the compilation and optimization in one call, and also ensures that if a ComputationRequest is identical to one it has already compiled (and still holds in its cache), the compilation process is not repeated.

#include <nnet-optimize.h>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | `CachingOptimizingCompiler(const Nnet &nnet, const CachingOptimizingCompilerOptions config = CachingOptimizingCompilerOptions())` | |
| | `CachingOptimizingCompiler(const Nnet &nnet, const NnetOptimizeOptions &opt_config, const CachingOptimizingCompilerOptions config = CachingOptimizingCompilerOptions())` | Note: nnet is retained as a const reference but opt_config is copied. |
| | `~CachingOptimizingCompiler()` | |
| `std::shared_ptr<const NnetComputation>` | `Compile(const ComputationRequest &request)` | Does the compilation and returns a shared pointer to the const result. |
| `void` | `ReadCache(std::istream &is, bool binary)` | |
| `void` | `WriteCache(std::ostream &os, bool binary)` | |
| `void` | `GetSimpleNnetContext(int32 *nnet_left_context, int32 *nnet_right_context)` | |

Private Member Functions

| Return type | Member |
|---|---|
| `std::shared_ptr<const NnetComputation>` | `CompileInternal(const ComputationRequest &request)` |
| `std::shared_ptr<const NnetComputation>` | `CompileAndCache(const ComputationRequest &request)` |
| `const NnetComputation *` | `CompileViaShortcut(const ComputationRequest &request)` |
| `const NnetComputation *` | `CompileNoShortcut(const ComputationRequest &request)` |

Private Attributes

| Type | Name |
|---|---|
| `const Nnet &` | `nnet_` |
| `CachingOptimizingCompilerOptions` | `config_` |
| `NnetOptimizeOptions` | `opt_config_` |
| `double` | `seconds_taken_total_` |
| `double` | `seconds_taken_compile_` |
| `double` | `seconds_taken_optimize_` |
| `double` | `seconds_taken_expand_` |
| `double` | `seconds_taken_check_` |
| `double` | `seconds_taken_indexes_` |
| `double` | `seconds_taken_io_` |
| `ComputationCache` | `cache_` |
| `int32` | `nnet_left_context_` |
| `int32` | `nnet_right_context_` |


It is safe to call Compile() from multiple parallel threads without additional synchronization; synchronization is managed internally by class ComputationCache.

Definition at line 219 of file nnet-optimize.h.
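The caching behavior described above can be illustrated with a small standalone sketch. It does not use Kaldi: `Request`, `Computation`, and `CachingCompilerSketch` are hypothetical stand-ins, and the locking strategy (guarding only the lookup and the insert) is an assumption chosen to match the statement that synchronization lives in the cache rather than around the whole compilation.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical stand-ins: a request is keyed by a string and a "computation"
// holds a string; the real ComputationRequest/NnetComputation are far richer.
using Request = std::string;
struct Computation {
  std::string program;
};

class CachingCompilerSketch {
 public:
  explicit CachingCompilerSketch(
      std::function<Computation(const Request &)> compile_fn)
      : compile_fn_(std::move(compile_fn)) {}

  // Returns the cached result for an identical request; otherwise compiles
  // once and caches it. The mutex guards only the lookup and the insert.
  std::shared_ptr<const Computation> Compile(const Request &request) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      auto it = cache_.find(request);
      if (it != cache_.end()) return it->second;  // cache hit
    }
    // Compile outside the lock so threads working on different requests do
    // not serialize; two threads may occasionally compile the same request.
    std::shared_ptr<const Computation> fresh =
        std::make_shared<Computation>(compile_fn_(request));
    std::lock_guard<std::mutex> lock(mutex_);
    // emplace() keeps an existing entry if another thread inserted first,
    // so all callers end up sharing the same cached object.
    auto inserted = cache_.emplace(request, fresh);
    return inserted.first->second;
  }

 private:
  std::function<Computation(const Request &)> compile_fn_;
  std::map<Request, std::shared_ptr<const Computation>> cache_;
  std::mutex mutex_;
};
```

Compiling outside the lock trades an occasional duplicate compilation for not blocking unrelated requests; whether Kaldi's ComputationCache makes the same trade-off should be checked in nnet-optimize.cc.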

`CachingOptimizingCompiler(const Nnet &nnet, const CachingOptimizingCompilerOptions config = CachingOptimizingCompilerOptions())`

Definition at line 635 of file nnet-optimize.cc.

`CachingOptimizingCompiler(const Nnet &nnet, const NnetOptimizeOptions &opt_config, const CachingOptimizingCompilerOptions config = CachingOptimizingCompilerOptions())`

Note: nnet is retained as a const reference, so the Nnet must outlive the compiler, but opt_config is copied.

Definition at line 645 of file nnet-optimize.cc.
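The storage policy in the note (reference to the network, copy of the options) has a practical consequence for callers. The sketch below mirrors it with hypothetical stand-ins `Model` and `OptimizeOptions`; it is an illustration of the ownership rule, not Kaldi code.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-ins for Nnet and NnetOptimizeOptions.
struct Model {
  std::string name;
};
struct OptimizeOptions {
  bool optimize = true;
};

// Mirrors the documented policy: the model is held by const reference (no
// copy; the caller must keep it alive), while the options are copied.
class CompilerStorageSketch {
 public:
  CompilerStorageSketch(const Model &model, const OptimizeOptions &opts)
      : model_(model), opts_(opts) {}
  const Model &model() const { return model_; }
  const OptimizeOptions &opts() const { return opts_; }

 private:
  const Model &model_;    // like nnet_: refers to the caller's object
  OptimizeOptions opts_;  // like opt_config_: a private copy
};
```

Changing the caller's options object after construction has no effect on the compiler, whereas the model is always read through the caller's object.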

`~CachingOptimizingCompiler()`

Definition at line 695 of file nnet-optimize.cc.

References KALDI_LOG, CachingOptimizingCompiler::seconds_taken_check_, CachingOptimizingCompiler::seconds_taken_compile_, CachingOptimizingCompiler::seconds_taken_expand_, CachingOptimizingCompiler::seconds_taken_indexes_, CachingOptimizingCompiler::seconds_taken_io_, CachingOptimizingCompiler::seconds_taken_optimize_, and CachingOptimizingCompiler::seconds_taken_total_.
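The destructor's references (KALDI_LOG and every seconds_taken_* member) suggest that it logs an accumulated timing breakdown. The sketch below shows that pattern in plain C++: a hypothetical `ScopedSeconds` adds the time spent in a scope to an accumulator, and a hypothetical `TimingStats` reports a subset of totals when destroyed. The message text and the "only report if nonzero" rule are assumptions of the sketch.

```cpp
#include <cassert>
#include <chrono>
#include <cstdio>
#include <string>

// Adds the wall-clock time spent in its scope to a caller-owned accumulator.
class ScopedSeconds {
 public:
  explicit ScopedSeconds(double *accumulator)
      : accumulator_(accumulator), start_(std::chrono::steady_clock::now()) {}
  ScopedSeconds(const ScopedSeconds &) = delete;
  ScopedSeconds &operator=(const ScopedSeconds &) = delete;
  ~ScopedSeconds() {
    std::chrono::duration<double> elapsed =
        std::chrono::steady_clock::now() - start_;
    *accumulator_ += elapsed.count();
  }

 private:
  double *accumulator_;
  std::chrono::steady_clock::time_point start_;
};

// Hypothetical subset of per-stage totals, reported once at destruction.
struct TimingStats {
  double total = 0.0, compile = 0.0, optimize = 0.0;

  std::string Summary() const {
    char buf[160];
    std::snprintf(buf, sizeof(buf),
                  "%.3f seconds in compilation total (%.3f compiling, "
                  "%.3f optimizing)",
                  total, compile, optimize);
    return buf;
  }
  ~TimingStats() {
    if (total > 0.0) std::fprintf(stderr, "%s\n", Summary().c_str());
  }
};
```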

`std::shared_ptr<const NnetComputation> Compile(const ComputationRequest &request)`

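Does the compilation and returns a shared pointer to the const result. Ownership is shared between this class's cache and the caller, so the computation remains valid for as long as the caller holds the pointer.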

It calls ComputeCudaIndexes() for you, because you wouldn't be able to do this on a const object.

Note: this used to return 'const NnetComputation*'. If you get a compilation failure, just replace 'const NnetComputation*' with 'std::shared_ptr<const NnetComputation>' in the calling code.

Definition at line 716 of file nnet-optimize.cc.

References CachingOptimizingCompiler::CompileInternal(), Timer::Elapsed(), and CachingOptimizingCompiler::seconds_taken_total_.

Referenced by NnetLdaStatsAccumulator::AccStats(), BatchedXvectorComputer::BatchedXvectorComputer(), NnetComputerFromEg::Compute(), NnetDiscriminativeComputeObjf::Compute(), NnetChainComputeProb::Compute(), NnetComputeProb::Compute(), DecodableNnetSimple::DoNnetComputation(), NnetBatchComputer::GetComputation(), kaldi::nnet3::RunNnetComputation(), NnetChainTrainer::Train(), NnetDiscriminativeTrainer::Train(), NnetTrainer::Train(), kaldi::nnet3::UnitTestNnetModelDerivatives(), and kaldi::nnet3::UnitTestNnetOptimizeWithOptions().
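Per the note on the return type, calling code that held a `const NnetComputation *` should now hold a `std::shared_ptr<const NnetComputation>`. The standalone sketch below shows why shared ownership matters for a cache: a hypothetical capacity-1 `TinyCache` evicts its entry on the next miss, yet a caller still holding the pointer keeps the object alive. `TinyCache` and `Computation` are illustrative stand-ins, not Kaldi types.

```cpp
#include <cassert>
#include <memory>
#include <string>

struct Computation {
  std::string program;  // hypothetical stand-in for NnetComputation
};

// Capacity-1 cache: a miss replaces the single stored entry. Because the
// cache hands out shared_ptr copies, eviction only drops the cache's own
// reference; a caller that still holds the pointer keeps the object alive.
class TinyCache {
 public:
  std::shared_ptr<const Computation> Get(const std::string &key) {
    if (value_ && key_ == key) return value_;
    key_ = key;
    value_ = std::make_shared<Computation>(Computation{"compiled:" + key});
    return value_;
  }

 private:
  std::string key_;
  std::shared_ptr<const Computation> value_;
};
```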

`std::shared_ptr<const NnetComputation> CompileAndCache(const ComputationRequest &request)` [private]

`std::shared_ptr<const NnetComputation> CompileInternal(const ComputationRequest &request)` [private]

Definition at line 724 of file nnet-optimize.cc.

References CachingOptimizingCompiler::cache_, CachingOptimizingCompiler::CompileNoShortcut(), CachingOptimizingCompiler::CompileViaShortcut(), CachingOptimizingCompiler::config_, ComputationCache::Find(), ComputationCache::Insert(), KALDI_ASSERT, and CachingOptimizingCompilerOptions::use_shortcut.

Referenced by CachingOptimizingCompiler::Compile(), and CachingOptimizingCompiler::CompileViaShortcut().
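CompileInternal()'s References list (ComputationCache::Find(), use_shortcut, CompileViaShortcut(), CompileNoShortcut(), KALDI_ASSERT, ComputationCache::Insert()) suggests a lookup, compile, insert flow. The sketch below reproduces that flow with hypothetical stand-ins; the rule deciding when the shortcut applies is invented for illustration, and the real shortcut path also recurses into CompileInternal() for a smaller request, which is omitted here.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

struct Computation {
  std::string program;  // hypothetical stand-in for NnetComputation
};

class CompileInternalSketch {
 public:
  explicit CompileInternalSketch(bool use_shortcut)
      : use_shortcut_(use_shortcut) {}

  std::shared_ptr<const Computation> CompileInternal(const std::string &request) {
    auto it = cache_.find(request);  // cf. ComputationCache::Find()
    if (it != cache_.end()) return it->second;
    const Computation *computation = nullptr;
    if (use_shortcut_)
      computation = CompileViaShortcut(request);  // may return nullptr
    if (computation == nullptr)
      computation = CompileNoShortcut(request);   // full compilation fallback
    assert(computation != nullptr);               // cf. KALDI_ASSERT
    std::shared_ptr<const Computation> owned(computation);  // take ownership
    cache_[request] = owned;  // cf. ComputationCache::Insert()
    return owned;
  }

  int shortcut_compiles = 0, full_compiles = 0;

 private:
  // Hypothetical rule: only requests starting with "regular" decompose.
  const Computation *CompileViaShortcut(const std::string &request) {
    if (request.rfind("regular", 0) != 0) return nullptr;
    ++shortcut_compiles;
    return new Computation{"shortcut:" + request};
  }
  const Computation *CompileNoShortcut(const std::string &request) {
    ++full_compiles;
    return new Computation{"full:" + request};
  }

  bool use_shortcut_;
  std::map<std::string, std::shared_ptr<const Computation>> cache_;
};
```

Returning a newly allocated raw pointer from the two helpers mirrors their documented `const NnetComputation *` return type; the caller immediately wraps it in a shared_ptr so the cache owns it.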

`const NnetComputation * CompileNoShortcut(const ComputationRequest &request)` [private]

Definition at line 741 of file nnet-optimize.cc.

References ComputationChecker::Check(), CheckComputationOptions::check_rewrite, NnetComputation::ComputeCudaIndexes(), Compiler::CreateComputation(), Timer::Elapsed(), kaldi::GetVerboseLevel(), KALDI_LOG, kaldi::nnet3::MaxOutputTimeInRequest(), CachingOptimizingCompiler::nnet_, kaldi::nnet2::NnetComputation(), CachingOptimizingCompiler::opt_config_, kaldi::nnet3::Optimize(), ComputationRequest::Print(), NnetComputation::Print(), CachingOptimizingCompiler::seconds_taken_check_, CachingOptimizingCompiler::seconds_taken_compile_, CachingOptimizingCompiler::seconds_taken_indexes_, and CachingOptimizingCompiler::seconds_taken_optimize_.

Referenced by CachingOptimizingCompiler::CompileInternal().
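CompileNoShortcut()'s references name several stages (Compiler::CreateComputation(), Optimize(), ComputationChecker::Check(), ComputeCudaIndexes()) and four timing members. The sketch below shows only the general shape of such a staged pipeline with a per-stage timer; the stage bodies are placeholders, and the stage order and the verbosity threshold that enables checking are assumptions, not a transcription of nnet-optimize.cc.

```cpp
#include <cassert>
#include <chrono>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for NnetComputation: `stages` records what ran.
struct Computation {
  std::vector<std::string> stages;
};

// Per-stage accumulators, in the spirit of seconds_taken_compile_ etc.
struct StageTimes {
  double compile = 0.0, optimize = 0.0, check = 0.0, indexes = 0.0;
};

static double SecondsSince(std::chrono::steady_clock::time_point start) {
  return std::chrono::duration<double>(std::chrono::steady_clock::now() - start)
      .count();
}

// Each stage is timed into its own accumulator.
std::unique_ptr<Computation> CompileNoShortcutSketch(int verbose_level,
                                                     StageTimes *times) {
  auto computation = std::make_unique<Computation>();
  auto start = std::chrono::steady_clock::now();
  computation->stages.push_back("create");    // cf. Compiler::CreateComputation()
  times->compile += SecondsSince(start);

  start = std::chrono::steady_clock::now();
  computation->stages.push_back("optimize");  // cf. nnet3::Optimize()
  times->optimize += SecondsSince(start);

  if (verbose_level >= 2) {                   // hypothetical threshold
    start = std::chrono::steady_clock::now();
    computation->stages.push_back("check");   // cf. ComputationChecker::Check()
    times->check += SecondsSince(start);
  }

  start = std::chrono::steady_clock::now();
  computation->stages.push_back("indexes");   // cf. ComputeCudaIndexes()
  times->indexes += SecondsSince(start);
  return computation;
}
```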

`const NnetComputation * CompileViaShortcut(const ComputationRequest &request)` [private]

Definition at line 808 of file nnet-optimize.cc.

References kaldi::nnet3::CheckComputation(), CachingOptimizingCompiler::CompileInternal(), NnetComputation::ComputeCudaIndexes(), Timer::Elapsed(), kaldi::nnet3::ExpandComputation(), kaldi::GetVerboseLevel(), ComputationRequest::misc_info, CachingOptimizingCompiler::nnet_, kaldi::nnet2::NnetComputation(), kaldi::nnet3::RequestIsDecomposable(), CachingOptimizingCompiler::seconds_taken_expand_, and CachingOptimizingCompiler::seconds_taken_indexes_.

Referenced by CachingOptimizingCompiler::CompileInternal().
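CompileViaShortcut()'s references (RequestIsDecomposable(), CompileInternal(), ExpandComputation()) suggest the shortcut idea: if a request has a regular structure over many sequences, compile a small representative request through the cache and expand the result. In the sketch below, the `Request` layout, the "all sequences equal and more than two of them" test, and the two-sequence mini request are all hypothetical simplifications of that idea.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <optional>
#include <vector>

// Hypothetical request: one entry per sequence, giving its frame count.
struct Request {
  std::vector<int> frames_per_n;
};
struct Computation {
  int num_n = 0;
  int frames = 0;
  bool expanded = false;
};

// Hypothetical decomposability test: more than two sequences, all of the
// same length, so a two-sequence "mini request" is representative.
std::optional<Request> Decompose(const Request &r) {
  if (r.frames_per_n.size() <= 2) return std::nullopt;
  for (int f : r.frames_per_n)
    if (f != r.frames_per_n[0]) return std::nullopt;
  return Request{{r.frames_per_n[0], r.frames_per_n[0]}};
}

// Returns nullptr when the shortcut does not apply, so the caller can fall
// back to full compilation.
std::unique_ptr<Computation> CompileViaShortcutSketch(
    const Request &request,
    const std::function<std::shared_ptr<const Computation>(const Request &)>
        &compile_cached) {
  std::optional<Request> mini = Decompose(request);
  if (!mini) return nullptr;
  // The mini request goes through the cache, like the call to CompileInternal().
  std::shared_ptr<const Computation> mini_computation = compile_cached(*mini);
  auto expanded = std::make_unique<Computation>(*mini_computation);
  expanded->num_n = static_cast<int>(request.frames_per_n.size());
  expanded->expanded = true;  // stands in for ExpandComputation()
  return expanded;
}
```

Because the mini request is compiled through the cache, many large requests that differ only in their number of sequences can share one small compiled computation.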

`void GetSimpleNnetContext(int32 *nnet_left_context, int32 *nnet_right_context)`

Definition at line 656 of file nnet-optimize.cc.

References kaldi::nnet3::ComputeSimpleNnetContext(), CachingOptimizingCompiler::nnet_, CachingOptimizingCompiler::nnet_left_context_, and CachingOptimizingCompiler::nnet_right_context_.

Referenced by DecodableNnetSimple::DecodableNnetSimple().
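Since GetSimpleNnetContext() references both ComputeSimpleNnetContext() and the nnet_left_context_/nnet_right_context_ members, it plausibly computes the context once and memoizes it. The sketch below shows lazy memoization with a `-1` "not yet computed" sentinel; the sentinel value and the class `ContextCacheSketch` are assumptions for illustration.

```cpp
#include <cassert>
#include <utility>

// Lazily computes and memoizes a (left, right) context pair.
class ContextCacheSketch {
 public:
  explicit ContextCacheSketch(std::pair<int, int> (*compute)())
      : compute_(compute) {}

  void GetSimpleNnetContext(int *left, int *right) {
    if (left_ == -1) {  // -1 means "not computed yet" (assumed sentinel)
      std::pair<int, int> context = compute_();  // cf. ComputeSimpleNnetContext()
      left_ = context.first;
      right_ = context.second;
      ++num_computations;
    }
    *left = left_;
    *right = right_;
  }

  int num_computations = 0;

 private:
  std::pair<int, int> (*compute_)();
  int left_ = -1, right_ = -1;
};
```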

`void ReadCache(std::istream &is, bool binary)`

Definition at line 666 of file nnet-optimize.cc.

References CachingOptimizingCompiler::cache_, ComputationCache::Check(), Timer::Elapsed(), kaldi::GetVerboseLevel(), CachingOptimizingCompiler::nnet_, CachingOptimizingCompiler::opt_config_, NnetOptimizeOptions::Read(), ComputationCache::Read(), CachingOptimizingCompiler::seconds_taken_check_, CachingOptimizingCompiler::seconds_taken_io_, and CachingOptimizingCompiler::seconds_taken_total_.

Referenced by main(), NnetChainTrainer::NnetChainTrainer(), NnetDiscriminativeTrainer::NnetDiscriminativeTrainer(), and NnetTrainer::NnetTrainer().

`void WriteCache(std::ostream &os, bool binary)`

Definition at line 688 of file nnet-optimize.cc.

References CachingOptimizingCompiler::cache_, Timer::Elapsed(), CachingOptimizingCompiler::opt_config_, CachingOptimizingCompiler::seconds_taken_io_, NnetOptimizeOptions::Write(), and ComputationCache::Write().

Referenced by main(), NnetChainTrainer::~NnetChainTrainer(), NnetDiscriminativeTrainer::~NnetDiscriminativeTrainer(), and NnetTrainer::~NnetTrainer().
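The Referenced-by lists show trainers calling ReadCache() in their constructors and WriteCache() in their destructors, and both functions reference NnetOptimizeOptions::Read()/Write(). That suggests the options are stored ahead of the cached computations so stale entries can be detected. The sketch below shows that idea with hypothetical `Options` and `PersistentCacheSketch` types, a text-only format (no `binary` flag), and whitespace-free tokens; skipping the cache on an options mismatch is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <map>
#include <ostream>
#include <sstream>
#include <string>

// Hypothetical stand-in for NnetOptimizeOptions.
struct Options {
  bool optimize = true;
  bool operator==(const Options &o) const { return optimize == o.optimize; }
};

class PersistentCacheSketch {
 public:
  explicit PersistentCacheSketch(Options opts) : opts_(opts) {}

  void Insert(const std::string &request, const std::string &computation) {
    cache_[request] = computation;
  }
  std::size_t Size() const { return cache_.size(); }

  // Writes the options first, then the entries (tokens must not contain spaces).
  void WriteCache(std::ostream &os) const {
    os << opts_.optimize << ' ' << cache_.size() << '\n';
    for (const auto &kv : cache_) os << kv.first << ' ' << kv.second << '\n';
  }

  // Reads the options first; if they differ from ours, the cached entries
  // are ignored because they were produced under different settings.
  void ReadCache(std::istream &is) {
    Options cached;
    std::size_t n = 0;
    if (!(is >> cached.optimize >> n)) return;
    if (!(cached == opts_)) return;
    for (std::size_t i = 0; i < n; ++i) {
      std::string request, computation;
      if (!(is >> request >> computation)) return;
      cache_[request] = computation;
    }
  }

 private:
  Options opts_;
  std::map<std::string, std::string> cache_;
};
```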

`ComputationCache cache_` [private]

Definition at line 302 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileInternal(), CachingOptimizingCompiler::ReadCache(), and CachingOptimizingCompiler::WriteCache().

`CachingOptimizingCompilerOptions config_` [private]

Definition at line 289 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileInternal().

`const Nnet & nnet_` [private]

Definition at line 288 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileNoShortcut(), CachingOptimizingCompiler::CompileViaShortcut(), CachingOptimizingCompiler::GetSimpleNnetContext(), and CachingOptimizingCompiler::ReadCache().

`int32 nnet_left_context_` [private]

Definition at line 305 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::GetSimpleNnetContext().

`int32 nnet_right_context_` [private]

Definition at line 306 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::GetSimpleNnetContext().

`NnetOptimizeOptions opt_config_` [private]

Definition at line 290 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileNoShortcut(), CachingOptimizingCompiler::ReadCache(), and CachingOptimizingCompiler::WriteCache().

`double seconds_taken_check_` [private]

Definition at line 298 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileNoShortcut(), CachingOptimizingCompiler::ReadCache(), and CachingOptimizingCompiler::~CachingOptimizingCompiler().

`double seconds_taken_compile_` [private]

Definition at line 295 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileNoShortcut(), and CachingOptimizingCompiler::~CachingOptimizingCompiler().

`double seconds_taken_expand_` [private]

Definition at line 297 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileViaShortcut(), and CachingOptimizingCompiler::~CachingOptimizingCompiler().

`double seconds_taken_indexes_` [private]

Definition at line 299 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileNoShortcut(), CachingOptimizingCompiler::CompileViaShortcut(), and CachingOptimizingCompiler::~CachingOptimizingCompiler().

`double seconds_taken_io_` [private]

Definition at line 300 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::ReadCache(), CachingOptimizingCompiler::WriteCache(), and CachingOptimizingCompiler::~CachingOptimizingCompiler().

`double seconds_taken_optimize_` [private]

Definition at line 296 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::CompileNoShortcut(), and CachingOptimizingCompiler::~CachingOptimizingCompiler().

`double seconds_taken_total_` [private]

Definition at line 294 of file nnet-optimize.h.

Referenced by CachingOptimizingCompiler::Compile(), CachingOptimizingCompiler::ReadCache(), and CachingOptimizingCompiler::~CachingOptimizingCompiler().