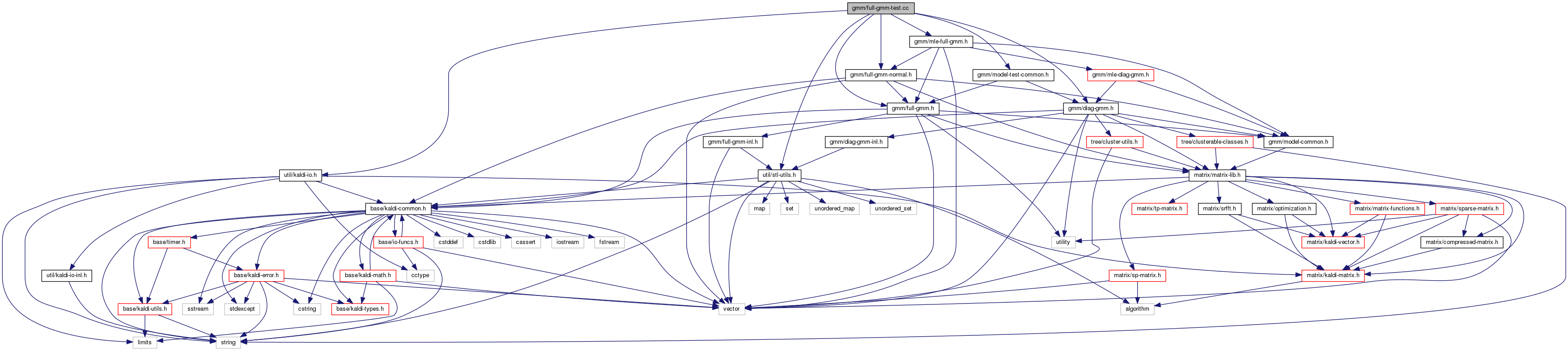

`#include "gmm/full-gmm.h"`
`#include "gmm/diag-gmm.h"`
`#include "gmm/model-test-common.h"`
`#include "util/stl-utils.h"`
`#include "util/kaldi-io.h"`
`#include "gmm/full-gmm-normal.h"`
`#include "gmm/mle-full-gmm.h"`


Functions

| Return type | Function |
|---|---|
| void | `RandPosdefSpMatrix(size_t dim, SpMatrix<BaseFloat> *matrix, TpMatrix<BaseFloat> *matrix_sqrt = NULL, BaseFloat *logdet = NULL)` |
| void | `init_rand_diag_gmm(DiagGmm *gmm)` |
| void | `UnitTestFullGmmEst()` |
| void | `UnitTestFullGmm()` |
| int | `main()` |

`void init_rand_diag_gmm(DiagGmm *gmm)`

Definition at line 54 of file full-gmm-test.cc.

References DiagGmm::ComputeGconsts(), rnnlm::d, DiagGmm::Dim(), kaldi::Exp(), MatrixBase< Real >::InvertElements(), DiagGmm::NumGauss(), DiagGmm::Perturb(), kaldi::RandGauss(), kaldi::RandUniform(), DiagGmm::SetInvVarsAndMeans(), and DiagGmm::SetWeights().

Referenced by UnitTestFullGmm().
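The references above suggest that `init_rand_diag_gmm()` fills a `DiagGmm` with random weights, means and variances (the call to `kaldi::Exp()` hints that variances are exponentiated Gaussian draws, which keeps them positive) and then calls `ComputeGconsts()`. The following is a hedged, standalone sketch in plain C++ of that idea and of what a per-component constant ("gconst") usually contains. It does not use Kaldi's `DiagGmm` API; `SimpleDiagGmm`, `InitRandDiagGmmSketch`, `ComputeGconstsSketch` and `ComponentLogLike` are hypothetical names, and the exact terms Kaldi caches may be arranged differently.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical stand-in for a diagonal-covariance GMM (not Kaldi's DiagGmm).
struct SimpleDiagGmm {
  std::vector<double> weights;             // mixture weights, sum to 1
  std::vector<std::vector<double>> means;  // [num_gauss][dim]
  std::vector<std::vector<double>> vars;   // [num_gauss][dim], all > 0
  std::vector<double> gconsts;             // data-independent part of each log-likelihood
};

// gconst_m = log w_m - 0.5 * (D log(2 pi) + sum_d log var_md + sum_d mean_md^2 / var_md)
void ComputeGconstsSketch(SimpleDiagGmm *gmm) {
  const double log_2pi = std::log(2.0 * std::acos(-1.0));
  gmm->gconsts.assign(gmm->weights.size(), 0.0);
  for (std::size_t m = 0; m < gmm->weights.size(); ++m) {
    const std::size_t dim = gmm->means[m].size();
    double gc = std::log(gmm->weights[m]) - 0.5 * static_cast<double>(dim) * log_2pi;
    for (std::size_t d = 0; d < dim; ++d) {
      const double mu = gmm->means[m][d], var = gmm->vars[m][d];
      gc -= 0.5 * (std::log(var) + mu * mu / var);
    }
    gmm->gconsts[m] = gc;
  }
}

// Random weights (kept away from zero), Gaussian means, and exp(Gaussian) variances.
void InitRandDiagGmmSketch(std::size_t num_gauss, std::size_t dim, std::mt19937 *rng,
                           SimpleDiagGmm *gmm) {
  std::uniform_real_distribution<double> unif(0.0, 1.0);
  std::normal_distribution<double> gauss(0.0, 1.0);
  gmm->weights.assign(num_gauss, 0.0);
  gmm->means.assign(num_gauss, std::vector<double>(dim));
  gmm->vars.assign(num_gauss, std::vector<double>(dim));
  double total = 0.0;
  for (std::size_t m = 0; m < num_gauss; ++m) {
    gmm->weights[m] = 0.1 + unif(*rng);
    total += gmm->weights[m];
    for (std::size_t d = 0; d < dim; ++d) {
      gmm->means[m][d] = gauss(*rng);
      gmm->vars[m][d] = std::exp(gauss(*rng));  // exp() keeps every variance positive
    }
  }
  for (double &w : gmm->weights) w /= total;  // normalize so the weights sum to 1
  ComputeGconstsSketch(gmm);
}

// Weighted log-likelihood log(w_m * N(x; mean_m, diag(var_m))) using the cached gconst.
double ComponentLogLike(const SimpleDiagGmm &gmm, std::size_t m, const std::vector<double> &x) {
  double ll = gmm.gconsts[m];
  for (std::size_t d = 0; d < x.size(); ++d) {
    const double inv_var = 1.0 / gmm.vars[m][d];
    ll += x[d] * gmm.means[m][d] * inv_var - 0.5 * x[d] * x[d] * inv_var;
  }
  return ll;
}
```

Caching the gconst means only the two data-dependent terms have to be evaluated per input vector.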

`int main()`

Definition at line 333 of file full-gmm-test.cc.

References rnnlm::i, UnitTestFullGmm(), and UnitTestFullGmmEst().

`void RandPosdefSpMatrix(size_t dim, SpMatrix<BaseFloat> *matrix, TpMatrix<BaseFloat> *matrix_sqrt = NULL, BaseFloat *logdet = NULL)`

Definition at line 31 of file full-gmm-test.cc.

References SpMatrix< Real >::AddMat2(), TpMatrix< Real >::Cholesky(), MatrixBase< Real >::Cond(), kaldi::kNoTrans, SpMatrix< Real >::LogPosDefDet(), and MatrixBase< Real >::SetRandn().
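Judging from the references (`SetRandn()`, `AddMat2()`, `Cholesky()`, `LogPosDefDet()`), `RandPosdefSpMatrix()` appears to build a random symmetric positive-definite matrix as a random Gaussian matrix times its own transpose, optionally returning its Cholesky factor and log-determinant. Below is a minimal standalone sketch of that construction, assuming a dense `std::vector` representation instead of Kaldi's `SpMatrix`/`TpMatrix`. `RandPosdefSketch`, `CholeskySketch` and `LogDetFromCholesky` are hypothetical helpers, and the conditioning check that the reference to `Cond()` hints at is omitted.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Mat = std::vector<std::vector<double>>;  // dense square matrix, row-major

// Returns A = M * M^T for a dim x dim matrix M with i.i.d. N(0,1) entries.
// A is symmetric and, with probability 1, positive definite.
Mat RandPosdefSketch(std::size_t dim, std::mt19937 *rng) {
  std::normal_distribution<double> gauss(0.0, 1.0);
  Mat m(dim, std::vector<double>(dim));
  for (auto &row : m)
    for (double &v : row) v = gauss(*rng);
  Mat a(dim, std::vector<double>(dim, 0.0));
  for (std::size_t i = 0; i < dim; ++i)
    for (std::size_t j = 0; j < dim; ++j)
      for (std::size_t k = 0; k < dim; ++k) a[i][j] += m[i][k] * m[j][k];
  return a;
}

// Cholesky factorization A = L * L^T with L lower-triangular.
// Returns false if A is not numerically positive definite.
bool CholeskySketch(const Mat &a, Mat *l) {
  const std::size_t n = a.size();
  l->assign(n, std::vector<double>(n, 0.0));
  for (std::size_t j = 0; j < n; ++j) {
    double diag = a[j][j];
    for (std::size_t k = 0; k < j; ++k) diag -= (*l)[j][k] * (*l)[j][k];
    if (diag <= 0.0) return false;  // non-positive pivot: not positive definite
    (*l)[j][j] = std::sqrt(diag);
    for (std::size_t i = j + 1; i < n; ++i) {
      double s = a[i][j];
      for (std::size_t k = 0; k < j; ++k) s -= (*l)[i][k] * (*l)[j][k];
      (*l)[i][j] = s / (*l)[j][j];
    }
  }
  return true;
}

// log det(A) = 2 * sum_i log L_ii when A = L * L^T.
double LogDetFromCholesky(const Mat &l) {
  double logdet = 0.0;
  for (std::size_t i = 0; i < l.size(); ++i) logdet += 2.0 * std::log(l[i][i]);
  return logdet;
}
```

Taking the log-determinant from the Cholesky diagonal avoids forming the determinant itself, which can overflow or underflow for larger dimensions.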

`void UnitTestFullGmm()`

Definition at line 108 of file full-gmm-test.cc.

References kaldi::AssertEqual(), FullGmm::ComponentPosteriors(), FullGmm::ComputeGconsts(), FullGmm::CopyFromDiagGmm(), FullGmm::CopyFromFullGmm(), DiagGmm::CopyFromFullGmm(), MatrixBase< Real >::CopyFromMat(), VectorBase< Real >::CopyFromVec(), rnnlm::d, FullGmm::Dim(), FullGmm::GetComponentMean(), FullGmm::GetCovars(), FullGmm::GetCovarsAndMeans(), FullGmm::GetMeans(), rnnlm::i, init_rand_diag_gmm(), KALDI_ASSERT, kaldi::Log(), FullGmm::LogLikelihood(), DiagGmm::LogLikelihood(), FullGmm::LogLikelihoods(), FullGmm::LogLikelihoodsPreselect(), VectorBase< Real >::LogSumExp(), M_LOG_2PI, FullGmm::Merge(), FullGmm::NumGauss(), kaldi::RandGauss(), kaldi::RandInt(), kaldi::RandPosdefSpMatrix(), kaldi::RandUniform(), MatrixBase< Real >::Range(), FullGmm::Read(), FullGmm::Resize(), DiagGmm::Resize(), MatrixBase< Real >::Row(), FullGmm::SetInvCovars(), FullGmm::SetInvCovarsAndMeans(), FullGmm::SetMeans(), FullGmm::SetWeights(), MatrixBase< Real >::SetZero(), FullGmm::Split(), Input::Stream(), VectorBase< Real >::Sum(), kaldi::VecSpVec(), FullGmm::weights(), and FullGmm::Write().

Referenced by main().
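Several references (`VecSpVec()`, `M_LOG_2PI`, `LogSumExp()`, `ComponentPosteriors()`, and the `CopyFromDiagGmm()`/`DiagGmm::LogLikelihood()` pair) suggest that this test recomputes full-covariance log-likelihoods by hand and compares them with the library's results. The sketch below shows that computation under stated assumptions: plain `std::vector` matrices, a Cholesky-based quadratic form instead of an explicit inverse, and the hypothetical names `GaussLogLike` and `MixtureLogLike`. It is an illustration, not Kaldi's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

// Lower-triangular L with A = L * L^T; A is assumed positive definite.
Mat Cholesky(const Mat &a) {
  const std::size_t n = a.size();
  Mat l(n, Vec(n, 0.0));
  for (std::size_t j = 0; j < n; ++j) {
    double diag = a[j][j];
    for (std::size_t k = 0; k < j; ++k) diag -= l[j][k] * l[j][k];
    l[j][j] = std::sqrt(diag);
    for (std::size_t i = j + 1; i < n; ++i) {
      double s = a[i][j];
      for (std::size_t k = 0; k < j; ++k) s -= l[i][k] * l[j][k];
      l[i][j] = s / l[j][j];
    }
  }
  return l;
}

// log N(x; mean, covar) = -0.5 * (D log(2 pi) + log det(covar) + (x-mean)^T covar^{-1} (x-mean))
double GaussLogLike(const Vec &x, const Vec &mean, const Mat &covar) {
  const std::size_t n = x.size();
  const Mat l = Cholesky(covar);
  // Forward substitution solves L z = x - mean, so z^T z equals the quadratic form.
  Vec z(n);
  double logdet = 0.0, quad = 0.0;
  for (std::size_t i = 0; i < n; ++i) {
    double s = x[i] - mean[i];
    for (std::size_t k = 0; k < i; ++k) s -= l[i][k] * z[k];
    z[i] = s / l[i][i];
    quad += z[i] * z[i];
    logdet += 2.0 * std::log(l[i][i]);
  }
  const double log_2pi = std::log(2.0 * std::acos(-1.0));
  return -0.5 * (static_cast<double>(n) * log_2pi + logdet + quad);
}

// Mixture log-likelihood via log-sum-exp; also fills per-component posteriors.
double MixtureLogLike(const Vec &x, const Vec &weights, const std::vector<Vec> &means,
                      const std::vector<Mat> &covars, Vec *posteriors) {
  const std::size_t m = weights.size();
  Vec ll(m);
  for (std::size_t i = 0; i < m; ++i)
    ll[i] = std::log(weights[i]) + GaussLogLike(x, means[i], covars[i]);
  const double max_ll = *std::max_element(ll.begin(), ll.end());
  double sum = 0.0;
  for (double v : ll) sum += std::exp(v - max_ll);  // shift by the max to avoid underflow
  const double total = max_ll + std::log(sum);
  posteriors->resize(m);
  for (std::size_t i = 0; i < m; ++i) (*posteriors)[i] = std::exp(ll[i] - total);
  return total;
}
```

Subtracting the maximum before exponentiating is the usual log-sum-exp trick; without it, `std::exp()` of large negative log-likelihoods can underflow to zero and make the posteriors undefined.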

`void UnitTestFullGmmEst()`

Definition at line 81 of file full-gmm-test.cc.

References AccumFullGmm::AccumulateFromFull(), count, kaldi::unittest::InitRandFullGmm(), KALDI_ASSERT, KALDI_LOG, kaldi::kGmmAll, kaldi::MleFullGmmUpdate(), kaldi::Rand(), FullGmmNormal::Rand(), and MatrixBase< Real >::Row().

Referenced by main().
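The references to `InitRandFullGmm()`, `FullGmmNormal::Rand()`, `AccumulateFromFull()` and `MleFullGmmUpdate()` suggest that this test samples data from a random full-covariance GMM, accumulates statistics, and re-estimates the model. As a hedged illustration of the standard maximum-likelihood update from sufficient statistics (for a single Gaussian with given posteriors gamma), here is a standalone sketch. `FullGaussStats`, `MleUpdateSketch` and `SampleFullGauss` are hypothetical, and any flooring or option handling in Kaldi's update is not modeled.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

// Hypothetical accumulator for one full-covariance Gaussian:
// occupancy plus first- and second-order statistics.
struct FullGaussStats {
  double occ = 0.0;  // sum_t gamma_t
  Vec x_sum;         // sum_t gamma_t x_t
  Mat xx_sum;        // sum_t gamma_t x_t x_t^T
  explicit FullGaussStats(std::size_t dim) : x_sum(dim, 0.0), xx_sum(dim, Vec(dim, 0.0)) {}
  void Accumulate(const Vec &x, double gamma) {
    occ += gamma;
    for (std::size_t i = 0; i < x.size(); ++i) {
      x_sum[i] += gamma * x[i];
      for (std::size_t j = 0; j < x.size(); ++j) xx_sum[i][j] += gamma * x[i] * x[j];
    }
  }
};

// ML update: mean = x_sum / occ, covar = xx_sum / occ - mean * mean^T.
void MleUpdateSketch(const FullGaussStats &s, Vec *mean, Mat *covar) {
  const std::size_t n = s.x_sum.size();
  mean->assign(n, 0.0);
  covar->assign(n, Vec(n, 0.0));
  for (std::size_t i = 0; i < n; ++i) (*mean)[i] = s.x_sum[i] / s.occ;
  for (std::size_t i = 0; i < n; ++i)
    for (std::size_t j = 0; j < n; ++j)
      (*covar)[i][j] = s.xx_sum[i][j] / s.occ - (*mean)[i] * (*mean)[j];
}

// Draws x = mean + L * eps with eps ~ N(0, I), so Cov(x) = L * L^T.
Vec SampleFullGauss(const Vec &mean, const Mat &chol_lower, std::mt19937 *rng) {
  std::normal_distribution<double> gauss(0.0, 1.0);
  const std::size_t n = mean.size();
  Vec eps(n), x(mean);
  for (double &e : eps) e = gauss(*rng);
  for (std::size_t i = 0; i < n; ++i)
    for (std::size_t k = 0; k <= i; ++k) x[i] += chol_lower[i][k] * eps[k];
  return x;
}
```

With enough samples, the re-estimated mean and covariance should approach the generating parameters, which is the kind of consistency a round-trip estimation test can check.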