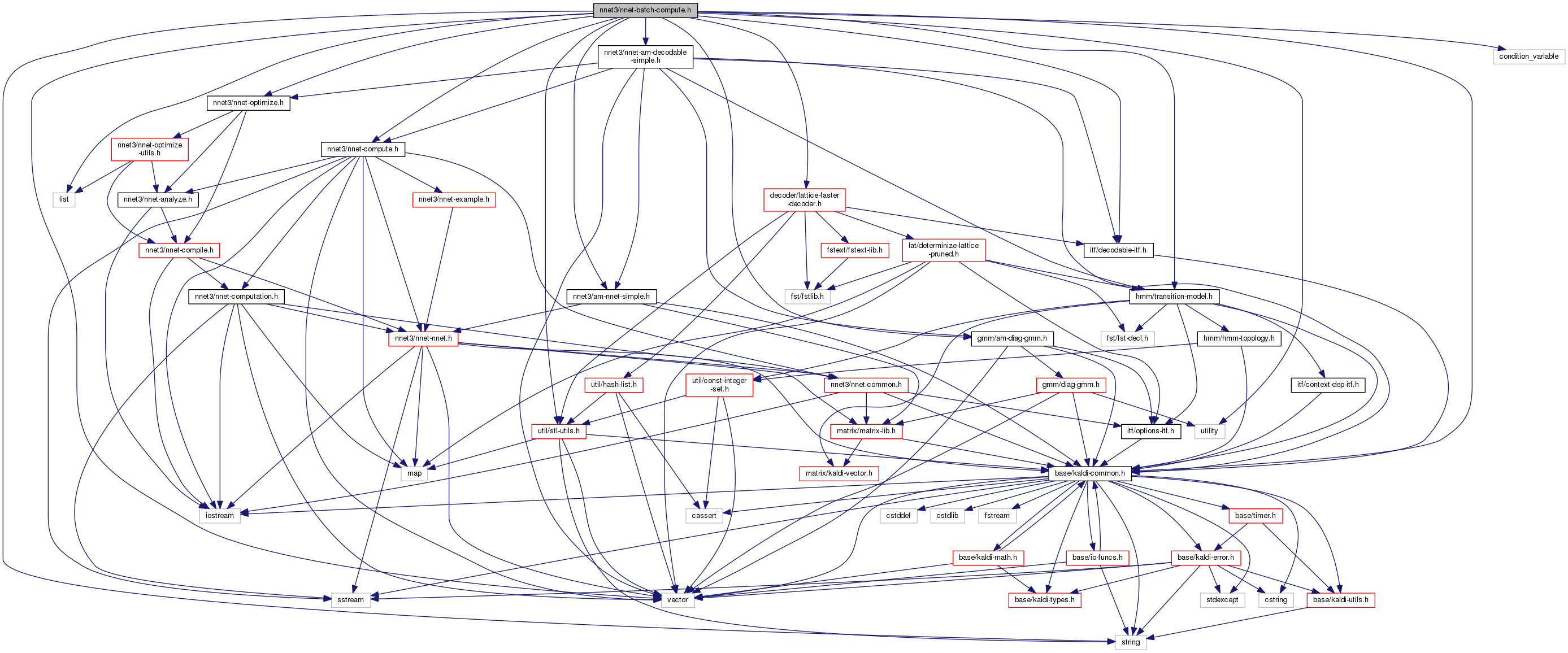

`#include <vector>`
`#include <string>`
`#include <list>`
`#include <utility>`
`#include <condition_variable>`
`#include "base/kaldi-common.h"`
`#include "gmm/am-diag-gmm.h"`
`#include "hmm/transition-model.h"`
`#include "itf/decodable-itf.h"`
`#include "nnet3/nnet-optimize.h"`
`#include "nnet3/nnet-compute.h"`
`#include "nnet3/am-nnet-simple.h"`
`#include "nnet3/nnet-am-decodable-simple.h"`
`#include "decoder/lattice-faster-decoder.h"`
`#include "util/stl-utils.h"`

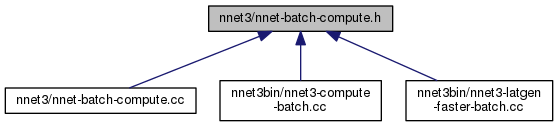


Classes

| Kind | Name | Description |
|---|---|---|
| struct | NnetInferenceTask | class NnetInferenceTask represents a chunk of an utterance that is requested to be computed. |
| struct | NnetBatchComputerOptions | |
| class | NnetBatchComputer | This class does neural net inference in a way that is optimized for GPU use: it combines chunks of multiple utterances into minibatches for more efficient computation. |
| struct | NnetBatchComputer::MinibatchSizeInfo | |
| struct | NnetBatchComputer::ComputationGroupInfo | |
| struct | NnetBatchComputer::ComputationGroupKey | |
| struct | NnetBatchComputer::ComputationGroupKeyHasher | |
| class | NnetBatchInference | This class implements a simplified interface to class NnetBatchComputer, which is suitable for programs like 'nnet3-compute' where you want to support fast GPU-based inference on a sequence of utterances, and get them back from the object in the same order. |
| struct | NnetBatchInference::UtteranceInfo | |
| class | NnetBatchDecoder | Decoder object that uses multiple CPU threads for the graph search, plus a GPU for the neural net inference (that's done by a separate NnetBatchComputer object). |
| struct | NnetBatchDecoder::UtteranceInput | |
| struct | NnetBatchDecoder::UtteranceOutput | |
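To make the minibatching idea behind NnetBatchComputer more concrete, here is a minimal, self-contained C++ sketch. It is not Kaldi code: `Chunk`, `SplitUtterance`, and `MakeMinibatches` are hypothetical names, and the sketch assumes fixed-size chunks with no left/right context, filling each minibatch simply in arrival order. The real class also has to group together only tasks that can share a compiled computation (cf. the ComputationGroupKey struct listed above) and manage GPU memory; none of that is modeled here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a chunk of an utterance (NOT Kaldi's NnetInferenceTask).
struct Chunk {
  int utterance_id;  // which utterance this chunk came from
  int first_frame;   // index of the chunk's first input frame
  int num_frames;    // number of frames in the chunk
};

// Hypothetical helper: splits an utterance of num_frames frames into
// consecutive chunks of at most chunk_size frames (no context, no overlap).
std::vector<Chunk> SplitUtterance(int utterance_id, int num_frames, int chunk_size) {
  std::vector<Chunk> out;
  for (int start = 0; start < num_frames; start += chunk_size) {
    int len = std::min(chunk_size, num_frames - start);
    out.push_back({utterance_id, start, len});
  }
  return out;
}

// Hypothetical helper: packs chunks, possibly from different utterances,
// into minibatches of at most max_minibatch_size chunks, preserving order.
std::vector<std::vector<Chunk>> MakeMinibatches(const std::vector<Chunk> &chunks,
                                                std::size_t max_minibatch_size) {
  assert(max_minibatch_size > 0);
  std::vector<std::vector<Chunk>> minibatches;
  for (const Chunk &c : chunks) {
    // Start a new minibatch when there is none yet or the current one is full.
    if (minibatches.empty() || minibatches.back().size() == max_minibatch_size)
      minibatches.emplace_back();
    minibatches.back().push_back(c);
  }
  return minibatches;
}
```

With two utterances of 25 and 10 frames and `chunk_size = 10`, the four resulting chunks form two minibatches of size 2, and the second one mixes chunks from both utterances. That mixing is what keeps a GPU busy even when individual utterances are short.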

Namespaces

| Namespace | Description |
|---|---|
| kaldi | This code computes Goodness of Pronunciation (GOP) and extracts phone-level pronunciation features for mispronunciation detection tasks; the reference: |
| kaldi::nnet3 | |

Functions

| Return type | Function | Description |
|---|---|---|
| void | `MergeTaskOutput(const std::vector<NnetInferenceTask> &tasks, Matrix<BaseFloat> *output)` | Merges together the 'output_cpu' (if the 'output_to_cpu' members are true) or the 'output' members of 'tasks' into a single CPU matrix 'output'. |
| void | `MergeTaskOutput(const std::vector<NnetInferenceTask> &tasks, CuMatrix<BaseFloat> *output)` | |
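The MergeTaskOutput() overloads reassemble one utterance's output from the outputs of its chunk tasks. The sketch below illustrates that idea on plain nested vectors instead of Kaldi's Matrix/CuMatrix. Everything in it is hypothetical: `TaskOutput`, `MergeOutputs`, and the fields `num_initial_unused` and `num_used` are illustrative assumptions standing in for whatever bookkeeping the real NnetInferenceTask keeps about which output frames of each chunk should be kept.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, simplified task output (NOT Kaldi's NnetInferenceTask).
struct TaskOutput {
  std::vector<std::vector<float>> rows;  // one row per output frame of the chunk
  std::size_t num_initial_unused = 0;    // assumed: leading rows to discard (e.g. overlap)
  std::size_t num_used = 0;              // assumed: rows to keep after the discarded ones
};

// Hypothetical helper: concatenates the used rows of each task, in task
// order, into a single matrix for the whole utterance.
std::vector<std::vector<float>> MergeOutputs(const std::vector<TaskOutput> &tasks) {
  std::vector<std::vector<float>> merged;
  for (const TaskOutput &t : tasks) {
    // The requested row range must lie inside this task's output.
    assert(t.num_initial_unused + t.num_used <= t.rows.size());
    auto begin = t.rows.begin() + static_cast<std::ptrdiff_t>(t.num_initial_unused);
    auto end = begin + static_cast<std::ptrdiff_t>(t.num_used);
    merged.insert(merged.end(), begin, end);
  }
  return merged;
}
```

For example, if the second chunk's first output row duplicates a frame already covered by the first chunk, marking it as unused makes the merged matrix contain each frame exactly once.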