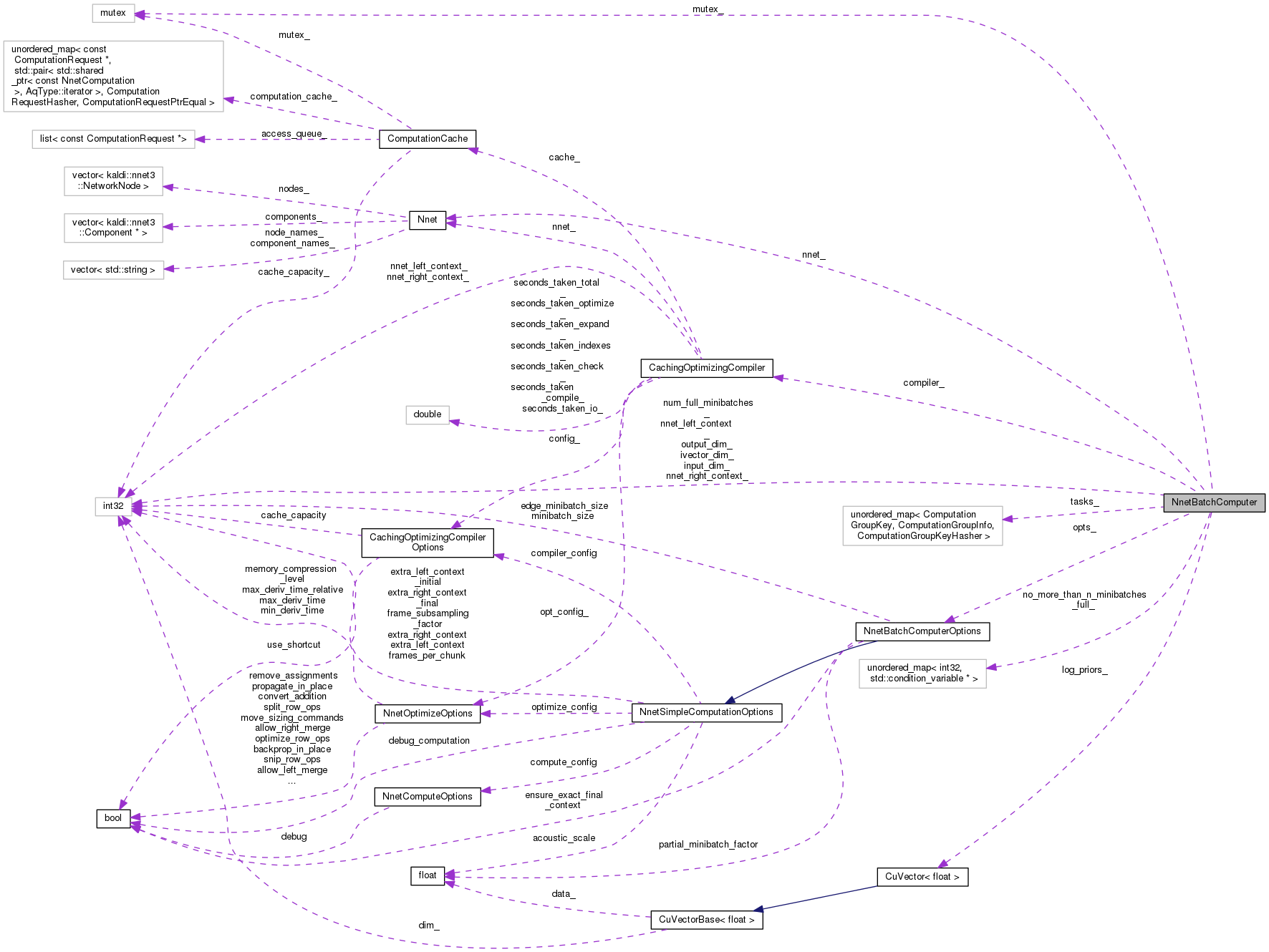

This class does neural net inference in a way that is optimized for GPU use: it combines chunks of multiple utterances into minibatches for more efficient computation. More...

#include <nnet-batch-compute.h>

Classes | |

| struct | ComputationGroupInfo |

| struct | ComputationGroupKey |

| struct | ComputationGroupKeyHasher |

| struct | MinibatchSizeInfo |

Public Member Functions | |

| NnetBatchComputer (const NnetBatchComputerOptions &opts, const Nnet &nnet, const VectorBase< BaseFloat > &priors) | |

| Constructor. More... | |

| void | AcceptTask (NnetInferenceTask *task, int32 max_minibatches_full=-1) |

| Accepts a task, meaning the task will be queued. More... | |

| int32 | NumFullPendingMinibatches () const |

| Returns the number of full minibatches waiting to be computed. More... | |

| bool | Compute (bool allow_partial_minibatch) |

| Does some kind of computation, choosing the highest-priority thing to compute. More... | |

| void | SplitUtteranceIntoTasks (bool output_to_cpu, const Matrix< BaseFloat > &input, const Vector< BaseFloat > *ivector, const Matrix< BaseFloat > *online_ivectors, int32 online_ivector_period, std::vector< NnetInferenceTask > *tasks) |

| Split a single utterance into a list of separate tasks which can then be given to this class by AcceptTask(). More... | |

| void | SplitUtteranceIntoTasks (bool output_to_cpu, const CuMatrix< BaseFloat > &input, const CuVector< BaseFloat > *ivector, const CuMatrix< BaseFloat > *online_ivectors, int32 online_ivector_period, std::vector< NnetInferenceTask > *tasks) |

| const NnetBatchComputerOptions & | GetOptions () |

| ~NnetBatchComputer () | |

Private Types | |

| typedef unordered_map< ComputationGroupKey, ComputationGroupInfo, ComputationGroupKeyHasher > | MapType |

Private Member Functions | |

| KALDI_DISALLOW_COPY_AND_ASSIGN (NnetBatchComputer) | |

| double | GetPriority (bool allow_partial_minibatch, const ComputationGroupInfo &info) const |

| int32 | GetMinibatchSize (const ComputationGroupInfo &info) const |

| std::shared_ptr< const NnetComputation > | GetComputation (const ComputationGroupInfo &info, int32 minibatch_size) |

| int32 | GetActualMinibatchSize (const ComputationGroupInfo &info) const |

| void | GetHighestPriorityTasks (int32 num_tasks, ComputationGroupInfo *info, std::vector< NnetInferenceTask *> *tasks) |

| MinibatchSizeInfo * | GetHighestPriorityComputation (bool allow_partial_minibatch, int32 *minibatch_size, std::vector< NnetInferenceTask *> *tasks) |

| This function finds and returns the computation corresponding to the highest-priority group of tasks. More... | |

| void | FormatInputs (int32 minibatch_size, const std::vector< NnetInferenceTask *> &tasks, CuMatrix< BaseFloat > *input, CuMatrix< BaseFloat > *ivector) |

| formats the inputs to the computation and transfers them to GPU. More... | |

| void | FormatOutputs (const CuMatrix< BaseFloat > &output, const std::vector< NnetInferenceTask *> &tasks) |

| void | CheckAndFixConfigs () |

| void | PrintMinibatchStats () |

Static Private Member Functions | |

| static void | GetComputationRequest (const NnetInferenceTask &task, int32 minibatch_size, ComputationRequest *request) |

Private Attributes | |

| NnetBatchComputerOptions | opts_ |

| const Nnet & | nnet_ |

| CachingOptimizingCompiler | compiler_ |

| CuVector< BaseFloat > | log_priors_ |

| std::mutex | mutex_ |

| MapType | tasks_ |

| int32 | num_full_minibatches_ |

| std::unordered_map< int32, std::condition_variable * > | no_more_than_n_minibatches_full_ |

| int32 | nnet_left_context_ |

| int32 | nnet_right_context_ |

| int32 | input_dim_ |

| int32 | ivector_dim_ |

| int32 | output_dim_ |

This class does neural net inference in a way that is optimized for GPU use: it combines chunks of multiple utterances into minibatches for more efficient computation.

It does the computation in one background thread that accesses the GPU. It is thread safe, i.e. you can call it from multiple threads without having to worry about data races and the like.

Definition at line 207 of file nnet-batch-compute.h.

|

private |

Definition at line 344 of file nnet-batch-compute.h.

| NnetBatchComputer | ( | const NnetBatchComputerOptions & | opts, |

| const Nnet & | nnet, | ||

| const VectorBase< BaseFloat > & | priors | ||

| ) |

Constructor.

It stores references to all the arguments, so don't delete them till this object goes out of scop.

| [in] | opts | Options struct |

| [in] | nnet | The neural net which we'll be doing the computation with |

| [in] | priors | Either the empty vector, or a vector of prior probabilities which we'll take the log of and subtract from the neural net outputs (e.g. used in non-chain systems). |

Definition at line 31 of file nnet-batch-compute.cc.

References NnetSimpleComputationOptions::CheckAndFixConfigs(), kaldi::nnet3::ComputeSimpleNnetContext(), NnetBatchComputerOptions::edge_minibatch_size, NnetBatchComputer::input_dim_, Nnet::InputDim(), NnetBatchComputer::ivector_dim_, KALDI_ASSERT, NnetBatchComputer::log_priors_, NnetBatchComputerOptions::minibatch_size, Nnet::Modulus(), NnetBatchComputer::nnet_, NnetBatchComputer::nnet_left_context_, NnetBatchComputer::nnet_right_context_, NnetBatchComputer::opts_, NnetBatchComputer::output_dim_, Nnet::OutputDim(), and NnetBatchComputerOptions::partial_minibatch_factor.

| ~NnetBatchComputer | ( | ) |

Definition at line 112 of file nnet-batch-compute.cc.

References KALDI_ASSERT, KALDI_ERR, NnetBatchComputer::mutex_, NnetBatchComputer::no_more_than_n_minibatches_full_, NnetBatchComputer::num_full_minibatches_, NnetBatchComputer::PrintMinibatchStats(), and NnetBatchComputer::tasks_.

| void AcceptTask | ( | NnetInferenceTask * | task, |

| int32 | max_minibatches_full = -1 |

||

| ) |

Accepts a task, meaning the task will be queued.

(Note: the pointer is still owned by the caller. If the max_minibatches_full >= 0, then the calling thread will block until no more than that many full minibatches are waiting to be computed. This is a mechanism to prevent too many requests from piling up in memory.

Definition at line 568 of file nnet-batch-compute.cc.

References NnetBatchComputer::GetMinibatchSize(), NnetBatchComputer::mutex_, NnetBatchComputer::no_more_than_n_minibatches_full_, NnetBatchComputer::num_full_minibatches_, NnetBatchComputer::ComputationGroupInfo::tasks, and NnetBatchComputer::tasks_.

Referenced by NnetBatchInference::AcceptInput(), and NnetBatchDecoder::Decode().

|

private |

Does some kind of computation, choosing the highest-priority thing to compute.

It returns true if it did some kind of computation, and false otherwise. This function locks the class, but not for the entire time it's being called: only at the beginning and at the end.

| [in] | allow_partial_minibatch | If false, then this will only do the computation if a full minibatch is ready; if true, it is allowed to do computation on partial (not-full) minibatches. |

Definition at line 593 of file nnet-batch-compute.cc.

References NnetComputer::AcceptInput(), NnetSimpleComputationOptions::acoustic_scale, CuMatrixBase< Real >::AddVecToRows(), NnetBatchComputer::MinibatchSizeInfo::computation, NnetSimpleComputationOptions::compute_config, Timer::Elapsed(), NnetBatchComputer::FormatInputs(), NnetBatchComputer::FormatOutputs(), NnetBatchComputer::GetHighestPriorityComputation(), NnetComputer::GetOutputDestructive(), rnnlm::i, NnetBatchComputer::log_priors_, NnetBatchComputer::nnet_, NnetBatchComputer::MinibatchSizeInfo::num_done, CuMatrixBase< Real >::NumRows(), NnetBatchComputer::opts_, NnetComputer::Run(), CuMatrixBase< Real >::Scale(), NnetBatchComputer::MinibatchSizeInfo::seconds_taken, kaldi::SynchronizeGpu(), and NnetBatchComputer::MinibatchSizeInfo::tot_num_tasks.

Referenced by NnetBatchInference::Compute(), and NnetBatchDecoder::Compute().

|

private |

formats the inputs to the computation and transfers them to GPU.

| [in] | minibatch_size | The number of parallel sequences we're doing this computation for. This will be more than tasks.size() in some cases. |

| [in] | tasks | The tasks we're doing the computation for. The input comes from here. |

| [out] | input | The main feature input to the computation is put into here. |

| [out] | ivector | If we're using i-vectors, the i-vectors are put here. |

Definition at line 346 of file nnet-batch-compute.cc.

References CuMatrixBase< Real >::CopyFromMat(), CuVectorBase< Real >::Data(), CuMatrixBase< Real >::Data(), kaldi::GetVerboseLevel(), KALDI_ASSERT, kaldi::kUndefined, rnnlm::n, CuMatrix< Real >::Resize(), CuMatrixBase< Real >::Row(), CuMatrixBase< Real >::RowRange(), and CuMatrixBase< Real >::Stride().

Referenced by NnetBatchComputer::Compute().

|

private |

Definition at line 459 of file nnet-batch-compute.cc.

References CuMatrixBase< Real >::Data(), KALDI_ASSERT, kaldi::kUndefined, rnnlm::n, NnetInferenceTask::num_initial_unused_output_frames, NnetInferenceTask::num_used_output_frames, CuMatrixBase< Real >::NumCols(), CuMatrixBase< Real >::NumRows(), NnetInferenceTask::output, NnetInferenceTask::output_cpu, NnetInferenceTask::output_to_cpu, Matrix< Real >::Resize(), MatrixBase< Real >::RowRange(), CuMatrixBase< Real >::RowRange(), CuMatrixBase< Real >::Stride(), and kaldi::SynchronizeGpu().

Referenced by NnetBatchComputer::Compute().

|

private |

Definition at line 242 of file nnet-batch-compute.cc.

References NnetBatchComputer::GetMinibatchSize(), KALDI_ASSERT, NnetBatchComputer::opts_, NnetBatchComputerOptions::partial_minibatch_factor, and NnetBatchComputer::ComputationGroupInfo::tasks.

Referenced by NnetBatchComputer::GetHighestPriorityComputation().

|

private |

Definition at line 255 of file nnet-batch-compute.cc.

References CachingOptimizingCompiler::Compile(), NnetBatchComputer::compiler_, NnetBatchComputer::GetComputationRequest(), KALDI_ASSERT, and NnetBatchComputer::ComputationGroupInfo::tasks.

Referenced by NnetBatchComputer::GetHighestPriorityComputation().

|

staticprivate |

Definition at line 312 of file nnet-batch-compute.cc.

References NnetInferenceTask::first_input_t, NnetInferenceTask::input, ComputationRequest::inputs, NnetInferenceTask::ivector, rnnlm::n, ComputationRequest::need_model_derivative, NnetInferenceTask::num_output_frames, NnetInferenceTask::output_t_stride, ComputationRequest::outputs, and ComputationRequest::store_component_stats.

Referenced by NnetBatchComputer::GetComputation().

|

private |

This function finds and returns the computation corresponding to the highest-priority group of tasks.

| [in] | allow_partial_minibatch | If this is true, then this function may return a computation corresponding to a partial minibatch– i.e. the minibatch size in the computation may be less than the minibatch size in the options class, and/or the number of tasks may not be as many as the minibatch size in the computation. |

| [out] | minibatch_size | If this function returns non-NULL, then this will be set to the minibatch size that the returned computation expects. This may be less than tasks->size(), in cases where the minibatch was not 'full'. |

| [out] | tasks | The tasks which we'll be doing the computation for in this minibatch are put here (and removed from tasks_, in cases where this function returns non-NULL. |

Definition at line 136 of file nnet-batch-compute.cc.

References NnetBatchComputer::MinibatchSizeInfo::computation, NnetBatchComputer::GetActualMinibatchSize(), NnetBatchComputer::GetComputation(), NnetBatchComputer::GetHighestPriorityTasks(), NnetBatchComputer::GetPriority(), NnetBatchComputer::ComputationGroupInfo::minibatch_info, NnetBatchComputer::mutex_, and NnetBatchComputer::tasks_.

Referenced by NnetBatchComputer::Compute().

|

private |

Definition at line 170 of file nnet-batch-compute.cc.

References NnetBatchComputer::GetMinibatchSize(), rnnlm::i, KALDI_ASSERT, NnetBatchComputer::no_more_than_n_minibatches_full_, NnetBatchComputer::num_full_minibatches_, and NnetBatchComputer::ComputationGroupInfo::tasks.

Referenced by NnetBatchComputer::GetHighestPriorityComputation().

|

inlineprivate |

Definition at line 227 of file nnet-batch-compute.cc.

References NnetBatchComputerOptions::edge_minibatch_size, NnetInferenceTask::is_edge, NnetInferenceTask::is_irregular, NnetBatchComputerOptions::minibatch_size, NnetBatchComputer::opts_, and NnetBatchComputer::ComputationGroupInfo::tasks.

Referenced by NnetBatchComputer::AcceptTask(), NnetBatchComputer::GetActualMinibatchSize(), NnetBatchComputer::GetHighestPriorityTasks(), and NnetBatchComputer::GetPriority().

|

inline |

Definition at line 280 of file nnet-batch-compute.h.

References KALDI_DISALLOW_COPY_AND_ASSIGN.

Referenced by NnetBatchDecoder::ProcessOutputUtterance(), and NnetBatchDecoder::~NnetBatchDecoder().

|

inlineprivate |

Definition at line 268 of file nnet-batch-compute.cc.

References NnetBatchComputer::GetMinibatchSize(), rnnlm::i, and NnetBatchComputer::ComputationGroupInfo::tasks.

Referenced by NnetBatchComputer::GetHighestPriorityComputation().

|

private |

|

inline |

Returns the number of full minibatches waiting to be computed.

Definition at line 233 of file nnet-batch-compute.h.

References NnetInferenceTask::input, NnetInferenceTask::ivector, and NnetInferenceTask::output_to_cpu.

|

private |

Definition at line 53 of file nnet-batch-compute.cc.

References rnnlm::i, KALDI_LOG, NnetBatchComputer::MinibatchSizeInfo::num_done, NnetBatchComputer::ComputationGroupKey::num_input_frames, NnetBatchComputer::ComputationGroupKey::num_output_frames, operator<(), NnetBatchComputer::MinibatchSizeInfo::seconds_taken, NnetBatchComputer::tasks_, and NnetBatchComputer::MinibatchSizeInfo::tot_num_tasks.

Referenced by NnetBatchComputer::~NnetBatchComputer().

| void SplitUtteranceIntoTasks | ( | bool | output_to_cpu, |

| const Matrix< BaseFloat > & | input, | ||

| const Vector< BaseFloat > * | ivector, | ||

| const Matrix< BaseFloat > * | online_ivectors, | ||

| int32 | online_ivector_period, | ||

| std::vector< NnetInferenceTask > * | tasks | ||

| ) |

Split a single utterance into a list of separate tasks which can then be given to this class by AcceptTask().

| [in] | output_to_cpu | Will become the 'output_to_cpu' member of the output tasks; this controls whether the computation code should transfer the outputs to CPU (which is to save GPU memory). |

| [in] | ivector | If non-NULL, and i-vector for the whole utterance is expected to be supplied here (and online_ivectors should be NULL). This is relevant if you estimate i-vectors per speaker instead of online. |

| [in] | online_ivectors | Matrix of ivectors, one every 'online_ivector_period' frames. |

| [in] | online_ivector_period | Affects the interpretation of 'online_ivectors'. |

| [out] | tasks | The tasks created will be output to here. The priorities will be set to zero; setting them to a meaningful value is up to the caller. |

Definition at line 843 of file nnet-batch-compute.cc.

References CuMatrixBase< Real >::CopyFromMat(), CuVectorBase< Real >::CopyFromVec(), VectorBase< Real >::Dim(), kaldi::kUndefined, MatrixBase< Real >::NumCols(), MatrixBase< Real >::NumRows(), CuVector< Real >::Resize(), and CuMatrix< Real >::Resize().

Referenced by NnetBatchInference::AcceptInput(), and NnetBatchDecoder::Decode().

| void SplitUtteranceIntoTasks | ( | bool | output_to_cpu, |

| const CuMatrix< BaseFloat > & | input, | ||

| const CuVector< BaseFloat > * | ivector, | ||

| const CuMatrix< BaseFloat > * | online_ivectors, | ||

| int32 | online_ivector_period, | ||

| std::vector< NnetInferenceTask > * | tasks | ||

| ) |

Definition at line 873 of file nnet-batch-compute.cc.

References kaldi::nnet3::utterance_splitting::AddOnlineIvectorsToTasks(), CuVectorBase< Real >::Data(), CuVectorBase< Real >::Dim(), NnetSimpleComputationOptions::frame_subsampling_factor, NnetSimpleComputationOptions::frames_per_chunk, kaldi::nnet3::utterance_splitting::GetOutputFrameInfoForTasks(), rnnlm::i, NnetBatchComputer::input_dim_, NnetBatchComputer::ivector_dim_, KALDI_ASSERT, KALDI_ERR, kaldi::kUndefined, NnetBatchComputer::nnet_left_context_, NnetBatchComputer::nnet_right_context_, CuMatrixBase< Real >::NumCols(), CuMatrixBase< Real >::NumRows(), NnetBatchComputer::opts_, CuVector< Real >::Resize(), and kaldi::nnet3::utterance_splitting::SplitInputToTasks().

|

private |

Definition at line 462 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::GetComputation().

|

private |

Definition at line 490 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::NnetBatchComputer(), and NnetBatchComputer::SplitUtteranceIntoTasks().

|

private |

Definition at line 491 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::NnetBatchComputer(), and NnetBatchComputer::SplitUtteranceIntoTasks().

Definition at line 463 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::Compute(), and NnetBatchComputer::NnetBatchComputer().

|

private |

Definition at line 467 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::AcceptTask(), NnetBatchComputer::GetHighestPriorityComputation(), and NnetBatchComputer::~NnetBatchComputer().

|

private |

Definition at line 461 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::Compute(), and NnetBatchComputer::NnetBatchComputer().

|

private |

Definition at line 488 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::NnetBatchComputer(), and NnetBatchComputer::SplitUtteranceIntoTasks().

|

private |

Definition at line 489 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::NnetBatchComputer(), and NnetBatchComputer::SplitUtteranceIntoTasks().

|

private |

Definition at line 485 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::AcceptTask(), NnetBatchComputer::GetHighestPriorityTasks(), and NnetBatchComputer::~NnetBatchComputer().

|

private |

Definition at line 480 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::AcceptTask(), NnetBatchComputer::GetHighestPriorityTasks(), and NnetBatchComputer::~NnetBatchComputer().

|

private |

Definition at line 460 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::Compute(), NnetBatchComputer::GetActualMinibatchSize(), NnetBatchComputer::GetMinibatchSize(), NnetBatchComputer::NnetBatchComputer(), and NnetBatchComputer::SplitUtteranceIntoTasks().

|

private |

Definition at line 492 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::NnetBatchComputer().

|

private |

Definition at line 472 of file nnet-batch-compute.h.

Referenced by NnetBatchComputer::AcceptTask(), NnetBatchComputer::GetHighestPriorityComputation(), NnetBatchComputer::PrintMinibatchStats(), and NnetBatchComputer::~NnetBatchComputer().