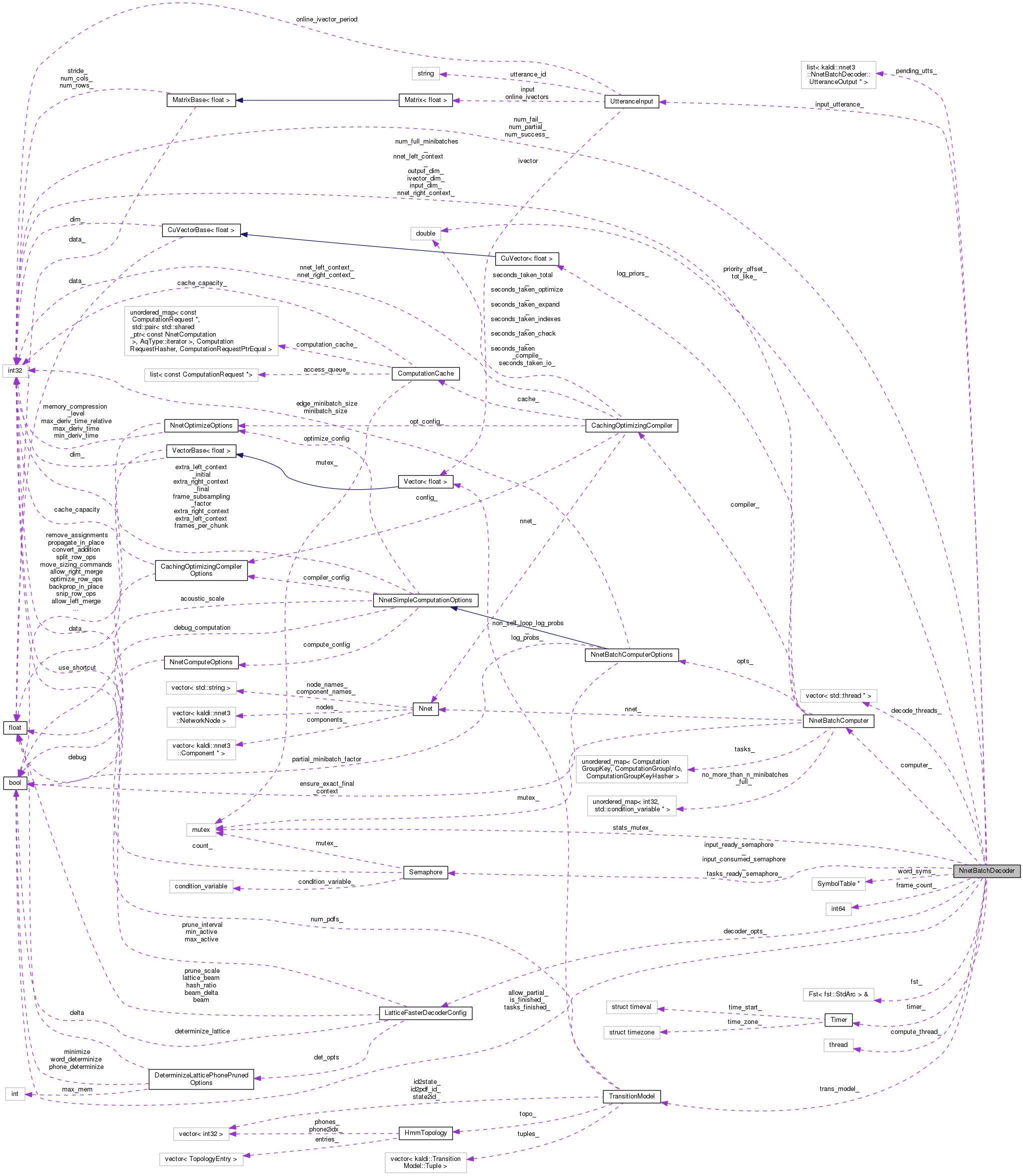

Decoder object that uses multiple CPU threads for the graph search, plus a GPU for the neural net inference (that's done by a separate NnetBatchComputer object).

#include <nnet-batch-compute.h>

| Classes | |
|---|---|
| struct | UtteranceInput |
| struct | UtteranceOutput |

| Public Member Functions | |
|---|---|
| | NnetBatchDecoder (const fst::Fst< fst::StdArc > &fst, const LatticeFasterDecoderConfig &decoder_config, const TransitionModel &trans_model, const fst::SymbolTable *word_syms, bool allow_partial, int32 num_threads, NnetBatchComputer *computer) |
| | Constructor. |
| void | AcceptInput (const std::string &utterance_id, const Matrix< BaseFloat > &input, const Vector< BaseFloat > *ivector, const Matrix< BaseFloat > *online_ivectors, int32 online_ivector_period) |
| | The user should call this one by one for the utterances that it needs to compute (interspersed with calls to GetOutput()). |
| void | UtteranceFailed () |
| int32 | Finished () |
| bool | GetOutput (std::string *utterance_id, CompactLattice *clat, std::string *sentence) |
| | The user should call this to obtain output; this version should only be called if config.determinize_lattice == true (w.r.t. the config provided to the constructor). |
| bool | GetOutput (std::string *utterance_id, Lattice *lat, std::string *sentence) |
| | ~NnetBatchDecoder () |

| Private Member Functions | |
|---|---|
| | KALDI_DISALLOW_COPY_AND_ASSIGN (NnetBatchDecoder) |
| void | Decode () |
| void | Compute () |
| void | SetPriorities (std::vector< NnetInferenceTask > *tasks) |
| void | UpdatePriorityOffset (double priority) |
| void | ProcessOutputUtterance (UtteranceOutput *output) |

| Static Private Member Functions | |
|---|---|
| static void | DecodeFunc (NnetBatchDecoder *object) |
| static void | ComputeFunc (NnetBatchDecoder *object) |

| Private Attributes | |
|---|---|
| const fst::Fst< fst::StdArc > & | fst_ |
| const LatticeFasterDecoderConfig & | decoder_opts_ |
| const TransitionModel & | trans_model_ |
| const fst::SymbolTable * | word_syms_ |
| bool | allow_partial_ |
| NnetBatchComputer * | computer_ |
| std::vector< std::thread * > | decode_threads_ |
| std::thread | compute_thread_ |
| UtteranceInput | input_utterance_ |
| Semaphore | input_ready_semaphore_ |
| Semaphore | input_consumed_semaphore_ |
| Semaphore | tasks_ready_semaphore_ |
| bool | is_finished_ |
| bool | tasks_finished_ |
| std::list< UtteranceOutput * > | pending_utts_ |
| double | priority_offset_ |
| double | tot_like_ |
| int64 | frame_count_ |
| int32 | num_success_ |
| int32 | num_fail_ |
| int32 | num_partial_ |
| std::mutex | stats_mutex_ |
| Timer | timer_ |


Although the object uses several threads internally, its interface should be accessed from only one thread, presumably the main thread of the program.

Definition at line 613 of file nnet-batch-compute.h.
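The calling pattern described for AcceptInput() and GetOutput() (feed utterances one at a time from a single thread, drain any ready output after each call, then call Finished() and drain again) can be sketched as follows. Because the real class needs Kaldi, this sketch uses a hypothetical `MockBatchDecoder` that only imitates the call protocol; its delay rule and its string "decoding" are invented for illustration.

```cpp
#include <cassert>
#include <deque>
#include <string>
#include <vector>

// Hypothetical stand-in for NnetBatchDecoder that imitates only the call
// protocol. A result becomes ready once `delay` newer utterances have been
// accepted, or after Finished(); results are released in input order.
class MockBatchDecoder {
 public:
  explicit MockBatchDecoder(int delay) : delay_(delay) { assert(delay >= 0); }

  // Queues one utterance (the real AcceptInput() also takes features and
  // i-vectors, and may block).
  void AcceptInput(const std::string &utterance_id) {
    pending_.push_back(utterance_id);
  }

  // Non-blocking: returns false if the oldest pending result is not ready.
  bool GetOutput(std::string *utterance_id, std::string *sentence) {
    if (pending_.empty()) return false;
    int num_newer = static_cast<int>(pending_.size()) - 1;
    if (!finished_ && num_newer < delay_) return false;
    *utterance_id = pending_.front();
    *sentence = "decoded " + pending_.front();  // invented "decoding"
    pending_.pop_front();
    return true;
  }

  // Flushes everything still pending. (The real Finished() also joins its
  // worker threads and returns an int32, which this mock does not model.)
  void Finished() { finished_ = true; }

 private:
  int delay_;
  bool finished_ = false;
  std::deque<std::string> pending_;
};

// Driver loop on the single calling thread: feed utterances one at a time,
// drain whatever output is ready after each call, then flush and drain.
std::vector<std::string> DecodeAll(const std::vector<std::string> &utts,
                                   MockBatchDecoder *decoder) {
  std::vector<std::string> output_order;
  std::string utt, sentence;
  for (const std::string &u : utts) {
    decoder->AcceptInput(u);
    while (decoder->GetOutput(&utt, &sentence)) output_order.push_back(utt);
  }
  decoder->Finished();
  while (decoder->GetOutput(&utt, &sentence)) output_order.push_back(utt);
  return output_order;
}
```

With the real NnetBatchDecoder, the loop body would pass features and i-vectors to AcceptInput() and would call the GetOutput() overload that matches `determinize_lattice`.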

`NnetBatchDecoder(const fst::Fst<fst::StdArc> &fst, const LatticeFasterDecoderConfig &decoder_config, const TransitionModel &trans_model, const fst::SymbolTable *word_syms, bool allow_partial, int32 num_threads, NnetBatchComputer *computer)`

Constructor.

| Direction | Parameter | Description |
|---|---|---|
| [in] | fst | FST that we are decoding with; it will be shared between all decoder threads. |
| [in] | decoder_config | Configuration object for the decoders. |
| [in] | trans_model | The transition model; needed to construct the decoders, and for determinization. |
| [in] | word_syms | A pointer to a symbol table of words, used for printing the decoded words to stderr. If NULL, the word-level output will not be logged. |
| [in] | allow_partial | If true, in cases where no final state was reached on the final frame of the decoding, we still output a lattice; it just may contain partial words (words that are cut off in the middle). If false, nothing is output for those utterances. |
| [in] | num_threads | The number of decoder threads to use. Two more threads are used on top of this: the main thread, for I/O, and a thread for (possibly GPU-based) inference. |
| [in] | computer | The NnetBatchComputer object through which the neural net will be evaluated. |

Definition at line 1188 of file nnet-batch-compute.cc.

References NnetBatchDecoder::compute_thread_, NnetBatchDecoder::ComputeFunc(), NnetBatchDecoder::decode_threads_, NnetBatchDecoder::DecodeFunc(), and KALDI_ASSERT.
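The constructor's parameter description implies a fixed thread layout: `num_threads` decoder threads, one inference thread, and the caller's main thread. Below is a minimal sketch of starting such workers through static "trampoline" functions, which is the role DecodeFunc() and ComputeFunc() appear to play given their signatures. The class name `ThreadLayoutSketch` and the placeholder Decode()/Compute() bodies (which only count calls) are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Starts `num_threads` decode threads plus one compute thread, each through
// a static function that forwards to a member function.
class ThreadLayoutSketch {
 public:
  explicit ThreadLayoutSketch(int num_threads) {
    assert(num_threads > 0);
    // All members are initialized before this body runs, so the threads
    // can safely touch the counters below.
    for (int i = 0; i < num_threads; i++)
      decode_threads_.push_back(new std::thread(DecodeFunc, this));
    compute_thread_ = std::thread(ComputeFunc, this);
  }
  // Joins every worker; safe to call more than once.
  void Finished() {
    for (std::thread *t : decode_threads_) {
      t->join();
      delete t;
    }
    decode_threads_.clear();
    if (compute_thread_.joinable()) compute_thread_.join();
  }
  ~ThreadLayoutSketch() { Finished(); }

  int num_decode_calls() const { return num_decode_calls_; }
  int num_compute_calls() const { return num_compute_calls_; }

 private:
  // Trampolines: std::thread needs a callable, so a static function taking
  // the object pointer forwards to the member function.
  static void DecodeFunc(ThreadLayoutSketch *object) { object->Decode(); }
  static void ComputeFunc(ThreadLayoutSketch *object) { object->Compute(); }
  void Decode() { ++num_decode_calls_; }   // placeholder for graph search
  void Compute() { ++num_compute_calls_; } // placeholder for inference

  std::vector<std::thread *> decode_threads_;  // mirrors the member's type
  std::thread compute_thread_;
  std::atomic<int> num_decode_calls_{0};
  std::atomic<int> num_compute_calls_{0};
};
```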

`~NnetBatchDecoder()`

Definition at line 1497 of file nnet-batch-compute.cc.

References NnetBatchDecoder::computer_, NnetBatchDecoder::decode_threads_, Timer::Elapsed(), NnetBatchDecoder::frame_count_, NnetSimpleComputationOptions::frame_subsampling_factor, NnetBatchComputer::GetOptions(), NnetBatchDecoder::is_finished_, KALDI_ERR, KALDI_LOG, NnetBatchDecoder::num_fail_, NnetBatchDecoder::num_partial_, NnetBatchDecoder::num_success_, NnetBatchDecoder::pending_utts_, NnetBatchDecoder::timer_, and NnetBatchDecoder::tot_like_.
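The destructor references tot_like_, frame_count_, frame_subsampling_factor and Timer::Elapsed(), which suggests it reports summary statistics. The following is a hypothetical sketch of such a summary, assuming frame_count_ counts subsampled output frames, an input rate of 100 frames per second (10 ms shift), and real-time factor defined as wall-clock seconds per second of audio; Kaldi's actual log output may compute different quantities.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

struct DecodeSummary {
  double like_per_frame;    // average log-likelihood per output frame
  double real_time_factor;  // wall-clock seconds per second of audio
};

// Hypothetical summary computation; the 100 frames/sec rate is an assumption.
DecodeSummary Summarize(double tot_like, int64_t frame_count,
                        int frame_subsampling_factor, double elapsed_seconds) {
  assert(frame_subsampling_factor > 0);
  DecodeSummary s;
  // Guard against division by zero when nothing was decoded.
  s.like_per_frame = tot_like / std::max<int64_t>(1, frame_count);
  // Output frames are subsampled, so scale back up to input frames.
  int64_t input_frames = frame_count * frame_subsampling_factor;
  double audio_seconds = input_frames / 100.0;
  s.real_time_factor =
      audio_seconds > 0.0 ? elapsed_seconds / audio_seconds : 0.0;
  return s;
}
```

For example, 1000 output frames at a subsampling factor of 3 correspond to 3000 input frames, i.e. 30 seconds of audio; decoding that in 3 seconds gives a real-time factor of 0.1.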

`void AcceptInput(const std::string &utterance_id, const Matrix<BaseFloat> &input, const Vector<BaseFloat> *ivector, const Matrix<BaseFloat> *online_ivectors, int32 online_ivector_period)`

The user should call this one by one for the utterances that it needs to compute (interspersed with calls to GetOutput()).

This call will block when no threads are ready to start processing this utterance.
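Why AcceptInput() blocks can be seen from the semaphores it references (input_ready_semaphore_ and input_consumed_semaphore_) together with the single input_utterance_ slot. Below is a sketch of such a single-slot handshake, assuming the main thread publishes one utterance, signals "ready", and then waits for "consumed"; shutdown sets a finished flag and wakes each worker once. The `Semaphore` and `HandoffSketch` classes are stand-ins written for this example, not Kaldi's code.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <string>
#include <thread>
#include <vector>

// Minimal counting semaphore (a stand-in for Kaldi's Semaphore class).
class Semaphore {
 public:
  explicit Semaphore(int count = 0) : count_(count) {}
  void Signal() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      ++count_;
    }
    cond_.notify_one();
  }
  void Wait() {
    std::unique_lock<std::mutex> lock(mutex_);
    cond_.wait(lock, [this] { return count_ > 0; });
    --count_;
  }

 private:
  std::mutex mutex_;
  std::condition_variable cond_;
  int count_;
};

// Single-slot handoff between the calling thread and worker threads.
class HandoffSketch {
 public:
  explicit HandoffSketch(int num_workers) {
    assert(num_workers > 0);
    for (int i = 0; i < num_workers; i++)
      workers_.emplace_back([this] { WorkerLoop(); });
  }
  ~HandoffSketch() {
    if (!workers_.empty()) Finished();
  }

  // Publishes one utterance and blocks until some worker has copied it out.
  void AcceptInput(const std::string &utterance_id) {
    slot_ = utterance_id;  // no worker reads slot_ before the Signal()
    input_ready_.Signal();
    input_consumed_.Wait();
  }

  // Wakes every worker once with is_finished_ set, joins them, and returns
  // the utterances they received (in arbitrary order).
  std::vector<std::string> Finished() {
    is_finished_ = true;
    for (std::size_t i = 0; i < workers_.size(); i++) input_ready_.Signal();
    for (std::thread &t : workers_) t.join();
    workers_.clear();
    return received_;
  }

 private:
  void WorkerLoop() {
    while (true) {
      input_ready_.Wait();
      if (is_finished_) return;
      std::string utt = slot_;   // copy the input out of the shared slot,
      input_consumed_.Signal();  // then release the blocked caller
      std::lock_guard<std::mutex> lock(received_mutex_);
      received_.push_back(utt);  // stands in for decoding the utterance
    }
  }

  std::vector<std::thread> workers_;
  std::string slot_;
  Semaphore input_ready_;
  Semaphore input_consumed_;
  std::atomic<bool> is_finished_{false};
  std::mutex received_mutex_;
  std::vector<std::string> received_;
};
```

The caller blocks only until a worker has copied the input, not until decoding finishes, so the copy is what frees the single slot for the next utterance.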

| Direction | Parameter | Description |
|---|---|---|
| [in] | utterance_id | The string representing the utterance-id; it will be provided back to the user when GetOutput() is called. |
| [in] | input | The input features (e.g. MFCCs). |
| [in] | ivector | If non-NULL, this is expected to be the i-vector for this utterance (and 'online_ivectors' should be NULL). |
| [in] | online_ivectors | If non-NULL, the online i-vectors for this utterance, one row per 'online_ivector_period' frames of 'input' (and 'ivector' should be NULL). |
| [in] | online_ivector_period | Only relevant if 'online_ivectors' is non-NULL; this says how many frames of 'input' are covered by each row of 'online_ivectors'. |

Definition at line 1224 of file nnet-batch-compute.cc.

References NnetBatchDecoder::UtteranceInput::input, NnetBatchDecoder::input_consumed_semaphore_, NnetBatchDecoder::input_ready_semaphore_, NnetBatchDecoder::input_utterance_, NnetBatchDecoder::UtteranceInput::ivector, NnetBatchDecoder::UtteranceInput::online_ivector_period, NnetBatchDecoder::UtteranceInput::online_ivectors, NnetBatchDecoder::pending_utts_, Semaphore::Signal(), NnetBatchDecoder::UtteranceInput::utterance_id, NnetBatchDecoder::UtteranceOutput::utterance_id, and Semaphore::Wait().
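As an illustration of `online_ivector_period`: with a period of 10, rows 0, 1, 2, ... of 'online_ivectors' cover input frames 0 to 9, 10 to 19, 20 to 29, and so on. A hypothetical helper that performs this lookup, assuming frames past the last row fall back to the last row (the text above does not say how that case is handled), might look like:

```cpp
#include <algorithm>
#include <cassert>

// Returns the row of 'online_ivectors' covering input frame `frame`.
// Assumption: frames beyond the last row reuse the last row.
int OnlineIvectorRow(int frame, int online_ivector_period, int num_rows) {
  assert(frame >= 0 && online_ivector_period > 0 && num_rows > 0);
  return std::min(frame / online_ivector_period, num_rows - 1);
}
```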

`void Compute()` (private)

Definition at line 1329 of file nnet-batch-compute.cc.

References NnetBatchComputer::Compute(), NnetBatchDecoder::computer_, NnetBatchDecoder::tasks_finished_, NnetBatchDecoder::tasks_ready_semaphore_, and Semaphore::Wait().

`static void ComputeFunc(NnetBatchDecoder *object)` (inline, static, private)

Definition at line 760 of file nnet-batch-compute.h.

References NnetInferenceTask::priority.

Referenced by NnetBatchDecoder::NnetBatchDecoder().

`void Decode()` (private)

Definition at line 1337 of file nnet-batch-compute.cc.

References NnetBatchComputer::AcceptTask(), LatticeFasterDecoderTpl< FST, Token >::AdvanceDecoding(), NnetBatchDecoder::allow_partial_, NnetBatchDecoder::computer_, NnetBatchDecoder::decoder_opts_, NnetBatchDecoder::fst_, LatticeFasterDecoderTpl< FST, Token >::GetRawLattice(), LatticeFasterDecoderTpl< FST, Token >::InitDecoding(), NnetBatchDecoder::UtteranceInput::input, NnetBatchDecoder::input_consumed_semaphore_, NnetBatchDecoder::input_ready_semaphore_, NnetBatchDecoder::input_utterance_, NnetBatchDecoder::is_finished_, NnetBatchDecoder::UtteranceInput::ivector, KALDI_ASSERT, KALDI_WARN, NnetBatchDecoder::UtteranceOutput::lat, NnetBatchDecoder::num_fail_, NnetInferenceTask::num_initial_unused_output_frames, NnetBatchDecoder::num_partial_, NnetInferenceTask::num_used_output_frames, MatrixBase< Real >::NumCols(), NnetBatchDecoder::UtteranceInput::online_ivector_period, NnetBatchDecoder::UtteranceInput::online_ivectors, NnetInferenceTask::output, NnetInferenceTask::output_cpu, NnetBatchDecoder::pending_utts_, NnetInferenceTask::priority, NnetBatchDecoder::ProcessOutputUtterance(), LatticeFasterDecoderTpl< FST, Token >::ReachedFinal(), NnetInferenceTask::semaphore, NnetBatchDecoder::SetPriorities(), Semaphore::Signal(), NnetBatchComputer::SplitUtteranceIntoTasks(), NnetBatchDecoder::stats_mutex_, NnetBatchDecoder::tasks_ready_semaphore_, NnetBatchDecoder::trans_model_, NnetBatchDecoder::UpdatePriorityOffset(), NnetBatchDecoder::UtteranceInput::utterance_id, NnetBatchDecoder::UtteranceOutput::utterance_id, and Semaphore::Wait().

`static void DecodeFunc(NnetBatchDecoder *object)` (inline, static, private)

Definition at line 755 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::NnetBatchDecoder().

`int32 Finished()`

Definition at line 1247 of file nnet-batch-compute.cc.

References NnetBatchDecoder::compute_thread_, NnetBatchDecoder::decode_threads_, NnetBatchDecoder::input_ready_semaphore_, NnetBatchDecoder::is_finished_, NnetBatchDecoder::num_success_, Semaphore::Signal(), NnetBatchDecoder::tasks_finished_, and NnetBatchDecoder::tasks_ready_semaphore_.

`bool GetOutput(std::string *utterance_id, CompactLattice *clat, std::string *sentence)`

The user should call this to obtain output. This version should only be called if config.determinize_lattice == true (w.r.t. the config provided to the constructor). The output is guaranteed to be in the same order as the input was provided, but it may be delayed, *and* some outputs may be missing, for example because of search failures (allow_partial will affect this).

The acoustic scores in the output lattice will already be divided by the acoustic scale we decoded with.
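A worked example of that rescaling, assuming (as with Kaldi's LatticeWeight, whose Value1() is the graph cost and Value2() the acoustic cost) that only the acoustic part was scaled during decoding: with an acoustic scale of 0.5, a stored acoustic cost of 10 becomes 10 / 0.5 = 20 in the output, while the graph cost is unchanged. The `ArcCosts` struct and `UndoAcousticScale` function are simplifications for this sketch, not fst::ScaleLattice().

```cpp
#include <cassert>
#include <vector>

// Simplified arc weight: a (graph cost, acoustic cost) pair.
struct ArcCosts {
  double graph_cost;
  double acoustic_cost;
};

// Divides every acoustic cost by the acoustic scale used in decoding,
// leaving graph costs untouched.
void UndoAcousticScale(double acoustic_scale, std::vector<ArcCosts> *arcs) {
  assert(acoustic_scale > 0.0);
  for (ArcCosts &arc : *arcs) arc.acoustic_cost /= acoustic_scale;
}
```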

This call does not block (i.e. does not wait on any semaphores). It returns true if it actually got any output; if none was ready it will return false.
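The ordering guarantee and the non-blocking behavior fit a queue that is released strictly from the front (pending_utts_ is a std::list of outputs). A minimal single-threaded sketch follows; it assumes each entry carries a `finished` flag and that a failed utterance is marked by an empty result, both illustrative choices rather than Kaldi's actual representation. A multithreaded version would need synchronization around `finished`.

```cpp
#include <cassert>
#include <list>
#include <string>

struct PendingUtt {
  std::string utterance_id;
  bool finished = false;
  std::string result;  // empty means decoding failed (assumption)
};

// Returns the oldest finished result, if any. Never waits: if the head of
// the queue is unfinished, later results stay queued to preserve order.
// Failed utterances are dropped, so they never appear in the output.
bool GetNextOutput(std::list<PendingUtt> *pending, std::string *utterance_id,
                   std::string *result) {
  assert(utterance_id != nullptr && result != nullptr);
  while (!pending->empty()) {
    PendingUtt &front = pending->front();
    if (!front.finished) return false;  // head not ready: do not block
    if (front.result.empty()) {         // failed: drop it and keep looking
      pending->pop_front();
      continue;
    }
    *utterance_id = front.utterance_id;
    *result = front.result;
    pending->pop_front();
    return true;
  }
  return false;
}
```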

| Direction | Parameter | Description |
|---|---|---|
| [out] | utterance_id | If an output was ready, its utterance-id is written here. |
| [out] | clat | If an output was ready, its compact lattice will be written here. |
| [out] | sentence | If an output was ready and a nonempty symbol table was provided to the constructor of this class, contains the decoded word sequence as a string; otherwise it will be empty. |

Definition at line 1266 of file nnet-batch-compute.cc.

References NnetBatchDecoder::UtteranceOutput::compact_lat, NnetBatchDecoder::decoder_opts_, LatticeFasterDecoderConfig::determinize_lattice, KALDI_ERR, NnetBatchDecoder::pending_utts_, NnetBatchDecoder::UtteranceOutput::sentence, and NnetBatchDecoder::UtteranceOutput::utterance_id.

Referenced by kaldi::HandleOutput().

`bool GetOutput(std::string *utterance_id, Lattice *lat, std::string *sentence)`

Definition at line 1298 of file nnet-batch-compute.cc.

References NnetBatchDecoder::decoder_opts_, LatticeFasterDecoderConfig::determinize_lattice, KALDI_ERR, NnetBatchDecoder::UtteranceOutput::lat, NnetBatchDecoder::pending_utts_, NnetBatchDecoder::UtteranceOutput::sentence, and NnetBatchDecoder::UtteranceOutput::utterance_id.

`KALDI_DISALLOW_COPY_AND_ASSIGN(NnetBatchDecoder)` (private)

`void ProcessOutputUtterance(UtteranceOutput *output)` (private)

Definition at line 1423 of file nnet-batch-compute.cc.

References NnetSimpleComputationOptions::acoustic_scale, fst::AcousticLatticeScale(), NnetBatchDecoder::UtteranceOutput::compact_lat, NnetBatchDecoder::computer_, NnetBatchDecoder::decoder_opts_, LatticeFasterDecoderConfig::det_opts, LatticeFasterDecoderConfig::determinize_lattice, fst::DeterminizeLatticePhonePrunedWrapper(), NnetBatchDecoder::UtteranceOutput::finished, NnetBatchDecoder::frame_count_, fst::GetLinearSymbolSequence(), NnetBatchComputer::GetOptions(), KALDI_ERR, KALDI_LOG, KALDI_VLOG, KALDI_WARN, NnetBatchDecoder::UtteranceOutput::lat, LatticeFasterDecoderConfig::lattice_beam, NnetBatchDecoder::num_fail_, NnetBatchDecoder::num_success_, fst::ScaleLattice(), NnetBatchDecoder::UtteranceOutput::sentence, NnetBatchDecoder::stats_mutex_, NnetBatchDecoder::tot_like_, NnetBatchDecoder::trans_model_, NnetBatchDecoder::UtteranceOutput::utterance_id, LatticeWeightTpl< FloatType >::Value1(), LatticeWeightTpl< FloatType >::Value2(), NnetBatchDecoder::word_syms_, and words.

Referenced by NnetBatchDecoder::Decode().

`void SetPriorities(std::vector<NnetInferenceTask> *tasks)` (private)

Definition at line 1208 of file nnet-batch-compute.cc.

References NnetBatchDecoder::priority_offset_.

Referenced by NnetBatchDecoder::Decode().

`void UpdatePriorityOffset(double priority)` (private)

Definition at line 1215 of file nnet-batch-compute.cc.

References NnetBatchDecoder::decode_threads_, and NnetBatchDecoder::priority_offset_.

Referenced by NnetBatchDecoder::Decode().
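SetPriorities() and UpdatePriorityOffset() carry no description here, but their references (priority_offset_, decode_threads_, NnetInferenceTask::priority) suggest a shared running priority offset. The sketch below is a guess at such a scheme, assuming higher priority means "computed sooner": tasks from one utterance receive decreasing priorities starting at the offset, so its earlier chunks go first, and the offset is updated as a moving average weighted by 1/num_decode_threads. Both formulas and the `PrioritySketch`/`Task` names are assumptions, not documented behavior.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Task {
  double priority = 0.0;
};

class PrioritySketch {
 public:
  explicit PrioritySketch(std::size_t num_decode_threads)
      : num_decode_threads_(num_decode_threads) {
    assert(num_decode_threads > 0);
  }
  // Earlier tasks of the utterance get higher priority.
  void SetPriorities(std::vector<Task> *tasks) const {
    for (std::size_t i = 0; i < tasks->size(); i++)
      (*tasks)[i].priority = priority_offset_ - static_cast<double>(i);
  }
  // Moving average: the new value gets weight 1/num_decode_threads.
  void UpdatePriorityOffset(double priority) {
    double new_weight = 1.0 / static_cast<double>(num_decode_threads_);
    priority_offset_ =
        priority_offset_ * (1.0 - new_weight) + priority * new_weight;
  }
  double priority_offset() const { return priority_offset_; }

 private:
  std::size_t num_decode_threads_;
  double priority_offset_ = 0.0;
};
```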

`void UtteranceFailed()`

Definition at line 1418 of file nnet-batch-compute.cc.

References NnetBatchDecoder::num_fail_, and NnetBatchDecoder::stats_mutex_.
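UtteranceFailed() touches only num_fail_ and stats_mutex_, so it can be called from the main thread while decoder threads update the same counters. A sketch of that mutex-guarded pattern follows; a caller would presumably use it to report an utterance it could not submit at all (for instance, one whose i-vector is missing). `FailureStats` and `RecordFailuresConcurrently` are hypothetical names.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// Failure counter shared between threads, guarded by one mutex.
class FailureStats {
 public:
  void UtteranceFailed() {
    std::lock_guard<std::mutex> lock(stats_mutex_);
    num_fail_++;
  }
  int num_fail() {
    std::lock_guard<std::mutex> lock(stats_mutex_);
    return num_fail_;
  }

 private:
  std::mutex stats_mutex_;
  int num_fail_ = 0;
};

// Records `per_thread` failures from each of `num_threads` threads and
// returns the final count; without the mutex, increments could be lost.
int RecordFailuresConcurrently(int num_threads, int per_thread) {
  assert(num_threads > 0 && per_thread >= 0);
  FailureStats stats;
  std::vector<std::thread> threads;
  for (int t = 0; t < num_threads; t++)
    threads.emplace_back([&stats, per_thread] {
      for (int i = 0; i < per_thread; i++) stats.UtteranceFailed();
    });
  for (std::thread &th : threads) th.join();
  return stats.num_fail();
}
```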

`bool allow_partial_` (private)

Definition at line 780 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Decode().

`std::thread compute_thread_` (private)

Definition at line 783 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Finished(), and NnetBatchDecoder::NnetBatchDecoder().

`NnetBatchComputer *computer_` (private)

Definition at line 781 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Compute(), NnetBatchDecoder::Decode(), NnetBatchDecoder::ProcessOutputUtterance(), and NnetBatchDecoder::~NnetBatchDecoder().

`std::vector<std::thread *> decode_threads_` (private)

Definition at line 782 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Finished(), NnetBatchDecoder::NnetBatchDecoder(), NnetBatchDecoder::UpdatePriorityOffset(), and NnetBatchDecoder::~NnetBatchDecoder().

`const LatticeFasterDecoderConfig &decoder_opts_` (private)

Definition at line 777 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Decode(), NnetBatchDecoder::GetOutput(), and NnetBatchDecoder::ProcessOutputUtterance().

`int64 frame_count_` (private)

Definition at line 831 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::ProcessOutputUtterance(), and NnetBatchDecoder::~NnetBatchDecoder().

`const fst::Fst<fst::StdArc> &fst_` (private)

Definition at line 776 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Decode().

`Semaphore input_consumed_semaphore_` (private)

Definition at line 796 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::AcceptInput(), and NnetBatchDecoder::Decode().

`Semaphore input_ready_semaphore_` (private)

Definition at line 791 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::AcceptInput(), NnetBatchDecoder::Decode(), and NnetBatchDecoder::Finished().

`UtteranceInput input_utterance_` (private)

Definition at line 790 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::AcceptInput(), and NnetBatchDecoder::Decode().

`bool is_finished_` (private)

Definition at line 806 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Decode(), NnetBatchDecoder::Finished(), and NnetBatchDecoder::~NnetBatchDecoder().

`int32 num_fail_` (private)

Definition at line 833 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Decode(), NnetBatchDecoder::ProcessOutputUtterance(), NnetBatchDecoder::UtteranceFailed(), and NnetBatchDecoder::~NnetBatchDecoder().

`int32 num_partial_` (private)

Definition at line 834 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Decode(), and NnetBatchDecoder::~NnetBatchDecoder().

`int32 num_success_` (private)

Definition at line 832 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Finished(), NnetBatchDecoder::ProcessOutputUtterance(), and NnetBatchDecoder::~NnetBatchDecoder().

`std::list<UtteranceOutput *> pending_utts_` (private)

Definition at line 819 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::AcceptInput(), NnetBatchDecoder::Decode(), NnetBatchDecoder::GetOutput(), and NnetBatchDecoder::~NnetBatchDecoder().

`double priority_offset_` (private)

Definition at line 826 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::SetPriorities(), and NnetBatchDecoder::UpdatePriorityOffset().

`std::mutex stats_mutex_` (private)

Definition at line 837 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Decode(), NnetBatchDecoder::ProcessOutputUtterance(), and NnetBatchDecoder::UtteranceFailed().

`bool tasks_finished_` (private)

Definition at line 810 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Compute(), and NnetBatchDecoder::Finished().

`Semaphore tasks_ready_semaphore_` (private)

Definition at line 803 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Compute(), NnetBatchDecoder::Decode(), and NnetBatchDecoder::Finished().

`Timer timer_` (private)

Definition at line 839 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::~NnetBatchDecoder().

`double tot_like_` (private)

Definition at line 829 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::ProcessOutputUtterance(), and NnetBatchDecoder::~NnetBatchDecoder().

`const TransitionModel &trans_model_` (private)

Definition at line 778 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::Decode(), and NnetBatchDecoder::ProcessOutputUtterance().

`const fst::SymbolTable *word_syms_` (private)

Definition at line 779 of file nnet-batch-compute.h.

Referenced by NnetBatchDecoder::ProcessOutputUtterance().