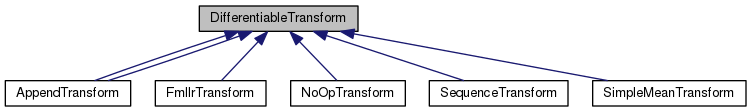

This class is for speaker-dependent feature-space transformations – principally various forms of fMLLR, including mean-only, diagonal and block-diagonal versions – which are intended for placement in the bottleneck of a neural net.

#include <differentiable-transform.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| virtual int32 | Dim () const =0 | Return the dimension of the input and output features. |
| int32 | NumClasses () const | Return the number of classes in the model used for adaptation. |
| virtual void | SetNumClasses (int32 num_classes) | This can be used to change the number of classes. |
| virtual MinibatchInfoItf * | TrainingForward (const CuMatrixBase< BaseFloat > &input, int32 num_chunks, int32 num_spk, const Posterior &posteriors, CuMatrixBase< BaseFloat > *output) const =0 | This is the function you call at training time, for the forward pass; it adapts the features. |
| virtual void | TrainingBackward (const CuMatrixBase< BaseFloat > &input, const CuMatrixBase< BaseFloat > &output_deriv, int32 num_chunks, int32 num_spk, const Posterior &posteriors, const MinibatchInfoItf &minibatch_info, CuMatrixBase< BaseFloat > *input_deriv) const =0 | This does the backpropagation, during the training pass. |
| virtual int32 | NumFinalIterations ()=0 | Returns the number of passes needed, where each pass consists of calling Accumulate() on a subset of the data and then calling Estimate(). |
| virtual void | Accumulate (int32 final_iter, const CuMatrixBase< BaseFloat > &input, int32 num_chunks, int32 num_spk, const Posterior &posteriors)=0 | This will typically be called sequentially, minibatch by minibatch, for a subset of training data, after training the neural nets, followed by a call to Estimate(). |
| virtual void | Estimate (int32 final_iter)=0 | |
| virtual SpeakerStatsItf * | GetEmptySpeakerStats ()=0 | |
| virtual void | TestingAccumulate (const MatrixBase< BaseFloat > &input, const Posterior &posteriors, SpeakerStatsItf *speaker_stats) const =0 | |
| virtual void | TestingForward (const MatrixBase< BaseFloat > &input, const SpeakerStatsItf &speaker_stats, MatrixBase< BaseFloat > *output) const =0 | |
| virtual DifferentiableTransform * | Copy () const =0 | |
| virtual void | Write (std::ostream &os, bool binary) const =0 | |
| virtual void | Read (std::istream &is, bool binary)=0 | |

Static Public Member Functions

| Type | Member |
|---|---|
| static DifferentiableTransform * | ReadNew (std::istream &is, bool binary) |
| static DifferentiableTransform * | NewTransformOfType (const std::string &type) |

Protected Attributes

| Type | Member |
|---|---|
| int32 | num_classes_ |

This class is for speaker-dependent feature-space transformations – principally various forms of fMLLR, including mean-only, diagonal and block-diagonal versions – which are intended for placement in the bottleneck of a neural net.

So code-wise, we'd have: bottom neural net, then transform, then top neural net. The transform is designed to be differentiable, i.e. it can be used during training to propagate derivatives from the top neural net down to the bottom neural net. The reason this is non-trivial (i.e. why it's not just a matrix multiplication) is that the value of the transform itself depends on the features, and also on the speaker-independent statistics for each class (i.e. the mean and variance), which also depend on the features. You can view this as an extension of things like BatchNorm, except that the interface is more complicated because there is a dependence on the per-frame class labels.
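The following sketch shows why the derivative is more than a matrix multiplication. It implements a toy one-dimensional, mean-only speaker shift for a single speaker as standalone C++. `MeanShift` is hypothetical and is not one of the classes listed on this page. The offset subtracted from every frame is a weighted average over all frames, so each output depends on every input frame. For simplicity the per-class means are held fixed here, whereas in the real transform they also depend on the features.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy, one-dimensional "mean-only" adaptation for a single speaker.
// Frame t has feature x[t], a class label c[t] and a weight g[t].
// The class means mu[] are treated as fixed constants (a simplification).
struct MeanShift {
  std::vector<double> mu;  // per-class means, assumed given

  // offset = sum_t g[t] * (x[t] - mu[c[t]]) / sum_t g[t];  y[t] = x[t] - offset.
  std::vector<double> Forward(const std::vector<double> &x,
                              const std::vector<int> &c,
                              const std::vector<double> &g) const {
    assert(x.size() == c.size() && x.size() == g.size());
    double num = 0.0, den = 0.0;
    for (std::size_t t = 0; t < x.size(); ++t) {
      num += g[t] * (x[t] - mu[c[t]]);
      den += g[t];
    }
    assert(den > 0.0);
    const double offset = num / den;
    std::vector<double> y(x.size());
    for (std::size_t t = 0; t < x.size(); ++t) y[t] = x[t] - offset;
    return y;
  }

  // Since the offset depends on every x[s], dy[t]/dx[s] = delta(t,s) - g[s]/G
  // with G = sum_t g[t]. Hence dF/dx[s] = dF/dy[s] - (g[s]/G) * sum_t dF/dy[t].
  // The result is *added* to x_deriv.
  void Backward(const std::vector<double> &g,
                const std::vector<double> &y_deriv,
                std::vector<double> *x_deriv) const {
    assert(g.size() == y_deriv.size() && x_deriv->size() == g.size());
    double den = 0.0, deriv_sum = 0.0;
    for (std::size_t t = 0; t < g.size(); ++t) {
      den += g[t];
      deriv_sum += y_deriv[t];
    }
    assert(den > 0.0);
    for (std::size_t s = 0; s < g.size(); ++s)
      (*x_deriv)[s] += y_deriv[s] - (g[s] / den) * deriv_sum;
  }
};
```

A finite-difference check on Forward() is a cheap way to confirm a hand-derived Backward() like this one.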

The class labels we'll use here will probably be derived from some kind of minimal tree, with hundreds rather than thousands of states. Part of the reason for using a smaller number of states is that, to make the transform properly differentiable during training, we need few enough states that we can obtain a reasonable estimate of the mean and variance of a Gaussian for each one at training time. In any case, it's generally better for this kind of thing to use "simple target models" for adaptation (see http://isl.anthropomatik.kit.edu/pdf/Nguyen2017.pdf).

Note: for training utterances we'll generally get the class labels used for adaptation in a supervised manner, either from an alignment produced by a previous system such as a GMM system, or from the (soft) posteriors of the numerator graphs. At test time, we'll usually be getting these class labels from some kind of unsupervised process.
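In the supervised case, a small helper can turn a hard alignment into the Posterior format (one list of (class, weight) pairs per frame). `AlignmentToWeightedPosterior` is a hypothetical name, and `int32`/`BaseFloat` are local stand-ins for the Kaldi typedefs. The silence down-weighting shown here is one way of using the fact, noted under TrainingForward(), that posteriors need not sum to one.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>
#include <utility>
#include <vector>

using int32 = std::int32_t;  // stand-in for Kaldi's int32
using BaseFloat = float;     // stand-in for Kaldi's BaseFloat
// One list of (class, weight) pairs per frame.
using Posterior = std::vector<std::vector<std::pair<int32, BaseFloat>>>;

// Hypothetical helper: convert a hard per-frame class alignment into Posterior
// form, giving frames whose class is in 'silence_classes' a reduced weight.
Posterior AlignmentToWeightedPosterior(const std::vector<int32> &alignment,
                                       const std::set<int32> &silence_classes,
                                       BaseFloat silence_weight) {
  assert(silence_weight >= 0.0f);
  Posterior post(alignment.size());
  for (std::size_t t = 0; t < alignment.size(); ++t) {
    const int32 c = alignment[t];
    const BaseFloat w = silence_classes.count(c) ? silence_weight : 1.0f;
    if (w > 0.0f) post[t].emplace_back(c, w);  // zero-weight frames stay empty
  }
  return post;
}
```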

Because we tend to train neural nets on fairly small fixed-size chunks (e.g. 1.5 seconds), and transforms like fMLLR don't tend to work very well until you have about 5 seconds of data, we will usually be arranging those chunks into groups where all members of the group come from the same speaker.
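Here is a worked example of that grouping. With 1.5-second chunks and roughly 5 seconds of speech needed per speaker, each speaker group needs ceil(5 / 1.5) = 4 chunks, or 6 seconds. The helper below is a hypothetical sketch of that arithmetic and is not part of the class.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: the smallest number of fixed-size chunks whose total
// duration reaches the amount of speech a transform like fMLLR tends to need.
int MinChunksPerSpeaker(double chunk_seconds, double min_speaker_seconds) {
  assert(chunk_seconds > 0.0 && min_speaker_seconds > 0.0);
  return static_cast<int>(std::ceil(min_speaker_seconds / chunk_seconds));
}
```

A minibatch built this way would then have num_chunks = num_spk * MinChunksPerSpeaker(...).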

Definition at line 85 of file differentiable-transform.h.

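virtual void Accumulate (int32 final_iter, const CuMatrixBase< BaseFloat > &input, int32 num_chunks, int32 num_spk, const Posterior &posteriors) [pure virtual]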

This will typically be called sequentially, minibatch by minibatch, for a subset of training data, after training the neural nets, followed by a call to Estimate().

Accumulate() stores statistics that are used by Estimate(). This process is analogous to computing the final stats in BatchNorm, in preparation for testing. In practice it will be doing things like computing per-class means and variances.

| [in] | final_iter | An iteration number in the range [0, NumFinalIterations() - 1]. In many cases there will be only one iteration, so this will just be zero. |

The input parameters are the same as the same-named parameters to TrainingForward(); please refer to the documentation there.

Implemented in FmllrTransform, AppendTransform, SequenceTransform, and NoOpTransform.
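The next sketch shows the kind of statistics Accumulate() and Estimate() might keep. It assumes soft class posteriors and diagonal per-class Gaussians. `ClassStats` is a hypothetical standalone class that uses plain `std::vector` instead of Kaldi matrix types. It is not how FmllrTransform or any other implementation listed here actually stores its stats.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

using int32 = std::int32_t;  // stand-in for Kaldi's int32
using Posterior = std::vector<std::vector<std::pair<int32, float>>>;

// Hypothetical per-class stats: weighted count, sum and sum of squares.
class ClassStats {
 public:
  ClassStats(int num_classes, int dim)
      : dim_(dim), count_(num_classes, 0.0),
        sum_(num_classes, std::vector<double>(dim, 0.0)),
        sumsq_(num_classes, std::vector<double>(dim, 0.0)) {}

  // Called once per minibatch; row t of 'feats' lines up with post[t].
  void Accumulate(const std::vector<std::vector<double>> &feats,
                  const Posterior &post) {
    assert(feats.size() == post.size());
    for (std::size_t t = 0; t < feats.size(); ++t) {
      assert(static_cast<int>(feats[t].size()) == dim_);
      for (const auto &pr : post[t]) {
        const int32 c = pr.first;
        const double w = pr.second;
        count_[c] += w;
        for (int d = 0; d < dim_; ++d) {
          sum_[c][d] += w * feats[t][d];
          sumsq_[c][d] += w * feats[t][d] * feats[t][d];
        }
      }
    }
  }

  // After all minibatches: mean = sum / count, var = sumsq / count - mean^2.
  void Estimate(std::vector<std::vector<double>> *means,
                std::vector<std::vector<double>> *vars) const {
    means->assign(count_.size(), std::vector<double>(dim_, 0.0));
    vars->assign(count_.size(), std::vector<double>(dim_, 0.0));
    for (std::size_t c = 0; c < count_.size(); ++c) {
      if (count_[c] <= 0.0) continue;  // unseen class: leave zeros
      for (int d = 0; d < dim_; ++d) {
        const double m = sum_[c][d] / count_[c];
        (*means)[c][d] = m;
        (*vars)[c][d] = sumsq_[c][d] / count_[c] - m * m;
      }
    }
  }

 private:
  int dim_;
  std::vector<double> count_;
  std::vector<std::vector<double>> sum_, sumsq_;
};
```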

virtual DifferentiableTransform * Copy () const [pure virtual]

Implemented in FmllrTransform, SimpleMeanTransform, AppendTransform, AppendTransform, SequenceTransform, and NoOpTransform.

virtual int32 Dim () const [pure virtual]

Return the dimension of the input and output features.

Implemented in FmllrTransform, SimpleMeanTransform, AppendTransform, AppendTransform, SequenceTransform, and NoOpTransform.

virtual void Estimate (int32 final_iter) [pure virtual]

Implemented in FmllrTransform, AppendTransform, SequenceTransform, and NoOpTransform.

virtual SpeakerStatsItf * GetEmptySpeakerStats () [pure virtual]

Implemented in FmllrTransform, SequenceTransform, and NoOpTransform.

static DifferentiableTransform * NewTransformOfType (const std::string &type) [static]

int32 NumClasses () const [inline]

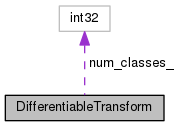

Return the number of classes in the model used for adaptation.

These will probably correspond to the leaves of a small tree, so they would be pdf-ids. This model only keeps track of the number of classes; it does not contain any information about what they mean. The integer class indices in the Posterior objects provided to this class are expected to be in the range 0 to NumClasses() - 1.
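Here is a hypothetical sanity check for that requirement. It assumes `num_classes` is the value returned by NumClasses(). The `Posterior` alias mirrors the vector-of-vector-of-pairs layout described under TrainingForward(), with `float` standing in for BaseFloat.

```cpp
#include <cassert>
#include <cstdint>
#include <utility>
#include <vector>

using int32 = std::int32_t;  // stand-in for Kaldi's int32
using Posterior = std::vector<std::vector<std::pair<int32, float>>>;

// Returns true if every class index in 'post' lies in [0, num_classes - 1].
bool PosteriorClassesInRange(const Posterior &post, int32 num_classes) {
  assert(num_classes > 0);
  for (const auto &frame : post)
    for (const auto &pr : frame)
      if (pr.first < 0 || pr.first >= num_classes) return false;
  return true;
}
```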

Definition at line 98 of file differentiable-transform.h.

virtual int32 NumFinalIterations () [pure virtual]

Returns the number of passes needed, where each pass consists of calling Accumulate() on a subset of the data and then calling Estimate().

Implemented in AppendTransform, SequenceTransform, and NoOpTransform.
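The calling protocol implied by NumFinalIterations(), Accumulate() and Estimate() can be sketched as a double loop. `ToyTransform` and `Minibatch` are hypothetical stand-ins that only count calls. The real methods also take the feature matrix, num_chunks, num_spk and the posteriors.

```cpp
#include <cassert>
#include <vector>

struct Minibatch { int id; };  // hypothetical placeholder for one minibatch

// Hypothetical stand-in exposing only the methods involved in the protocol.
class ToyTransform {
 public:
  explicit ToyTransform(int num_final_iters) : n_(num_final_iters) {}
  int NumFinalIterations() const { return n_; }
  void Accumulate(int final_iter, const Minibatch &) {
    assert(final_iter >= 0 && final_iter < n_);
    ++accumulate_calls;
  }
  void Estimate(int final_iter) {
    assert(final_iter >= 0 && final_iter < n_);
    ++estimate_calls;
  }
  int accumulate_calls = 0, estimate_calls = 0;

 private:
  int n_;
};

// For each final iteration: accumulate minibatch by minibatch over a subset
// of the training data, then call Estimate() once for that iteration.
void RunFinalIterations(ToyTransform *transform,
                        const std::vector<Minibatch> &subset) {
  for (int iter = 0; iter < transform->NumFinalIterations(); ++iter) {
    for (const Minibatch &mb : subset) transform->Accumulate(iter, mb);
    transform->Estimate(iter);
  }
}
```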

virtual void Read (std::istream &is, bool binary) [pure virtual]

Implemented in FmllrTransform, SimpleMeanTransform, AppendTransform, AppendTransform, SequenceTransform, and NoOpTransform.

static DifferentiableTransform * ReadNew (std::istream &is, bool binary) [static]

virtual void SetNumClasses (int32 num_classes) [inline, virtual]

This can be used to change the number of classes.

It would normally be used, if at all, after the model is trained and prior to calling Accumulate(), in case you want to use a more detailed model (e.g. the normal-size tree instead of the small one that we use during training). Child classes may want to override this, in case they need to do something more than just set this variable.

Definition at line 107 of file differentiable-transform.h.

References kaldi::cu::Copy().
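This sketch shows the kind of override the comment above has in mind. It assumes a child class whose per-class statistics must be resized when the number of classes changes. `BaseTransform` and `PerClassStatsTransform` are hypothetical simplifications, not the real class hierarchy.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using int32 = std::int32_t;  // stand-in for Kaldi's int32

class BaseTransform {
 public:
  virtual ~BaseTransform() = default;
  int32 NumClasses() const { return num_classes_; }
  virtual void SetNumClasses(int32 num_classes) { num_classes_ = num_classes; }

 protected:
  int32 num_classes_ = 0;
};

class PerClassStatsTransform : public BaseTransform {
 public:
  explicit PerClassStatsTransform(int32 dim) : dim_(dim) {}

  // Besides setting num_classes_, resize (and reset) the per-class stats,
  // e.g. before re-running Accumulate() with a larger tree.
  void SetNumClasses(int32 num_classes) override {
    assert(num_classes > 0);
    BaseTransform::SetNumClasses(num_classes);
    class_means_.assign(static_cast<std::size_t>(num_classes),
                        std::vector<double>(static_cast<std::size_t>(dim_), 0.0));
  }
  const std::vector<std::vector<double>> &ClassMeans() const { return class_means_; }

 private:
  int32 dim_;
  std::vector<std::vector<double>> class_means_;
};
```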

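virtual void TestingAccumulate (const MatrixBase< BaseFloat > &input, const Posterior &posteriors, SpeakerStatsItf *speaker_stats) const [pure virtual]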

Implemented in FmllrTransform, SequenceTransform, and NoOpTransform.

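virtual void TestingForward (const MatrixBase< BaseFloat > &input, const SpeakerStatsItf &speaker_stats, MatrixBase< BaseFloat > *output) const [pure virtual]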

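virtual void TrainingBackward (const CuMatrixBase< BaseFloat > &input, const CuMatrixBase< BaseFloat > &output_deriv, int32 num_chunks, int32 num_spk, const Posterior &posteriors, const MinibatchInfoItf &minibatch_info, CuMatrixBase< BaseFloat > *input_deriv) const [pure virtual]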

This does the backpropagation, during the training pass.

| [in] | input | The original input (pre-transform) features that were given to TrainingForward(). |

| [in] | output_deriv | The derivative of the objective function (that we are backpropagating) w.r.t. the output. |

| [in] | num_chunks,num_spk,posteriors | See TrainingForward() for information about these arguments; they should be the same values. |

| [in] | minibatch_info | The object returned by the corresponding call to TrainingForward(). The caller will likely want to delete that object after calling this function. |

| [in,out] | input_deriv | The derivative at the input, i.e. dF/d(input), where F is the function we are evaluating. Must have the same dimension as 'input'. The derivative is *added* to this matrix. This is useful because generally we will also be training (perhaps with less weight) on the unadapted features, in order to prevent them from deviating too far from the adapted ones and to allow the same model to be used for the first pass. |

Implemented in FmllrTransform, SimpleMeanTransform, AppendTransform, AppendTransform, SequenceTransform, and NoOpTransform.
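A toy transform can illustrate the ownership and accumulation rules for TrainingForward() and TrainingBackward(). `ScaleTransform` and `MinibatchInfo` are hypothetical stand-ins: a fixed scale replaces an adaptive transform, flat vectors replace CuMatrixBase, and most arguments are omitted. The sketch holds the returned info object in a `std::unique_ptr` so that it is deleted after the backward pass. Before TrainingBackward() adds to `in_deriv`, that vector already holds the weighted derivative from the unadapted branch.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

struct MinibatchInfo {  // plays the role of MinibatchInfoItf
  virtual ~MinibatchInfo() = default;
};

struct ScaleTransform {
  double scale;
  // Returns a newly allocated object that the caller owns.
  MinibatchInfo *TrainingForward(const std::vector<double> &in,
                                 std::vector<double> *out) const {
    out->resize(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) (*out)[i] = scale * in[i];
    return new MinibatchInfo();
  }
  // *Adds* dF/d(input) to in_deriv rather than overwriting it.
  void TrainingBackward(const std::vector<double> &out_deriv,
                        const MinibatchInfo &,
                        std::vector<double> *in_deriv) const {
    assert(in_deriv->size() == out_deriv.size());
    for (std::size_t i = 0; i < out_deriv.size(); ++i)
      (*in_deriv)[i] += scale * out_deriv[i];
  }
};

// in_deriv starts as the down-weighted derivative from the unadapted branch;
// the adapted branch's derivative is then added on top.
std::vector<double> CombinedInputDeriv(const ScaleTransform &tr,
                                       const std::vector<double> &input,
                                       const std::vector<double> &adapted_out_deriv,
                                       const std::vector<double> &unadapted_deriv,
                                       double unadapted_weight) {
  std::vector<double> adapted_out;
  std::unique_ptr<MinibatchInfo> info(tr.TrainingForward(input, &adapted_out));
  std::vector<double> in_deriv(unadapted_deriv.size());
  for (std::size_t i = 0; i < in_deriv.size(); ++i)
    in_deriv[i] = unadapted_weight * unadapted_deriv[i];
  tr.TrainingBackward(adapted_out_deriv, *info, &in_deriv);
  return in_deriv;  // 'info' is deleted automatically here
}
```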

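virtual MinibatchInfoItf * TrainingForward (const CuMatrixBase< BaseFloat > &input, int32 num_chunks, int32 num_spk, const Posterior &posteriors, CuMatrixBase< BaseFloat > *output) const [pure virtual]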

This is the function you call at training time, for the forward pass; it adapts the features.

By "training time" here, we assume you are training the 'bottom' neural net, that produces the features in 'input'; if you were not training it, it would be the same as test time as far as this function is concerned.

| [in] | input | The original, un-adapted features; these will typically be output by a neural net, the 'bottom' net in our terminology. This will correspond to a whole minibatch, consisting of multiple speakers and multiple sequences (chunks) per speaker. Caution: the input and output features, and the posteriors, are not ordered as one block per sequence but as one block per time frame, so the sequences are intercalated. |

| [in] | num_chunks | The number of individual sequences (e.g., chunks of speech) represented in 'input'. input.NumRows() will equal num_chunks times the number of time frames. |

| [in] | num_spk | The number of speakers. Must be greater than one, and must divide num_chunks. The number of chunks per speaker (num_chunks / num_spk) must be the same for all speakers, and the chunks for a speaker must be consecutive. |

| [in] | posteriors | (note: this is a vector of vector of pair<int32,BaseFloat>). This provides, in 'soft-count' form, the class supervision information that is used for the adaptation. posteriors.size() will be equal to input.NumRows(), and the ordering of its elements is the same as the ordering of the rows of input, i.e. the sequences are intercalated. There is no assumption that the posteriors sum to one; this allows you to do things like silence weighting. |

| [out] | output | The adapted output. This matrix should have the same dimensions as 'input'. |

Implemented in FmllrTransform, SimpleMeanTransform, AppendTransform, AppendTransform, SequenceTransform, and NoOpTransform.
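Two small index helpers can capture the intercalated row ordering described for 'input' and 'posteriors'. Both are hypothetical. They assume, as stated above, that the chunks for a speaker are consecutive and that every speaker has num_chunks / num_spk chunks.

```cpp
#include <cassert>
#include <cstdint>

using int32 = std::int32_t;  // stand-in for Kaldi's int32

// Rows are grouped by time frame; within a frame's block, chunks appear in
// order 0 .. num_chunks - 1.
int32 RowIndex(int32 frame, int32 chunk, int32 num_chunks) {
  assert(frame >= 0 && chunk >= 0 && chunk < num_chunks);
  return frame * num_chunks + chunk;
}

// Chunks for a speaker are consecutive and all speakers have the same number
// of chunks, so the speaker of a row follows from its chunk index.
int32 SpeakerOfRow(int32 row, int32 num_chunks, int32 num_spk) {
  assert(num_spk > 0 && num_chunks % num_spk == 0);
  const int32 chunk = row % num_chunks;
  const int32 chunks_per_spk = num_chunks / num_spk;
  return chunk / chunks_per_spk;
}
```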

virtual void Write (std::ostream &os, bool binary) const [pure virtual]

Implemented in FmllrTransform, SimpleMeanTransform, AppendTransform, AppendTransform, SequenceTransform, and NoOpTransform.

int32 num_classes_ [protected]

Definition at line 269 of file differentiable-transform.h.