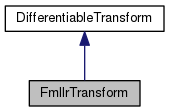

Notes on the math behind differentiable fMLLR transform.


#include <differentiable-transform.h>

| int32 | Dim () const override |
| | Return the dimension of the input and output features. |
| int32 | NumClasses () const override |
| MinibatchInfoItf * | TrainingForward (const CuMatrixBase< BaseFloat > &input, int32 num_chunks, int32 num_spk, const Posterior &posteriors, CuMatrixBase< BaseFloat > *output) const override |
| | This is the function you call in training time, for the forward pass; it adapts the features. |
| virtual void | TrainingBackward (const CuMatrixBase< BaseFloat > &input, const CuMatrixBase< BaseFloat > &output_deriv, int32 num_chunks, int32 num_spk, const Posterior &posteriors, const MinibatchInfoItf &minibatch_info, CuMatrixBase< BaseFloat > *input_deriv) const override |
| | This does the backpropagation, during the training pass. |
| void | Accumulate (int32 final_iter, const CuMatrixBase< BaseFloat > &input, int32 num_chunks, int32 num_spk, const Posterior &posteriors) override |
| | This will typically be called sequentially, minibatch by minibatch, for a subset of training data, after training the neural nets, followed by a call to Estimate(). |
| SpeakerStatsItf * | GetEmptySpeakerStats () override |
| void | TestingAccumulate (const MatrixBase< BaseFloat > &input, const Posterior &posteriors, SpeakerStatsItf *speaker_stats) const override |
| virtual void | TestingForward (const MatrixBase< BaseFloat > &input, const SpeakerStatsItf &speaker_stats, MatrixBase< BaseFloat > *output) override |
| void | Estimate (int32 final_iter) override |
| | FmllrTransform (const FmllrTransform &other) |
| DifferentiableTransform * | Copy () const override |
| void | Write (std::ostream &os, bool binary) const override |
| void | Read (std::istream &is, bool binary) override |
| int32 | NumClasses () const |
| | Return the number of classes in the model used for adaptation. |
| virtual void | SetNumClasses (int32 num_classes) |
| | This can be used to change the number of classes. |
| virtual int32 | NumFinalIterations ()=0 |
| | Returns the number of times you have to (call Accumulate() on a subset of data, then call Estimate()) |
| virtual void | TestingForward (const MatrixBase< BaseFloat > &input, const SpeakerStatsItf &speaker_stats, MatrixBase< BaseFloat > *output) const =0 |


Definition at line 602 of file differentiable-transform.h.

◆ FmllrTransform()

◆ Accumulate()

This will typically be called sequentially, minibatch by minibatch, for a subset of training data, after training the neural nets, followed by a call to Estimate().

Accumulate() stores statistics that are used by Estimate(). This process is analogous to computing the final stats in BatchNorm, in preparation for testing. In practice it will be doing things like computing per-class means and variances.

- Parameters

-

| [in] | final_iter | An iteration number in the range [0, NumFinalIterations()). In many cases there will be only one iteration, so this will just be zero. |

The input parameters are the same as the same-named parameters to TrainingForward(); please refer to the documentation there.

Implements DifferentiableTransform.
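The call order described above (accumulate over minibatches, then estimate, once per final iteration) can be sketched as follows. This is a minimal illustration only: `ToyTransform` and `RunFinalIterations` are hypothetical stand-ins that mirror the method names, not the Kaldi classes, and the iteration count of 2 is an arbitrary assumption.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for the interface described above. It only records
// the order of calls; it does not compute any real statistics.
struct ToyTransform {
  int num_final_iters = 2;       // assumed value, for illustration only
  std::vector<int> accumulated;  // number of minibatches seen per iteration
  std::vector<int> estimated;    // final_iter values passed to Estimate()

  int NumFinalIterations() const { return num_final_iters; }

  void Accumulate(int final_iter, const std::vector<float> &minibatch) {
    assert(final_iter >= 0 && final_iter < num_final_iters);
    if (static_cast<int>(accumulated.size()) <= final_iter)
      accumulated.resize(final_iter + 1, 0);
    accumulated[final_iter] += 1;
    (void)minibatch;  // a real transform would update its stats from this
  }

  void Estimate(int final_iter) { estimated.push_back(final_iter); }
};

// For each final iteration: accumulate over every minibatch in the chosen
// subset of training data, then call Estimate() once.
void RunFinalIterations(ToyTransform *t,
                        const std::vector<std::vector<float>> &minibatches) {
  for (int iter = 0; iter < t->NumFinalIterations(); ++iter) {
    for (const auto &mb : minibatches)
      t->Accumulate(iter, mb);
    t->Estimate(iter);
  }
}
```

With a single final iteration the outer loop runs once with `final_iter` equal to zero, which matches the common case mentioned in the parameter description.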

◆ Copy()

◆ Dim()

◆ Estimate()

| void Estimate (int32 final_iter) | inline override virtual |

◆ GetEmptySpeakerStats()

◆ NumClasses()

| int32 NumClasses () const | override |

◆ Read()

| void Read (std::istream &is, bool binary) | override virtual |

◆ TestingAccumulate()

◆ TestingForward()

◆ TrainingBackward()

This does the backpropagation, during the training pass.

- Parameters

-

| [in] | input | The original input (pre-transform) features that were given to TrainingForward(). |

| [in] | output_deriv | The derivative of the objective function (that we are backpropagating) w.r.t. the output. |

| [in] | num_chunks,num_spk,posteriors | See TrainingForward() for information about these arguments; they should be the same values. |

| [in] | minibatch_info | The object returned by the corresponding call to TrainingForward(). The caller will likely want to delete that object after calling this function. |

| [in,out] | input_deriv | The derivative at the input, i.e. dF/d(input), where F is the function we are evaluating. Must have the same dimension as 'input'. The derivative is *added* to this matrix rather than overwriting it. This is useful because generally we will also be training (perhaps with less weight) on the unadapted features, in order to prevent them from deviating too far from the adapted ones and to allow the same model to be used for the first pass. |

Implements DifferentiableTransform.
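The "added to" convention for `input_deriv` can be illustrated with a toy transform. The sketch below assumes a simple mean subtraction, y[i] = x[i] - mean(x), purely as a stand-in; it is not the fMLLR computation, and `ToyBackward` is a hypothetical name. The point is only that the backward pass accumulates into a buffer that may already hold the derivative from the unadapted path.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy stand-in for an adaptation transform: y[i] = x[i] - mean(x).
// NOT the fMLLR math; it only shows that the backward pass adds its
// result to input_deriv instead of overwriting it.
void ToyBackward(const std::vector<float> &output_deriv,
                 std::vector<float> *input_deriv) {
  assert(input_deriv != nullptr &&
         input_deriv->size() == output_deriv.size());
  if (output_deriv.empty()) return;

  float mean = 0.0f;
  for (float d : output_deriv) mean += d;
  mean /= static_cast<float>(output_deriv.size());

  // For mean subtraction, dF/dx[k] = dF/dy[k] - mean(dF/dy).
  for (std::size_t k = 0; k < output_deriv.size(); ++k)
    (*input_deriv)[k] += output_deriv[k] - mean;
}
```

A caller that also trains on the unadapted features would first write that path's (possibly down-weighted) derivative into `input_deriv`, then call the backward function so that both contributions are summed.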

◆ TrainingForward()

This is the function you call in training time, for the forward pass; it adapts the features.

By "training time" here, we assume you are training the 'bottom' neural net, that produces the features in 'input'; if you were not training it, it would be the same as test time as far as this function is concerned.

- Parameters

-

| [in] | input | The original, un-adapted features; these will typically be output by a neural net, the 'bottom' net in our terminology. This will correspond to a whole minibatch, consisting of multiple speakers and multiple sequences (chunks) per speaker. Caution: the rows of the input and output features, and the elements of the posteriors, are not ordered as one block per sequence but as one block per time frame, so the sequences are intercalated. |

| [in] | num_chunks | The number of individual sequences (e.g., chunks of speech) represented in 'input'. input.NumRows() will equal num_chunks times the number of time frames. |

| [in] | num_spk | The number of speakers. Must be greater than one, and must divide num_chunks. The number of chunks per speaker (num_chunks / num_spk) must be the same for all speakers, and the chunks for a speaker must be consecutive. |

| [in] | posteriors | (note: this is a vector of vector of pair<int32,BaseFloat>). This provides, in 'soft-count' form, the class supervision information that is used for the adaptation. posteriors.size() will be equal to input.NumRows(), and the ordering of its elements is the same as the ordering of the rows of input, i.e. the sequences are intercalated. There is no assumption that the posteriors sum to one; this allows you to do things like silence weighting. |

| [out] | output | The adapted output. This matrix should have the same dimensions as 'input'. |

- Returns

- This function returns either NULL or an object of type MinibatchInfoItf*, which is expected to be given to the function TrainingBackward(). It will store any information that will be needed in the backprop phase.

Implements DifferentiableTransform.
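The row ordering and the posterior format described above can be sketched with a few index helpers. This is an illustration under stated assumptions: chunks are assumed to appear in chunk-index order within each time-frame block, `int` and `float` stand in for `int32` and `BaseFloat`, and the helper names (`RowIndex`, `SpeakerOfChunk`, `SpeakerOfRow`, `ScaleClass`) are hypothetical, not part of the class.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Stand-in for the posterior type: one vector of (class, weight) pairs
// per row of 'input'.
using Posterior = std::vector<std::vector<std::pair<int, float>>>;

// Row of 'input' holding time frame t of chunk c, assuming one block of
// num_chunks rows per time frame (the intercalated layout).
inline int RowIndex(int t, int c, int num_chunks) {
  assert(t >= 0 && c >= 0 && c < num_chunks);
  return t * num_chunks + c;
}

// Speaker owning chunk c, assuming each speaker has num_chunks / num_spk
// consecutive chunks.
inline int SpeakerOfChunk(int c, int num_chunks, int num_spk) {
  assert(num_spk > 0 && num_chunks % num_spk == 0);
  return c / (num_chunks / num_spk);
}

// Speaker owning a given row of 'input'.
inline int SpeakerOfRow(int row, int num_chunks, int num_spk) {
  return SpeakerOfChunk(row % num_chunks, num_chunks, num_spk);
}

// Scales the weight of one class in every frame, e.g. to down-weight a
// silence class. Weights need not sum to one afterwards.
void ScaleClass(int class_id, float scale, Posterior *post) {
  assert(post != nullptr);
  for (auto &frame : *post)
    for (auto &entry : frame)
      if (entry.first == class_id) entry.second *= scale;
}
```

For example, with `num_chunks = 4` and `num_spk = 2`, frame 2 of chunk 1 sits at row 9 and belongs to speaker 0, while row 11 (chunk 3) belongs to speaker 1.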

◆ Write()

| void Write (std::ostream &os, bool binary) const | override virtual |

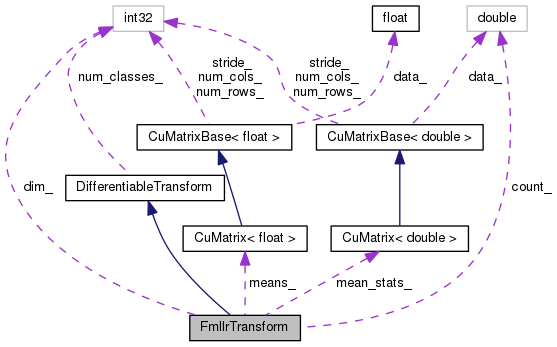

◆ count_

◆ dim_

◆ mean_stats_

◆ means_

The documentation for this class was generated from the following file:

- differentiable-transform.h

Public Member Functions inherited from DifferentiableTransform

Static Public Member Functions inherited from DifferentiableTransform

Protected Attributes inherited from DifferentiableTransform