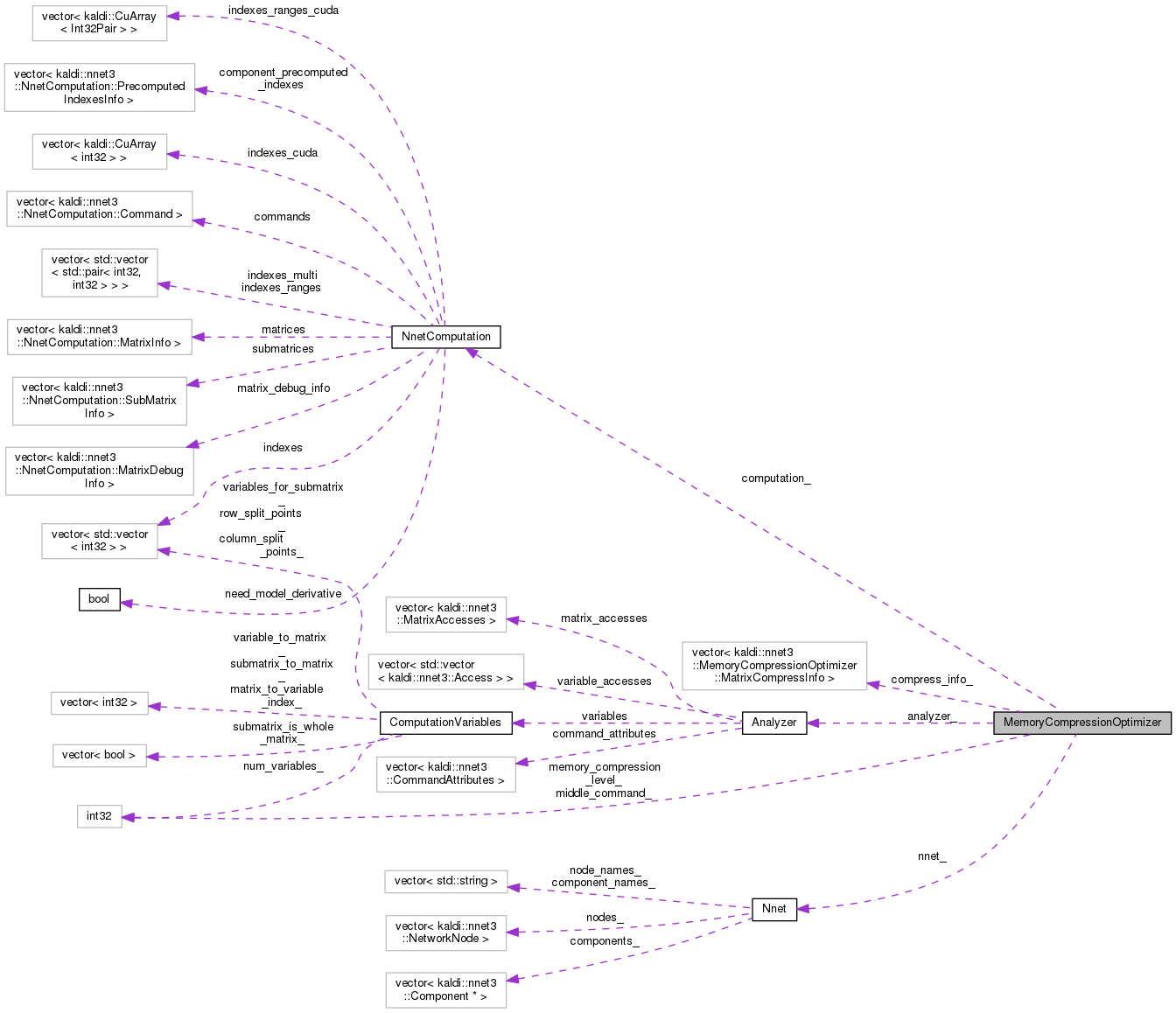

This class is used in the function OptimizeMemoryCompression(), once we determine that there is some potential to do memory compression for this computation.

Classes | |

| struct | MatrixCompressInfo |

Public Member Functions | |

| MemoryCompressionOptimizer (const Nnet &nnet, int32 memory_compression_level, int32 middle_command, NnetComputation *computation) | |

| void | Optimize () |

Private Member Functions | |

| void | ProcessMatrix (int32 m) |

| void | ModifyComputation () |

Private Attributes | |

| std::vector< MatrixCompressInfo > | compress_info_ |

| const Nnet & | nnet_ |

| int32 | memory_compression_level_ |

| int32 | middle_command_ |

| NnetComputation * | computation_ |

| Analyzer | analyzer_ |

Definition at line 4690 of file nnet-optimize-utils.cc.

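| MemoryCompressionOptimizer (const Nnet &nnet, int32 memory_compression_level, int32 middle_command, NnetComputation *computation) | inline |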

| [in] | nnet | The neural net the computation is for. |

| [in] | memory_compression_level | The level of compression: 0 = no compression (the constructor should not be called with this value); 1 = compression that doesn't affect the results (but still takes time); 2 = compression that affects the results only very slightly; 3 = compression that affects the results a little more. |

| [in] | middle_command | Must be the command-index of the command of type kNoOperationMarker in 'computation'. |

| [in,out] | computation | The computation we're optimizing. |

Definition at line 4703 of file nnet-optimize-utils.cc.

References kaldi::nnet3::Optimize().

| void ModifyComputation | ( | ) | private |

Definition at line 4769 of file nnet-optimize-utils.cc.

References MemoryCompressionOptimizer::MatrixCompressInfo::compression_command_index, MemoryCompressionOptimizer::MatrixCompressInfo::compression_type, DerivativeTimeLimiter::computation_, NnetComputation::GetWholeSubmatrices(), rnnlm::i, kaldi::nnet3::InsertCommands(), kaldi::nnet3::kCompressMatrix, kaldi::nnet3::kDecompressMatrix, MemoryCompressionOptimizer::MatrixCompressInfo::m, MemoryCompressionOptimizer::MatrixCompressInfo::range, MemoryCompressionOptimizer::MatrixCompressInfo::truncate, and MemoryCompressionOptimizer::MatrixCompressInfo::uncompression_command_index.
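
The references above (InsertCommands, kCompressMatrix, kDecompressMatrix, compression_command_index, uncompression_command_index) suggest that this function inserts a compress and a decompress command around the span where a matrix is unused. The following is a rough sketch of that idea only, not the Kaldi implementation: commands are plain strings, CompressPlan, InsertBefore and ApplyPlan are hypothetical names, and it assumes the compress command goes right after compression_command_index and the decompress command right before uncompression_command_index.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Stand-in: a command is just a label in this sketch.
typedef std::string Command;
typedef std::pair<int, Command> IndexedCommand;

// Hypothetical helper: inserts each command just before the given index of
// the ORIGINAL sequence (an index equal to commands.size() appends).
std::vector<Command> InsertBefore(std::vector<IndexedCommand> pairs,
                                  const std::vector<Command> &commands) {
  std::stable_sort(pairs.begin(), pairs.end(),
                   [](const IndexedCommand &a, const IndexedCommand &b) {
                     return a.first < b.first;
                   });
  assert(pairs.empty() ||
         (pairs.front().first >= 0 &&
          pairs.back().first <= static_cast<int>(commands.size())));
  std::vector<Command> out;
  out.reserve(commands.size() + pairs.size());
  size_t p = 0;
  for (size_t c = 0; c <= commands.size(); ++c) {
    // Emit everything scheduled before original command c.
    while (p < pairs.size() && static_cast<size_t>(pairs[p].first) == c)
      out.push_back(pairs[p++].second);
    if (c < commands.size()) out.push_back(commands[c]);
  }
  return out;
}

// Hypothetical, reduced version of the per-matrix bookkeeping.
struct CompressPlan {
  int compression_command_index;    // compress AFTER this command (assumed).
  int uncompression_command_index;  // decompress BEFORE this command (assumed).
};

std::vector<Command> ApplyPlan(const CompressPlan &plan,
                               const std::vector<Command> &commands) {
  std::vector<IndexedCommand> pairs;
  pairs.push_back(IndexedCommand(plan.compression_command_index + 1,
                                 "compress"));
  pairs.push_back(IndexedCommand(plan.uncompression_command_index,
                                 "decompress"));
  return InsertBefore(pairs, commands);
}
```

Collecting all insertions first and applying them in one pass keeps every index expressed in terms of the original command list, so earlier insertions do not shift later ones.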

| void Optimize | ( | ) |

Definition at line 4803 of file nnet-optimize-utils.cc.

References DerivativeTimeLimiter::computation_, NnetComputation::matrices, and DerivativeTimeLimiter::nnet_.

Referenced by kaldi::nnet3::OptimizeMemoryCompression().

| void ProcessMatrix | ( | int32 m | ) | private |

Definition at line 4813 of file nnet-optimize-utils.cc.

References Access::access_type, NnetComputation::Command::arg1, Access::command_index, NnetComputation::Command::command_type, NnetComputation::commands, DerivativeTimeLimiter::computation_, Nnet::GetComponent(), KALDI_ASSERT, kaldi::nnet3::kBackprop, kaldi::kCompressedMatrixInt16, kaldi::kCompressedMatrixUint8, kaldi::nnet3::kReadAccess, DerivativeTimeLimiter::nnet_, and Component::Type().

| Analyzer analyzer_ | private |

Definition at line 4765 of file nnet-optimize-utils.cc.

| std::vector< MatrixCompressInfo > compress_info_ | private |

Definition at line 4759 of file nnet-optimize-utils.cc.

| NnetComputation * computation_ | private |

Definition at line 4764 of file nnet-optimize-utils.cc.

| int32 memory_compression_level_ | private |

Definition at line 4762 of file nnet-optimize-utils.cc.

| int32 middle_command_ | private |

Definition at line 4763 of file nnet-optimize-utils.cc.

| const Nnet & nnet_ | private |

Definition at line 4761 of file nnet-optimize-utils.cc.