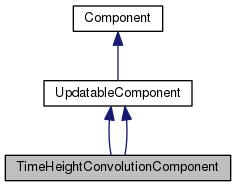

TimeHeightConvolutionComponent implements 2-dimensional convolution where one of the dimensions of convolution (which traditionally would be called the width axis) is identified with time (i.e. the 't' component of Indexes).

#include <nnet-convolutional-component-temp.h>

Classes | |

| class | PrecomputedIndexes |

Public Member Functions | |

| TimeHeightConvolutionComponent () | |

| TimeHeightConvolutionComponent (const TimeHeightConvolutionComponent &other) | |

| virtual int32 | InputDim () const |

| Returns the input dimension of this component. | |

| virtual int32 | OutputDim () const |

| Returns the output dimension of this component. | |

| virtual std::string | Info () const |

| Returns some text-form information about this component, for diagnostics. | |

| virtual void | InitFromConfig (ConfigLine *cfl) |

| Initializes from a ConfigLine object. | |

| virtual std::string | Type () const |

| Returns a string such as "SigmoidComponent", describing the type of the object. | |

| virtual int32 | Properties () const |

| Returns a bitmask of the component's properties. | |

| virtual void * | Propagate (const ComponentPrecomputedIndexes *indexes, const CuMatrixBase< BaseFloat > &in, CuMatrixBase< BaseFloat > *out) const |

| Propagate function. | |

| virtual void | Backprop (const std::string &debug_info, const ComponentPrecomputedIndexes *indexes, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_value, const CuMatrixBase< BaseFloat > &out_deriv, void *memo, Component *to_update, CuMatrixBase< BaseFloat > *in_deriv) const |

| Backprop function; depending on which of the arguments 'to_update' and 'in_deriv' are non-NULL, this can compute input-data derivatives and/or perform model update. | |

| virtual void | Read (std::istream &is, bool binary) |

| Read function (used after we know the type of the Component); accepts input that is missing the token that describes the component type, in case it has already been consumed. | |

| virtual void | Write (std::ostream &os, bool binary) const |

| Writes the component to a stream. | |

| virtual Component * | Copy () const |

| Copies the component (deep copy). | |

| virtual void | ReorderIndexes (std::vector< Index > *input_indexes, std::vector< Index > *output_indexes) const |

| This function only does something interesting for non-simple Components. | |

| virtual void | GetInputIndexes (const MiscComputationInfo &misc_info, const Index &output_index, std::vector< Index > *desired_indexes) const |

| This function only does something interesting for non-simple Components. | |

| virtual bool | IsComputable (const MiscComputationInfo &misc_info, const Index &output_index, const IndexSet &input_index_set, std::vector< Index > *used_inputs) const |

| This function only does something interesting for non-simple Components, and it exists to make it possible to manage optionally-required inputs. | |

| virtual ComponentPrecomputedIndexes * | PrecomputeIndexes (const MiscComputationInfo &misc_info, const std::vector< Index > &input_indexes, const std::vector< Index > &output_indexes, bool need_backprop) const |

| This function must return NULL for simple Components. | |

| virtual void | Scale (BaseFloat scale) |

| When called on an UpdatableComponent, this virtual function scales the parameters by "scale". | |

| virtual void | Add (BaseFloat alpha, const Component &other) |

| When called on an UpdatableComponent, this virtual function adds the parameters of another updatable component, times some constant, to the current parameters. | |

| virtual void | PerturbParams (BaseFloat stddev) |

| This function is to be used in testing. | |

| virtual BaseFloat | DotProduct (const UpdatableComponent &other) const |

| Computes the dot-product between the parameters of two instances of a Component. | |

| virtual int32 | NumParameters () const |

| Returns the total dimension of the parameters in this class. | |

| virtual void | Vectorize (VectorBase< BaseFloat > *params) const |

| Turns the parameters into vector form. | |

| virtual void | UnVectorize (const VectorBase< BaseFloat > &params) |

| Converts the parameters from vector form. | |

| virtual void | FreezeNaturalGradient (bool freeze) |

| Freezes or unfreezes natural-gradient updates, if applicable (to be overridden by components that use natural gradient). | |

| void | ScaleLinearParams (BaseFloat alpha) |

| void | ConsolidateMemory () |

| This virtual function relates to memory management and avoiding fragmentation. | |
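Vectorize() and UnVectorize() flatten the parameters to and from a single vector whose length is NumParameters(). The sketch below shows that round trip on plain std::vector storage; the function names are hypothetical, and the ordering (linear parameters first, row by row, then the bias terms) is an assumption made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helpers, not Kaldi's API. Assumed layout: the linear
// parameters (row-major) come first, followed by the bias terms, so the total
// length equals linear.size() + bias.size().
std::vector<float> VectorizeParams(const std::vector<float> &linear,
                                   const std::vector<float> &bias) {
  std::vector<float> params;
  params.reserve(linear.size() + bias.size());
  params.insert(params.end(), linear.begin(), linear.end());
  params.insert(params.end(), bias.begin(), bias.end());
  return params;
}

// Inverse of VectorizeParams(): *linear and *bias must already have their
// final sizes, and params must contain exactly that many values in total.
void UnVectorizeParams(const std::vector<float> &params,
                       std::vector<float> *linear, std::vector<float> *bias) {
  assert(params.size() == linear->size() + bias->size());
  auto split = params.begin() + static_cast<std::ptrdiff_t>(linear->size());
  std::copy(params.begin(), split, linear->begin());
  std::copy(split, params.end(), bias->begin());
}
```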


Public Member Functions inherited from UpdatableComponent | |

| UpdatableComponent (const UpdatableComponent &other) | |

| UpdatableComponent () | |

| virtual | ~UpdatableComponent () |

| virtual void | SetUnderlyingLearningRate (BaseFloat lrate) |

| Sets the learning rate of gradient descent; it gets multiplied by learning_rate_factor_. | |

| virtual void | SetActualLearningRate (BaseFloat lrate) |

| Sets the learning rate directly, bypassing learning_rate_factor_. | |

| virtual void | SetAsGradient () |

| Sets is_gradient_ to true and sets learning_rate_ to 1, ignoring learning_rate_factor_. | |

| virtual BaseFloat | LearningRateFactor () |

| virtual void | SetLearningRateFactor (BaseFloat lrate_factor) |

| void | SetUpdatableConfigs (const UpdatableComponent &other) |

| BaseFloat | LearningRate () const |

| Gets the learning rate to be used in gradient descent. | |

| BaseFloat | MaxChange () const |

| Returns the per-component max-change value, which is interpreted as the maximum change (in l2 norm) in parameters that is allowed per minibatch for this component. | |

| void | SetMaxChange (BaseFloat max_change) |

| BaseFloat | L2Regularization () const |

| Returns the l2 regularization constant, which may be set in any updatable component (usually from the config file). | |

| void | SetL2Regularization (BaseFloat a) |

Public Member Functions inherited from Component | |

| virtual void | StoreStats (const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_value, void *memo) |

| This function may store stats on average activation values and, for some component types, the average value of the derivative of the nonlinearity. | |

| virtual void | ZeroStats () |

| Components that provide an implementation of StoreStats() should also provide an implementation of ZeroStats(), to set those stats to zero. | |

| virtual void | DeleteMemo (void *memo) const |

| This virtual function only needs to be overridden by Components that return a non-NULL memo from their Propagate() function. | |

| Component () | |

| virtual | ~Component () |

Private Member Functions | |

| void | Check () const |

| void | ComputeDerived () |

| void | UpdateNaturalGradient (const PrecomputedIndexes &indexes, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_deriv) |

| void | UpdateSimple (const PrecomputedIndexes &indexes, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_deriv) |

| void | InitUnit () |

| void | Check () const |

| void | ComputeDerived () |

| void | UpdateNaturalGradient (const PrecomputedIndexes &indexes, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_deriv) |

| void | UpdateSimple (const PrecomputedIndexes &indexes, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_deriv) |

| void | InitUnit () |

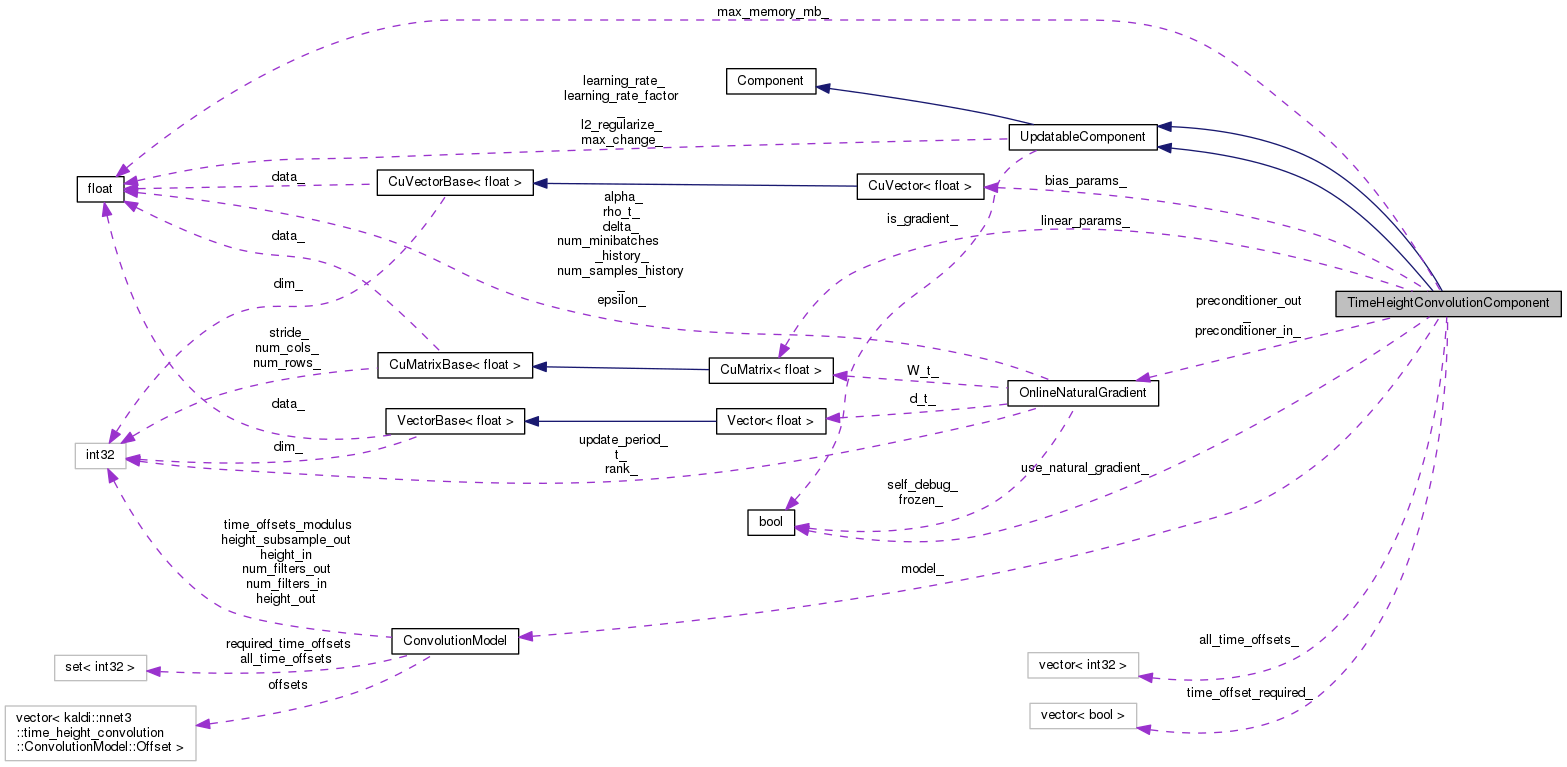

Private Attributes | |

| time_height_convolution::ConvolutionModel | model_ |

| std::vector< int32 > | all_time_offsets_ |

| std::vector< bool > | time_offset_required_ |

| CuMatrix< BaseFloat > | linear_params_ |

| CuVector< BaseFloat > | bias_params_ |

| BaseFloat | max_memory_mb_ |

| bool | use_natural_gradient_ |

| OnlineNaturalGradient | preconditioner_in_ |

| OnlineNaturalGradient | preconditioner_out_ |

Additional Inherited Members | |

Static Public Member Functions inherited from Component | |

| static Component * | ReadNew (std::istream &is, bool binary) |

| Reads a component from a stream (works out its type). Dies on error. | |

| static Component * | NewComponentOfType (const std::string &type) |

| Returns a new Component of the given type. | |

Protected Member Functions inherited from UpdatableComponent | |

| void | InitLearningRatesFromConfig (ConfigLine *cfl) |

| std::string | ReadUpdatableCommon (std::istream &is, bool binary) |

| void | WriteUpdatableCommon (std::ostream &is, bool binary) const |

Protected Attributes inherited from UpdatableComponent | |

| BaseFloat | learning_rate_ |

| Learning rate (typically 0.0 to 0.01). | |

| BaseFloat | learning_rate_factor_ |

| Learning rate factor (normally 1.0, but can be set to another value so that when you call SetLearningRate(), that value will be scaled by this factor). | |

| BaseFloat | l2_regularize_ |

| L2 regularization constant. | |

| bool | is_gradient_ |

| True if this component is to be treated as a gradient rather than as parameters. | |

| BaseFloat | max_change_ |

| Configuration value for imposing max-change. | |

TimeHeightConvolutionComponent implements 2-dimensional convolution where one of the dimensions of convolution (which traditionally would be called the width axis) is identified with time (i.e. the 't' component of Indexes). For a deeper understanding of how this works, please see convolution.h.

The following are the parameters accepted on the config line, with examples of their values.

Parameters inherited from UpdatableComponent (see the comment above the declaration of UpdatableComponent in nnet-component-itf.h for details): learning-rate, learning-rate-factor, max-change.

Convolution-related parameters:

num-filters-in: E.g. num-filters-in=32. The number of input filters (the number of separate versions of the input image). The filter dimension has stride 1 in the input and output vectors, i.e. we order the input as (all-filters-for-height=0, all-filters-for-height=1, etc.).

num-filters-out: E.g. num-filters-out=64. The number of output filters (the number of separate versions of the output image). As with the input, the filter dimension has stride 1.

height-in: E.g. height-in=40. The height of the input image. The width is not specified at the model level, as it is identified with "t" and is called the time axis; the width is determined by how many "t" values were available at the input of the network, and how many were requested at the output.

height-out: E.g. height-out=40. The height of the output image. It will normally be <= (the input height divided by height-subsample-out).

height-subsample-out: E.g. height-subsample-out=2 (defaults to 1). Subsampling factor on the height axis; e.g. you might set this to 2 if you are doing subsampling on this layer, which would involve discarding every other height increment at the output. There is no corresponding config for the time dimension, as time subsampling is determined by which 't' values you request at the output, together with the values of 'time-offsets' at different layers of the network.

height-offsets: E.g. height-offsets=-1,0,1. The set of height offsets that contribute to each output pixel: with the values -1,0,1, height 10 at the output would see data from heights 9,10,11 at the input. These values will normally be consecutive. Negative values imply zero-padding on the bottom of the image, since output-height 0 is always defined. Zero-padding at the top of the image is determined in a similar way (e.g. if height-in==height-out and height-offsets=-1,0,1, then there is 1 pixel of padding at the top and bottom of the image).

time-offsets: E.g. time-offsets=-1,0,1. The time offsets that we require at the input to produce a given output; these are comparable to the offsets used in TDNNs. Note that the time axis is always numbered using an absolute scheme, so that if there is subsampling on the time axis, then later in the network you'll see time-offsets like "-2,0,2" or "-4,0,4". Subsampling on the time axis is not explicitly specified but is implicit, based on tracking dependencies.

offsets: Setting 'offsets' is an alternative to setting both height-offsets and time-offsets, and is useful for configurations with less regularity. It is a semicolon-separated list of pairs (time-offset,height-offset) that might look like: -1,1;-1,0;-1,1;0,1;....;1,1

required-time-offsets: E.g. required-time-offsets=0 (defaults to the same value as time-offsets). This is a set of time offsets which, if specified, must be a nonempty subset of time-offsets; it determines whether zero-padding on the time axis is allowed in cases where there is insufficient input. If not specified it defaults to the same as 'time-offsets', meaning there is no zero-padding on the time axis. Note: for speech tasks we tend to pad on the time axis with repeats of the first or last frame, rather than zero; this is handled while preparing the data and not by the core components of the nnet3 framework. So for speech tasks we wouldn't normally set this value.

max-memory-mb: Maximum amount of temporary memory, in megabytes, that may be used as temporary matrices in the convolution computation. default=200.0.
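The stride-1 filter ordering can be made concrete with a small index helper. This is a standalone sketch, not Kaldi code: the struct and function names are hypothetical, and it assumes one matrix row per time index, with columns laid out as described under num-filters-in (all filters for height 0, then all filters for height 1, and so on).

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical shape descriptor; not part of Kaldi's API.
struct ImageShape {
  int32_t height;       // e.g. height-in or height-out
  int32_t num_filters;  // e.g. num-filters-in or num-filters-out
};

// Number of columns in one row (one time index): height * num_filters.
// Under the assumed layout this is the row dimension on that side.
int32_t RowDim(const ImageShape &s) { return s.height * s.num_filters; }

// Column of (height h, filter f) within a row. The filter index has stride 1,
// so all filters for height 0 come first, then those for height 1, etc.
int32_t ColumnIndex(const ImageShape &s, int32_t h, int32_t f) {
  assert(h >= 0 && h < s.height);
  assert(f >= 0 && f < s.num_filters);
  return h * s.num_filters + f;
}
```

For height-in=40 and num-filters-in=32, each input row then has 1280 columns, and filter 0 of height 1 sits at column 32.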

Initialization parameters:

param-stddev: Standard deviation of the linear parameters of the convolution. Defaults to sqrt(1.0 / (num-filters-in * num-height-offsets * num-time-offsets)), e.g. sqrt(1.0/(64*3*3)) for a 3x3 kernel with 64 input filters; this value will ensure that the output has unit stddev (in expectation, for independent inputs) if the input has unit stddev.

bias-stddev: Standard deviation of bias terms. default=0.0.

init-unit: Defaults to false. If true, it is required that num-filters-in equal num-filters-out and that there exist a (height, time) offset in the model equal to (0, 0). We will initialize the parameter matrix to be equivalent to the identity transform. In this case, param-stddev is ignored.
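The default param-stddev and the unit-variance claim can be checked numerically. For independent, unit-variance inputs, the variance of one output value equals the sum of squares of the weights feeding it, whose expectation is fan-in times stddev squared, i.e. exactly 1 with the default. The sketch below (hypothetical function names; the bias is ignored, since bias-stddev defaults to 0) computes the default and draws one random filter to confirm that its sum of squares lands near 1.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Default param-stddev as described in the text:
// sqrt(1.0 / (num-filters-in * num-height-offsets * num-time-offsets)).
double DefaultParamStddev(int num_filters_in, int num_height_offsets,
                          int num_time_offsets) {
  assert(num_filters_in > 0 && num_height_offsets > 0 && num_time_offsets > 0);
  return std::sqrt(1.0 / (static_cast<double>(num_filters_in) *
                          num_height_offsets * num_time_offsets));
}

// Draws one filter's weights (fan_in of them) with the given stddev and
// returns the sum of their squares. For independent unit-variance inputs this
// is the variance of the filter's output; its expectation is
// fan_in * stddev^2, which is 1.0 when stddev is the default above.
double OutputVarianceForRandomFilter(int fan_in, double stddev,
                                     unsigned seed) {
  std::mt19937 rng(seed);
  std::normal_distribution<double> gauss(0.0, stddev);
  double sum_sq = 0.0;
  for (int k = 0; k < fan_in; ++k) {
    double w = gauss(rng);
    sum_sq += w * w;
  }
  return sum_sq;
}
```

For the 3x3 kernel with 64 input filters this gives 1/24 (about 0.0417), and a single random filter's output variance typically falls within a few percent of 1.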

Natural-gradient related options are below; you won't normally have to set these.

use-natural-gradient: E.g. use-natural-gradient=false (defaults to true). You can set this to false to disable the natural gradient updates (you won't normally want to do this).

rank-out: Rank used in the low-rank-plus-unit estimate of the Fisher-matrix factor that has the dimension (num-rows of the parameter matrix), which equals num-filters-out. It defaults to the minimum of 80, or half of the number of output filters.

rank-in: Rank used in the low-rank-plus-unit estimate of the Fisher-matrix factor that has the dimension (num-cols of the parameter matrix), i.e. (num-input-filters * number of time-offsets * number of height-offsets + 1), e.g. num-input-filters * 3 * 3 + 1 for a 3x3 kernel (the +1 is for the bias term). It defaults to the minimum of 80, or half the num-rows of the parameter matrix. [note: I'm considering decreasing this default to e.g. 40 or 20].

num-minibatches-history: This is used to set the 'num_samples_history' configuration value of the natural gradient object. There is no concept of samples (frames) in the application of natural gradient to the convnet, because we do it all on the rows and columns of the derivative. default=4.0. A larger value means the Fisher matrix is averaged over more minibatches (it's an exponential-decay thing).

alpha-out: Constant that determines how much we smooth the Fisher-matrix factors with the unit matrix, for the space of dimension num-filters-out. default=4.0.

alpha-in: Constant that determines how much we smooth the Fisher-matrix factors with the unit matrix, for the space of dimension (num-filters-in * num-time-offsets * num-height-offsets + 1). default=4.0.
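The dimensions that the natural-gradient options refer to follow directly from the parameter shapes. This is a sketch under stated assumptions: the parameters are exactly linear_params_ (num-filters-out rows) plus bias_params_, num_offsets is num-time-offsets times num-height-offsets (or the number of pairs given via 'offsets'), and "half" in the rank-out default is taken as integer division. The struct and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical summary of the parameter geometry described above.
struct ConvParamDims {
  int32_t rows;              // num-filters-out: the rank-out / alpha-out space
  int32_t linear_cols;       // num-filters-in * number of offsets
  int32_t ng_in_dim;         // linear_cols + 1 (bias): the rank-in / alpha-in space
  int32_t num_params;        // linear parameters plus one bias per output filter
  int32_t default_rank_out;  // min(80, half of num-filters-out)
};

ConvParamDims ComputeParamDims(int32_t num_filters_in, int32_t num_filters_out,
                               int32_t num_offsets) {
  assert(num_filters_in > 0 && num_filters_out > 0 && num_offsets > 0);
  ConvParamDims d;
  d.rows = num_filters_out;
  d.linear_cols = num_filters_in * num_offsets;
  d.ng_in_dim = d.linear_cols + 1;
  d.num_params = d.rows * d.linear_cols + num_filters_out;
  d.default_rank_out = std::min<int32_t>(80, num_filters_out / 2);
  return d;
}
```

For 32 input filters, 64 output filters and a 3x3 kernel (9 offsets), this gives a 64 x 288 linear matrix, a 289-dimensional input-side space, 18496 parameters in total and a default rank-out of 32.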

Example of a 3x3 kernel with no subsampling, with zero-padding on both the height and time axes, and where there has previously been no subsampling on the time axis:

    num-filters-in=32 num-filters-out=64 height-in=28 height-out=28 \
      height-subsample-out=1 height-offsets=-1,0,1 time-offsets=-1,0,1 \
      required-time-offsets=0
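In the example above, required-time-offsets=0 means an output frame is computable as long as its own input frame exists, with missing neighbors zero-padded. A standalone sketch of that rule follows; the names are hypothetical, and it mirrors the description of required-time-offsets rather than Kaldi's actual IsComputable().

```cpp
#include <cassert>
#include <set>
#include <vector>

// Returns true if output frame t can be computed: every required offset must
// be available at the input. Missing non-required offsets would be
// zero-padded instead of blocking the computation.
bool OutputFrameComputable(int t, const std::vector<int> &time_offsets,
                           const std::vector<int> &required_time_offsets,
                           const std::set<int> &available_input_t) {
  for (int o : required_time_offsets) {
    // required-time-offsets must be a subset of time-offsets.
    bool listed = false;
    for (int a : time_offsets) listed = listed || (a == o);
    assert(listed);
    if (available_input_t.count(t + o) == 0) return false;
  }
  return true;
}

// Offsets whose input frame is missing for output frame t, i.e. the ones
// that would be zero-padded.
std::vector<int> ZeroPaddedOffsets(int t, const std::vector<int> &time_offsets,
                                   const std::set<int> &available_input_t) {
  std::vector<int> padded;
  for (int o : time_offsets)
    if (available_input_t.count(t + o) == 0) padded.push_back(o);
  return padded;
}
```

With input frames 0 to 9 and time-offsets=-1,0,1, output frame 0 is computable when only offset 0 is required (offset -1 is zero-padded), but not when all three offsets are required.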

Example of a 3x3 kernel with no subsampling, without zero-padding on either axis, and where there has *previously* been 2-fold subsampling on the time axis:

    num-filters-in=32 num-filters-out=64 height-in=20 height-out=18 \
      height-subsample-out=1 height-offsets=0,1,2 time-offsets=0,2,4

[note: above, the choice to have the time-offsets start at zero rather than be centered is just a choice: it assumes that at the output of the network you would want to request indexes with t=0, while at the input the t values start from zero.]

Example of a 3x3 kernel with subsampling on the height axis, without zero-padding on either axis, and where there has previously been 2-fold subsampling on the time axis:

    num-filters-in=32 num-filters-out=64 height-in=20 height-out=9 \
      height-subsample-out=2 height-offsets=0,1,2 time-offsets=0,2,4
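The three examples above differ in how output heights line up with input heights. As a sanity check on such configs, the standalone sketch below counts how many rows of zero-padding the height axis needs at the bottom and top. It assumes that output height h with height offset o reads input height h * height-subsample-out + o, which is consistent with the description of height-offsets and with the examples; the function and struct names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical result type: rows of zero-padding needed on each side.
struct HeightPadding {
  int bottom;  // input heights below 0 that are read
  int top;     // input heights at or above height_in that are read
};

HeightPadding ComputeHeightPadding(int height_in, int height_out,
                                   int height_subsample_out,
                                   const std::vector<int> &height_offsets) {
  assert(height_in > 0 && height_out > 0 && height_subsample_out > 0);
  assert(!height_offsets.empty());
  auto mm = std::minmax_element(height_offsets.begin(), height_offsets.end());
  // Lowest input height read: output height 0 with the smallest offset.
  int lowest = *mm.first;
  // Highest input height read: the last output height with the largest offset.
  int highest = (height_out - 1) * height_subsample_out + *mm.second;
  HeightPadding p;
  p.bottom = std::max(0, -lowest);
  p.top = std::max(0, highest - (height_in - 1));
  return p;
}
```

With the first example's values it reports one row of padding at each end; with the third example's values it reports none, whereas asking for height-out=10 there would require one padded row at the top.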

[note: subsampling on the time axis is not expressed in the layer itself: any time you increase the distance between time-offsets, like changing them from 0,1,2 to 0,2,4, you are effectively subsampling the previous layer, assuming you only request the output at one time value or at multiples of the total subsampling factor.]
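The dependency tracking that this note relies on can be mimicked in a few lines. This standalone sketch (hypothetical names; it is not nnet3's compiler) propagates requested 't' values backward through a stack of layers, each described only by its time-offsets.

```cpp
#include <cstddef>
#include <set>
#include <vector>

// Given the 't' values requested at one layer's output and that layer's
// time-offsets, returns the 't' values needed at the layer's input.
std::set<int> RequiredInputTimes(const std::set<int> &output_t,
                                 const std::vector<int> &time_offsets) {
  std::set<int> needed;
  for (int t : output_t)
    for (int o : time_offsets) needed.insert(t + o);
  return needed;
}

// layer_offsets[0] is the layer nearest the network input. The result is in
// backward order: element 0 holds the requested output 't' values, element k
// holds the 't' values needed k layers closer to the input, and the last
// element holds what the network input must provide.
std::vector<std::set<int>> TraceTimeDependencies(
    const std::set<int> &requested_t,
    const std::vector<std::vector<int>> &layer_offsets) {
  std::vector<std::set<int>> needed{requested_t};
  for (std::size_t k = layer_offsets.size(); k-- > 0;)
    needed.push_back(RequiredInputTimes(needed.back(), layer_offsets[k]));
  return needed;
}
```

With a first layer using time-offsets -1,0,1 and a second using 0,2,4, requesting only t=0 means the first layer is evaluated only at t=0,2,4, i.e. it is effectively subsampled by 2. Requesting t=0 and t=1 instead needs the first layer at every t from 0 to 5, so the subsampling disappears.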

Example of a 1x1 kernel:

    num-filters-in=64 num-filters-out=64 height-in=20 height-out=20 \
      height-subsample-out=1 height-offsets=0 time-offsets=0

Definition at line 212 of file nnet-convolutional-component-temp.h.

| TimeHeightConvolutionComponent | ( | | ) |

Definition at line 31 of file nnet-convolutional-component.cc.

Referenced by TimeHeightConvolutionComponent::Copy().

| TimeHeightConvolutionComponent | ( | const TimeHeightConvolutionComponent & | other | ) |

Definition at line 34 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::Check().

| TimeHeightConvolutionComponent | ( | const TimeHeightConvolutionComponent & | other | ) |

| virtual void Add | ( | BaseFloat alpha, const Component &other | ) | virtual |

When called on an UpdatableComponent, this virtual function adds the parameters of another updatable component, times some constant, to the current parameters.

When called on a NonlinearComponent (or another component that stores stats, like BatchNormComponent), it relates to adding stats. Otherwise it will normally do nothing.

Reimplemented from Component.

Definition at line 591 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, KALDI_ASSERT, and TimeHeightConvolutionComponent::linear_params_.

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().
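For the parameters themselves, Add() amounts to an axpy over both the linear and the bias parameters (its References line lists linear_params_ and bias_params_), and Scale() to an in-place scaling of the same values. A minimal sketch on plain vectors, with a hypothetical ConvParams struct standing in for the component:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the parameters: a row-major linear matrix plus a
// bias vector, mirroring linear_params_ and bias_params_.
struct ConvParams {
  int rows;
  int cols;
  std::vector<float> linear;  // rows * cols values, row-major
  std::vector<float> bias;    // rows values
};

// Analogue of Add(alpha, other): params += alpha * other.
void AddParams(float alpha, const ConvParams &other, ConvParams *params) {
  assert(params->rows == other.rows && params->cols == other.cols);
  for (std::size_t i = 0; i < params->linear.size(); ++i)
    params->linear[i] += alpha * other.linear[i];
  for (std::size_t i = 0; i < params->bias.size(); ++i)
    params->bias[i] += alpha * other.bias[i];
}

// Analogue of Scale(scale): params *= scale.
void ScaleParams(float scale, ConvParams *params) {
  for (float &x : params->linear) x *= scale;
  for (float &x : params->bias) x *= scale;
}
```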


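| virtual void Backprop | ( | const std::string &debug_info, const ComponentPrecomputedIndexes *indexes, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_value, const CuMatrixBase< BaseFloat > &out_deriv, void *memo, Component *to_update, CuMatrixBase< BaseFloat > *in_deriv | ) | const | virtual |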

Backprop function; depending on which of the arguments 'to_update' and 'in_deriv' are non-NULL, this can compute input-data derivatives and/or perform model update.

| [in] | debug_info | The component name, to be printed out in any warning messages. |

| [in] | indexes | A pointer to some information output by this class's PrecomputeIndexes function (will be NULL for simple components, i.e. those that don't do things like splicing). |

| [in] | in_value | The matrix that was given as input to the Propagate function. Will be ignored (and may be empty) if Properties()&kBackpropNeedsInput == 0. |

| [in] | out_value | The matrix that was output from the Propagate function. Will be ignored (and may be empty) if Properties()&kBackpropNeedsOutput == 0. |

| [in] | out_deriv | The derivative at the output of this component. |

| [in] | memo | This will normally be NULL, but for component types that set the flag kUsesMemo, this will be the return value of the Propagate() function that corresponds to this Backprop() function. Ownership of any pointers is not transferred to the Backprop function; DeleteMemo() will be called to delete it. |

| [out] | to_update | If model update is desired, the Component to be updated, else NULL. Does not have to be identical to this. If supplied, you can assume that to_update->Properties() & kUpdatableComponent is nonzero. |

| [out] | in_deriv | The derivative at the input of this component, if needed (else NULL). If Properties()&kBackpropInPlace, may be the same matrix as out_deriv. If Properties()&kBackpropAdds, this is added to by the Backprop routine, else it is set. The component code chooses which mode to work in, based on convenience. |

Implements Component.


Definition at line 301 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::PrecomputedIndexes::computation, kaldi::nnet3::time_height_convolution::ConvolveBackwardData(), UpdatableComponent::is_gradient_, KALDI_ASSERT, UpdatableComponent::learning_rate_, TimeHeightConvolutionComponent::linear_params_, NVTX_RANGE, TimeHeightConvolutionComponent::UpdateNaturalGradient(), TimeHeightConvolutionComponent::UpdateSimple(), and TimeHeightConvolutionComponent::use_natural_gradient_.

Referenced by TimeHeightConvolutionComponent::Properties(), and TdnnComponent::Properties().
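The in_deriv contract in the parameter table above (added to when Properties() includes kBackpropAdds, otherwise overwritten, and skipped when NULL) can be illustrated as below. The flag value here is a hypothetical stand-in; the real kBackpropAdds constant is defined in Kaldi's component interface, and its value is not reproduced.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical property bit standing in for kBackpropAdds; illustrative only.
const unsigned kHypBackpropAdds = 1u << 0;

// Stores a freshly computed input derivative into *in_deriv: nothing happens
// if in_deriv is NULL; with the "adds" property the result is accumulated,
// otherwise it overwrites whatever was there.
void StoreInputDeriv(unsigned properties, const std::vector<float> &computed,
                     std::vector<float> *in_deriv) {
  if (in_deriv == nullptr) return;  // caller did not request in_deriv
  assert(in_deriv->size() == computed.size());
  const bool adds = (properties & kHypBackpropAdds) != 0;
  for (std::size_t i = 0; i < computed.size(); ++i)
    (*in_deriv)[i] = adds ? (*in_deriv)[i] + computed[i] : computed[i];
}
```

Because the component code chooses which mode it works in, callers have to check the property bit rather than assume either behavior.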

| void Check | ( | | ) | const | private |

Definition at line 50 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, ConvolutionModel::Check(), KALDI_ASSERT, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::model_, ConvolutionModel::num_filters_out, ConvolutionModel::ParamCols(), and ConvolutionModel::ParamRows().

Referenced by TdnnComponent::OrthonormalConstraint(), TimeHeightConvolutionComponent::Read(), TimeHeightConvolutionComponent::ScaleLinearParams(), and TimeHeightConvolutionComponent::TimeHeightConvolutionComponent().

| void ComputeDerived | ( | | ) | private |

Definition at line 491 of file nnet-convolutional-component.cc.

References ConvolutionModel::all_time_offsets, TimeHeightConvolutionComponent::all_time_offsets_, rnnlm::i, TimeHeightConvolutionComponent::model_, ConvolutionModel::required_time_offsets, and TimeHeightConvolutionComponent::time_offset_required_.

Referenced by TimeHeightConvolutionComponent::InitFromConfig(), TimeHeightConvolutionComponent::Read(), and TimeHeightConvolutionComponent::ScaleLinearParams().


| void ConsolidateMemory | ( | | ) | virtual |

This virtual function relates to memory management, and avoiding fragmentation.

It is called only once per model, after the first minibatch of training. The default implementation does nothing, but child classes can override it to re-initialize quantities that may have been allocated during the forward pass (e.g. certain statistics, or OnlineNaturalGradient objects). We use our own CPU-based allocator (see cu-allocator.h), and since it cannot do paging (we are not in control of the GPU page table), fragmentation can be a problem. The allocator always tries to put things in 'low-address memory' (i.e. at smaller memory addresses) near the beginning of the block it allocated, to avoid fragmentation; but if permanent things (belonging to the model) are allocated in the forward pass, they can permanently stay in high memory. This function helps to prevent that by re-allocating those things into low-address memory. It is important that it is called after all the temporary buffers for the forward-backward pass have been freed, so that there is low-address memory available.

Reimplemented from Component.

Definition at line 669 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::preconditioner_in_, TimeHeightConvolutionComponent::preconditioner_out_, and OnlineNaturalGradient::Swap().

Referenced by TdnnComponent::OrthonormalConstraint(), and TimeHeightConvolutionComponent::ScaleLinearParams().
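The References line shows ConsolidateMemory() using OnlineNaturalGradient::Swap() on preconditioner_in_ and preconditioner_out_, which suggests a copy-and-swap: make a fresh copy (which a low-address-first allocator can place in low memory), swap it in, and let the old, possibly high-address, storage be freed. A generic sketch of that idiom with std::vector, using a hypothetical Buffers type in place of the real preconditioner objects:

```cpp
#include <vector>

// Hypothetical stand-in for an object whose storage may have been allocated
// during the first forward pass.
struct Buffers {
  std::vector<float> data;
  void Swap(Buffers *other) { data.swap(other->data); }
};

// Copy-and-swap: the copy receives a fresh allocation, the swap moves that
// allocation into *b, and the old storage is released when `fresh` goes out
// of scope at the end of the function. The contents are unchanged.
void Consolidate(Buffers *b) {
  Buffers fresh(*b);
  b->Swap(&fresh);
}
```

Where the new allocation actually lands depends entirely on the allocator; std::allocator makes no low-address guarantee, so this only shows the mechanics.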


| virtual Component * Copy | ( | | ) | const | inline virtual |

Copies component (deep copy).

Implements Component.

Definition at line 245 of file nnet-convolutional-component-temp.h.

References TimeHeightConvolutionComponent::Add(), TimeHeightConvolutionComponent::DotProduct(), TimeHeightConvolutionComponent::FreezeNaturalGradient(), TimeHeightConvolutionComponent::GetInputIndexes(), TimeHeightConvolutionComponent::IsComputable(), TimeHeightConvolutionComponent::NumParameters(), TimeHeightConvolutionComponent::PerturbParams(), TimeHeightConvolutionComponent::PrecomputeIndexes(), TimeHeightConvolutionComponent::ReorderIndexes(), TimeHeightConvolutionComponent::Scale(), TimeHeightConvolutionComponent::TimeHeightConvolutionComponent(), TimeHeightConvolutionComponent::UnVectorize(), and TimeHeightConvolutionComponent::Vectorize().

Copy() [inline, virtual]

Copies component (deep copy).

Implements Component.

Definition at line 245 of file nnet-convolutional-component.h.

References TimeHeightConvolutionComponent::Add(), TimeHeightConvolutionComponent::DotProduct(), TimeHeightConvolutionComponent::FreezeNaturalGradient(), TimeHeightConvolutionComponent::GetInputIndexes(), TimeHeightConvolutionComponent::IsComputable(), TimeHeightConvolutionComponent::NumParameters(), TimeHeightConvolutionComponent::PerturbParams(), TimeHeightConvolutionComponent::PrecomputeIndexes(), TimeHeightConvolutionComponent::ReorderIndexes(), TimeHeightConvolutionComponent::Scale(), TimeHeightConvolutionComponent::TimeHeightConvolutionComponent(), TimeHeightConvolutionComponent::UnVectorize(), and TimeHeightConvolutionComponent::Vectorize().
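The References lists above include the class's own copy constructor, which suggests the usual virtual-clone pattern: Copy() returns a newly allocated object built from `*this`. The following sketch shows that pattern with a hypothetical two-class hierarchy (`ToyComponent`, `ToyConvComponent`), not Kaldi's Component classes.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical minimal hierarchy; not Kaldi's Component classes.
struct ToyComponent {
  virtual ~ToyComponent() = default;
  // Returns a newly allocated deep copy; the caller takes ownership.
  virtual ToyComponent *Copy() const = 0;
};

struct ToyConvComponent : public ToyComponent {
  std::vector<float> linear_params;
  std::vector<float> bias_params;

  ToyComponent *Copy() const override {
    // The implicit copy constructor copies each std::vector element-wise,
    // so the clone shares no parameter storage with *this.
    return new ToyConvComponent(*this);
  }
};
```

Because Copy() is called through a base-class pointer, the caller gets the right derived type without knowing it in advance.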

DotProduct() [virtual]

Computes dot-product between parameters of two instances of a Component.

Can be used for computing parameter-norm of an UpdatableComponent.

Implements UpdatableComponent.

Definition at line 610 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, KALDI_ASSERT, kaldi::kTrans, TimeHeightConvolutionComponent::linear_params_, kaldi::TraceMatMat(), and kaldi::VecVec().

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().
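The References list (TraceMatMat with kTrans, and VecVec) suggests that the dot product is a trace term over the linear parameters plus a vector dot product over the biases. Since tr(A B^T) is the sum of the elementwise products of A and B, the quantity can be sketched with plain `std::vector` storage. `Mat`, `TraceMatMatTrans`, `Dot`, and `ParamDotProduct` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major dense matrix (hypothetical helper type for this sketch).
struct Mat {
  std::size_t rows, cols;
  std::vector<double> data;  // rows * cols entries
};

// tr(A * B^T) equals the sum over i, j of A_ij * B_ij.
double TraceMatMatTrans(const Mat &a, const Mat &b) {
  assert(a.rows == b.rows && a.cols == b.cols);
  double sum = 0.0;
  for (std::size_t k = 0; k < a.data.size(); ++k) sum += a.data[k] * b.data[k];
  return sum;
}

double Dot(const std::vector<double> &x, const std::vector<double> &y) {
  assert(x.size() == y.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < x.size(); ++i) sum += x[i] * y[i];
  return sum;
}

// Parameter dot product: linear part plus bias part.
double ParamDotProduct(const Mat &lin_a, const std::vector<double> &bias_a,
                       const Mat &lin_b, const std::vector<double> &bias_b) {
  return TraceMatMatTrans(lin_a, lin_b) + Dot(bias_a, bias_b);
}
```

Taking the dot product of a component with itself gives the squared parameter norm. This is the "parameter-norm" use mentioned above.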

FreezeNaturalGradient() [virtual]

Freezes or unfreezes NaturalGradient updates, if applicable (to be overridden by components that use natural gradient).

Reimplemented from UpdatableComponent.

Definition at line 642 of file nnet-convolutional-component.cc.

References OnlineNaturalGradient::Freeze(), TimeHeightConvolutionComponent::preconditioner_in_, and TimeHeightConvolutionComponent::preconditioner_out_.

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().

GetInputIndexes() [virtual]

This function only does something interesting for non-simple Components.

For a given index at the output of the component, tells us what indexes are required at its input (note: "required" encompasses also optionally-required things; it will enumerate all things that we'd like to have). See also IsComputable().

| [in] | misc_info | This argument is supplied to handle things that the framework can't very easily supply: information like which time indexes are needed for AggregateComponent, which time-indexes are available at the input of a recurrent network, and so on. We will add members to misc_info as needed. |

| [in] | output_index | The Index at the output of the component, for which we are requesting the list of indexes at the component's input. |

| [out] | desired_indexes | A list of indexes that are desired at the input; they are written here. By "desired" we mean required or optionally-required. |

The default implementation of this function is suitable for any SimpleComponent; it just copies output_index to a single identical element in desired_indexes.

Reimplemented from Component.

Definition at line 504 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::all_time_offsets_, rnnlm::i, KALDI_ASSERT, kaldi::nnet3::kNoTime, Index::n, Index::t, and Index::x.

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().
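The References list (all_time_offsets_, kNoTime, Index::n/t/x) suggests that, for one output index, the desired inputs share its n and x and shift its t by each time offset the convolution uses. Here is a minimal sketch under that assumption. `Index` is a simplified stand-in, `DesiredInputIndexes` is a hypothetical name, and the kNoTime value is assumed.

```cpp
#include <cassert>
#include <limits>
#include <vector>

// Simplified stand-in for nnet3's Index: n (sequence), t (time), x (extra).
struct Index {
  int n, t, x;
};

// Assumed "no time" marker; nnet3 uses a very negative value for kNoTime.
constexpr int kNoTime = std::numeric_limits<int>::min();

// For one output index, returns the input indexes at every time offset the
// convolution may read, keeping n and x unchanged.
std::vector<Index> DesiredInputIndexes(const Index &output_index,
                                       const std::vector<int> &all_time_offsets) {
  assert(output_index.t != kNoTime);  // blanks have no inputs to request
  std::vector<Index> desired;
  desired.reserve(all_time_offsets.size());
  for (int offset : all_time_offsets)
    desired.push_back({output_index.n, output_index.t + offset, output_index.x});
  return desired;
}
```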

Info() [virtual]

Returns some text-form information about this component, for diagnostics.

Starts with the type of the component. E.g. "SigmoidComponent dim=900", although most components will have much more info.

Reimplemented from UpdatableComponent.

Definition at line 65 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, OnlineNaturalGradient::GetAlpha(), OnlineNaturalGradient::GetNumMinibatchesHistory(), OnlineNaturalGradient::GetRank(), ConvolutionModel::Info(), UpdatableComponent::Info(), TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::max_memory_mb_, TimeHeightConvolutionComponent::model_, TimeHeightConvolutionComponent::NumParameters(), TimeHeightConvolutionComponent::preconditioner_in_, TimeHeightConvolutionComponent::preconditioner_out_, kaldi::nnet3::PrintParameterStats(), and TimeHeightConvolutionComponent::use_natural_gradient_.

Referenced by TdnnComponent::OutputDim().

InitFromConfig() [virtual]

Initialize, from a ConfigLine object.

| [in] | cfl | A ConfigLine containing any parameters that are needed for initialization. For example: "dim=100 param-stddev=0.1" |

Implements Component.

Definition at line 114 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, ConvolutionModel::Check(), ConvolutionModel::ComputeDerived(), TimeHeightConvolutionComponent::ComputeDerived(), ConfigLine::GetValue(), ConvolutionModel::height_in, ConvolutionModel::Offset::height_offset, ConvolutionModel::height_out, ConvolutionModel::height_subsample_out, rnnlm::i, UpdatableComponent::InitLearningRatesFromConfig(), TimeHeightConvolutionComponent::InitUnit(), kaldi::IsSortedAndUniq(), rnnlm::j, KALDI_ASSERT, KALDI_ERR, KALDI_WARN, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::max_memory_mb_, TimeHeightConvolutionComponent::model_, ConvolutionModel::num_filters_in, ConvolutionModel::num_filters_out, ConvolutionModel::offsets, ConvolutionModel::ParamCols(), ConvolutionModel::ParamRows(), TimeHeightConvolutionComponent::preconditioner_in_, TimeHeightConvolutionComponent::preconditioner_out_, ConvolutionModel::required_time_offsets, OnlineNaturalGradient::SetAlpha(), OnlineNaturalGradient::SetNumMinibatchesHistory(), OnlineNaturalGradient::SetRank(), kaldi::SortAndUniq(), kaldi::SplitStringToIntegers(), kaldi::SplitStringToVector(), ConvolutionModel::Offset::time_offset, TimeHeightConvolutionComponent::use_natural_gradient_, and ConfigLine::WholeLine().

Referenced by TdnnComponent::OutputDim().
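A config line such as "dim=100 param-stddev=0.1" is a whitespace-separated list of key=value pairs. The sketch below parses that shape and reads a float option with a default. `ParseConfigLine` and `GetFloat` are hypothetical helpers, not Kaldi's ConfigLine API. A real parser would typically also report malformed or unused options.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>

// Hypothetical minimal stand-in for a config-line parser: splits the line on
// whitespace and keeps tokens of the form key=value.
std::map<std::string, std::string> ParseConfigLine(const std::string &line) {
  std::map<std::string, std::string> values;
  std::istringstream iss(line);
  std::string token;
  while (iss >> token) {
    std::size_t eq = token.find('=');
    if (eq == std::string::npos || eq == 0) continue;  // skip malformed tokens
    values[token.substr(0, eq)] = token.substr(eq + 1);
  }
  return values;
}

// Reads a float option, falling back to a default when the key is absent.
float GetFloat(const std::map<std::string, std::string> &values,
               const std::string &key, float default_value) {
  auto it = values.find(key);
  return it == values.end() ? default_value : std::stof(it->second);
}
```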

InitUnit() [private]

Definition at line 88 of file nnet-convolutional-component.cc.

References rnnlm::i, KALDI_ASSERT, KALDI_ERR, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::model_, ConvolutionModel::num_filters_in, ConvolutionModel::num_filters_out, and ConvolutionModel::offsets.

Referenced by TimeHeightConvolutionComponent::InitFromConfig(), and TimeHeightConvolutionComponent::ScaleLinearParams().

InputDim() [virtual]

Returns input-dimension of this component.

Implements Component.

Definition at line 57 of file nnet-convolutional-component.cc.

References ConvolutionModel::InputDim(), and TimeHeightConvolutionComponent::model_.

IsComputable() [virtual]

This function only does something interesting for non-simple Components, and it exists to make it possible to manage optionally-required inputs.

It tells the user whether a given output index is computable from a given set of input indexes, and if so, says which input indexes will be used in the computation.

Implementations of this function are required to have the property that adding an element to "input_index_set" can only ever change IsComputable from false to true, never vice versa.

| [in] | misc_info | Some information specific to the computation, such as minimum and maximum times for certain components to do adaptation on; it's a place to put things that don't easily fit in the framework. |

| [in] | output_index | The index that is to be computed at the output of this Component. |

| [in] | input_index_set | The set of indexes that is available at the input of this Component. |

| [out] | used_inputs | If this is non-NULL and the output is computable, this will be set to the list of input indexes that will actually be used in the computation. |

The default implementation of this function is suitable for any SimpleComponent: it just returns true if output_index is in input_index_set, and if so sets used_inputs to a vector containing that one Index.

Reimplemented from Component.

Definition at line 519 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::all_time_offsets_, rnnlm::i, KALDI_ASSERT, kaldi::nnet3::kNoTime, Index::t, and TimeHeightConvolutionComponent::time_offset_required_.

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().
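The References list (all_time_offsets_, time_offset_required_) suggests a rule of this kind: an output index is computable when every required time offset is available, and optional offsets are used when present. The sketch below assumes exactly that rule. `IsComputableSketch` is a hypothetical name. The rule has the monotonicity property described above: adding inputs can only turn false into true.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <tuple>
#include <vector>

// Simplified stand-in for nnet3's Index, ordered so it can live in a std::set.
struct Index {
  int n, t, x;
  bool operator<(const Index &o) const {
    return std::tie(n, t, x) < std::tie(o.n, o.t, o.x);
  }
};

// Returns true iff every required time offset is available. On success,
// *used_inputs (if non-null) lists every available input, optional ones included.
bool IsComputableSketch(const Index &output_index,
                        const std::vector<int> &all_time_offsets,
                        const std::vector<bool> &time_offset_required,
                        const std::set<Index> &available,
                        std::vector<Index> *used_inputs) {
  assert(all_time_offsets.size() == time_offset_required.size());
  if (used_inputs) used_inputs->clear();
  for (std::size_t i = 0; i < all_time_offsets.size(); ++i) {
    Index in{output_index.n, output_index.t + all_time_offsets[i], output_index.x};
    if (available.count(in)) {
      if (used_inputs) used_inputs->push_back(in);
    } else if (time_offset_required[i]) {
      if (used_inputs) used_inputs->clear();  // a required input is missing
      return false;
    }
  }
  return true;
}
```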

NumParameters() [virtual]

Returns the total dimension of the parameters in this class, i.e. the number of scalar parameters.

Reimplemented from UpdatableComponent.

Definition at line 619 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, and TimeHeightConvolutionComponent::linear_params_.

Referenced by TimeHeightConvolutionComponent::Copy(), TdnnComponent::Copy(), TimeHeightConvolutionComponent::Info(), TimeHeightConvolutionComponent::UnVectorize(), and TimeHeightConvolutionComponent::Vectorize().

OutputDim() [virtual]

Returns output-dimension of this component.

Implements Component.

Definition at line 61 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::model_, and ConvolutionModel::OutputDim().
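InputDim() and OutputDim() both delegate to the ConvolutionModel. This sketch assumes the model's input dimension is num_filters_in * height_in and its output dimension is num_filters_out * height_out, with one row holding every filter at every height. `ConvShape` is a hypothetical struct whose field names mirror the ConvolutionModel members referenced on this page.

```cpp
#include <cassert>

// Hypothetical subset of a convolution model's shape fields.
struct ConvShape {
  int num_filters_in, height_in, num_filters_out, height_out;

  // Assumed layout: a row holds num_filters_in values for each of height_in positions.
  int InputDim() const { return num_filters_in * height_in; }
  int OutputDim() const { return num_filters_out * height_out; }
};
```

For example, 40 mel bins with 3 input channels give a 120-dimensional input row.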

PerturbParams() [virtual]

This function is to be used in testing.

It adds unit-variance Gaussian noise, scaled by "stddev", to the parameters of the component.

Implements UpdatableComponent.

Definition at line 600 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, kaldi::kUndefined, TimeHeightConvolutionComponent::linear_params_, CuVectorBase< Real >::SetRandn(), and CuMatrixBase< Real >::SetRandn().

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().
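The References list (SetRandn on the matrix and the vector) points to standard-normal noise that is then scaled by stddev. The sketch below applies that to a flat parameter vector. The function name and the choice of `std::mt19937` as the generator are assumptions of this sketch.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Adds stddev * N(0, 1) noise to every parameter. The generator is passed in,
// so tests can reproduce the perturbation with a fixed seed.
void PerturbParams(float stddev, std::vector<float> *params, std::mt19937 *rng) {
  std::normal_distribution<float> unit_normal(0.0f, 1.0f);
  for (float &p : *params) p += stddev * unit_normal(*rng);
}
```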

PrecomputeIndexes() [virtual]

This function must return NULL for simple Components.

Returns a pointer to a class that may contain some precomputed component-specific and computation-specific indexes to be used in the Propagate and Backprop functions.

| [in] | misc_info | This argument is supplied to handle things that the framework can't easily supply: information like which time indexes are needed for AggregateComponent, which time indexes are available at the input of a recurrent network, and so on. misc_info may not be used here at all. We will add members to misc_info as needed. |

| [in] | input_indexes | A vector of indexes that explains what time-indexes (and other indexes) each row of the in/in_value/in_deriv matrices given to Propagate and Backprop will mean. |

| [in] | output_indexes | A vector of indexes that explains what time-indexes (and other indexes) each row of the out/out_value/out_deriv matrices given to Propagate and Backprop will mean. |

| [in] | need_backprop | True if we might need to do backprop with this component, so that if any different indexes are needed for backprop then those should be computed too. |

Reimplemented from Component.

Definition at line 560 of file nnet-convolutional-component.cc.

References kaldi::nnet3::time_height_convolution::CompileConvolutionComputation(), TimeHeightConvolutionComponent::PrecomputedIndexes::computation, KALDI_ERR, TimeHeightConvolutionComponent::max_memory_mb_, and TimeHeightConvolutionComponent::model_.

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().

Propagate() [virtual]

Propagate function.

| [in] | indexes | A pointer to some information output by this class's PrecomputeIndexes function (will be NULL for simple components, i.e. those that don't do things like splicing). |

| [in] | in | The input to this component. Num-columns == InputDim(). |

| [out] | out | The output of this component. Num-columns == OutputDim(). Note: output of this component will be added to the initial value of "out" if Properties()&kPropagateAdds != 0; otherwise the output will be set and the initial value ignored. Each Component chooses whether it is more convenient implementation-wise to add or set, and the calling code has to deal with it. |

Implements Component.

Definition at line 282 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, TimeHeightConvolutionComponent::PrecomputedIndexes::computation, kaldi::nnet3::time_height_convolution::ConvolveForward(), CuMatrixBase< Real >::CopyRowsFromVec(), CuMatrixBase< Real >::Data(), ConvolutionModel::height_out, KALDI_ASSERT, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::model_, ConvolutionModel::num_filters_out, CuMatrixBase< Real >::NumCols(), CuMatrixBase< Real >::NumRows(), and CuMatrixBase< Real >::Stride().

Referenced by TimeHeightConvolutionComponent::Properties(), and TdnnComponent::Properties().
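The References list hints at two steps: copy the bias into every output row (CopyRowsFromVec), then add the convolution (ConvolveForward). The toy sketch below follows that order for a time-only convolution. It ignores the height axis and assumes contiguous offsets 0..K-1, so it is closer to a TDNN layer than to ConvolveForward() itself. `PropagateSketch` and the `Matrix` alias are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<float>>;  // rows are frames

// weights[k] is a (dim_out x dim_in) matrix applied to input frame t + k.
// The output has in.size() - K + 1 frames ("valid" convolution over time).
Matrix PropagateSketch(const Matrix &in, const std::vector<Matrix> &weights,
                       const std::vector<float> &bias) {
  const std::size_t K = weights.size();
  assert(K > 0 && in.size() >= K);
  const std::size_t T_out = in.size() - K + 1;
  // Step 1: copy the bias into every output row (the output is set, not added to).
  Matrix out(T_out, bias);
  // Step 2: accumulate the contribution of each time offset.
  for (std::size_t t = 0; t < T_out; ++t)
    for (std::size_t k = 0; k < K; ++k)
      for (std::size_t o = 0; o < bias.size(); ++o)
        for (std::size_t i = 0; i < in[t + k].size(); ++i)
          out[t][o] += weights[k][o][i] * in[t + k][i];
  return out;
}
```

With one input and one output channel, inputs 1, 2, 3, weights 1 and 10, and bias 0.5, the two output frames are 21.5 and 32.5.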

Properties() [inline, virtual]

Return bitmask of the component's properties.

These properties depend only on the component's type. See enum ComponentProperties.

Implements Component.

Definition at line 227 of file nnet-convolutional-component-temp.h.

References TimeHeightConvolutionComponent::Backprop(), kaldi::nnet3::kBackpropAdds, kaldi::nnet3::kBackpropNeedsInput, kaldi::nnet3::kInputContiguous, kaldi::nnet3::kOutputContiguous, kaldi::nnet3::kReordersIndexes, kaldi::nnet3::kUpdatableComponent, TimeHeightConvolutionComponent::Propagate(), TimeHeightConvolutionComponent::Read(), and TimeHeightConvolutionComponent::Write().

Properties() [inline, virtual]

Return bitmask of the component's properties.

These properties depend only on the component's type. See enum ComponentProperties.

Implements Component.

Definition at line 227 of file nnet-convolutional-component.h.

References TimeHeightConvolutionComponent::Backprop(), kaldi::nnet3::kBackpropAdds, kaldi::nnet3::kBackpropNeedsInput, kaldi::nnet3::kInputContiguous, kaldi::nnet3::kOutputContiguous, kaldi::nnet3::kReordersIndexes, kaldi::nnet3::kUpdatableComponent, TimeHeightConvolutionComponent::Propagate(), TimeHeightConvolutionComponent::Read(), and TimeHeightConvolutionComponent::Write().
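Properties() returns flags OR-ed into one integer, and callers test individual bits. The sketch below combines the flags referenced above. The numeric values are assumptions for illustration; Kaldi defines the real ones in its ComponentProperties enum. Judging from that reference list, kPropagateAdds is not among this component's flags, so Propagate() would set its output rather than add to it.

```cpp
#include <cassert>

// Hypothetical bit values; the real ones live in Kaldi's ComponentProperties enum.
enum SketchProperties {
  kUpdatableComponent = 0x001,
  kReordersIndexes = 0x002,
  kBackpropAdds = 0x004,
  kBackpropNeedsInput = 0x008,
  kInputContiguous = 0x010,
  kOutputContiguous = 0x020,
  kPropagateAdds = 0x040
};

// The flag combination referenced by Properties() above.
int ConvPropertiesSketch() {
  return kUpdatableComponent | kReordersIndexes | kBackpropAdds |
         kBackpropNeedsInput | kInputContiguous | kOutputContiguous;
}

bool HasProperty(int properties, int flag) { return (properties & flag) != 0; }
```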

Read() [virtual]

Read function (used after we know the type of the Component); accepts input that is missing the token that describes the component type, in case it has already been consumed.

Implements Component.

Definition at line 452 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, TimeHeightConvolutionComponent::Check(), TimeHeightConvolutionComponent::ComputeDerived(), kaldi::nnet3::ExpectToken(), KALDI_ASSERT, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::max_memory_mb_, TimeHeightConvolutionComponent::model_, TimeHeightConvolutionComponent::preconditioner_in_, TimeHeightConvolutionComponent::preconditioner_out_, ConvolutionModel::Read(), kaldi::ReadBasicType(), UpdatableComponent::ReadUpdatableCommon(), OnlineNaturalGradient::SetAlpha(), OnlineNaturalGradient::SetNumMinibatchesHistory(), OnlineNaturalGradient::SetRank(), and TimeHeightConvolutionComponent::use_natural_gradient_.

Referenced by TimeHeightConvolutionComponent::Properties().
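The sketch below shows one way a reader can accept a leading type token that the caller may or may not have consumed already. The token strings `<ToyConvComponent>` and `<Dim>` and the function `ReadDimSketch` are hypothetical; they are not Kaldi's on-disk format.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <stdexcept>
#include <string>

// Reads "<Dim> N", optionally preceded by the component's type token.
int ReadDimSketch(std::istream &is) {
  std::string token;
  is >> token;
  if (token == "<ToyConvComponent>") is >> token;  // type token was still present
  if (token != "<Dim>") throw std::runtime_error("expected <Dim>, got " + token);
  int dim = 0;
  if (!(is >> dim)) throw std::runtime_error("failed to read dim");
  return dim;
}
```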

ReorderIndexes() [virtual]

This function only does something interesting for non-simple Components.

It provides an opportunity for a Component to reorder or pad the indexes at its input and output. This might be useful, for instance, if a component requires a particular ordering of the indexes that doesn't correspond to their natural ordering. Components that might modify the indexes are required to return the kReordersIndexes flag in their Properties(). The ReorderIndexes() function is now allowed to insert blanks into the indexes. The blanks must be of the form (n, kNoTime, x), where the marker kNoTime (a very negative number) sits where the 't' index normally lives. The reason we don't just use, say, (-1, -1, -1) relates to the need to preserve a regular pattern over the 'n' indexes so that 'shortcut compilation' (cf. ExpandComputation()) can work correctly.

| [in,out] | input_indexes | Indexes at the input of the Component. |

| [in,out] | output_indexes | Indexes at the output of the Component. |

Reimplemented from Component.

Definition at line 408 of file nnet-convolutional-component.cc.

References kaldi::nnet3::time_height_convolution::CompileConvolutionComputation(), TimeHeightConvolutionComponent::max_memory_mb_, and TimeHeightConvolutionComponent::model_.

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().
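To make the blank-padding idea concrete, the sketch below rebuilds a list of indexes as a regular grid. For every n and every t that appears anywhere in the list, it emits either the real index or a blank (n, kNoTime, x), so each n gets the same pattern. The n-major ordering, the fixed x == 0, the kNoTime value, and the name `PadToRegularPattern` are all assumptions of this sketch. It is not the convolution code's actual ordering logic.

```cpp
#include <cassert>
#include <limits>
#include <set>
#include <utility>
#include <vector>

// Simplified stand-in for nnet3's Index.
struct Index {
  int n, t, x;
  bool operator==(const Index &o) const { return n == o.n && t == o.t && x == o.x; }
};

// Assumed "no time" marker; nnet3 uses a very negative value.
constexpr int kNoTime = std::numeric_limits<int>::min();

// For n in [0, num_n) and each distinct t, emit (n, t, 0) if present,
// otherwise the blank (n, kNoTime, 0). Assumes x == 0 throughout.
std::vector<Index> PadToRegularPattern(const std::vector<Index> &indexes, int num_n) {
  std::set<int> all_t;
  std::set<std::pair<int, int>> present;  // (n, t) pairs that exist
  for (const Index &idx : indexes) {
    all_t.insert(idx.t);
    present.insert(std::make_pair(idx.n, idx.t));
  }
  std::vector<Index> out;
  for (int n = 0; n < num_n; ++n)
    for (int t : all_t)
      out.push_back(present.count(std::make_pair(n, t)) ? Index{n, t, 0}
                                                         : Index{n, kNoTime, 0});
  return out;
}
```

Keeping the blank's n value (rather than writing -1) is what preserves the per-n regularity that the description above says shortcut compilation relies on.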

Scale() [virtual]

When called on an UpdatableComponent, this virtual function scales the parameters by "scale".

When called on a nonlinear component (or another component that stores stats, like BatchNormComponent), it scales the activation stats rather than parameters. Otherwise it will normally do nothing.

Reimplemented from Component.

Definition at line 581 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, and TimeHeightConvolutionComponent::linear_params_.

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().

ScaleLinearParams() [inline]

Definition at line 302 of file nnet-convolutional-component.h.

References TimeHeightConvolutionComponent::all_time_offsets_, TimeHeightConvolutionComponent::bias_params_, TimeHeightConvolutionComponent::Check(), TimeHeightConvolutionComponent::ComputeDerived(), TimeHeightConvolutionComponent::ConsolidateMemory(), TimeHeightConvolutionComponent::InitUnit(), TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::max_memory_mb_, TimeHeightConvolutionComponent::model_, TimeHeightConvolutionComponent::preconditioner_in_, TimeHeightConvolutionComponent::preconditioner_out_, TimeHeightConvolutionComponent::time_offset_required_, TimeHeightConvolutionComponent::UpdateNaturalGradient(), TimeHeightConvolutionComponent::UpdateSimple(), and TimeHeightConvolutionComponent::use_natural_gradient_.

ScaleLinearParams() [inline]

Definition at line 302 of file nnet-convolutional-component-temp.h.

References TimeHeightConvolutionComponent::Check(), TimeHeightConvolutionComponent::ComputeDerived(), TimeHeightConvolutionComponent::ConsolidateMemory(), TimeHeightConvolutionComponent::InitUnit(), TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::UpdateNaturalGradient(), and TimeHeightConvolutionComponent::UpdateSimple().
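The difference between Scale() and ScaleLinearParams() can be sketched with a toy parameter holder. Scale() multiplies both linear and bias parameters, while ScaleLinearParams() is assumed, as its name suggests, to leave the bias untouched. `ToyParams` is a hypothetical struct.

```cpp
#include <cassert>
#include <vector>

// Hypothetical parameter holder for this sketch.
struct ToyParams {
  std::vector<float> linear, bias;

  // Like Scale(): multiplies every parameter, linear and bias alike.
  void Scale(float scale) {
    for (float &w : linear) w *= scale;
    for (float &b : bias) b *= scale;
  }

  // Like ScaleLinearParams() (assumed behavior): scales only the linear part.
  void ScaleLinearParams(float alpha) {
    for (float &w : linear) w *= alpha;
  }
};
```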

Type() [inline, virtual]

Returns a string such as "SigmoidComponent", describing the type of the object.

Implements Component.

Definition at line 226 of file nnet-convolutional-component.h.

Type() [inline, virtual]

Returns a string such as "SigmoidComponent", describing the type of the object.

Implements Component.

Definition at line 226 of file nnet-convolutional-component-temp.h.

UnVectorize() [virtual]

Converts the parameters from vector form.

Reimplemented from UpdatableComponent.

Definition at line 633 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, VectorBase< Real >::Dim(), KALDI_ASSERT, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::NumParameters(), and VectorBase< Real >::Range().

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().

UpdateNaturalGradient() [private]

Definition at line 354 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, TimeHeightConvolutionComponent::PrecomputedIndexes::computation, kaldi::nnet3::time_height_convolution::ConvolveBackwardParams(), CuMatrixBase< Real >::CopyColFromVec(), CuMatrixBase< Real >::Data(), ConvolutionModel::height_out, KALDI_ASSERT, kaldi::kTrans, UpdatableComponent::learning_rate_, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::model_, ConvolutionModel::num_filters_out, CuMatrixBase< Real >::NumCols(), CuMatrixBase< Real >::NumRows(), OnlineNaturalGradient::PreconditionDirections(), TimeHeightConvolutionComponent::preconditioner_in_, TimeHeightConvolutionComponent::preconditioner_out_, CuMatrixBase< Real >::Row(), CuMatrixBase< Real >::RowRange(), and CuMatrixBase< Real >::Stride().

Referenced by TimeHeightConvolutionComponent::Backprop(), TdnnComponent::OrthonormalConstraint(), and TimeHeightConvolutionComponent::ScaleLinearParams().

UpdateSimple() [private]

Definition at line 334 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, TimeHeightConvolutionComponent::PrecomputedIndexes::computation, kaldi::nnet3::time_height_convolution::ConvolveBackwardParams(), CuMatrixBase< Real >::Data(), ConvolutionModel::height_out, KALDI_ASSERT, UpdatableComponent::learning_rate_, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::model_, ConvolutionModel::num_filters_out, CuMatrixBase< Real >::NumCols(), CuMatrixBase< Real >::NumRows(), and CuMatrixBase< Real >::Stride().

Referenced by TimeHeightConvolutionComponent::Backprop(), TdnnComponent::OrthonormalConstraint(), and TimeHeightConvolutionComponent::ScaleLinearParams().

Vectorize() [virtual]

Turns the parameters into vector form.

We put the vector form on the CPU, because in the kinds of situations where we do this, we'll tend to use too much memory for the GPU.

Reimplemented from UpdatableComponent.

Definition at line 624 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, VectorBase< Real >::Dim(), KALDI_ASSERT, TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::NumParameters(), and VectorBase< Real >::Range().

Referenced by TimeHeightConvolutionComponent::Copy(), and TdnnComponent::Copy().
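Vectorize(), UnVectorize(), and NumParameters() all refer to the same two parameter blocks, so they can be sketched together. The linear matrix is flattened row by row and followed by the bias, and the total length equals NumParameters(). `FlatParams` is a hypothetical struct. The linear-then-bias ordering is an assumption of this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical flattened parameter store: a rows x cols linear matrix
// (row-major) plus a bias vector.
struct FlatParams {
  std::size_t rows = 0, cols = 0;
  std::vector<float> linear;  // rows * cols entries
  std::vector<float> bias;

  std::size_t NumParameters() const { return rows * cols + bias.size(); }

  // Linear parameters first, then bias.
  std::vector<float> Vectorize() const {
    std::vector<float> v(linear);
    v.insert(v.end(), bias.begin(), bias.end());
    assert(v.size() == NumParameters());
    return v;
  }

  // Inverse of Vectorize(); the caller must have set rows, cols and bias size.
  void UnVectorize(const std::vector<float> &v) {
    assert(v.size() == NumParameters());
    const auto n_lin = static_cast<std::ptrdiff_t>(rows * cols);
    linear.assign(v.begin(), v.begin() + n_lin);
    bias.assign(v.begin() + n_lin, v.end());
  }
};
```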

Write() [virtual]

Write component to stream.

Implements Component.

Definition at line 424 of file nnet-convolutional-component.cc.

References TimeHeightConvolutionComponent::bias_params_, OnlineNaturalGradient::GetAlpha(), OnlineNaturalGradient::GetNumMinibatchesHistory(), OnlineNaturalGradient::GetRank(), TimeHeightConvolutionComponent::linear_params_, TimeHeightConvolutionComponent::max_memory_mb_, TimeHeightConvolutionComponent::model_, TimeHeightConvolutionComponent::preconditioner_in_, TimeHeightConvolutionComponent::preconditioner_out_, TimeHeightConvolutionComponent::use_natural_gradient_, ConvolutionModel::Write(), kaldi::WriteBasicType(), kaldi::WriteToken(), and UpdatableComponent::WriteUpdatableCommon().

Referenced by TimeHeightConvolutionComponent::Properties().

bias_params_ [private]

Definition at line 349 of file nnet-convolutional-component-temp.h.

Referenced by TimeHeightConvolutionComponent::Add(), TdnnComponent::BiasParams(), TimeHeightConvolutionComponent::Check(), TimeHeightConvolutionComponent::DotProduct(), TimeHeightConvolutionComponent::Info(), TimeHeightConvolutionComponent::InitFromConfig(), TimeHeightConvolutionComponent::NumParameters(), TdnnComponent::OrthonormalConstraint(), TimeHeightConvolutionComponent::PerturbParams(), TimeHeightConvolutionComponent::Propagate(), TdnnComponent::Properties(), TimeHeightConvolutionComponent::Read(), TimeHeightConvolutionComponent::Scale(), TimeHeightConvolutionComponent::ScaleLinearParams(), TimeHeightConvolutionComponent::UnVectorize(), TimeHeightConvolutionComponent::UpdateNaturalGradient(), TimeHeightConvolutionComponent::UpdateSimple(), TimeHeightConvolutionComponent::Vectorize(), and TimeHeightConvolutionComponent::Write().

linear_params_ [private]

Definition at line 346 of file nnet-convolutional-component-temp.h.

Referenced by TimeHeightConvolutionComponent::Add(), TimeHeightConvolutionComponent::Backprop(), TimeHeightConvolutionComponent::Check(), TimeHeightConvolutionComponent::DotProduct(), TimeHeightConvolutionComponent::Info(), TimeHeightConvolutionComponent::InitFromConfig(), TimeHeightConvolutionComponent::InitUnit(), TdnnComponent::InputDim(), TdnnComponent::LinearParams(), TimeHeightConvolutionComponent::NumParameters(), TdnnComponent::OrthonormalConstraint(), TdnnComponent::OutputDim(), TimeHeightConvolutionComponent::PerturbParams(), TimeHeightConvolutionComponent::Propagate(), TimeHeightConvolutionComponent::Read(), TimeHeightConvolutionComponent::Scale(), TimeHeightConvolutionComponent::ScaleLinearParams(), TimeHeightConvolutionComponent::UnVectorize(), TimeHeightConvolutionComponent::UpdateNaturalGradient(), TimeHeightConvolutionComponent::UpdateSimple(), TimeHeightConvolutionComponent::Vectorize(), and TimeHeightConvolutionComponent::Write().

max_memory_mb_ [private]

Definition at line 356 of file nnet-convolutional-component-temp.h.

Referenced by TimeHeightConvolutionComponent::Info(), TimeHeightConvolutionComponent::InitFromConfig(), TimeHeightConvolutionComponent::PrecomputeIndexes(), TimeHeightConvolutionComponent::Read(), TimeHeightConvolutionComponent::ReorderIndexes(), TimeHeightConvolutionComponent::ScaleLinearParams(), and TimeHeightConvolutionComponent::Write().

model_ [private]

Definition at line 330 of file nnet-convolutional-component-temp.h.

Referenced by TimeHeightConvolutionComponent::Check(), TimeHeightConvolutionComponent::ComputeDerived(), TimeHeightConvolutionComponent::Info(), TimeHeightConvolutionComponent::InitFromConfig(), TimeHeightConvolutionComponent::InitUnit(), TimeHeightConvolutionComponent::InputDim(), TimeHeightConvolutionComponent::OutputDim(), TimeHeightConvolutionComponent::PrecomputeIndexes(), TimeHeightConvolutionComponent::Propagate(), TimeHeightConvolutionComponent::Read(), TimeHeightConvolutionComponent::ReorderIndexes(), TimeHeightConvolutionComponent::ScaleLinearParams(), TimeHeightConvolutionComponent::UpdateNaturalGradient(), TimeHeightConvolutionComponent::UpdateSimple(), and TimeHeightConvolutionComponent::Write().

preconditioner_in_ [private]

Definition at line 368 of file nnet-convolutional-component-temp.h.

Referenced by TimeHeightConvolutionComponent::ConsolidateMemory(), TimeHeightConvolutionComponent::FreezeNaturalGradient(), TimeHeightConvolutionComponent::Info(), TimeHeightConvolutionComponent::InitFromConfig(), TdnnComponent::OrthonormalConstraint(), TimeHeightConvolutionComponent::Read(), TimeHeightConvolutionComponent::ScaleLinearParams(), TimeHeightConvolutionComponent::UpdateNaturalGradient(), and TimeHeightConvolutionComponent::Write().

preconditioner_out_ [private]

Definition at line 372 of file nnet-convolutional-component-temp.h.

Referenced by TimeHeightConvolutionComponent::ConsolidateMemory(), TimeHeightConvolutionComponent::FreezeNaturalGradient(), TimeHeightConvolutionComponent::Info(), TimeHeightConvolutionComponent::InitFromConfig(), TdnnComponent::OrthonormalConstraint(), TimeHeightConvolutionComponent::Read(), TimeHeightConvolutionComponent::ScaleLinearParams(), TimeHeightConvolutionComponent::UpdateNaturalGradient(), and TimeHeightConvolutionComponent::Write().

time_offset_required_ [private]

Definition at line 338 of file nnet-convolutional-component-temp.h.

Referenced by TimeHeightConvolutionComponent::ComputeDerived(), TimeHeightConvolutionComponent::IsComputable(), and TimeHeightConvolutionComponent::ScaleLinearParams().

use_natural_gradient_ [private]

Definition at line 362 of file nnet-convolutional-component-temp.h.

Referenced by TimeHeightConvolutionComponent::Backprop(), TimeHeightConvolutionComponent::Info(), TimeHeightConvolutionComponent::InitFromConfig(), TdnnComponent::OrthonormalConstraint(), TimeHeightConvolutionComponent::Read(), TimeHeightConvolutionComponent::ScaleLinearParams(), and TimeHeightConvolutionComponent::Write().