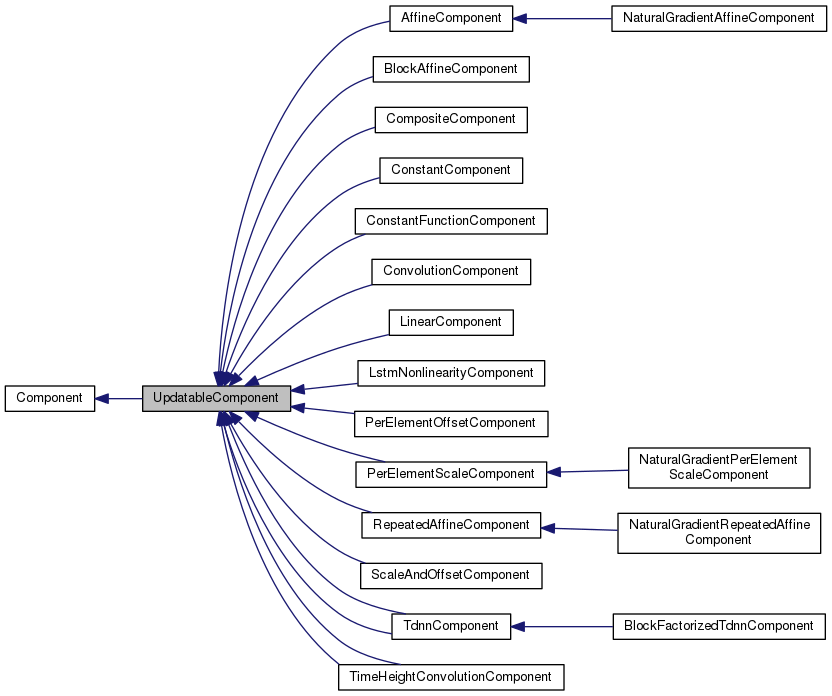

Class UpdatableComponent is a Component which has trainable parameters; it extends the interface of Component. More...

#include <nnet-component-itf.h>

Public Member Functions | |

| UpdatableComponent (const UpdatableComponent &other) | |

| UpdatableComponent () | |

| virtual | ~UpdatableComponent () |

| virtual BaseFloat | DotProduct (const UpdatableComponent &other) const =0 |

| Computes dot-product between parameters of two instances of a Component. More... | |

| virtual void | PerturbParams (BaseFloat stddev)=0 |

| This function is to be used in testing. More... | |

| virtual void | SetUnderlyingLearningRate (BaseFloat lrate) |

| Sets the learning rate of gradient descent; the value is multiplied by learning_rate_factor_. More... | |

| virtual void | SetActualLearningRate (BaseFloat lrate) |

| Sets the learning rate directly, bypassing learning_rate_factor_. More... | |

| virtual void | SetAsGradient () |

| Sets is_gradient_ to true and sets learning_rate_ to 1, ignoring learning_rate_factor_. More... | |

| virtual BaseFloat | LearningRateFactor () |

| virtual void | SetLearningRateFactor (BaseFloat lrate_factor) |

| void | SetUpdatableConfigs (const UpdatableComponent &other) |

| virtual void | FreezeNaturalGradient (bool freeze) |

| Freezes/unfreezes NaturalGradient updates, if applicable (to be overridden by components that use Natural Gradient). More... | |

| BaseFloat | LearningRate () const |

| Gets the learning rate to be used in gradient descent. More... | |

| BaseFloat | MaxChange () const |

| Returns the per-component max-change value, which is interpreted as the maximum change (in l2 norm) in parameters that is allowed per minibatch for this component. More... | |

| void | SetMaxChange (BaseFloat max_change) |

| BaseFloat | L2Regularization () const |

| Returns the l2 regularization constant, which may be set in any updatable component (usually from the config file). More... | |

| void | SetL2Regularization (BaseFloat a) |

| virtual std::string | Info () const |

| Returns some text-form information about this component, for diagnostics. More... | |

| virtual int32 | NumParameters () const |

| Returns the total dimension of the parameters in this class. More... | |

| virtual void | Vectorize (VectorBase< BaseFloat > *params) const |

| Turns the parameters into vector form. More... | |

| virtual void | UnVectorize (const VectorBase< BaseFloat > &params) |

| Converts the parameters from vector form. More... | |

Public Member Functions inherited from Component | |

| virtual void * | Propagate (const ComponentPrecomputedIndexes *indexes, const CuMatrixBase< BaseFloat > &in, CuMatrixBase< BaseFloat > *out) const =0 |

| Propagate function. More... | |

| virtual void | Backprop (const std::string &debug_info, const ComponentPrecomputedIndexes *indexes, const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_value, const CuMatrixBase< BaseFloat > &out_deriv, void *memo, Component *to_update, CuMatrixBase< BaseFloat > *in_deriv) const =0 |

| Backprop function; depending on which of the arguments 'to_update' and 'in_deriv' are non-NULL, this can compute input-data derivatives and/or perform model update. More... | |

| virtual void | StoreStats (const CuMatrixBase< BaseFloat > &in_value, const CuMatrixBase< BaseFloat > &out_value, void *memo) |

| This function may store stats on average activation values, and for some component types, the average value of the derivative of the nonlinearity. More... | |

| virtual void | ZeroStats () |

| Components that provide an implementation of StoreStats should also provide an implementation of ZeroStats(), to set those stats to zero. More... | |

| virtual void | GetInputIndexes (const MiscComputationInfo &misc_info, const Index &output_index, std::vector< Index > *desired_indexes) const |

| This function only does something interesting for non-simple Components. More... | |

| virtual bool | IsComputable (const MiscComputationInfo &misc_info, const Index &output_index, const IndexSet &input_index_set, std::vector< Index > *used_inputs) const |

| This function only does something interesting for non-simple Components, and it exists to make it possible to manage optionally-required inputs. More... | |

| virtual void | ReorderIndexes (std::vector< Index > *input_indexes, std::vector< Index > *output_indexes) const |

| This function only does something interesting for non-simple Components. More... | |

| virtual ComponentPrecomputedIndexes * | PrecomputeIndexes (const MiscComputationInfo &misc_info, const std::vector< Index > &input_indexes, const std::vector< Index > &output_indexes, bool need_backprop) const |

| This function must return NULL for simple Components. More... | |

| virtual std::string | Type () const =0 |

| Returns a string such as "SigmoidComponent", describing the type of the object. More... | |

| virtual void | InitFromConfig (ConfigLine *cfl)=0 |

| Initialize, from a ConfigLine object. More... | |

| virtual int32 | InputDim () const =0 |

| Returns input-dimension of this component. More... | |

| virtual int32 | OutputDim () const =0 |

| Returns output-dimension of this component. More... | |

| virtual int32 | Properties () const =0 |

| Return bitmask of the component's properties. More... | |

| virtual Component * | Copy () const =0 |

| Copies component (deep copy). More... | |

| virtual void | Read (std::istream &is, bool binary)=0 |

| Read function (used after we know the type of the Component); accepts input that is missing the token that describes the component type, in case it has already been consumed. More... | |

| virtual void | Write (std::ostream &os, bool binary) const =0 |

| Write component to stream. More... | |

| virtual void | Scale (BaseFloat scale) |

| When called on an UpdatableComponent, this virtual function scales the parameters by "scale". More... | |

| virtual void | Add (BaseFloat alpha, const Component &other) |

| When called on an UpdatableComponent, this virtual function adds the parameters of another updatable component, times some constant, to the current parameters. More... | |

| virtual void | DeleteMemo (void *memo) const |

| This virtual function only needs to be overwritten by Components that return a non-NULL memo from their Propagate() function. More... | |

| virtual void | ConsolidateMemory () |

| This virtual function relates to memory management, and avoiding fragmentation. More... | |

| Component () | |

| virtual | ~Component () |

Protected Member Functions | |

| void | InitLearningRatesFromConfig (ConfigLine *cfl) |

| std::string | ReadUpdatableCommon (std::istream &is, bool binary) |

| void | WriteUpdatableCommon (std::ostream &is, bool binary) const |

Protected Attributes | |

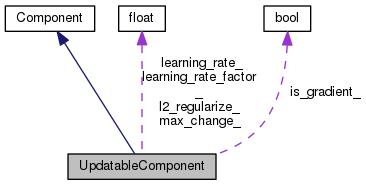

| BaseFloat | learning_rate_ |

| learning rate (typically 0.0..0.01) More... | |

| BaseFloat | learning_rate_factor_ |

| learning rate factor (normally 1.0, but can be set to another value so that when you call SetLearningRate(), that value will be scaled by this factor). More... | |

| BaseFloat | l2_regularize_ |

| L2 regularization constant. More... | |

| bool | is_gradient_ |

| True if this component is to be treated as a gradient rather than as parameters. More... | |

| BaseFloat | max_change_ |

| configuration value for imposing max-change More... | |

Private Member Functions | |

| const UpdatableComponent & | operator= (const UpdatableComponent &other) |

Additional Inherited Members | |

Static Public Member Functions inherited from Component | |

| static Component * | ReadNew (std::istream &is, bool binary) |

| Read component from stream (works out its type). Dies on error. More... | |

| static Component * | NewComponentOfType (const std::string &type) |

| Returns a new Component of the given type e.g. More... | |

Class UpdatableComponent is a Component which has trainable parameters; it extends the interface of Component.

This is a base-class for Components with parameters. See comment by declaration of kUpdatableComponent. The functions in this interface must only be called if the component returns the kUpdatable flag.

Child classes support the following config-line parameters in addition to more specific ones:

- learning-rate: e.g. learning-rate=1.0e-05. default=0.001. It's not normally necessary or desirable to set this in the config line, as it typically gets set in the training scripts.
- learning-rate-factor: e.g. learning-rate-factor=0.5. Can be used to conveniently control per-layer learning rates; it's multiplied by the learning rates given to the --learning-rate option of nnet3-copy or to any 'set-learning-rate' directives in the --edits-config option of nnet3-copy. default=1.0.
- max-change: e.g. max-change=0.75. Maximum allowed parameter change for the parameters of this component, in Euclidean norm, per update step. If zero, no limit is applied at this level (the global --max-param-change option will still apply). default=0.0.
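To make the defaults and the key=value form of these options concrete, here is a minimal, self-contained sketch. It does not use Kaldi's ConfigLine class or InitLearningRatesFromConfig(); the names `UpdatableOptions` and `ParseUpdatableOptions` are hypothetical and exist only for illustration. The defaults are the ones quoted above.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical stand-in for the generic options listed above; not a Kaldi type.
struct UpdatableOptions {
  float learning_rate = 0.001f;       // documented default=0.001
  float learning_rate_factor = 1.0f;  // documented default=1.0
  float max_change = 0.0f;            // documented default=0.0 (no per-component limit)
};

// Parses whitespace-separated key=value tokens. Keys other than the three
// generic ones are skipped in this sketch, on the assumption that they belong
// to the more specific child class.
inline UpdatableOptions ParseUpdatableOptions(const std::string &line) {
  UpdatableOptions opts;
  std::istringstream iss(line);
  std::string token;
  while (iss >> token) {
    const std::string::size_type eq = token.find('=');
    if (eq == std::string::npos) continue;  // not a key=value token
    const std::string key = token.substr(0, eq);
    const std::string value = token.substr(eq + 1);
    if (key == "learning-rate") {
      opts.learning_rate = std::stof(value);
    } else if (key == "learning-rate-factor") {
      opts.learning_rate_factor = std::stof(value);
    } else if (key == "max-change") {
      opts.max_change = std::stof(value);
    }
  }
  assert(opts.max_change >= 0.0f);  // zero means "no limit at this level"
  return opts;
}
```

For example, `ParseUpdatableOptions("learning-rate-factor=0.5 max-change=0.75")` leaves `learning_rate` at its default and sets the other two fields.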

Definition at line 455 of file nnet-component-itf.h.

| UpdatableComponent | ( | const UpdatableComponent & | other | ) |

Definition at line 229 of file nnet-component-itf.cc.

| UpdatableComponent | ( | ) | inline |

Definition at line 461 of file nnet-component-itf.h.

Referenced by LstmNonlinearityComponent::ConsolidateMemory().

| ~UpdatableComponent | ( | ) | inline virtual |

Definition at line 465 of file nnet-component-itf.h.

References kaldi::nnet3::DotProduct(), and kaldi::nnet3::PerturbParams().

| BaseFloat DotProduct | ( | const UpdatableComponent & | other | ) | const | pure virtual |

Computes dot-product between parameters of two instances of a Component.

Can be used for computing parameter-norm of an UpdatableComponent.

Implemented in CompositeComponent, ScaleAndOffsetComponent, ConstantFunctionComponent, PerElementOffsetComponent, PerElementScaleComponent, LinearComponent, ConstantComponent, RepeatedAffineComponent, BlockAffineComponent, TdnnComponent, AffineComponent, LstmNonlinearityComponent, TimeHeightConvolutionComponent, and ConvolutionComponent.

Referenced by kaldi::nnet3::ComponentDotProducts(), kaldi::nnet3::DotProduct(), CompositeComponent::DotProduct(), kaldi::nnet3::NnetParametersAreIdentical(), NnetComputer::ParameterStddev(), kaldi::nnet3::TestNnetComponentUpdatable(), kaldi::nnet3::TestNnetComponentVectorizeUnVectorize(), kaldi::nnet3::TestSimpleComponentModelDerivative(), and kaldi::nnet3::UpdateNnetWithMaxChange().
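The remark about the parameter norm can be illustrated with a toy class: the Euclidean norm of the parameters is the square root of a component's dot product with itself. `ToyComponent` and `ParameterNorm` are hypothetical names for this sketch; real components store their parameters in Kaldi matrix and vector types rather than in a `std::vector`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Toy stand-in for an updatable component whose parameters form one flat vector.
class ToyComponent {
 public:
  explicit ToyComponent(std::vector<float> params) : params_(std::move(params)) {}

  // Dot product between the parameters of two instances of the same size.
  float DotProduct(const ToyComponent &other) const {
    assert(params_.size() == other.params_.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < params_.size(); ++i)
      sum += params_[i] * other.params_[i];
    return sum;
  }

 private:
  std::vector<float> params_;
};

// Euclidean parameter norm, computed as sqrt of the self dot product.
inline float ParameterNorm(const ToyComponent &c) {
  return std::sqrt(c.DotProduct(c));
}
```

With parameters {3, 4}, the self dot product is 25 and the norm is 5.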

| void FreezeNaturalGradient | ( | bool | freeze | ) | inline virtual |

Freezes/unfreezes NaturalGradient updates, if applicable (to be overridden by components that use Natural Gradient).

Reimplemented in CompositeComponent, NaturalGradientPerElementScaleComponent, LinearComponent, NaturalGradientAffineComponent, TdnnComponent, LstmNonlinearityComponent, and TimeHeightConvolutionComponent.

Definition at line 502 of file nnet-component-itf.h.

Referenced by kaldi::nnet3::FreezeNaturalGradient(), CompositeComponent::FreezeNaturalGradient(), and LstmNonlinearityComponent::Properties().

| std::string Info | ( | ) | const | virtual |

Returns some text-form information about this component, for diagnostics.

Starts with the type of the component. E.g. "SigmoidComponent dim=900", although most components will have much more info.

Reimplemented from Component.

Reimplemented in CompositeComponent, ScaleAndOffsetComponent, NaturalGradientPerElementScaleComponent, ConstantFunctionComponent, PerElementOffsetComponent, PerElementScaleComponent, LinearComponent, NaturalGradientAffineComponent, ConstantComponent, RepeatedAffineComponent, BlockAffineComponent, TdnnComponent, AffineComponent, LstmNonlinearityComponent, TimeHeightConvolutionComponent, and ConvolutionComponent.

Definition at line 333 of file nnet-component-itf.cc.

References Component::InputDim(), UpdatableComponent::is_gradient_, UpdatableComponent::l2_regularize_, UpdatableComponent::learning_rate_factor_, UpdatableComponent::LearningRate(), UpdatableComponent::max_change_, Component::OutputDim(), and Component::Type().

Referenced by LstmNonlinearityComponent::ConsolidateMemory(), ConvolutionComponent::Info(), TimeHeightConvolutionComponent::Info(), LstmNonlinearityComponent::Info(), AffineComponent::Info(), TdnnComponent::Info(), BlockAffineComponent::Info(), RepeatedAffineComponent::Info(), ConstantComponent::Info(), LinearComponent::Info(), PerElementScaleComponent::Info(), PerElementOffsetComponent::Info(), ConstantFunctionComponent::Info(), ScaleAndOffsetComponent::Info(), kaldi::nnet3::TestNnetComponentUpdatable(), and kaldi::nnet3::TestNnetComponentVectorizeUnVectorize().

| void InitLearningRatesFromConfig | ( | ConfigLine * | cfl | ) | protected |

Definition at line 248 of file nnet-component-itf.cc.

References ConfigLine::GetValue(), KALDI_ERR, UpdatableComponent::l2_regularize_, UpdatableComponent::learning_rate_, UpdatableComponent::learning_rate_factor_, UpdatableComponent::max_change_, and ConfigLine::WholeLine().

Referenced by LstmNonlinearityComponent::ConsolidateMemory(), ConvolutionComponent::InitFromConfig(), TimeHeightConvolutionComponent::InitFromConfig(), LstmNonlinearityComponent::InitFromConfig(), AffineComponent::InitFromConfig(), TdnnComponent::InitFromConfig(), BlockAffineComponent::InitFromConfig(), RepeatedAffineComponent::InitFromConfig(), ConstantComponent::InitFromConfig(), NaturalGradientAffineComponent::InitFromConfig(), LinearComponent::InitFromConfig(), PerElementScaleComponent::InitFromConfig(), PerElementOffsetComponent::InitFromConfig(), ConstantFunctionComponent::InitFromConfig(), and ScaleAndOffsetComponent::InitFromConfig().

| BaseFloat L2Regularization | ( | ) | const | inline |

Returns the l2 regularization constant, which may be set in any updatable component (usually from the config file).

This value is not interrogated in the component-level code. Instead it is read by the function ApplyL2Regularization(), declared in nnet-utils.h, which is used as part of the training workflow.

Definition at line 522 of file nnet-component-itf.h.

Referenced by kaldi::nnet3::ApplyL2Regularization().

| BaseFloat LearningRate | ( | ) | const | inline |

Gets the learning rate to be used in gradient descent.

Definition at line 505 of file nnet-component-itf.h.

Referenced by kaldi::nnet3::ApplyL2Regularization(), Compiler::ComputeDerivNeeded(), ModelCollapser::GetDiagonallyPreModifiedComponentIndex(), UpdatableComponent::Info(), and CompositeComponent::SetUnderlyingLearningRate().

| BaseFloat LearningRateFactor | ( | ) | inline virtual |

Definition at line 489 of file nnet-component-itf.h.

| BaseFloat MaxChange | ( | ) | const | inline |

Returns the per-component max-change value, which is interpreted as the maximum change (in l2 norm) in parameters that is allowed per minibatch for this component.

The components themselves do not enforce the per-component max-change; it's enforced in class NnetTrainer by querying the max-changes for each component. See NnetTrainer::UpdateParamsWithMaxChange() in nnet-utils.h.

Definition at line 513 of file nnet-component-itf.h.

Referenced by kaldi::nnet3::UpdateNnetWithMaxChange().
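As a hedged illustration of what "maximum change in l2 norm" means, the sketch below computes the factor by which a proposed parameter change would have to be scaled so that its norm stays within the limit. This is not the code of UpdateNnetWithMaxChange(); `MaxChangeScale` is a hypothetical helper. The actual training code also applies a global max-param-change, which this sketch leaves out.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Returns the scale to apply to a proposed parameter change `delta` so that
// its l2 norm does not exceed `max_change`. A max_change of zero is treated
// as "no per-component limit", matching the documented default.
inline float MaxChangeScale(const std::vector<float> &delta, float max_change) {
  assert(max_change >= 0.0f);
  float sumsq = 0.0f;
  for (float d : delta) sumsq += d * d;
  const float norm = std::sqrt(sumsq);
  if (max_change == 0.0f || norm <= max_change) return 1.0f;
  return max_change / norm;
}
```

For a change of {3, 4} (norm 5) and a max-change of 2.5, the function returns 0.5, so the scaled change has norm 2.5.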

| int32 NumParameters | ( | ) | const | inline virtual |

Returns the total dimension of the parameters in this class.

Reimplemented in CompositeComponent, ScaleAndOffsetComponent, ConstantFunctionComponent, PerElementOffsetComponent, PerElementScaleComponent, LinearComponent, ConstantComponent, RepeatedAffineComponent, BlockAffineComponent, TdnnComponent, AffineComponent, LstmNonlinearityComponent, TimeHeightConvolutionComponent, and ConvolutionComponent.

Definition at line 530 of file nnet-component-itf.h.

References KALDI_ASSERT.

Referenced by kaldi::nnet3::NumParameters(), CompositeComponent::NumParameters(), NnetComputer::ParameterStddev(), kaldi::nnet3::TestNnetComponentUpdatable(), kaldi::nnet3::TestNnetComponentVectorizeUnVectorize(), CompositeComponent::UnVectorize(), kaldi::nnet3::UnVectorizeNnet(), CompositeComponent::Vectorize(), and kaldi::nnet3::VectorizeNnet().

| const UpdatableComponent & operator= | ( | const UpdatableComponent & | other | ) | private |

| void PerturbParams | ( | BaseFloat | stddev | ) | pure virtual |

This function is to be used in testing.

It adds unit noise times "stddev" to the parameters of the component.

Implemented in CompositeComponent, ScaleAndOffsetComponent, ConstantFunctionComponent, PerElementOffsetComponent, PerElementScaleComponent, LinearComponent, ConstantComponent, RepeatedAffineComponent, BlockAffineComponent, TdnnComponent, AffineComponent, LstmNonlinearityComponent, TimeHeightConvolutionComponent, and ConvolutionComponent.

Referenced by kaldi::nnet3::PerturbParams(), CompositeComponent::PerturbParams(), and kaldi::nnet3::TestSimpleComponentModelDerivative().
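A rough sketch of the perturbation described above, on a plain `std::vector`. It assumes that "unit noise" means independent zero-mean, unit-variance Gaussian samples; that reading and the name `PerturbParamsSketch` are assumptions of this example, and the real implementations work on Kaldi's own matrix types.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Adds stddev times unit-variance Gaussian noise to every parameter.
// The Gaussian form of the noise is an assumption of this sketch.
inline void PerturbParamsSketch(std::vector<float> *params, float stddev,
                                std::mt19937 *rng) {
  assert(params != nullptr && rng != nullptr);
  std::normal_distribution<float> unit_noise(0.0f, 1.0f);
  for (float &p : *params) p += stddev * unit_noise(*rng);
}
```

With `stddev` equal to zero the parameters are left unchanged, which makes a convenient sanity check in a unit test.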

| std::string ReadUpdatableCommon | ( | std::istream & | is, | bool | binary | ) | protected |

Definition at line 263 of file nnet-component-itf.cc.

References UpdatableComponent::is_gradient_, UpdatableComponent::l2_regularize_, UpdatableComponent::learning_rate_, UpdatableComponent::learning_rate_factor_, UpdatableComponent::max_change_, kaldi::ReadBasicType(), kaldi::ReadToken(), and Component::Type().

Referenced by LstmNonlinearityComponent::ConsolidateMemory(), ConvolutionComponent::Read(), TimeHeightConvolutionComponent::Read(), AffineComponent::Read(), TdnnComponent::Read(), BlockAffineComponent::Read(), RepeatedAffineComponent::Read(), NaturalGradientAffineComponent::Read(), LinearComponent::Read(), PerElementScaleComponent::Read(), PerElementOffsetComponent::Read(), ScaleAndOffsetComponent::Read(), and CompositeComponent::Read().

| void SetActualLearningRate | ( | BaseFloat | lrate | ) | inline virtual |

Sets the learning rate directly, bypassing learning_rate_factor_.

Reimplemented in CompositeComponent.

Definition at line 483 of file nnet-component-itf.h.

Referenced by CompositeComponent::SetActualLearningRate().

| void SetAsGradient | ( | ) | inline virtual |

Sets is_gradient_ to true and sets learning_rate_ to 1, ignoring learning_rate_factor_.

Reimplemented in CompositeComponent.

Definition at line 487 of file nnet-component-itf.h.

Referenced by CompositeComponent::SetAsGradient(), kaldi::nnet3::SetNnetAsGradient(), and kaldi::nnet3::TestSimpleComponentModelDerivative().

| void SetL2Regularization | ( | BaseFloat | a | ) | inline |

Definition at line 524 of file nnet-component-itf.h.

| void SetLearningRateFactor | ( | BaseFloat | lrate_factor | ) | inline virtual |

Definition at line 492 of file nnet-component-itf.h.

Referenced by kaldi::nnet3::ReadEditConfig().

| void SetMaxChange | ( | BaseFloat | max_change | ) | inline |

Definition at line 515 of file nnet-component-itf.h.

| void SetUnderlyingLearningRate | ( | BaseFloat | lrate | ) | inline virtual |

Sets the learning rate of gradient descent; the value is multiplied by learning_rate_factor_.

Reimplemented in CompositeComponent.

Definition at line 478 of file nnet-component-itf.h.

Referenced by AffineComponent::AffineComponent(), ConvolutionComponent::ConvolutionComponent(), kaldi::nnet3::ReadEditConfig(), kaldi::nnet3::SetLearningRate(), and CompositeComponent::SetUnderlyingLearningRate().
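The three ways of setting the learning rate documented on this page (SetUnderlyingLearningRate(), SetActualLearningRate() and SetAsGradient()) differ only in how they treat learning_rate_factor_. The toy class below mirrors that bookkeeping as described in the member documentation. It is a sketch, not the Kaldi class: `ToyUpdatable`, `IsGradient()` and `DemoLearningRates()` are hypothetical, and `float` stands in for BaseFloat.

```cpp
#include <cassert>

// Toy class mirroring only the learning-rate bookkeeping described here.
class ToyUpdatable {
 public:
  // The given rate is multiplied by learning_rate_factor_.
  void SetUnderlyingLearningRate(float lrate) {
    learning_rate_ = lrate * learning_rate_factor_;
  }
  // The given rate is stored directly, bypassing learning_rate_factor_.
  void SetActualLearningRate(float lrate) { learning_rate_ = lrate; }
  // Marks the object as a gradient and sets the learning rate to 1.
  void SetAsGradient() {
    learning_rate_ = 1.0f;
    is_gradient_ = true;
  }
  void SetLearningRateFactor(float lrate_factor) {
    learning_rate_factor_ = lrate_factor;
  }
  float LearningRate() const { return learning_rate_; }
  bool IsGradient() const { return is_gradient_; }  // hypothetical accessor

 private:
  float learning_rate_ = 0.001f;
  float learning_rate_factor_ = 1.0f;
  bool is_gradient_ = false;
};

// Small self-check showing the three setters side by side.
inline void DemoLearningRates() {
  ToyUpdatable c;
  c.SetLearningRateFactor(0.5f);
  c.SetUnderlyingLearningRate(0.25f);  // 0.25 * 0.5
  assert(c.LearningRate() == 0.125f);
  c.SetActualLearningRate(0.25f);      // factor ignored
  assert(c.LearningRate() == 0.25f);
  c.SetAsGradient();                   // rate forced to 1
  assert(c.LearningRate() == 1.0f && c.IsGradient());
}
```

The split lets a training script set one global rate through the "underlying" setter while per-layer factors from the config line still take effect.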

| void SetUpdatableConfigs | ( | const UpdatableComponent & | other | ) |

Definition at line 237 of file nnet-component-itf.cc.

References UpdatableComponent::is_gradient_, UpdatableComponent::l2_regularize_, UpdatableComponent::learning_rate_, UpdatableComponent::learning_rate_factor_, and UpdatableComponent::max_change_.

Referenced by SvdApplier::DecomposeComponent().

| void UnVectorize | ( | const VectorBase< BaseFloat > & | params | ) | inline virtual |

Converts the parameters from vector form.

Reimplemented in CompositeComponent, ScaleAndOffsetComponent, ConstantFunctionComponent, PerElementOffsetComponent, PerElementScaleComponent, LinearComponent, ConstantComponent, RepeatedAffineComponent, BlockAffineComponent, TdnnComponent, AffineComponent, LstmNonlinearityComponent, TimeHeightConvolutionComponent, and ConvolutionComponent.

Definition at line 537 of file nnet-component-itf.h.

References KALDI_ASSERT.

Referenced by kaldi::nnet3::TestNnetComponentVectorizeUnVectorize(), CompositeComponent::UnVectorize(), and kaldi::nnet3::UnVectorizeNnet().

| void Vectorize | ( | VectorBase< BaseFloat > * | params | ) | const | inline virtual |

Turns the parameters into vector form.

We put the vector form on the CPU, because in the kinds of situations where we do this, we'll tend to use too much memory for the GPU.

Reimplemented in CompositeComponent, ScaleAndOffsetComponent, ConstantFunctionComponent, PerElementOffsetComponent, PerElementScaleComponent, LinearComponent, ConstantComponent, RepeatedAffineComponent, BlockAffineComponent, TdnnComponent, AffineComponent, LstmNonlinearityComponent, TimeHeightConvolutionComponent, and ConvolutionComponent.

Definition at line 535 of file nnet-component-itf.h.

References KALDI_ASSERT.

Referenced by kaldi::nnet3::TestNnetComponentVectorizeUnVectorize(), CompositeComponent::Vectorize(), and kaldi::nnet3::VectorizeNnet().
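The relationship between NumParameters(), Vectorize() and UnVectorize() can be sketched as a round trip on a toy component. The flat layout used here (weights first, then bias) and the class `ToyAffine` are assumptions made for illustration. The real overrides take Kaldi's VectorBase< BaseFloat >, and each component defines its own layout.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Toy affine-like component: a block of weights followed by a block of biases.
class ToyAffine {
 public:
  ToyAffine(std::vector<float> weights, std::vector<float> bias)
      : weights_(std::move(weights)), bias_(std::move(bias)) {}

  // Total dimension of the parameters.
  std::size_t NumParameters() const { return weights_.size() + bias_.size(); }

  // Copies the parameters into a caller-provided vector of the right size.
  void Vectorize(std::vector<float> *params) const {
    assert(params->size() == NumParameters());
    std::copy(weights_.begin(), weights_.end(), params->begin());
    std::copy(bias_.begin(), bias_.end(), params->begin() + weights_.size());
  }

  // Overwrites the parameters from a flat vector with the same layout.
  void UnVectorize(const std::vector<float> &params) {
    assert(params.size() == NumParameters());
    std::copy(params.begin(), params.begin() + weights_.size(), weights_.begin());
    std::copy(params.begin() + weights_.size(), params.end(), bias_.begin());
  }

 private:
  std::vector<float> weights_;
  std::vector<float> bias_;
};
```

Calling Vectorize() and then UnVectorize() with the same vector leaves the component unchanged.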

| void WriteUpdatableCommon | ( | std::ostream & | is, | bool | binary | ) | const | protected |

Definition at line 306 of file nnet-component-itf.cc.

References UpdatableComponent::is_gradient_, UpdatableComponent::l2_regularize_, UpdatableComponent::learning_rate_, UpdatableComponent::learning_rate_factor_, UpdatableComponent::max_change_, Component::Type(), kaldi::WriteBasicType(), and kaldi::WriteToken().

Referenced by LstmNonlinearityComponent::ConsolidateMemory(), ConvolutionComponent::Write(), TimeHeightConvolutionComponent::Write(), AffineComponent::Write(), TdnnComponent::Write(), BlockAffineComponent::Write(), RepeatedAffineComponent::Write(), ConstantComponent::Write(), NaturalGradientAffineComponent::Write(), LinearComponent::Write(), PerElementScaleComponent::Write(), PerElementOffsetComponent::Write(), ConstantFunctionComponent::Write(), ScaleAndOffsetComponent::Write(), and CompositeComponent::Write().

| bool is_gradient_ | protected |

True if this component is to be treated as a gradient rather than as parameters.

Its main effect is that we disable any natural-gradient update and just compute the standard gradient.

Definition at line 566 of file nnet-component-itf.h.

Referenced by TimeHeightConvolutionComponent::Backprop(), LstmNonlinearityComponent::Backprop(), AffineComponent::Backprop(), TdnnComponent::Backprop(), ConstantComponent::Backprop(), LinearComponent::Backprop(), PerElementScaleComponent::Backprop(), PerElementOffsetComponent::Backprop(), ConstantFunctionComponent::Backprop(), ScaleAndOffsetComponent::BackpropInternal(), LstmNonlinearityComponent::ConsolidateMemory(), ConvolutionComponent::ConvolutionComponent(), UpdatableComponent::Info(), NaturalGradientAffineComponent::InitFromConfig(), LinearComponent::InitFromConfig(), ConvolutionComponent::Read(), AffineComponent::Read(), BlockAffineComponent::Read(), RepeatedAffineComponent::Read(), ConstantComponent::Read(), NaturalGradientAffineComponent::Read(), PerElementScaleComponent::Read(), PerElementOffsetComponent::Read(), ConstantFunctionComponent::Read(), CompositeComponent::Read(), UpdatableComponent::ReadUpdatableCommon(), UpdatableComponent::SetUpdatableConfigs(), NaturalGradientRepeatedAffineComponent::Update(), ConvolutionComponent::Write(), and UpdatableComponent::WriteUpdatableCommon().

| BaseFloat l2_regularize_ | protected |

L2 regularization constant.

See comment for the L2Regularization() for details.

Definition at line 564 of file nnet-component-itf.h.

Referenced by UpdatableComponent::Info(), UpdatableComponent::InitLearningRatesFromConfig(), UpdatableComponent::ReadUpdatableCommon(), UpdatableComponent::SetUpdatableConfigs(), and UpdatableComponent::WriteUpdatableCommon().

| BaseFloat learning_rate_ | protected |

learning rate (typically 0.0..0.01)

Definition at line 559 of file nnet-component-itf.h.

Referenced by TimeHeightConvolutionComponent::Backprop(), LstmNonlinearityComponent::Backprop(), TdnnComponent::Backprop(), BlockAffineComponent::Backprop(), ConstantComponent::Backprop(), LinearComponent::Backprop(), PerElementOffsetComponent::Backprop(), ConstantFunctionComponent::Backprop(), ScaleAndOffsetComponent::BackpropInternal(), LstmNonlinearityComponent::ConsolidateMemory(), UpdatableComponent::InitLearningRatesFromConfig(), ConstantComponent::Read(), ConstantFunctionComponent::Read(), CompositeComponent::Read(), UpdatableComponent::ReadUpdatableCommon(), UpdatableComponent::SetUpdatableConfigs(), ConvolutionComponent::Update(), RepeatedAffineComponent::Update(), NaturalGradientRepeatedAffineComponent::Update(), NaturalGradientAffineComponent::Update(), NaturalGradientPerElementScaleComponent::Update(), TimeHeightConvolutionComponent::UpdateNaturalGradient(), TdnnComponent::UpdateNaturalGradient(), TimeHeightConvolutionComponent::UpdateSimple(), AffineComponent::UpdateSimple(), TdnnComponent::UpdateSimple(), PerElementScaleComponent::UpdateSimple(), and UpdatableComponent::WriteUpdatableCommon().

| BaseFloat learning_rate_factor_ | protected |

learning rate factor (normally 1.0, but can be set to another value so that when you call SetLearningRate(), that value will be scaled by this factor).

Definition at line 560 of file nnet-component-itf.h.

Referenced by UpdatableComponent::Info(), UpdatableComponent::InitLearningRatesFromConfig(), ConstantComponent::Read(), ConstantFunctionComponent::Read(), CompositeComponent::Read(), UpdatableComponent::ReadUpdatableCommon(), UpdatableComponent::SetUpdatableConfigs(), and UpdatableComponent::WriteUpdatableCommon().

| BaseFloat max_change_ | protected |

configuration value for imposing max-change

Definition at line 570 of file nnet-component-itf.h.

Referenced by UpdatableComponent::Info(), UpdatableComponent::InitLearningRatesFromConfig(), ConstantComponent::Read(), UpdatableComponent::ReadUpdatableCommon(), UpdatableComponent::SetUpdatableConfigs(), and UpdatableComponent::WriteUpdatableCommon().