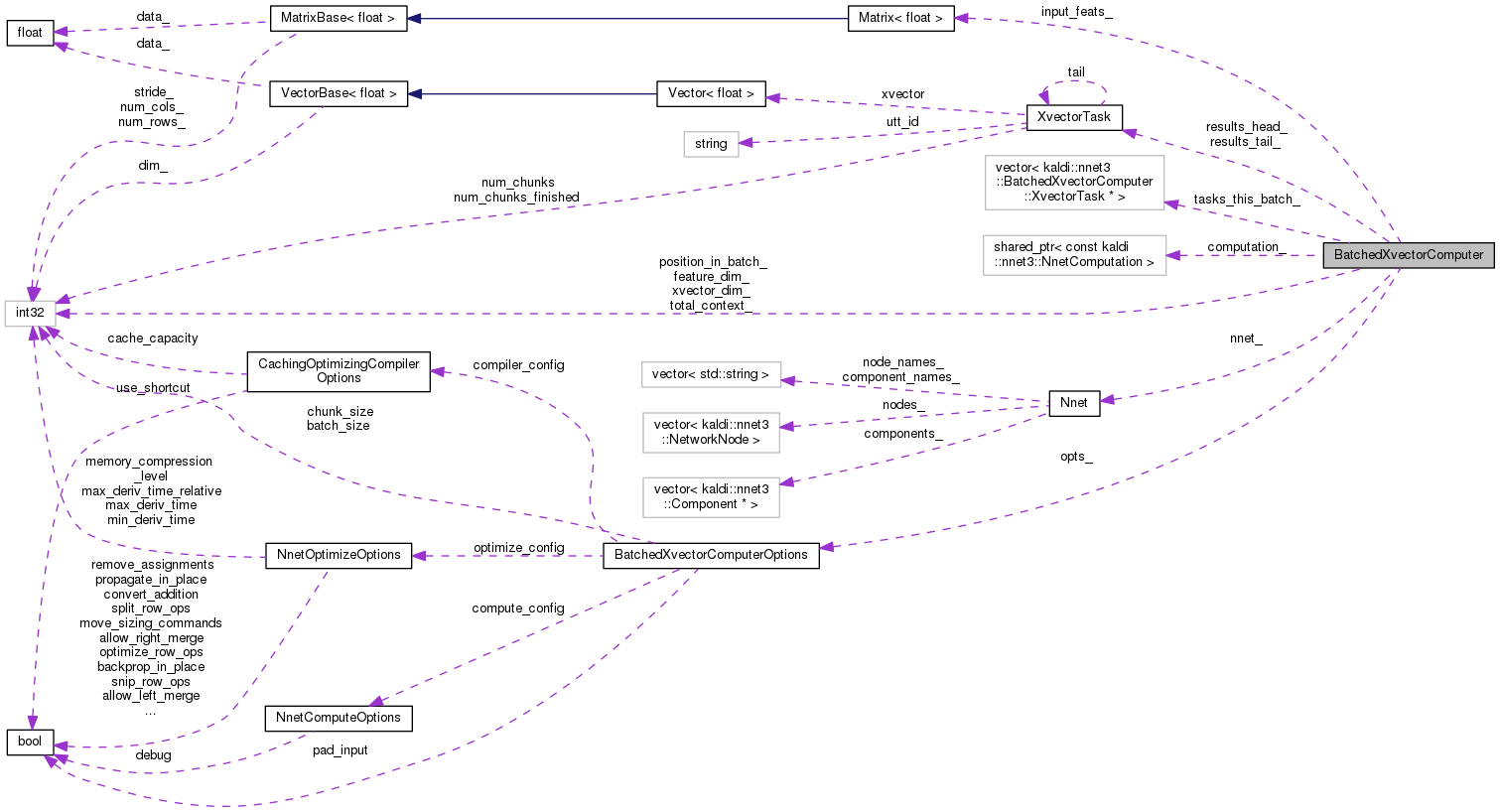

Classes | |

| struct | XvectorTask |

Public Member Functions | |

| BatchedXvectorComputer (const BatchedXvectorComputerOptions &opts, const Nnet &nnet, int32 total_context) | |

| void | AcceptUtterance (const std::string &utt, const Matrix< BaseFloat > &input) |

| Accepts an utterance to process into an xvector, and, if one or more batches become full, processes the batch. More... | |

| bool | XvectorReady () const |

| Returns true if at least one xvector is pending output (i.e. More... | |

| void | OutputXvector (std::string *utt, Vector< BaseFloat > *xvector) |

| This function, which must only be called if XvectorReady() has just returned true, outputs an xvector for an utterance. More... | |

| void | Flush () |

| Calling this will force any partial minibatch to be computed, so that any utterances that have previously been passed to AcceptUtterance() will, when this function returns, have their xvectors ready to be retrieved by OutputXvector(). More... | |

Private Member Functions | |

| void | SplitUtteranceIntoChunks (int32 num_frames, std::vector< int32 > *start_frames) |

| This decides how to split the utterance into chunks. More... | |

| XvectorTask * | CreateTask (const std::string &utt, int32 num_chunks) |

| This adds a newly created XvectorTask at the tail of the singly linked list whose (head,tail) are results_head_, results_tail_. More... | |

| void | ComputeOneBatch () |

| Does the nnet computation for one batch and distributes the computed x-vectors (of chunks) appropriately to their XvectorTask objects. More... | |

| void | AddChunkToBatch (XvectorTask *task, const Matrix< BaseFloat > &input, int32 chunk_start) |

| Adds a new chunk to a batch we are preparing. More... | |

Private Attributes | |

| const BatchedXvectorComputerOptions & | opts_ |

| int32 | total_context_ |

| const Nnet & | nnet_ |

| int32 | feature_dim_ |

| int32 | xvector_dim_ |

| Matrix< BaseFloat > | input_feats_ |

| Staging area for the input features prior to copying them to GPU. More... | |

| std::shared_ptr< const NnetComputation > | computation_ |

| The compiled computation (will be the same for every batch). More... | |

| int32 | position_in_batch_ |

| position_in_batch_ is the number of chunks that we have filled in in the input_feats_ matrix and tasks_this_batch_. More... | |

| std::vector< XvectorTask * > | tasks_this_batch_ |

| tasks_this_batch_ is of dimension opts_.batch_size. More... | |

| XvectorTask * | results_head_ |

| XvectorTask * | results_tail_ |

Definition at line 99 of file nnet3-xvector-compute-batched.cc.

| BatchedXvectorComputer | ( | const BatchedXvectorComputerOptions & | opts, |

| const Nnet & | nnet, | ||

| int32 | total_context | ||

| ) |

| [in] | opts | Options class; warning, it keeps a reference to it. |

| [in] | nnet | The neural net we'll be computing with; assumed to have already been prepared for test. |

| [in] | total_context | The sum of the left and right context of the network, computed after calling SetRequireDirectInput(true, &nnet); so the l/r context isn't zero. |

Definition at line 293 of file nnet3-xvector-compute-batched.cc.

References BatchedXvectorComputerOptions::batch_size, BatchedXvectorComputerOptions::chunk_size, CachingOptimizingCompiler::Compile(), BatchedXvectorComputerOptions::compiler_config, BatchedXvectorComputer::computation_, BatchedXvectorComputer::feature_dim_, IoSpecification::has_deriv, IoSpecification::indexes, BatchedXvectorComputer::input_feats_, Nnet::InputDim(), ComputationRequest::inputs, Index::n, rnnlm::n, IoSpecification::name, ComputationRequest::need_model_derivative, BatchedXvectorComputerOptions::optimize_config, BatchedXvectorComputer::opts_, Nnet::OutputDim(), ComputationRequest::outputs, Matrix< Real >::Resize(), ComputationRequest::store_component_stats, Index::t, BatchedXvectorComputer::tasks_this_batch_, and BatchedXvectorComputer::xvector_dim_.

Accepts an utterance to process into an xvector, and, if one or more batches become full, processes the batch.

Definition at line 428 of file nnet3-xvector-compute-batched.cc.

References BatchedXvectorComputer::AddChunkToBatch(), BatchedXvectorComputerOptions::batch_size, BatchedXvectorComputer::ComputeOneBatch(), BatchedXvectorComputer::CreateTask(), rnnlm::i, MatrixBase< Real >::NumRows(), BatchedXvectorComputer::opts_, BatchedXvectorComputer::position_in_batch_, and BatchedXvectorComputer::SplitUtteranceIntoChunks().

Referenced by main().

|

private |

Adds a new chunk to a batch we are preparing.

This will go at position `position_in_batch_` which will be incremented.

| [in] | task | The task this is part of (records the utterance); tasks_this_batch_[position_in_batch_] will be set to this. |

| [in] | input | The input matrix of features of which this chunk is a part |

| [in] | chunk_start | The frame at which this chunk starts. Must be >= 0; and if opts_.pad_input is false, chunk_start + opts_.chunk_size must be <= input.NumRows(). |

Definition at line 352 of file nnet3-xvector-compute-batched.cc.

References BatchedXvectorComputerOptions::batch_size, BatchedXvectorComputerOptions::chunk_size, VectorBase< Real >::CopyFromVec(), BatchedXvectorComputer::feature_dim_, BatchedXvectorComputer::input_feats_, KALDI_ASSERT, KALDI_ERR, rnnlm::n, MatrixBase< Real >::NumCols(), MatrixBase< Real >::NumRows(), BatchedXvectorComputer::opts_, BatchedXvectorComputerOptions::pad_input, BatchedXvectorComputer::position_in_batch_, and BatchedXvectorComputer::tasks_this_batch_.

Referenced by BatchedXvectorComputer::AcceptUtterance().

|

private |

Does the nnet computation for one batch and distributes the computed x-vectors (of chunks) appropriately to their XvectorTask objects.

Definition at line 404 of file nnet3-xvector-compute-batched.cc.

References NnetComputer::AcceptInput(), BatchedXvectorComputerOptions::batch_size, BatchedXvectorComputer::computation_, BatchedXvectorComputerOptions::compute_config, NnetComputer::GetOutputDestructive(), BatchedXvectorComputer::input_feats_, KALDI_ASSERT, rnnlm::n, BatchedXvectorComputer::nnet_, BatchedXvectorComputer::XvectorTask::num_chunks, BatchedXvectorComputer::XvectorTask::num_chunks_finished, CuMatrixBase< Real >::NumRows(), BatchedXvectorComputer::opts_, BatchedXvectorComputer::position_in_batch_, NnetComputer::Run(), BatchedXvectorComputer::tasks_this_batch_, and BatchedXvectorComputer::XvectorTask::xvector.

Referenced by BatchedXvectorComputer::AcceptUtterance(), and BatchedXvectorComputer::Flush().

|

private |

This adds a newly created XvectorTask at the tail of the singly linked list whose (head,tail) are results_head_, results_tail_.

Definition at line 275 of file nnet3-xvector-compute-batched.cc.

References BatchedXvectorComputer::XvectorTask::num_chunks, BatchedXvectorComputer::XvectorTask::num_chunks_finished, BatchedXvectorComputer::XvectorTask::tail, BatchedXvectorComputer::XvectorTask::utt_id, and BatchedXvectorComputer::XvectorTask::xvector.

Referenced by BatchedXvectorComputer::AcceptUtterance().

| void Flush | ( | ) |

Calling this will force any partial minibatch to be computed, so that any utterances that have previously been passed to AcceptUtterance() will, when this function returns, have their xvectors ready to be retrieved by OutputXvector().

Definition at line 397 of file nnet3-xvector-compute-batched.cc.

References BatchedXvectorComputer::ComputeOneBatch(), and BatchedXvectorComputer::position_in_batch_.

Referenced by main().

This function, which must only be called if XvectorReady() has just returned true, outputs an xvector for an utterance.

| [out] | utt | The utterance-id is written to here. Note: these will be output in the same order as the user called AcceptUtterance(), except that if opts_.pad_input is false and and utterance is shorter than the chunk size, some utterances may be skipped. |

| [out] | xvector | The xvector will be written to here. |

Definition at line 385 of file nnet3-xvector-compute-batched.cc.

References KALDI_ASSERT, BatchedXvectorComputer::results_head_, BatchedXvectorComputer::results_tail_, Vector< Real >::Swap(), BatchedXvectorComputer::XvectorTask::tail, BatchedXvectorComputer::XvectorTask::utt_id, BatchedXvectorComputer::XvectorTask::xvector, and BatchedXvectorComputer::XvectorReady().

Referenced by main().

This decides how to split the utterance into chunks.

It does so in a way that minimizes the variance of the x-vector under some simplifying assumptions. It's about minimizing the variance of the x-vector. We treat the x-vector as computed as a sum over frames (although some frames may be repeated or omitted due to gaps between chunks or overlaps between chunks); and we try to minimize the variance of the x-vector estimate; this is minimized when all the frames have the same weight, which is only possible if it can be exactly divided into chunks; anyway, this function computes the best division into chunks.

It's a question of whether to allow overlaps or gaps. Suppose we are averaging independent quantities with variance 1. The variance of a simple sum of M of those quantities is 1/M. Suppose we have M of those quantities, plus N which are repeated twice in the sum. The variance of the estimate formed that way is:

(M + 4N) / (M + 2N)^2

If we can't divide it exactly into chunks we'll compare the variances from the cases where there is a gap vs. an overlap, and choose the one with the smallest variance. (Note: due to context effects we actually lose total_context_ frames from the input signal, and the chunks would have to overlap by total_context_ even if the part at the statistics-computation layer were ideally cut up.

| [in] | num_frames | The number of frames in the utterance |

| [out] | start_frames | This function will output to here a vector containing all the start-frames of chunks in this utterance. All chunks will have duration opts_.chunk_size; if a chunk goes past the end of the input we'll repeat the last frame. (This will only happen if opts_.pad_input is false and num_frames is less than opts_.chunk_length.) |

Definition at line 445 of file nnet3-xvector-compute-batched.cc.

References BatchedXvectorComputerOptions::chunk_size, kaldi::nnet3::DivideIntoPieces(), rnnlm::i, KALDI_ASSERT, BatchedXvectorComputer::opts_, BatchedXvectorComputerOptions::pad_input, and BatchedXvectorComputer::total_context_.

Referenced by BatchedXvectorComputer::AcceptUtterance().

| bool XvectorReady | ( | ) | const |

Returns true if at least one xvector is pending output (i.e.

that the user may call OutputXvector()).

Definition at line 378 of file nnet3-xvector-compute-batched.cc.

References KALDI_ASSERT, BatchedXvectorComputer::XvectorTask::num_chunks, BatchedXvectorComputer::XvectorTask::num_chunks_finished, and BatchedXvectorComputer::results_head_.

Referenced by main(), and BatchedXvectorComputer::OutputXvector().

|

private |

The compiled computation (will be the same for every batch).

Definition at line 248 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::BatchedXvectorComputer(), and BatchedXvectorComputer::ComputeOneBatch().

|

private |

Definition at line 234 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::AddChunkToBatch(), and BatchedXvectorComputer::BatchedXvectorComputer().

Staging area for the input features prior to copying them to GPU.

Dimension is opts_.chunk_size * opts_.batch_size by feature_dim_. The sequences are interleaved (will be faster since this corresponds to how nnet3 keeps things in memory), i.e. row 0 of input_feats_ is time t=0 for chunk n=0; and row 1 of input_feats_ is time t=0 for chunk n=1.

Definition at line 244 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::AddChunkToBatch(), BatchedXvectorComputer::BatchedXvectorComputer(), and BatchedXvectorComputer::ComputeOneBatch().

|

private |

Definition at line 232 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::ComputeOneBatch().

|

private |

Definition at line 230 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::AcceptUtterance(), BatchedXvectorComputer::AddChunkToBatch(), BatchedXvectorComputer::BatchedXvectorComputer(), BatchedXvectorComputer::ComputeOneBatch(), and BatchedXvectorComputer::SplitUtteranceIntoChunks().

|

private |

position_in_batch_ is the number of chunks that we have filled in in the input_feats_ matrix and tasks_this_batch_.

When it reaches opts_.batch_size we will do the actual computation.

Definition at line 255 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::AcceptUtterance(), BatchedXvectorComputer::AddChunkToBatch(), BatchedXvectorComputer::ComputeOneBatch(), and BatchedXvectorComputer::Flush().

|

private |

Definition at line 268 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::OutputXvector(), and BatchedXvectorComputer::XvectorReady().

|

private |

Definition at line 271 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::OutputXvector().

|

private |

tasks_this_batch_ is of dimension opts_.batch_size.

It is a vector of pointers to elements of the singly linked list whose head is at results_head_, or NULL for elements with indexes >= position_in_batch_.

Definition at line 262 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::AddChunkToBatch(), BatchedXvectorComputer::BatchedXvectorComputer(), and BatchedXvectorComputer::ComputeOneBatch().

|

private |

Definition at line 231 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::SplitUtteranceIntoChunks().

|

private |

Definition at line 235 of file nnet3-xvector-compute-batched.cc.

Referenced by BatchedXvectorComputer::BatchedXvectorComputer().