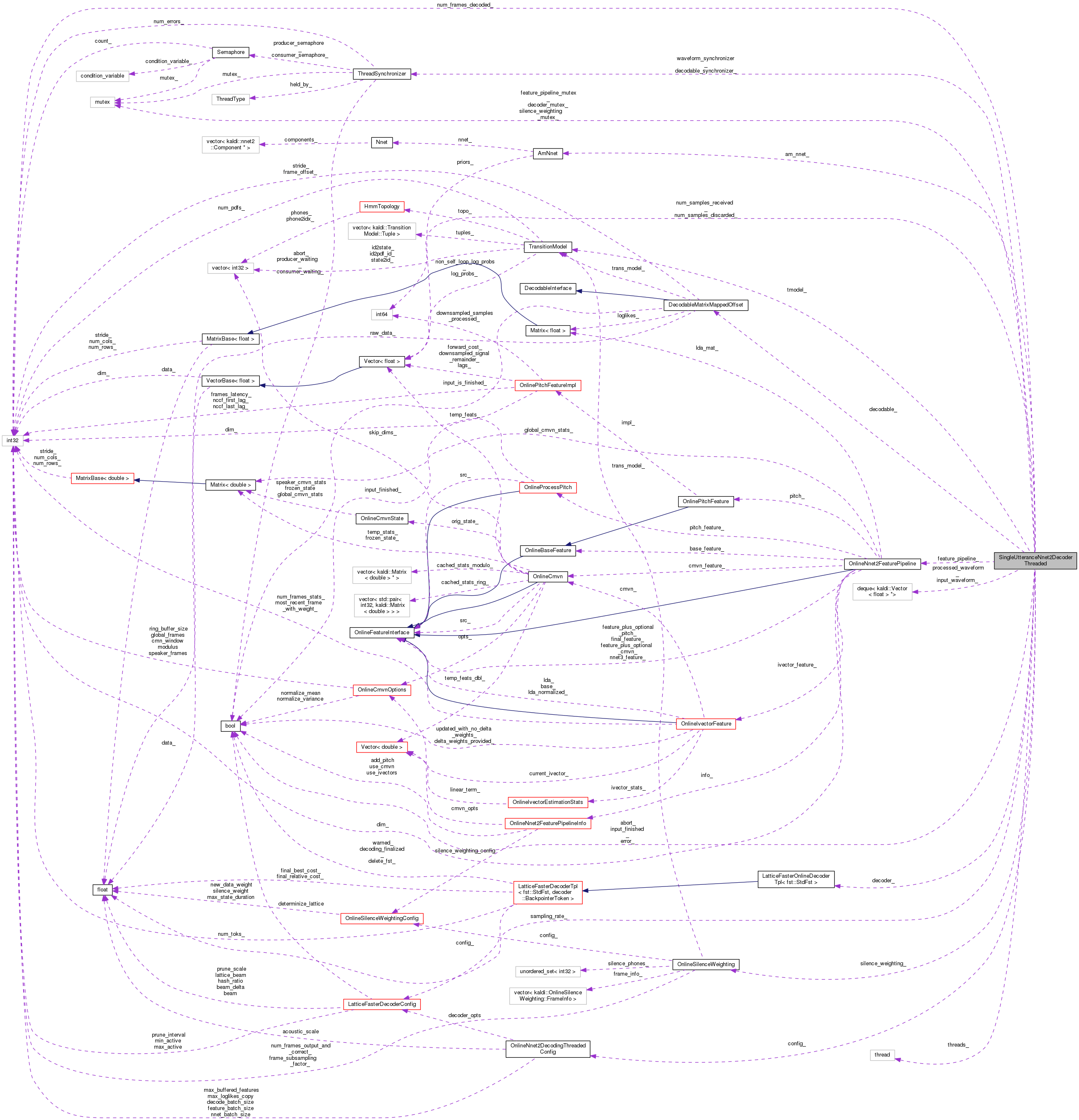

You will instantiate this class when you want to decode a single utterance using the online-decoding setup for neural nets.

#include <online-nnet2-decoding-threaded.h>

Public Member Functions

| Return type | Member function | Description |
| --- | --- | --- |
| | SingleUtteranceNnet2DecoderThreaded (const OnlineNnet2DecodingThreadedConfig &config, const TransitionModel &tmodel, const nnet2::AmNnet &am_nnet, const fst::Fst< fst::StdArc > &fst, const OnlineNnet2FeaturePipelineInfo &feature_info, const OnlineIvectorExtractorAdaptationState &adaptation_state, const OnlineCmvnState &cmvn_state) | |
| void | AcceptWaveform (BaseFloat samp_freq, const VectorBase< BaseFloat > &wave_part) | You call this to provide this class with more waveform to decode. |
| int32 | NumWaveformPiecesPending () | Returns the number of pieces of waveform that are still waiting to be processed. |
| void | InputFinished () | You call this to inform the class that no more waveform will be provided; this allows it to flush out the last few frames of features, and is necessary if you want to call Wait() to wait until all decoding is done. |
| void | TerminateDecoding () | You can call this if you don't want the decoding to proceed further with this utterance. |
| void | Wait () | This call will block until all the data has been decoded; it must only be called after either InputFinished() or TerminateDecoding() has been called; calling it otherwise is an error. |
| void | FinalizeDecoding () | Finalizes the decoding. |
| int32 | NumFramesReceivedApprox () const | Returns *approximately* (ignoring end effects) the number of frames of data that we expect given the amount of data that the pipeline has received via AcceptWaveform(). |
| int32 | NumFramesDecoded () const | Returns the number of frames currently decoded. |
| void | GetLattice (bool end_of_utterance, CompactLattice *clat, BaseFloat *final_relative_cost) const | Gets the lattice. |
| void | GetBestPath (bool end_of_utterance, Lattice *best_path, BaseFloat *final_relative_cost) const | Outputs an FST corresponding to the single best path through the current lattice. |
| bool | EndpointDetected (const OnlineEndpointConfig &config) | This function calls EndpointDetected from online-endpoint.h, with the required arguments. |
| void | GetAdaptationState (OnlineIvectorExtractorAdaptationState *adaptation_state) | Outputs the adaptation state of the feature pipeline to "adaptation_state". |
| void | GetCmvnState (OnlineCmvnState *cmvn_state) | Outputs the OnlineCmvnState of the feature pipeline to "cmvn_state". |
| BaseFloat | GetRemainingWaveform (Vector< BaseFloat > *waveform_out) const | Gets the remaining, un-decoded part of the waveform and returns the sample rate. |
| | ~SingleUtteranceNnet2DecoderThreaded () | |

Private Member Functions

| Return type | Member function |
| --- | --- |
| void | AbortAllThreads (bool error) |
| void | WaitForAllThreads () |
| bool | RunNnetEvaluationInternal () |
| void | ProcessLoglikes (const CuVector< BaseFloat > &log_inv_prior, CuMatrixBase< BaseFloat > *loglikes) |
| bool | FeatureComputation (int32 num_frames_output) |
| bool | RunDecoderSearchInternal () |

Static Private Member Functions

| Return type | Member function |
| --- | --- |
| static void | RunNnetEvaluation (SingleUtteranceNnet2DecoderThreaded *me) |
| static void | RunDecoderSearch (SingleUtteranceNnet2DecoderThreaded *me) |

Private Attributes

| Type | Name |
| --- | --- |
| OnlineNnet2DecodingThreadedConfig | config_ |
| const nnet2::AmNnet & | am_nnet_ |
| const TransitionModel & | tmodel_ |
| BaseFloat | sampling_rate_ |
| int64 | num_samples_received_ |
| bool | input_finished_ |
| std::deque< Vector< BaseFloat > *> | input_waveform_ |
| ThreadSynchronizer | waveform_synchronizer_ |
| OnlineNnet2FeaturePipeline | feature_pipeline_ |
| std::mutex | feature_pipeline_mutex_ |
| std::deque< Vector< BaseFloat > *> | processed_waveform_ |
| int64 | num_samples_discarded_ |
| OnlineSilenceWeighting | silence_weighting_ |
| std::mutex | silence_weighting_mutex_ |
| DecodableMatrixMappedOffset | decodable_ |
| int32 | num_frames_decoded_ |
| ThreadSynchronizer | decodable_synchronizer_ |
| LatticeFasterOnlineDecoder | decoder_ |
| std::mutex | decoder_mutex_ |
| std::thread | threads_ [2] |
| bool | abort_ |
| bool | error_ |

You will instantiate this class when you want to decode a single utterance using the online-decoding setup for neural nets.

Each time this class is created, it starts two background threads (the threads_ member holds two std::thread objects): one runs feature extraction and neural net evaluation, and the other runs the decoder search. Note: we assume that all calls to its public interface happen from a single thread.
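The background-thread arrangement described above can be pictured as a producer/consumer pipeline: the caller's thread hands over pieces of waveform and returns quickly, while a worker thread drains them. The following is a minimal standard-library sketch of that idea only. It is not Kaldi's implementation and does not use ThreadSynchronizer; the names ChunkQueue and CountSamplesInBackground are hypothetical.

```cpp
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical stand-in for a queue of waveform pieces shared between the
// caller's thread and one background worker. Not Kaldi's ThreadSynchronizer.
class ChunkQueue {
 public:
  // Producer side: enqueue one piece of waveform and return right away.
  void Push(std::vector<float> chunk) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      chunks_.push_back(std::move(chunk));
    }
    cond_.notify_one();
  }

  // Producer side: signal that no more data will arrive.
  void Finish() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      finished_ = true;
    }
    cond_.notify_one();
  }

  // Consumer side: blocks until a chunk is available or input is finished.
  // Returns false once the queue is drained and Finish() has been called.
  bool Pop(std::vector<float> *chunk) {
    std::unique_lock<std::mutex> lock(mutex_);
    cond_.wait(lock, [this] { return !chunks_.empty() || finished_; });
    if (chunks_.empty()) return false;
    *chunk = std::move(chunks_.front());
    chunks_.pop_front();
    return true;
  }

 private:
  std::mutex mutex_;
  std::condition_variable cond_;
  std::deque<std::vector<float> > chunks_;
  bool finished_ = false;
};

// Feeds `pieces` from the calling thread while a background thread consumes
// them, then returns the number of samples the worker processed.
inline std::size_t CountSamplesInBackground(
    const std::vector<std::vector<float> > &pieces) {
  ChunkQueue queue;
  std::size_t total = 0;
  std::thread worker([&queue, &total] {
    std::vector<float> chunk;
    while (queue.Pop(&chunk)) total += chunk.size();
  });
  for (const auto &piece : pieces) queue.Push(piece);
  queue.Finish();  // plays the role of "no more input"
  worker.join();   // plays the role of waiting for the worker to finish
  return total;
}
```

In this sketch, Push() corresponds loosely to supplying waveform, Finish() to declaring the input finished, and join() to blocking until the worker is done.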

Definition at line 190 of file online-nnet2-decoding-threaded.h.

| SingleUtteranceNnet2DecoderThreaded | ( | const OnlineNnet2DecodingThreadedConfig & | config, |

| const TransitionModel & | tmodel, | ||

| const nnet2::AmNnet & | am_nnet, | ||

| const fst::Fst< fst::StdArc > & | fst, | ||

| const OnlineNnet2FeaturePipelineInfo & | feature_info, | ||

| const OnlineIvectorExtractorAdaptationState & | adaptation_state, | ||

| const OnlineCmvnState & | cmvn_state | ||

| ) |

Definition at line 112 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::decoder_, SingleUtteranceNnet2DecoderThreaded::feature_pipeline_, LatticeFasterDecoderTpl< FST, Token >::InitDecoding(), SingleUtteranceNnet2DecoderThreaded::RunDecoderSearch(), SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluation(), OnlineNnet2FeaturePipeline::SetAdaptationState(), OnlineNnet2FeaturePipeline::SetCmvnState(), and SingleUtteranceNnet2DecoderThreaded::threads_.

| ~SingleUtteranceNnet2DecoderThreaded | ( | ) |

Definition at line 141 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::abort_, SingleUtteranceNnet2DecoderThreaded::AbortAllThreads(), SingleUtteranceNnet2DecoderThreaded::input_waveform_, SingleUtteranceNnet2DecoderThreaded::processed_waveform_, and SingleUtteranceNnet2DecoderThreaded::WaitForAllThreads().

| void AbortAllThreads | ( | bool | error | ) | private |

Definition at line 344 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::abort_, SingleUtteranceNnet2DecoderThreaded::decodable_synchronizer_, SingleUtteranceNnet2DecoderThreaded::error_, ThreadSynchronizer::SetAbort(), and SingleUtteranceNnet2DecoderThreaded::waveform_synchronizer_.

Referenced by SingleUtteranceNnet2DecoderThreaded::RunDecoderSearch(), SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluation(), SingleUtteranceNnet2DecoderThreaded::TerminateDecoding(), and SingleUtteranceNnet2DecoderThreaded::~SingleUtteranceNnet2DecoderThreaded().

| void AcceptWaveform | ( | BaseFloat | samp_freq, |

| const VectorBase< BaseFloat > & | wave_part | ||

| ) |

You call this to provide this class with more waveform to decode.

This call is, for all practical purposes, non-blocking.

Definition at line 160 of file online-nnet2-decoding-threaded.cc.

References VectorBase< Real >::Dim(), SingleUtteranceNnet2DecoderThreaded::input_waveform_, KALDI_ASSERT, KALDI_ERR, ThreadSynchronizer::kProducer, ThreadSynchronizer::Lock(), SingleUtteranceNnet2DecoderThreaded::num_samples_received_, SingleUtteranceNnet2DecoderThreaded::sampling_rate_, ThreadSynchronizer::UnlockSuccess(), and SingleUtteranceNnet2DecoderThreaded::waveform_synchronizer_.

| bool EndpointDetected | ( | const OnlineEndpointConfig & | config | ) |

This function calls EndpointDetected from online-endpoint.h, with the required arguments.

Definition at line 651 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::decoder_, SingleUtteranceNnet2DecoderThreaded::decoder_mutex_, kaldi::EndpointDetected(), SingleUtteranceNnet2DecoderThreaded::feature_pipeline_, OnlineNnet2FeaturePipeline::FrameShiftInSeconds(), and SingleUtteranceNnet2DecoderThreaded::tmodel_.

| bool FeatureComputation | ( | int32 | num_frames_output | ) | private |

Definition at line 412 of file online-nnet2-decoding-threaded.cc.

References OnlineNnet2FeaturePipeline::AcceptWaveform(), SingleUtteranceNnet2DecoderThreaded::config_, SingleUtteranceNnet2DecoderThreaded::feature_pipeline_, OnlineNnet2FeaturePipeline::FrameShiftInSeconds(), SingleUtteranceNnet2DecoderThreaded::input_finished_, SingleUtteranceNnet2DecoderThreaded::input_waveform_, OnlineNnet2FeaturePipeline::InputFinished(), OnlineNnet2FeaturePipeline::IsLastFrame(), KALDI_ASSERT, ThreadSynchronizer::kConsumer, ThreadSynchronizer::Lock(), OnlineNnet2DecodingThreadedConfig::nnet_batch_size, SingleUtteranceNnet2DecoderThreaded::num_frames_decoded_, SingleUtteranceNnet2DecoderThreaded::num_samples_discarded_, OnlineNnet2FeaturePipeline::NumFramesReady(), SingleUtteranceNnet2DecoderThreaded::processed_waveform_, SingleUtteranceNnet2DecoderThreaded::sampling_rate_, ThreadSynchronizer::UnlockFailure(), ThreadSynchronizer::UnlockSuccess(), and SingleUtteranceNnet2DecoderThreaded::waveform_synchronizer_.

Referenced by SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| void FinalizeDecoding | ( | ) |

Finalizes the decoding.

Cleans up and prunes remaining tokens, so the final lattice is faster to obtain. May not be called unless either InputFinished() or TerminateDecoding() has been called. If InputFinished() was called, it calls Wait() to ensure that the decoding has finished (it's not an error if you already called Wait()).
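The ordering rules above (and the matching rules for Wait() and AcceptWaveform()) can be summarized as a small state machine. The sketch below is a hypothetical helper, not part of Kaldi, that only tracks which calls have been made and throws when a documented precondition is violated. The class name CallOrderGuard and the use of std::logic_error are assumptions made for illustration.

```cpp
#include <stdexcept>

// Hypothetical helper (not part of Kaldi) that encodes the call-order rules
// stated in this documentation and throws if a rule is broken.
class CallOrderGuard {
 public:
  // AcceptWaveform() is not allowed once InputFinished() has been called.
  void AcceptWaveform() {
    Require(!input_finished_, "AcceptWaveform() after InputFinished()");
  }
  void InputFinished() { input_finished_ = true; }
  void TerminateDecoding() { terminated_ = true; }

  // Wait() requires InputFinished() or TerminateDecoding() beforehand.
  void Wait() {
    Require(input_finished_ || terminated_,
            "Wait() needs InputFinished() or TerminateDecoding() first");
    waited_ = true;
  }

  // FinalizeDecoding() has the same precondition; if InputFinished() was
  // called, it also waits for decoding to finish, as documented.
  void FinalizeDecoding() {
    Require(input_finished_ || terminated_,
            "FinalizeDecoding() needs InputFinished() or TerminateDecoding()");
    if (input_finished_) Wait();
    finalized_ = true;
  }

  bool waited() const { return waited_; }
  bool finalized() const { return finalized_; }

 private:
  static void Require(bool ok, const char *message) {
    if (!ok) throw std::logic_error(message);
  }
  bool input_finished_ = false;
  bool terminated_ = false;
  bool waited_ = false;
  bool finalized_ = false;
};
```

A typical legal sequence in this model is AcceptWaveform() (repeated), InputFinished(), FinalizeDecoding(), and then the calls that read results.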

Definition at line 228 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::decoder_, LatticeFasterDecoderTpl< FST, Token >::FinalizeDecoding(), KALDI_ERR, and SingleUtteranceNnet2DecoderThreaded::threads_.

| void GetAdaptationState | ( | OnlineIvectorExtractorAdaptationState * | adaptation_state | ) |

Outputs the adaptation state of the feature pipeline to "adaptation_state".

This mostly stores stats for iVector estimation, and will generally be called at the end of an utterance, assuming it's a scenario where each speaker is seen for more than one utterance. You may call this function only after calling either TerminateDecoding() or InputFinished(), followed by Wait(); calling it otherwise is an error.

Definition at line 283 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::feature_pipeline_, SingleUtteranceNnet2DecoderThreaded::feature_pipeline_mutex_, and OnlineNnet2FeaturePipeline::GetAdaptationState().

| void GetBestPath | ( | bool | end_of_utterance, |

| Lattice * | best_path, | ||

| BaseFloat * | final_relative_cost | ||

| ) | const |

Outputs an FST corresponding to the single best path through the current lattice.

If "end_of_utterance" is true AND we reached the final-state of the graph, then it will include those as final-probs; otherwise it will treat all final-probs as one. If no frames have been decoded yet, it will set best_path to a lattice with a single state that is final and with unit weight (no cost). The output to final_relative_cost (if non-NULL) is a number >= 0 that is closer to 0 if a final-state was close to the best-likelihood state active on the last frame, at the time we got the best path.

Definition at line 323 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::decoder_, SingleUtteranceNnet2DecoderThreaded::decoder_mutex_, LatticeFasterDecoderTpl< FST, Token >::FinalRelativeCost(), LatticeFasterOnlineDecoderTpl< FST >::GetBestPath(), LatticeFasterDecoderTpl< FST, Token >::NumFramesDecoded(), and LatticeWeightTpl< BaseFloat >::One().

| void GetCmvnState | ( | OnlineCmvnState * | cmvn_state | ) |

Outputs the OnlineCmvnState of the feature pipeline to "cmvn_state".

This stores CMVN stats for the non-iVector features, and will be called at the end of an utterance, assuming it's a scenario where each speaker is seen for more than one utterance. You may call this function only after calling either TerminateDecoding() or InputFinished(), followed by Wait(); calling it otherwise is an error.

Definition at line 290 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::feature_pipeline_, SingleUtteranceNnet2DecoderThreaded::feature_pipeline_mutex_, and OnlineNnet2FeaturePipeline::GetCmvnState().

| void GetLattice | ( | bool | end_of_utterance, |

| CompactLattice * | clat, | ||

| BaseFloat * | final_relative_cost | ||

| ) | const |

Gets the lattice.

The output lattice retains any acoustic scaling (which will typically be desirable in an online-decoding context); if you want an un-scaled lattice, scale it using ScaleLattice() with the inverse of the acoustic weight. Set "end_of_utterance" to true if you want the final-probs to be included. If this is at the end of the utterance, you might want to call FinalizeDecoding() first; this will make this call return faster. If no frames have been decoded yet, it will set clat to a lattice with a single state that is final and with unit weight (no cost or alignment). The output to final_relative_cost (if non-NULL) is a number >= 0 that is closer to 0 if a final-state was close to the best-likelihood state active on the last frame, at the time we obtained the lattice.

Definition at line 297 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::config_, SingleUtteranceNnet2DecoderThreaded::decoder_, SingleUtteranceNnet2DecoderThreaded::decoder_mutex_, OnlineNnet2DecodingThreadedConfig::decoder_opts, LatticeFasterDecoderConfig::det_opts, LatticeFasterDecoderConfig::determinize_lattice, fst::DeterminizeLatticePhonePrunedWrapper(), LatticeFasterDecoderTpl< FST, Token >::FinalRelativeCost(), LatticeFasterDecoderTpl< FST, Token >::GetRawLattice(), KALDI_ERR, LatticeFasterDecoderConfig::lattice_beam, LatticeFasterDecoderTpl< FST, Token >::NumFramesDecoded(), CompactLatticeWeightTpl< WeightType, IntType >::One(), and SingleUtteranceNnet2DecoderThreaded::tmodel_.

| BaseFloat GetRemainingWaveform | ( | Vector< BaseFloat > * | waveform_out | ) | const |

Gets the remaining, un-decoded part of the waveform and returns the sample rate.

May only be called after Wait(), and it only makes sense to call this if you called TerminateDecoding() before Wait(). The idea is that you can then provide this un-decoded piece of waveform to another decoder.
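To make the idea of an "un-decoded remainder" concrete, here is a rough sketch of how such a tail could be cut from a buffered waveform. It assumes that each decoded frame accounts for frame_shift_seconds * sampling_rate samples and ignores windowing and context effects, which the real function may handle differently. RemainingWaveform is a hypothetical free function using std::vector, not the Kaldi member.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns the part of `waveform` that lies beyond the frames already decoded.
// Assumption: frame t starts at sample t * frame_shift_seconds * sampling_rate.
inline std::vector<float> RemainingWaveform(const std::vector<float> &waveform,
                                            std::int32_t num_frames_decoded,
                                            double sampling_rate,
                                            double frame_shift_seconds) {
  // Number of samples assumed to be covered by the decoded frames.
  std::int64_t start = std::llround(num_frames_decoded * frame_shift_seconds *
                                    sampling_rate);
  const std::int64_t size = static_cast<std::int64_t>(waveform.size());
  // Clamp so that the result is empty rather than out of range.
  if (start < 0) start = 0;
  if (start > size) start = size;
  return std::vector<float>(waveform.begin() + start, waveform.end());
}
```

The returned samples, together with the sample rate, are what a caller would pass on to a second decoder in the scenario described above.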

Definition at line 235 of file online-nnet2-decoding-threaded.cc.

References VectorBase< Real >::Dim(), SingleUtteranceNnet2DecoderThreaded::feature_pipeline_, OnlineNnet2FeaturePipeline::FrameShiftInSeconds(), rnnlm::i, SingleUtteranceNnet2DecoderThreaded::input_waveform_, KALDI_ASSERT, KALDI_ERR, kaldi::kUndefined, SingleUtteranceNnet2DecoderThreaded::num_frames_decoded_, SingleUtteranceNnet2DecoderThreaded::num_samples_discarded_, SingleUtteranceNnet2DecoderThreaded::processed_waveform_, VectorBase< Real >::Range(), Vector< Real >::Resize(), SingleUtteranceNnet2DecoderThreaded::sampling_rate_, and SingleUtteranceNnet2DecoderThreaded::threads_.

| void InputFinished | ( | ) |

You call this to inform the class that no more waveform will be provided; this allows it to flush out the last few frames of features, and is necessary if you want to call Wait() to wait until all decoding is done.

After calling InputFinished() you cannot call AcceptWaveform() any more.

Definition at line 203 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::input_finished_, KALDI_ASSERT, KALDI_ERR, ThreadSynchronizer::kProducer, ThreadSynchronizer::Lock(), ThreadSynchronizer::UnlockSuccess(), and SingleUtteranceNnet2DecoderThreaded::waveform_synchronizer_.

| int32 NumFramesDecoded | ( | ) | const |

Returns the number of frames currently decoded.

Caution: don't rely on the lattice having exactly this number of frames if you get it after this call, as the count may increase in the meantime, unless you've already called either TerminateDecoding() or InputFinished(), followed by Wait().

Definition at line 352 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::decoder_, SingleUtteranceNnet2DecoderThreaded::decoder_mutex_, and LatticeFasterDecoderTpl< FST, Token >::NumFramesDecoded().

| int32 NumFramesReceivedApprox | ( | ) | const |

Returns *approximately* (ignoring end effects), the number of frames of data that we expect given the amount of data that the pipeline has received via AcceptWaveform().

This might be useful in application code to compare with NumFramesDecoded() and gauge how much latency there is.
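As a worked illustration of the approximation and of the latency comparison mentioned above, the sketch below divides the number of received samples by the number of samples per frame shift. The exact formula used by the class is not shown in this page, so treat this as an assumption consistent with the members it references (num_samples_received_, sampling_rate_ and FrameShiftInSeconds()). The function names are hypothetical.

```cpp
#include <cstdint>

// Approximate number of feature frames implied by a sample count, ignoring
// end effects. frame_shift_seconds is e.g. 0.01 for a 10 ms frame shift.
inline std::int32_t ApproxNumFrames(std::int64_t num_samples,
                                    double sampling_rate,
                                    double frame_shift_seconds) {
  return static_cast<std::int32_t>(
      num_samples / (sampling_rate * frame_shift_seconds));
}

// Rough decoding lag in seconds: frames received but not yet decoded,
// converted back to time.
inline double ApproxLagSeconds(std::int32_t frames_received,
                               std::int32_t frames_decoded,
                               double frame_shift_seconds) {
  return (frames_received - frames_decoded) * frame_shift_seconds;
}
```

For example, at 16 kHz with a 10 ms shift there are 160 samples per frame, so 32080 received samples correspond to about 200 frames; if 150 frames have been decoded, the lag is roughly half a second.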

Definition at line 198 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::feature_pipeline_, OnlineNnet2FeaturePipeline::FrameShiftInSeconds(), SingleUtteranceNnet2DecoderThreaded::num_samples_received_, and SingleUtteranceNnet2DecoderThreaded::sampling_rate_.

| int32 NumWaveformPiecesPending | ( | ) |

Returns the number of pieces of waveform that are still waiting to be processed.

This may be useful for calling code to judge whether to supply more waveform or to wait.
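One way calling code might use this count is as simple backpressure: hold back new audio while too many pieces are queued. The sketch below is written as a template so that it depends only on two method names. FakeDecoder is a hypothetical stand-in with a simplified AcceptWaveform() signature (std::vector instead of VectorBase), and the polling interval and max_pending threshold are arbitrary assumptions.

```cpp
#include <chrono>
#include <thread>
#include <vector>

// Hypothetical stand-in exposing the two calls used below. It pretends that
// one queued piece gets processed every time the queue length is polled.
struct FakeDecoder {
  int pending = 0;
  int accepted = 0;
  void AcceptWaveform(float /*samp_freq*/,
                      const std::vector<float> & /*wave_part*/) {
    ++pending;
    ++accepted;
  }
  int NumWaveformPiecesPending() {
    int result = pending;
    if (pending > 0) --pending;
    return result;
  }
};

// Supplies `pieces` one at a time, pausing while `max_pending` or more pieces
// are still queued. Returns how many times it had to pause.
template <typename Decoder>
int FeedWithBackpressure(Decoder *decoder, float samp_freq,
                         const std::vector<std::vector<float> > &pieces,
                         int max_pending) {
  int num_waits = 0;
  for (const auto &piece : pieces) {
    while (decoder->NumWaveformPiecesPending() >= max_pending) {
      ++num_waits;
      std::this_thread::sleep_for(std::chrono::milliseconds(1));
    }
    decoder->AcceptWaveform(samp_freq, piece);
  }
  return num_waits;
}
```

With a real decoder the queue is drained by a background thread, so the sleep simply gives that thread time to catch up.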

Definition at line 182 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::input_waveform_, KALDI_ERR, ThreadSynchronizer::kProducer, ThreadSynchronizer::Lock(), ThreadSynchronizer::UnlockSuccess(), and SingleUtteranceNnet2DecoderThreaded::waveform_synchronizer_.

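| void ProcessLoglikes | ( | const CuVector< BaseFloat > & | log_inv_prior, |

| CuMatrixBase< BaseFloat > * | loglikes | ||

| ) | private |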

Definition at line 395 of file online-nnet2-decoding-threaded.cc.

References OnlineNnet2DecodingThreadedConfig::acoustic_scale, CuMatrixBase< Real >::AddVecToRows(), CuMatrixBase< Real >::ApplyFloor(), CuMatrixBase< Real >::ApplyLog(), SingleUtteranceNnet2DecoderThreaded::config_, CuMatrixBase< Real >::NumRows(), and CuMatrixBase< Real >::Scale().

Referenced by SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| static void RunDecoderSearch | ( | SingleUtteranceNnet2DecoderThreaded * | me | ) | static private |

Definition at line 371 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::abort_, SingleUtteranceNnet2DecoderThreaded::AbortAllThreads(), KALDI_ERR, KALDI_WARN, and SingleUtteranceNnet2DecoderThreaded::RunDecoderSearchInternal().

Referenced by SingleUtteranceNnet2DecoderThreaded::SingleUtteranceNnet2DecoderThreaded().

| bool RunDecoderSearchInternal | ( | ) | private |

Definition at line 615 of file online-nnet2-decoding-threaded.cc.

References OnlineSilenceWeighting::Active(), LatticeFasterDecoderTpl< FST, Token >::AdvanceDecoding(), OnlineSilenceWeighting::ComputeCurrentTraceback(), SingleUtteranceNnet2DecoderThreaded::config_, SingleUtteranceNnet2DecoderThreaded::decodable_, SingleUtteranceNnet2DecoderThreaded::decodable_synchronizer_, OnlineNnet2DecodingThreadedConfig::decode_batch_size, SingleUtteranceNnet2DecoderThreaded::decoder_, SingleUtteranceNnet2DecoderThreaded::decoder_mutex_, DecodableMatrixMappedOffset::IsLastFrame(), KALDI_ASSERT, ThreadSynchronizer::kConsumer, ThreadSynchronizer::Lock(), SingleUtteranceNnet2DecoderThreaded::num_frames_decoded_, LatticeFasterDecoderTpl< FST, Token >::NumFramesDecoded(), DecodableMatrixMappedOffset::NumFramesReady(), SingleUtteranceNnet2DecoderThreaded::silence_weighting_, SingleUtteranceNnet2DecoderThreaded::silence_weighting_mutex_, ThreadSynchronizer::UnlockFailure(), and ThreadSynchronizer::UnlockSuccess().

Referenced by SingleUtteranceNnet2DecoderThreaded::RunDecoderSearch().

| static void RunNnetEvaluation | ( | SingleUtteranceNnet2DecoderThreaded * | me | ) | static private |

Definition at line 357 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::abort_, SingleUtteranceNnet2DecoderThreaded::AbortAllThreads(), KALDI_ERR, KALDI_WARN, and SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

Referenced by SingleUtteranceNnet2DecoderThreaded::SingleUtteranceNnet2DecoderThreaded().

| bool RunNnetEvaluationInternal | ( | ) | private |

Definition at line 470 of file online-nnet2-decoding-threaded.cc.

References DecodableMatrixMappedOffset::AcceptLoglikes(), OnlineSilenceWeighting::Active(), SingleUtteranceNnet2DecoderThreaded::am_nnet_, CuVectorBase< Real >::ApplyFloor(), SingleUtteranceNnet2DecoderThreaded::config_, SingleUtteranceNnet2DecoderThreaded::decodable_, SingleUtteranceNnet2DecoderThreaded::decodable_synchronizer_, OnlineNnet2FeaturePipeline::Dim(), SingleUtteranceNnet2DecoderThreaded::feature_pipeline_, SingleUtteranceNnet2DecoderThreaded::feature_pipeline_mutex_, SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), DecodableMatrixMappedOffset::FirstAvailableFrame(), OnlineSilenceWeighting::GetDeltaWeights(), OnlineNnet2FeaturePipeline::GetFrame(), AmNnet::GetNnet(), rnnlm::i, DecodableMatrixMappedOffset::InputIsFinished(), OnlineNnet2FeaturePipeline::IsLastFrame(), OnlineNnet2FeaturePipeline::IvectorFeature(), KALDI_ASSERT, ThreadSynchronizer::kProducer, ThreadSynchronizer::Lock(), OnlineNnet2DecodingThreadedConfig::max_loglikes_copy, OnlineNnet2DecodingThreadedConfig::nnet_batch_size, SingleUtteranceNnet2DecoderThreaded::num_frames_decoded_, OnlineNnet2FeaturePipeline::NumFramesReady(), OnlineIvectorFeature::NumFramesReady(), MatrixBase< Real >::NumRows(), CuMatrixBase< Real >::NumRows(), AmNnet::Priors(), SingleUtteranceNnet2DecoderThreaded::ProcessLoglikes(), Matrix< Real >::Resize(), SingleUtteranceNnet2DecoderThreaded::silence_weighting_, SingleUtteranceNnet2DecoderThreaded::silence_weighting_mutex_, Matrix< Real >::Swap(), CuMatrix< Real >::Swap(), ThreadSynchronizer::UnlockFailure(), ThreadSynchronizer::UnlockSuccess(), and OnlineIvectorFeature::UpdateFrameWeights().

Referenced by SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluation().

| void TerminateDecoding | ( | ) |

You can call this if you don't want the decoding to proceed further with this utterance.

It just won't do any more processing, but you can still use the lattice from the decoding that it's already done. Note: it may still continue decoding up to decode_batch_size (default: 2) frames of data before the decoding thread exits. You can call Wait() after calling this, if you want to wait for that.

Definition at line 215 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::AbortAllThreads().

| void Wait | ( | ) |

This call will block until all the data has been decoded; it must only be called after either InputFinished() or TerminateDecoding() has been called; calling it otherwise is an error.

Definition at line 220 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::abort_, SingleUtteranceNnet2DecoderThreaded::input_finished_, KALDI_ERR, and SingleUtteranceNnet2DecoderThreaded::WaitForAllThreads().

| void WaitForAllThreads | ( | ) | private |

Definition at line 385 of file online-nnet2-decoding-threaded.cc.

References SingleUtteranceNnet2DecoderThreaded::error_, rnnlm::i, KALDI_ERR, and SingleUtteranceNnet2DecoderThreaded::threads_.

Referenced by SingleUtteranceNnet2DecoderThreaded::Wait(), and SingleUtteranceNnet2DecoderThreaded::~SingleUtteranceNnet2DecoderThreaded().

| bool abort_ | private |

Definition at line 427 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::AbortAllThreads(), SingleUtteranceNnet2DecoderThreaded::RunDecoderSearch(), SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluation(), SingleUtteranceNnet2DecoderThreaded::Wait(), and SingleUtteranceNnet2DecoderThreaded::~SingleUtteranceNnet2DecoderThreaded().

| const nnet2::AmNnet & am_nnet_ | private |

Definition at line 353 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| OnlineNnet2DecodingThreadedConfig config_ | private |

Definition at line 351 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), SingleUtteranceNnet2DecoderThreaded::GetLattice(), SingleUtteranceNnet2DecoderThreaded::ProcessLoglikes(), SingleUtteranceNnet2DecoderThreaded::RunDecoderSearchInternal(), and SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| DecodableMatrixMappedOffset decodable_ | private |

Definition at line 407 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::RunDecoderSearchInternal(), and SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| ThreadSynchronizer decodable_synchronizer_ | private |

| LatticeFasterOnlineDecoder decoder_ | private |

Definition at line 412 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::EndpointDetected(), SingleUtteranceNnet2DecoderThreaded::FinalizeDecoding(), SingleUtteranceNnet2DecoderThreaded::GetBestPath(), SingleUtteranceNnet2DecoderThreaded::GetLattice(), SingleUtteranceNnet2DecoderThreaded::NumFramesDecoded(), SingleUtteranceNnet2DecoderThreaded::RunDecoderSearchInternal(), and SingleUtteranceNnet2DecoderThreaded::SingleUtteranceNnet2DecoderThreaded().

| std::mutex decoder_mutex_ | mutable private |

Definition at line 417 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::EndpointDetected(), SingleUtteranceNnet2DecoderThreaded::GetBestPath(), SingleUtteranceNnet2DecoderThreaded::GetLattice(), SingleUtteranceNnet2DecoderThreaded::NumFramesDecoded(), and SingleUtteranceNnet2DecoderThreaded::RunDecoderSearchInternal().

| bool error_ | private |

Definition at line 432 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::AbortAllThreads(), and SingleUtteranceNnet2DecoderThreaded::WaitForAllThreads().

| OnlineNnet2FeaturePipeline feature_pipeline_ | private |

Definition at line 380 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::EndpointDetected(), SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), SingleUtteranceNnet2DecoderThreaded::GetAdaptationState(), SingleUtteranceNnet2DecoderThreaded::GetCmvnState(), SingleUtteranceNnet2DecoderThreaded::GetRemainingWaveform(), SingleUtteranceNnet2DecoderThreaded::NumFramesReceivedApprox(), SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal(), and SingleUtteranceNnet2DecoderThreaded::SingleUtteranceNnet2DecoderThreaded().

| std::mutex feature_pipeline_mutex_ | private |

Definition at line 381 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::GetAdaptationState(), SingleUtteranceNnet2DecoderThreaded::GetCmvnState(), and SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| bool input_finished_ | private |

Definition at line 370 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), SingleUtteranceNnet2DecoderThreaded::InputFinished(), and SingleUtteranceNnet2DecoderThreaded::Wait().

| std::deque< Vector< BaseFloat > *> input_waveform_ | private |

Definition at line 371 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::AcceptWaveform(), SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), SingleUtteranceNnet2DecoderThreaded::GetRemainingWaveform(), SingleUtteranceNnet2DecoderThreaded::NumWaveformPiecesPending(), and SingleUtteranceNnet2DecoderThreaded::~SingleUtteranceNnet2DecoderThreaded().

| int32 num_frames_decoded_ | private |

Definition at line 408 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), SingleUtteranceNnet2DecoderThreaded::GetRemainingWaveform(), SingleUtteranceNnet2DecoderThreaded::RunDecoderSearchInternal(), and SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| int64 num_samples_discarded_ | private |

Definition at line 390 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), and SingleUtteranceNnet2DecoderThreaded::GetRemainingWaveform().

| int64 num_samples_received_ | private |

Definition at line 362 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::AcceptWaveform(), and SingleUtteranceNnet2DecoderThreaded::NumFramesReceivedApprox().

| BaseFloat sampling_rate_ | private |

Definition at line 359 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::AcceptWaveform(), SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), SingleUtteranceNnet2DecoderThreaded::GetRemainingWaveform(), and SingleUtteranceNnet2DecoderThreaded::NumFramesReceivedApprox().

| OnlineSilenceWeighting silence_weighting_ | private |

Definition at line 394 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::RunDecoderSearchInternal(), and SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| std::mutex silence_weighting_mutex_ | private |

Definition at line 395 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::RunDecoderSearchInternal(), and SingleUtteranceNnet2DecoderThreaded::RunNnetEvaluationInternal().

| std::thread threads_[2] | private |

Definition at line 423 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::FinalizeDecoding(), SingleUtteranceNnet2DecoderThreaded::GetRemainingWaveform(), SingleUtteranceNnet2DecoderThreaded::SingleUtteranceNnet2DecoderThreaded(), and SingleUtteranceNnet2DecoderThreaded::WaitForAllThreads().

| const TransitionModel & tmodel_ | private |

Definition at line 355 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::EndpointDetected(), and SingleUtteranceNnet2DecoderThreaded::GetLattice().

| ThreadSynchronizer waveform_synchronizer_ | private |

Definition at line 374 of file online-nnet2-decoding-threaded.h.

Referenced by SingleUtteranceNnet2DecoderThreaded::AbortAllThreads(), SingleUtteranceNnet2DecoderThreaded::AcceptWaveform(), SingleUtteranceNnet2DecoderThreaded::FeatureComputation(), SingleUtteranceNnet2DecoderThreaded::InputFinished(), and SingleUtteranceNnet2DecoderThreaded::NumWaveformPiecesPending().