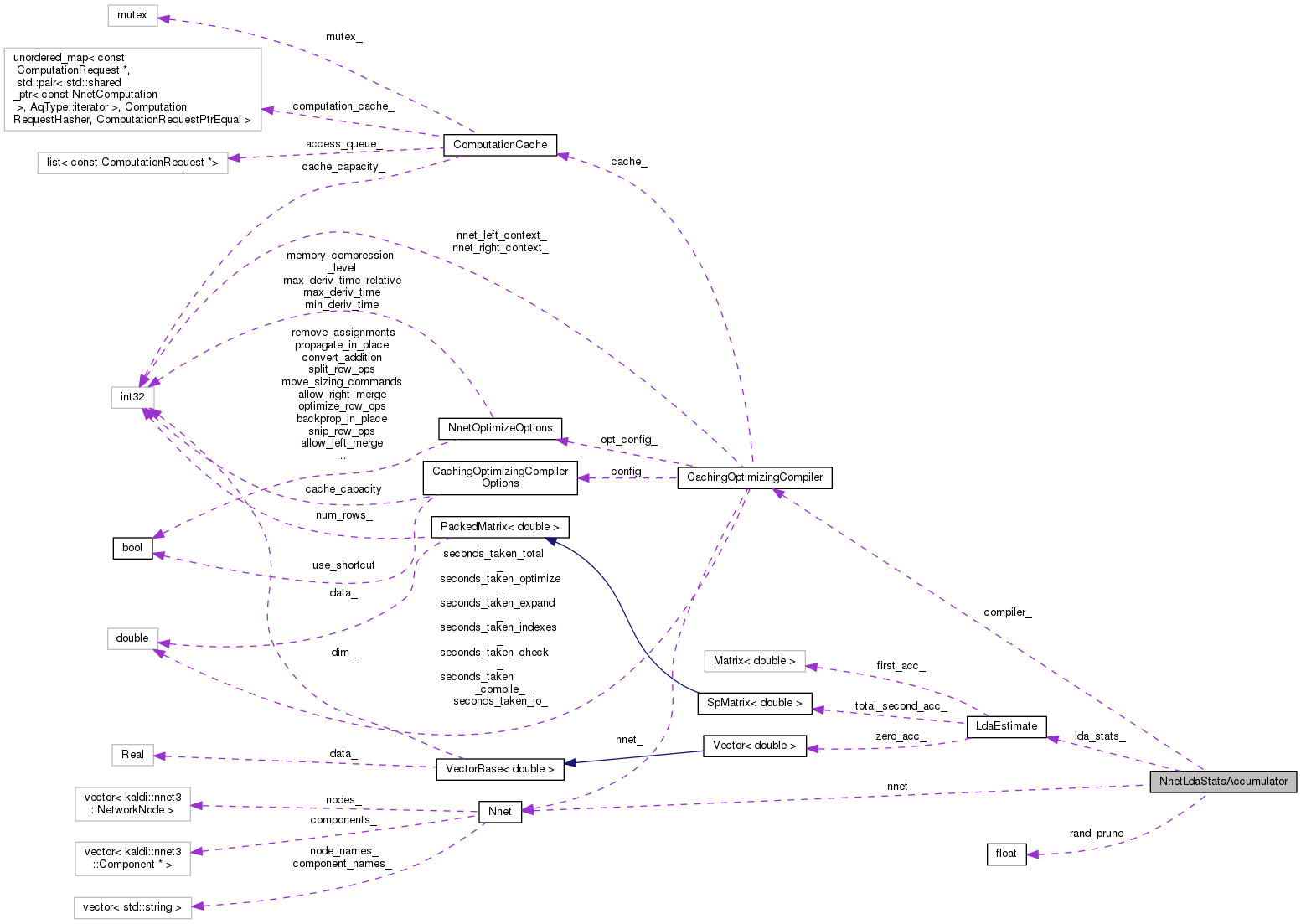

| Public Member Functions | |
|---|---|
| | `NnetLdaStatsAccumulator (BaseFloat rand_prune, const Nnet &nnet)` |
| `void` | `AccStats (const NnetExample &eg)` |
| `void` | `WriteStats (const std::string &stats_wxfilename, bool binary)` |

| Private Member Functions | |
|---|---|
| `void` | `AccStatsFromOutput (const NnetExample &eg, const CuMatrixBase< BaseFloat > &nnet_output)` |

| Private Attributes | |
|---|---|
| `BaseFloat` | `rand_prune_` |
| `const Nnet &` | `nnet_` |
| `CachingOptimizingCompiler` | `compiler_` |
| `LdaEstimate` | `lda_stats_` |

Definition at line 32 of file nnet3-acc-lda-stats.cc.

`NnetLdaStatsAccumulator (BaseFloat rand_prune, const Nnet &nnet)` [inline]

Definition at line 34 of file nnet3-acc-lda-stats.cc.

`void AccStats (const NnetExample &eg)` [inline]

Definition at line 38 of file nnet3-acc-lda-stats.cc.

References NnetComputer::AcceptInputs(), NnetLdaStatsAccumulator::AccStatsFromOutput(), CachingOptimizingCompiler::Compile(), NnetLdaStatsAccumulator::compiler_, NnetComputeOptions::debug, kaldi::nnet3::GetComputationRequest(), kaldi::GetVerboseLevel(), NnetExample::io, and NnetLdaStatsAccumulator::nnet_.

Referenced by main().

`void AccStatsFromOutput (const NnetExample &eg, const CuMatrixBase< BaseFloat > &nnet_output)` [inline, private]

Definition at line 65 of file nnet3-acc-lda-stats.cc.

References LdaEstimate::Accumulate(), SparseVector< Real >::Data(), VectorBase< Real >::Dim(), LdaEstimate::Dim(), NnetIo::features, GeneralMatrix::GetMatrix(), GeneralMatrix::GetSparseMatrix(), rnnlm::i, LdaEstimate::Init(), NnetExample::io, KALDI_ASSERT, kaldi::kSparseMatrix, NnetLdaStatsAccumulator::lda_stats_, CuMatrixBase< Real >::NumCols(), GeneralMatrix::NumCols(), SparseVector< Real >::NumElements(), CuMatrixBase< Real >::NumRows(), GeneralMatrix::NumRows(), NnetLdaStatsAccumulator::rand_prune_, kaldi::RandPrune(), SparseMatrix< Real >::Row(), and GeneralMatrix::Type().

Referenced by NnetLdaStatsAccumulator::AccStats().
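`AccStatsFromOutput()` is where the LDA statistics are gathered. Its references (`LdaEstimate::Init()`, `LdaEstimate::Dim()`, `LdaEstimate::Accumulate()`, `SparseVector::Data()`, `GeneralMatrix::Type()`, `kSparseMatrix`) suggest that it pairs each row of network output with that frame's supervision posteriors, which may be stored sparse or dense, and initializes the estimator on first use. The standalone sketch below illustrates that idea under stated assumptions. `LdaStatsSketch`, `SparsePosterior`, `DenseToSparse` and `AccumulateFrames` are hypothetical names, not Kaldi APIs. The three kinds of statistics (per-class counts, per-class sums, total scatter) are the usual inputs from which within- and between-class scatter are derived; they do not describe `LdaEstimate`'s internal layout. Random pruning is omitted here.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in for an LDA statistics accumulator (NOT Kaldi's
// LdaEstimate): zeroth-, first- and second-order statistics.
struct LdaStatsSketch {
  std::vector<double> class_count;                 // total weight per class
  std::vector<std::vector<double>> class_sum;      // [class][dim]
  std::vector<std::vector<double>> total_scatter;  // [dim][dim], sum of w*x*x^T

  std::size_t Dim() const { return total_scatter.size(); }

  void Init(std::size_t num_classes, std::size_t dim) {
    class_count.assign(num_classes, 0.0);
    class_sum.assign(num_classes, std::vector<double>(dim, 0.0));
    total_scatter.assign(dim, std::vector<double>(dim, 0.0));
  }

  // Adds feature vector x to class c with weight w (O(dim^2) per call).
  void Accumulate(const std::vector<double>& x, std::size_t c, double w) {
    assert(x.size() == Dim() && c < class_count.size());
    class_count[c] += w;
    for (std::size_t i = 0; i < x.size(); ++i) {
      class_sum[c][i] += w * x[i];
      for (std::size_t j = 0; j < x.size(); ++j)
        total_scatter[i][j] += w * x[i] * x[j];
    }
  }

  double TotCount() const {
    double total = 0.0;
    for (double n : class_count) total += n;
    return total;
  }
};

// Hypothetical: supervision for one frame as (class index, posterior) pairs.
using SparsePosterior = std::vector<std::pair<std::size_t, double>>;

// Converts a dense posterior row to sparse form, dropping zero entries,
// so dense supervision can reuse the sparse code path.
SparsePosterior DenseToSparse(const std::vector<double>& dense) {
  SparsePosterior sparse;
  for (std::size_t c = 0; c < dense.size(); ++c)
    if (dense[c] != 0.0) sparse.emplace_back(c, dense[c]);
  return sparse;
}

// Adds each output row to every class on which that frame has nonzero
// posterior weight. The stats are initialized on first use, sized from data.
void AccumulateFrames(const std::vector<std::vector<double>>& output,
                      const std::vector<SparsePosterior>& supervision,
                      std::size_t num_classes, LdaStatsSketch* stats) {
  assert(output.size() == supervision.size());
  if (output.empty()) return;
  if (stats->Dim() == 0) stats->Init(num_classes, output[0].size());
  for (std::size_t r = 0; r < output.size(); ++r)
    for (const auto& entry : supervision[r])
      if (entry.second != 0.0)
        stats->Accumulate(output[r], entry.first, entry.second);
}
```

Because the scatter update costs O(dim²) for every (frame, class) pair, frames with many small posteriors are expensive. This is presumably why the original prunes small weights with `kaldi::RandPrune()` before accumulating.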

`void WriteStats (const std::string &stats_wxfilename, bool binary)` [inline]

Definition at line 54 of file nnet3-acc-lda-stats.cc.

References KALDI_ERR, KALDI_LOG, NnetLdaStatsAccumulator::lda_stats_, LdaEstimate::TotCount(), and kaldi::WriteKaldiObject().

Referenced by main().

`CachingOptimizingCompiler compiler_` [private]

Definition at line 127 of file nnet3-acc-lda-stats.cc.

Referenced by NnetLdaStatsAccumulator::AccStats().

`LdaEstimate lda_stats_` [private]

Definition at line 128 of file nnet3-acc-lda-stats.cc.

Referenced by NnetLdaStatsAccumulator::AccStatsFromOutput(), and NnetLdaStatsAccumulator::WriteStats().

`const Nnet & nnet_` [private]

Definition at line 126 of file nnet3-acc-lda-stats.cc.

Referenced by NnetLdaStatsAccumulator::AccStats().

`BaseFloat rand_prune_` [private]

Definition at line 125 of file nnet3-acc-lda-stats.cc.

Referenced by NnetLdaStatsAccumulator::AccStatsFromOutput().
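According to the reference list of `AccStatsFromOutput()`, the `rand_prune_` threshold is passed to `kaldi::RandPrune()`. As we understand that function, a posterior weight whose magnitude is at least the threshold passes through unchanged. A smaller nonzero weight becomes ±threshold with probability |w|/threshold and zero otherwise. The expected value of each weight is therefore preserved, while most tiny weights drop out. The sketch below illustrates that scheme. `RandPruneSketch` is a hypothetical name, and `std::mt19937` is our choice of random source, not Kaldi's.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Sketch of expectation-preserving random pruning, modeled on our reading of
// kaldi::RandPrune (not Kaldi code). For 0 < |w| < thresh the result is
// sign(w) * thresh with probability |w| / thresh and 0 otherwise, so
// E[result] = w. Zeros and weights with |w| >= thresh are returned unchanged.
double RandPruneSketch(double w, double thresh, std::mt19937& rng) {
  assert(thresh >= 0.0);
  if (w == 0.0 || std::fabs(w) >= thresh) return w;
  std::uniform_real_distribution<double> unif(0.0, 1.0);
  const double sign = (w > 0.0) ? 1.0 : -1.0;
  return (unif(rng) < std::fabs(w) / thresh) ? sign * thresh : 0.0;
}
```

With `thresh = 0`, every weight passes through unchanged, so this sketch disables pruning at that setting. A zero result lets the caller skip the (frame, class) pair entirely, which avoids a full O(dim²) statistics update.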